Pairwise independence

In probability theory, a pairwise independent collection of random variables is a set of random variables any two of which are independent.[1] Any collection of mutually independent random variables is pairwise independent, but some pairwise independent collections are not mutually independent. Pairwise independent random variables with finite variance are uncorrelated.

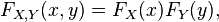

A pair of random variables X and Y are independent if and only if the random vector (X, Y) with joint cumulative distribution function (CDF)  satisfies

satisfies

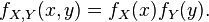

or equivalently, their joint density  satisfies

satisfies

That is, the joint distribution is equal to the product of the marginal distributions.[2]

Unless it is not clear in context, in practice the modifier "mutual" is usually dropped so that independence means mutual independence. A statement such as " X, Y, Z are independent random variables" means that X, Y, Z are mutually independent.

Example

Pairwise independence does not imply mutual independence, as shown by the following example attributed to S. Bernstein.[3]

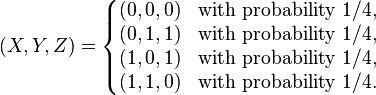

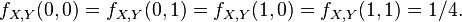

Suppose X and Y are two independent tosses of a fair coin, where we designate 1 for heads and 0 for tails. Let the third random variable Z be equal to 1 if exactly one of those coin tosses resulted in "heads", and 0 otherwise. Then jointly the triple (X, Y, Z) has the following probability distribution:

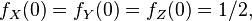

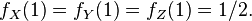

Here the marginal probability distributions are identical:  and

and

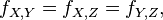

The bivariate distributions also agree:

The bivariate distributions also agree:  where

where

Since each of the pairwise joint distributions equals the product of their respective marginal distributions, the variables are pairwise independent:

- X and Y are independent, and

- X and Z are independent, and

- Y and Z are independent.

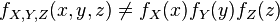

However, X, Y, and Z are not mutually independent, since  . Note that any of

. Note that any of  is completely determined by the other two (any of X, Y, Z is the sum (modulo 2) of the others). That is as far from independence as random variables can get.

is completely determined by the other two (any of X, Y, Z is the sum (modulo 2) of the others). That is as far from independence as random variables can get.

Generalization

More generally, we can talk about k-wise independence, for any k ≥ 2. The idea is similar: a set of random variables is k-wise independent if every subset of size k of those variables is independent. k-wise independence has been used in theoretical computer science, where it was used to prove a theorem about the problem MAXEkSAT.

See also

- Pairwise

- Pairwise disjoint

References

- ↑ Gut, A. (2005) Probability: a Graduate Course, Springer-Verlag. ISBN 0-387-27332-8. pp. 71–72.

- ↑ Hogg, R. V., McKean, J. W., Craig, A. T. (2005). Introduction to Mathematical Statistics (6 ed.). Upper Saddle River, NJ: Pearson Prentice Hall. ISBN 0-13-008507-3. Definition 2.5.1, page 109.

- ↑ Hogg, R. V., McKean, J. W., Craig, A. T. (2005). Introduction to Mathematical Statistics (6 ed.). Upper Saddle River, NJ: Pearson Prentice Hall. ISBN 0-13-008507-3. Remark 2.6.1, p. 120.