Markov blanket

In machine learning, the Markov blanket for a node $A$ in a Bayesian network is the set of nodes $\partial A$ composed of $A$'s parents, its children, and its children's other parents. In a Markov network, the Markov blanket of a node is its set of neighboring nodes. A Markov blanket may also be denoted by $\operatorname{MB}(A)$.

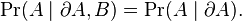
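The definition can be turned into a short graph routine. The following is a minimal sketch, not a reference implementation: the function names, the `parents` and `neighbors` dictionary representations, and the example graphs are all assumptions made for illustration.

```python
from typing import Dict, Set


def markov_blanket_dag(parents: Dict[str, Set[str]], node: str) -> Set[str]:
    """Markov blanket of `node` in a DAG given as a node -> parents mapping.

    Returns the node's parents, its children, and its children's other parents.
    """
    # Children are all nodes that list `node` among their parents.
    children = {n for n, ps in parents.items() if node in ps}
    # Collect every parent of every child (this includes `node` itself).
    co_parents: Set[str] = set()
    for child in children:
        co_parents |= parents[child]
    blanket = set(parents[node]) | children | co_parents
    blanket.discard(node)  # a node is not part of its own blanket
    return blanket


def markov_blanket_undirected(neighbors: Dict[str, Set[str]], node: str) -> Set[str]:
    """Markov blanket of `node` in a Markov network: its adjacent nodes."""
    return set(neighbors[node])


# Hypothetical Bayesian network: U -> A -> C <- S <- V, and C -> D.
dag_parents = {
    "U": set(),
    "V": set(),
    "A": {"U"},
    "S": {"V"},
    "C": {"A", "S"},
    "D": {"C"},
}
dag_blanket = markov_blanket_dag(dag_parents, "A")

# Hypothetical Markov network: B - A - C - D.
graph_neighbors = {
    "A": {"B", "C"},
    "B": {"A"},
    "C": {"A", "D"},
    "D": {"C"},
}
graph_blanket = markov_blanket_undirected(graph_neighbors, "A")
```

In the directed example the blanket of `A` consists of its parent `U`, its child `C`, and the co-parent `S`; the grandparent-like node `V` and the grandchild `D` are left out.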

Every set of nodes in the network is conditionally independent of $A$ when conditioned on the set $\partial A$, that is, when conditioned on the Markov blanket of the node $A$. The probability has the Markov property; formally, for distinct nodes $A$ and $B$:

$$\Pr(A \mid \partial A, B) = \Pr(A \mid \partial A).$$
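For a Bayesian network, one way to see why this property holds is to start from the factorization of the joint distribution into one conditional per node. The notation in the sketch below is introduced here for illustration only: $\mathrm{pa}(X)$ for the parents of $X$, $\mathrm{ch}(A)$ for the children of $A$, and $X_{-A}$ for all variables other than $A$.

```latex
\begin{aligned}
\Pr(A \mid X_{-A})
  &= \frac{\prod_{X} \Pr\bigl(X \mid \mathrm{pa}(X)\bigr)}
          {\sum_{A} \prod_{X} \Pr\bigl(X \mid \mathrm{pa}(X)\bigr)} \\
  &\propto \Pr\bigl(A \mid \mathrm{pa}(A)\bigr)
     \prod_{C \in \mathrm{ch}(A)} \Pr\bigl(C \mid \mathrm{pa}(C)\bigr)
\end{aligned}
```

Every factor that does not mention $A$ appears in both the numerator and the denominator and cancels. The factors that remain involve only $A$, its parents, its children, and the other parents of those children, which is exactly the Markov blanket.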

The Markov blanket of a node contains all the variables that shield the node from the rest of the network. This means that the Markov blanket of a node is the only knowledge needed to predict the behavior of that node. The term was coined by Pearl in 1988.[1]
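The shielding claim can be checked numerically on a small example. The sketch below assumes a hypothetical five-node binary network (U -> A -> C <- S, C -> D) with made-up conditional probabilities; it compares the distribution of `A` given its blanket {U, S, C} with the distribution obtained when the non-blanket node `D` is also observed.

```python
from itertools import product

# Hypothetical conditional probability tables: P(variable = 1 | parents).
p_u = 0.3
p_s = 0.6
p_a = {0: 0.2, 1: 0.9}                                      # P(A=1 | U)
p_c = {(0, 0): 0.1, (0, 1): 0.5, (1, 0): 0.7, (1, 1): 0.95}  # P(C=1 | A, S)
p_d = {0: 0.25, 1: 0.8}                                     # P(D=1 | C)


def bern(p, x):
    """Probability that a binary variable with P(1) = p takes value x."""
    return p if x == 1 else 1.0 - p


def joint(u, a, s, c, d):
    """Joint probability from the Bayesian network factorization."""
    return (bern(p_u, u) * bern(p_a[u], a) * bern(p_s, s)
            * bern(p_c[(a, s)], c) * bern(p_d[c], d))


def prob_a_given(u, s, c, d=None):
    """P(A=1 | U=u, S=s, C=c), optionally also conditioning on D=d."""
    d_values = (0, 1) if d is None else (d,)
    numerator = sum(joint(u, 1, s, c, dv) for dv in d_values)
    denominator = sum(joint(u, a, s, c, dv) for a in (0, 1) for dv in d_values)
    return numerator / denominator


# Largest change in P(A=1 | blanket) caused by additionally observing D.
max_gap = max(
    abs(prob_a_given(u, s, c, d) - prob_a_given(u, s, c))
    for u, s, c, d in product((0, 1), repeat=4)
)
```

Up to floating-point rounding, `max_gap` is zero: once the blanket is known, observing `D` does not change the prediction for `A`.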

In a Bayesian network, the values of the parents and children of a node evidently give information about that node; however, its children's parents also have to be included, because they can be used to explain away the node in question. In a Markov random field, the Markov blanket for a node is simply its adjacent nodes.
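Explaining away can be illustrated with two independent causes of a common effect. The sketch below assumes a hypothetical three-node network A -> C <- S with made-up probabilities; it shows that once the child `C` is observed, learning the value of the co-parent `S` still changes the belief about `A`, which is why `S` belongs in the blanket.

```python
# Hypothetical parameters: two rare, independent causes of one effect.
p_a = 0.1                                                    # P(A=1)
p_s = 0.1                                                    # P(S=1)
p_c = {(0, 0): 0.01, (0, 1): 0.8, (1, 0): 0.8, (1, 1): 0.95}  # P(C=1 | A, S)


def bern(p, x):
    """Probability that a binary variable with P(1) = p takes value x."""
    return p if x == 1 else 1.0 - p


def joint(a, s, c):
    """Joint probability of the network A -> C <- S."""
    return bern(p_a, a) * bern(p_s, s) * bern(p_c[(a, s)], c)


def prob_a(c, s=None):
    """P(A=1 | C=c), optionally also conditioning on S=s."""
    s_values = (0, 1) if s is None else (s,)
    numerator = sum(joint(1, sv, c) for sv in s_values)
    denominator = sum(joint(a, sv, c) for a in (0, 1) for sv in s_values)
    return numerator / denominator


given_effect = prob_a(c=1)                  # belief in A after seeing the effect
given_effect_and_cause = prob_a(c=1, s=1)   # ...and after seeing the other cause
```

With these numbers, observing the effect raises the probability of `A` from 0.1 to roughly 0.50, and additionally observing the alternative cause `S` brings it back down to roughly 0.12. Conditioning on the parents and children alone would therefore not shield `A` from `S`.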

Notes

- Pearl, Judea (1988). Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Representation and Reasoning Series. San Mateo CA: Morgan Kaufmann. ISBN 0-934613-73-7.