Lyapunov equation

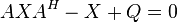

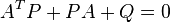

In control theory, the discrete Lyapunov equation is of the form

$$A X A^{H} - X + Q = 0$$

where $Q$ is a Hermitian matrix and $A^{H}$ is the conjugate transpose of $A$. The continuous Lyapunov equation is of the form

$$A X + X A^{H} + Q = 0.$$

The Lyapunov equation occurs in many branches of control theory, such as stability analysis and optimal control. This and related equations are named after the Russian mathematician Aleksandr Lyapunov.

Application to stability

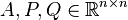

In the following theorems $A, P, Q \in \mathbb{R}^{n \times n}$, and $P$ and $Q$ are symmetric. The notation $P > 0$ means that the matrix $P$ is positive definite.

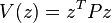

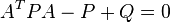

Theorem (continuous time version). Given any $Q > 0$, there exists a unique $P > 0$ satisfying $A^{T} P + P A + Q = 0$ if and only if the linear system $\dot{x} = A x$ is globally asymptotically stable. The quadratic function $V(z) = z^{T} P z$ is a Lyapunov function that can be used to verify stability.
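As a numerical illustration of the continuous time theorem, the following minimal sketch assumes the equation has the standard form $A^{T} P + P A + Q = 0$ and uses SciPy's `solve_continuous_lyapunov`, which solves $aX + Xa^{H} = q$. The matrices `A` and `Q` are illustrative values chosen here, not taken from the text.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

# Illustrative system matrix (an assumption); its eigenvalues are -1 and -2.
A = np.array([[0.0, 1.0],
              [-2.0, -3.0]])
Q = np.eye(2)  # any symmetric positive definite Q

# SciPy solves a X + X a^H = q, so pass a = A^T and q = -Q
# to obtain P with A^T P + P A + Q = 0.
P = solve_continuous_lyapunov(A.T, -Q)

residual = A.T @ P + P @ A + Q
is_hurwitz = bool(np.all(np.linalg.eigvals(A).real < 0))
# Symmetrize before the eigenvalue test to suppress rounding asymmetry.
is_pos_def = bool(np.all(np.linalg.eigvalsh((P + P.T) / 2) > 0))

print("max |residual|:", np.abs(residual).max())
print("A has all eigenvalues in the open left half-plane:", is_hurwitz)
print("P is positive definite:", is_pos_def)
```

For this stable `A` both checks should agree, as the theorem predicts; choosing an unstable `A` would be expected to yield a `P` that is not positive definite (or a singular problem).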

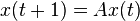

Theorem (discrete time version). Given any $Q > 0$, there exists a unique $P > 0$ satisfying $A^{T} P A - P + Q = 0$ if and only if the linear system $x(t+1) = A x(t)$ is globally asymptotically stable. As before, $V(z) = z^{T} P z$ is a Lyapunov function.
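The discrete time theorem can be checked in the same spirit. This sketch assumes the equation $A^{T} P A - P + Q = 0$ and uses SciPy's `solve_discrete_lyapunov`, which solves $a X a^{H} - X + q = 0$. The matrices and the initial state are illustrative assumptions. It also verifies that $V(z) = z^{T} P z$ decreases along a trajectory of $x(t+1) = A x(t)$.

```python
import numpy as np
from scipy.linalg import solve_discrete_lyapunov

# Illustrative system matrix (an assumption); eigenvalues 0.5 and 0.8.
A = np.array([[0.5, 0.1],
              [0.0, 0.8]])
Q = np.eye(2)

# SciPy solves a X a^H - X + q = 0, so pass a = A^T
# to obtain P with A^T P A - P + Q = 0.
P = solve_discrete_lyapunov(A.T, Q)

residual = A.T @ P @ A - P + Q
is_schur = bool(np.all(np.abs(np.linalg.eigvals(A)) < 1))
is_pos_def = bool(np.all(np.linalg.eigvalsh((P + P.T) / 2) > 0))


def V(z):
    """Candidate Lyapunov function V(z) = z^T P z."""
    return float(z @ P @ z)


# Evaluate V along a short trajectory of x(t+1) = A x(t).
x = np.array([1.0, -2.0])
values = []
for _ in range(6):
    values.append(V(x))
    x = A @ x
is_decreasing = all(b < a for a, b in zip(values, values[1:]))

print("max |residual|:", np.abs(residual).max())
print("spectral radius < 1:", is_schur)
print("P positive definite:", is_pos_def)
print("V strictly decreasing along trajectory:", is_decreasing)
```

The decrease follows from $V(Ax) - V(x) = x^{T}(A^{T} P A - P)x = -x^{T} Q x < 0$ for nonzero $x$.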

Computational aspects of solution

Specialized software is available for solving Lyapunov equations. For the discrete case, the Schur method of Kitagawa is often used.[1] For the continuous Lyapunov equation the method of Bartels and Stewart can be used.[2]

Analytic solution

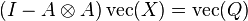

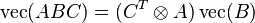

Defining the $\operatorname{vec}(A)$ operator as stacking the columns of a matrix $A$ and $A \otimes B$ as the Kronecker product of $A$ and $B$, the continuous time and discrete time Lyapunov equations can be expressed as solutions of a matrix equation. Furthermore, if the matrix $A$ is stable, the solution can also be expressed as an integral (continuous time case) or as an infinite sum (discrete time case).

Discrete time

Using the result that $\operatorname{vec}(ABC) = (C^{T} \otimes A)\operatorname{vec}(B)$, one has

$$(I - \bar{A} \otimes A)\operatorname{vec}(X) = \operatorname{vec}(Q)$$

where $I$ is a conformable identity matrix.[3] Here $\bar{A}$ denotes the entrywise complex conjugate of $A$, so $\bar{A} = A$ when $A$ is real. One may then solve for $\operatorname{vec}(X)$ by inverting or solving the linear equations. To get $X$, one must just reshape $\operatorname{vec}(X)$ appropriately.
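The vectorization approach can be sketched in NumPy as follows, assuming the discrete equation $A X A^{H} - X + Q = 0$, which vectorizes to $(I - \bar{A} \otimes A)\operatorname{vec}(X) = \operatorname{vec}(Q)$. The function name `solve_discrete_lyapunov_kron` and the example matrices are hypothetical. Because $\operatorname{vec}$ stacks columns, the reshapes use column-major (`order="F"`) layout.

```python
import numpy as np


def solve_discrete_lyapunov_kron(A, Q):
    """Solve A X A^H - X + Q = 0 by vectorization (hypothetical helper).

    Forms an n^2 x n^2 linear system, so it is only practical for small n.
    """
    n = A.shape[0]
    # vec(A X A^H) = (conj(A) kron A) vec(X), with vec stacking columns.
    M = np.eye(n * n) - np.kron(A.conj(), A)
    vec_Q = Q.reshape(-1, order="F")        # column-stacking vec(Q)
    vec_X = np.linalg.solve(M, vec_Q)
    return vec_X.reshape((n, n), order="F")  # undo the column stacking


# Illustrative complex example (values are assumptions).
A = np.array([[0.5, 0.2j],
              [0.1, -0.3 + 0.4j]])
Q = np.array([[2.0, 1.0j],
              [-1.0j, 3.0]])  # Hermitian

X = solve_discrete_lyapunov_kron(A, Q)
residual = A @ X @ A.conj().T - X + Q
print("max |residual|:", np.abs(residual).max())
```

Solving the linear system with `np.linalg.solve` rather than forming an explicit inverse is the usual numerical choice; the dedicated methods mentioned under computational aspects scale better for larger matrices.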

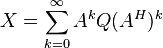

Moreover, if $A$ is stable, the solution $X$ can also be written as

$$X = \sum_{k=0}^{\infty} A^{k} Q (A^{H})^{k}.$$
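The series representation, assumed here to be $X = \sum_{k \ge 0} A^{k} Q (A^{H})^{k}$ for a matrix $A$ with spectral radius below one, can be approximated by truncation. The function name, tolerance, and example matrices below are hypothetical choices for illustration.

```python
import numpy as np


def discrete_lyapunov_series(A, Q, tol=1e-12, max_terms=10_000):
    """Approximate X = sum_k A^k Q (A^H)^k by truncating the series.

    Hypothetical helper: stops once the next term has Frobenius norm < tol.
    """
    term = np.array(Q, dtype=np.result_type(A, Q, np.float64))  # k = 0 term
    X = np.zeros_like(term)
    AH = A.conj().T
    for _ in range(max_terms):
        X += term
        term = A @ term @ AH  # next term: A^(k+1) Q (A^H)^(k+1)
        if np.linalg.norm(term) < tol:
            return X
    raise RuntimeError("series did not converge; is A stable?")


# Illustrative stable matrix (an assumption); eigenvalues 0.5 and 0.8.
A = np.array([[0.5, 0.1],
              [0.0, 0.8]])
Q = np.eye(2)

X = discrete_lyapunov_series(A, Q)
residual = A @ X @ A.conj().T - X + Q
print("max |residual|:", np.abs(residual).max())
```

The residual of the truncated sum equals the first omitted term, which is why the stopping test on `term` directly bounds the error in the equation.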

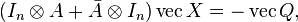

Continuous time

Using again the Kronecker product notation and the vectorization operator, one has the matrix equation

$$(I_n \otimes A + \bar{A} \otimes I_n)\operatorname{vec}(X) = -\operatorname{vec}(Q),$$

where $\bar{A}$ denotes the matrix obtained by complex conjugating the entries of $A$.
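A NumPy sketch of the continuous time vectorization, assuming the equation $A X + X A^{H} + Q = 0$ and its vectorized form $(I_n \otimes A + \bar{A} \otimes I_n)\operatorname{vec}(X) = -\operatorname{vec}(Q)$. The function name and the example matrices are hypothetical.

```python
import numpy as np


def solve_continuous_lyapunov_kron(A, Q):
    """Solve A X + X A^H + Q = 0 by vectorization (hypothetical helper).

    Forms an n^2 x n^2 linear system, so it is only practical for small n.
    """
    n = A.shape[0]
    I = np.eye(n)
    # vec(A X) = (I kron A) vec(X) and vec(X A^H) = (conj(A) kron I) vec(X).
    M = np.kron(I, A) + np.kron(A.conj(), I)
    vec_X = np.linalg.solve(M, -Q.reshape(-1, order="F"))
    return vec_X.reshape((n, n), order="F")  # column-major, matching vec


# Illustrative stable matrix (an assumption); eigenvalues -1 and -2.
A = np.array([[0.0, 1.0],
              [-2.0, -3.0]])
Q = np.eye(2)

X = solve_continuous_lyapunov_kron(A, Q)
residual = A @ X + X @ A.conj().T + Q
print(X)
print("max |residual|:", np.abs(residual).max())
```

The coefficient matrix is nonsingular exactly when no two eigenvalues of $A$ satisfy $\lambda_i + \bar{\lambda}_j = 0$, which holds in particular whenever all eigenvalues have negative real part.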

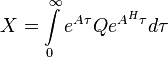

Similar to the discrete-time case, if $A$ is stable, the solution $X$ can also be written as

$$X = \int_{0}^{\infty} e^{A\tau} Q e^{A^{H}\tau} \, d\tau.$$
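The integral representation, assumed here to be $X = \int_0^\infty e^{A\tau} Q e^{A^{H}\tau}\,d\tau$ for a matrix $A$ whose eigenvalues all have negative real part, can be checked with a simple quadrature. This is a rough sketch rather than a recommended solver: it truncates the integral at a finite horizon `T` and applies the composite trapezoidal rule with step `h`. Both are hypothetical parameters, as are the function name and the example matrices.

```python
import numpy as np
from scipy.linalg import expm


def continuous_lyapunov_integral(A, Q, h=1e-3, T=20.0):
    """Trapezoidal approximation of the integral of e^{A t} Q e^{A^H t} on [0, T].

    Hypothetical helper; accuracy depends on the step h and the horizon T.
    """
    E = expm(A * h)            # one-step propagator e^{A h}
    EH = E.conj().T
    steps = int(round(T / h))
    term = np.array(Q, dtype=np.result_type(A, Q, np.float64))  # integrand at t = 0
    X = 0.5 * term             # trapezoid endpoint weight
    for k in range(1, steps + 1):
        term = E @ term @ EH   # integrand at t = k h
        X = X + (term if k < steps else 0.5 * term)
    return h * X


# Illustrative stable matrix (an assumption); eigenvalues -1 and -2.
A = np.array([[0.0, 1.0],
              [-2.0, -3.0]])
Q = np.eye(2)

X = continuous_lyapunov_integral(A, Q)
residual = A @ X + X @ A.conj().T + Q
print("max |residual|:", np.abs(residual).max())
```

The residual is small but not at machine precision, since it reflects the quadrature error; reducing `h` tightens it at the cost of more matrix products.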

References

1. Kitagawa, G. (1977). "An Algorithm for Solving the Matrix Equation X = F X F' + S". International Journal of Control 25 (5): 745–753. doi:10.1080/00207177708922266.

2. Bartels, R. H.; Stewart, G. W. (1972). "Algorithm 432: Solution of the matrix equation AX + XB = C". Comm. ACM 15 (9): 820–826. doi:10.1145/361573.361582.

3. Hamilton, J. (1994). Time Series Analysis. Princeton University Press. Equations 10.2.13 and 10.2.18. ISBN 0-691-04289-6.