Local martingale

In mathematics, a local martingale is a type of stochastic process that satisfies a localized version of the martingale property. Every martingale is a local martingale, and every bounded local martingale is a martingale. Moreover, every local martingale that is bounded from below is a supermartingale, and every local martingale that is bounded from above is a submartingale. In general, however, a local martingale is not a martingale, because its expectation can be distorted by large values of small probability. In particular, a driftless diffusion process is a local martingale, but not necessarily a martingale.

Local martingales are essential in stochastic analysis; see Itō calculus, semimartingale, and Girsanov theorem.

Definition

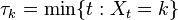

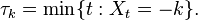

Let (Ω, F, P) be a probability space; let F∗ = { Ft | t ≥ 0 } be a filtration of F; let X : [0, +∞) × Ω → S be an F∗-adapted stochastic process on set S. Then X is called an F∗-local martingale if there exists a sequence of F∗-stopping times τk : Ω → [0, +∞) such that

- the τk are almost surely increasing: P[τk < τk+1] = 1;

- the τk diverge almost surely: P[τk → +∞ as k → +∞] = 1;

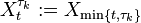

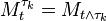

- the stopped process

- is an F∗-martingale for every k.
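The stopping operation in the definition can be illustrated on a discretized path. The following is a minimal sketch, assuming a path sampled on a finite time grid and a stopping time given by the first time the path reaches a level; the function name `stop_path` and the choice of stopping rule are illustrative and not part of the definition.

```python
from typing import List, Optional


def stop_path(path: List[float], level: float) -> List[float]:
    """Return the path frozen from the first index at which it reaches `level`.

    This mimics X_{min(t, tau)} on a time grid, with tau the first grid time
    at which the path is >= level (a hypothetical choice of stopping time).
    """
    stopped: List[float] = []
    frozen: Optional[float] = None
    for x in path:
        if frozen is None:
            stopped.append(x)
            if x >= level:
                frozen = x  # tau has occurred; keep this value from now on
        else:
            stopped.append(frozen)
    return stopped


print(stop_path([0.0, 1.0, 2.0, 3.0, 1.0], level=2.0))
```

If the level is never reached, the path is returned unchanged, which corresponds to the stopping time lying beyond the end of the grid.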

Examples

Example 1

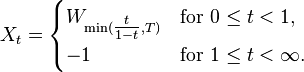

Let Wt be the Wiener process and T = min{ t : Wt = −1 } the time of first hit of −1. The stopped process Wmin{ t, T } is a martingale; its expectation is 0 at all times, nevertheless its limit (as t → ∞) is equal to −1 almost surely (a kind of gambler's ruin). A time change leads to a process

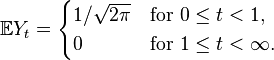

The process  is continuous almost surely; nevertheless, its expectation is discontinuous,

is continuous almost surely; nevertheless, its expectation is discontinuous,

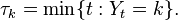

This process is not a martingale. However, it is a local martingale. A localizing sequence may be chosen as  if there is such t, otherwise τk = k. This sequence diverges almost surely, since τk = k for all k large enough (namely, for all k that exceed the maximal value of the process X). The process stopped at τk is a martingale.[details 1]

if there is such t, otherwise τk = k. This sequence diverges almost surely, since τk = k for all k large enough (namely, for all k that exceed the maximal value of the process X). The process stopped at τk is a martingale.[details 1]
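To see how the expectation in Example 1 can stay at 0 while the process ends at −1, it may help to compute the probability that the process has already reached −1 at a time t < 1. The sketch below assumes the time change t ↦ t/(1 − t) and uses the reflection principle, P(T ≤ s) = erfc(1/√(2s)); the function name `hit_probability` is illustrative.

```python
import math


def hit_probability(t: float) -> float:
    """P(T <= t/(1-t)) for 0 <= t < 1, where T is the first hit of -1.

    By the reflection principle, P(T <= s) = 2 P(W_s <= -1) = erfc(1/sqrt(2 s)).
    """
    if not 0.0 <= t < 1.0:
        raise ValueError("t must lie in [0, 1)")
    if t == 0.0:
        return 0.0
    s = t / (1.0 - t)  # Brownian time corresponding to t under the time change
    return math.erfc(1.0 / math.sqrt(2.0 * s))


for t in (0.5, 0.9, 0.999):
    print(t, hit_probability(t))
```

The probability approaches 1 as t approaches 1, while the expectation remains 0 for every t < 1. The balance is kept by the shrinking event on which −1 has not yet been hit, where the process takes large positive values.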

Example 2

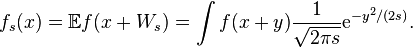

Let Wt be the Wiener process and ƒ a measurable function such that  Then the following process is a martingale:

Then the following process is a martingale:

here

The Dirac delta function  (strictly speaking, not a function), being used in place of

(strictly speaking, not a function), being used in place of  leads to a process defined informally as

leads to a process defined informally as  and formally as

and formally as

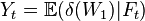

where

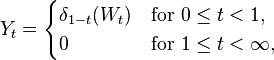

The process  is continuous almost surely (since

is continuous almost surely (since  almost surely), nevertheless, its expectation is discontinuous,

almost surely), nevertheless, its expectation is discontinuous,

This process is not a martingale. However, it is a local martingale. A localizing sequence may be chosen as
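The constant expectation 1/√(2π) before time 1 in Example 2 can be checked numerically. The sketch below assumes the Gaussian kernel δ_s(x) = e^{−x²/(2s)}/√(2πs) and integrates it against the density of W_t with a trapezoidal rule; the names `gaussian_density` and `expected_Y`, and the grid parameters, are illustrative choices.

```python
import math


def gaussian_density(x: float, variance: float) -> float:
    """Density of a centered normal distribution with the given variance."""
    return math.exp(-x * x / (2.0 * variance)) / math.sqrt(2.0 * math.pi * variance)


def expected_Y(t: float, half_width: float = 12.0, n: int = 24000) -> float:
    """Trapezoidal approximation of E[delta_{1-t}(W_t)] for 0 < t < 1."""
    if not 0.0 < t < 1.0:
        raise ValueError("t must lie in (0, 1)")
    h = 2.0 * half_width / n
    total = 0.0
    for i in range(n + 1):
        x = -half_width + i * h
        weight = 0.5 if i in (0, n) else 1.0
        # integrand: delta_{1-t}(x) times the density of W_t at x
        total += weight * gaussian_density(x, 1.0 - t) * gaussian_density(x, t)
    return total * h


for t in (0.25, 0.5, 0.9):
    print(t, expected_Y(t), 1.0 / math.sqrt(2.0 * math.pi))
```

The value does not depend on t in (0, 1): the integral is the density of the sum of independent centered normal variables with variances t and 1 − t, evaluated at 0.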

Example 3

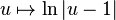

Let $Z_t$ be the complex-valued Wiener process, and

$$X_t = \ln |Z_t - 1|.$$

The process $X_t$ is continuous almost surely (since $Z_t$ does not hit 1, almost surely), and is a local martingale, since the function $u \mapsto \ln |u - 1|$ is harmonic (on the complex plane without the point 1). A localizing sequence may be chosen as $\tau_k = \min\{ t : X_t = -k \}$. Nevertheless, the expectation of this process is non-constant; moreover,

$$\mathbb{E} X_t \to \infty \quad \text{as } t \to \infty,$$

which can be deduced from the fact that the mean value of $\ln |u - 1|$ over the circle $|u| = r$ tends to infinity as $r \to \infty$. (In fact, it is equal to $\ln r$ for r ≥ 1 but to 0 for r ≤ 1.)
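The mean-value claim in Example 3 can be checked numerically. The sketch below averages ln|u − 1| over equally spaced points of the circle |u| = r with a midpoint rule; the function name `circle_mean_log` and the number of sample points are illustrative, and the approximation is only meant for r away from 1, where the integrand is smooth.

```python
import math


def circle_mean_log(r: float, n: int = 4000) -> float:
    """Midpoint-rule average of ln|u - 1| over the circle |u| = r."""
    total = 0.0
    for j in range(n):
        theta = 2.0 * math.pi * (j + 0.5) / n  # midpoint of the j-th arc
        u = complex(r * math.cos(theta), r * math.sin(theta))
        total += math.log(abs(u - 1.0))
    return total / n


for r in (0.5, 2.0, 10.0):
    print(r, circle_mean_log(r), max(math.log(r), 0.0))
```

The computed averages agree with ln r for r > 1 and with 0 for r < 1, so they grow without bound as the radius increases.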

Martingales via local martingales

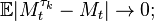

Let  be a local martingale. In order to prove that it is a martingale it is sufficient to prove that

be a local martingale. In order to prove that it is a martingale it is sufficient to prove that  in L1 (as

in L1 (as  ) for every t, that is,

) for every t, that is,  here

here  is the stopped process. The given relation

is the stopped process. The given relation  implies that

implies that  almost surely. The dominated convergence theorem ensures the convergence in L1 provided that

almost surely. The dominated convergence theorem ensures the convergence in L1 provided that

-

for every t.

for every t.

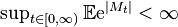

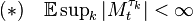

Thus, Condition (*) is sufficient for a local martingale  being a martingale. A stronger condition

being a martingale. A stronger condition

-

![\textstyle (**) \quad \mathbb{E} \sup_{s\in[0,t]} |M_s| < \infty](../I/m/87002c1bce79bc07c48d3bbd1a333d04.png) for every t

for every t

is also sufficient.

Caution. The weaker condition

$$\sup_{s\in[0,t]} \mathbb{E} |M_s| < \infty \quad \text{for every } t$$

is not sufficient. Moreover, the condition

is still not sufficient; for a counterexample see Example 3 above.

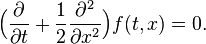

A special case:

$$M_t = f(t, W_t),$$

where $W_t$ is the Wiener process, and $f : [0, \infty) \times \mathbb{R} \to \mathbb{R}$ is twice continuously differentiable. The process $M_t$ is a local martingale if and only if f satisfies the PDE

$$\left( \frac{\partial}{\partial t} + \frac{1}{2} \frac{\partial^2}{\partial x^2} \right) f(t, x) = 0.$$

However, this PDE itself does not ensure that $M_t$ is a martingale. In order to apply (**) the following condition on f is sufficient: for every $\varepsilon > 0$ and t there exists $C = C(\varepsilon, t)$ such that

$$|f(s, x)| \le C e^{\varepsilon x^2}$$

for all $s \in [0,t]$ and $x \in \mathbb{R}$.
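The PDE criterion of the special case can be explored numerically for a given f. The sketch below approximates ∂f/∂t + ½ ∂²f/∂x² by central finite differences; the function name `pde_residual`, the step size, and the test functions are illustrative choices.

```python
import math
from typing import Callable


def pde_residual(f: Callable[[float, float], float],
                 t: float, x: float, h: float = 1e-3) -> float:
    """Central-difference estimate of df/dt + (1/2) d^2 f / dx^2 at (t, x)."""
    df_dt = (f(t + h, x) - f(t - h, x)) / (2.0 * h)
    d2f_dx2 = (f(t, x + h) - 2.0 * f(t, x) + f(t, x - h)) / (h * h)
    return df_dt + 0.5 * d2f_dx2


# f(t, x) = x^2 - t and f(t, x) = exp(x - t/2) satisfy the PDE; f(t, x) = x^2 does not.
print(pde_residual(lambda t, x: x * x - t, 1.0, 0.7))
print(pde_residual(lambda t, x: math.exp(x - t / 2.0), 1.0, 0.7))
print(pde_residual(lambda t, x: x * x, 1.0, 0.7))
```

A residual near zero at sampled points is only a numerical indication that the PDE holds, not a proof. Consistent with the PDE criterion, the residual for x² is about 1, reflecting the fact that the square of the Wiener process is not a local martingale, whereas the same process with t subtracted is.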

Technical details

- [details 1] For times before 1 the process is a martingale, since a stopped Brownian motion is. After the instant 1 it is constant. It remains to check it at the instant 1. By the bounded convergence theorem, the expectation at 1 is the limit of the expectation at (n−1)/n (as n tends to infinity), and the latter does not depend on n. The same argument applies to the conditional expectation.

References

- Øksendal, Bernt K. (2003). Stochastic Differential Equations: An Introduction with Applications (6th ed.). Berlin: Springer. ISBN 3-540-04758-1.