Littlewood–Offord problem

In mathematics, the Littlewood–Offord problem is the combinatorial question in geometry of bounding from above the number of subsums made out of vectors

that fall into a fixed convex set  .

.

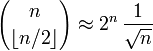

The first result (for d=1, 2) was given in a paper from 1938[1] by John Edensor Littlewood and A. Cyril Offord, on random polynomials. This Littlewood–Offord lemma states that, for real or complex numbers vi of absolute value at least one and any disc of radius one, not more than  of the 2n sums of vi fall into the disc.

of the 2n sums of vi fall into the disc.

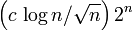

In 1945 Paul Erdős improved the upper bound for d=1 to

using Sperner's theorem. This bound is sharp; equality is attained when all v_i are equal.

Then Kleitman in 1966 showed that the same bound held for complex numbers. He extended this (1970) to vi in a normed space. See [2] for the proofs of these results.

The semi-sum

- m = ½Σ vi

can be subtracted from all the subsums. That is, by change of origin and then scaling by a factor of 2, we may as well consider sums

- Σ εivi

in which εi takes the value 1 or −1. This makes the problem into a probabilistic one, in which the question is of the distribution of these random vectors, and what can be said knowing nothing more about the vi.