Linear form

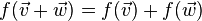

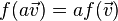

In linear algebra, a linear functional or linear form (also called a one-form or covector) is a linear map from a vector space to its field of scalars. In $\mathbb{R}^n$, if vectors are represented as column vectors, then linear functionals are represented as row vectors, and their action on vectors is given by the dot product, or equivalently by the matrix product with the row vector on the left and the column vector on the right. In general, if V is a vector space over a field k, then a linear functional f is a function from V to k that is linear:

$$f(v + w) = f(v) + f(w) \quad \text{for all } v, w \in V,$$

$$f(av) = a f(v) \quad \text{for all } v \in V,\ a \in k.$$

The set of all linear functionals from V to k, $\operatorname{Hom}_k(V, k)$, is itself a vector space over k under pointwise addition and scalar multiplication. This space is called the dual space of V, or sometimes the algebraic dual space, to distinguish it from the continuous dual space. It is often written V∗ or V′ when the field k is understood.

Continuous linear functionals

If V is a topological vector space, the space of continuous linear functionals (the continuous dual) is often simply called the dual space. If V is a Banach space, then so is its continuous dual. To distinguish the ordinary dual space from the continuous dual space, the former is sometimes called the algebraic dual. In finite dimensions, every linear functional is continuous, so the continuous dual coincides with the algebraic dual; in infinite dimensions this is no longer true.

Examples and applications

Linear functionals in Rⁿ

Suppose that vectors in the real coordinate space Rn are represented as column vectors

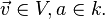

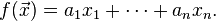

Then any linear functional can be written in these coordinates as a sum of the form:

This is just the matrix product of the row vector [a1 ... an] and the column vector  :

:
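As a small illustration of the row-vector description, the following Python sketch stores a functional on Rⁿ as a list of coefficients and evaluates it as a sum of products. The helper name `make_functional` and the sample vectors are hypothetical, chosen only for this example.

```python
# Illustrative sketch: a linear functional on R^n stored as a row of coefficients.
# `make_functional`, `a`, `x` and `y` are hypothetical names used only here.

def make_functional(a):
    """Return f with f(x) = a[0]*x[0] + ... + a[n-1]*x[n-1]."""
    def f(x):
        if len(x) != len(a):
            raise ValueError("dimension mismatch")
        return sum(ai * xi for ai, xi in zip(a, x))
    return f

f = make_functional([2, -1, 3])   # plays the role of the row vector [2, -1, 3]
x = [1, 4, 0]
y = [0, 1, 1]

value = f(x)                      # 2*1 + (-1)*4 + 3*0 = -2

# Linearity on these sample vectors: f(2x + 5y) equals 2 f(x) + 5 f(y).
combo = [2 * xi + 5 * yi for xi, yi in zip(x, y)]
is_linear_here = f(combo) == 2 * f(x) + 5 * f(y)
```

The check on `combo` only tests linearity for one pair of vectors; it is a sanity check, not a proof.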

Integration

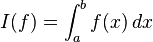

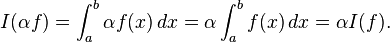

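Linear functionals first appeared in functional analysis, the study of vector spaces of functions. A typical example of a linear functional is integration: the linear transformation defined by the Riemann integral

$$I(f) = \int_a^b f(x)\,dx$$

is a linear functional from the vector space C[a,b] of continuous functions on the interval [a, b] to the real numbers. The linearity of I follows from the standard facts about the integral:

$$I(f + g) = \int_a^b [f(x) + g(x)]\,dx = \int_a^b f(x)\,dx + \int_a^b g(x)\,dx = I(f) + I(g),$$

$$I(\alpha f) = \int_a^b \alpha f(x)\,dx = \alpha \int_a^b f(x)\,dx = \alpha I(f).$$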
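The linearity of integration can be observed numerically. The sketch below approximates the integral by a midpoint sum, which is an assumption made for illustration (the article itself refers to the exact Riemann integral); the function name `integral` and the test functions are likewise hypothetical choices.

```python
import math

def integral(f, a, b, steps=1000):
    """Approximate the Riemann integral of f over [a, b] by a midpoint sum."""
    h = (b - a) / steps
    return h * sum(f(a + (k + 0.5) * h) for k in range(steps))

a, b = 0.0, 1.0
f = math.sin
g = math.exp
alpha = 3.0

# Additivity: I(f + g) compared with I(f) + I(g).
lhs_add = integral(lambda x: f(x) + g(x), a, b)
rhs_add = integral(f, a, b) + integral(g, a, b)

# Homogeneity: I(alpha * f) compared with alpha * I(f).
lhs_scale = integral(lambda x: alpha * f(x), a, b)
rhs_scale = alpha * integral(f, a, b)

# Comparisons use a tolerance because of floating-point rounding.
additive = math.isclose(lhs_add, rhs_add, rel_tol=1e-9)
homogeneous = math.isclose(lhs_scale, rhs_scale, rel_tol=1e-9)
```

Because the midpoint sum is itself a finite linear combination of function values, the two sides agree up to rounding regardless of how well the sum approximates the true integral.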

Evaluation

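Let $P_n$ denote the vector space of real-valued polynomial functions of degree ≤ n defined on an interval [a, b]. If c ∈ [a, b], then let $\operatorname{ev}_c : P_n \to \mathbb{R}$ be the evaluation functional:

$$\operatorname{ev}_c f = f(c).$$

The mapping f ↦ f(c) is linear since

$$(f + g)(c) = f(c) + g(c),$$

$$(\alpha f)(c) = \alpha f(c).$$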

If $x_0, \dots, x_n$ are n+1 distinct points in [a, b], then the evaluation functionals $\operatorname{ev}_{x_i}$, i = 0, 1, ..., n, form a basis of the dual space of $P_n$. (Lax (1996) proves this last fact using Lagrange interpolation.)

Application to quadrature

The integration functional I defined above restricts to a linear functional on the subspace $P_n$ of polynomials of degree ≤ n. If $x_0, \dots, x_n$ are n+1 distinct points in [a, b], then there are coefficients $a_0, \dots, a_n$ for which

$$I(f) = a_0 f(x_0) + a_1 f(x_1) + \cdots + a_n f(x_n)$$

for all f ∈ $P_n$. This forms the foundation of the theory of numerical quadrature.

This follows from the fact that the linear functionals $\operatorname{ev}_{x_i} : f \mapsto f(x_i)$ defined above form a basis of the dual space of $P_n$ (Lax 1996).
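One way to find such coefficients, sketched here under the assumption that it suffices to match the integrals of the monomials 1, x, ..., xⁿ (which span the polynomials of degree ≤ n), is to solve a small linear system in exact rational arithmetic. The function name `quadrature_weights` and the sample nodes are hypothetical choices for this example.

```python
from fractions import Fraction

def quadrature_weights(nodes, a, b):
    """Solve sum_i w[i] * x_i**k == integral of x**k over [a, b], k = 0..m-1."""
    m = len(nodes)
    # Augmented matrix: row k is [x_0**k, ..., x_{m-1}**k | exact integral of x**k].
    rows = []
    for k in range(m):
        moment = (Fraction(b) ** (k + 1) - Fraction(a) ** (k + 1)) / (k + 1)
        rows.append([Fraction(x) ** k for x in nodes] + [moment])
    # Gauss-Jordan elimination; distinct nodes make the system uniquely solvable.
    for col in range(m):
        pivot = next(r for r in range(col, m) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        rows[col] = [entry / rows[col][col] for entry in rows[col]]
        for r in range(m):
            if r != col:
                factor = rows[r][col]
                rows[r] = [u - factor * v for u, v in zip(rows[r], rows[col])]
    return [rows[i][m] for i in range(m)]

nodes = [Fraction(0), Fraction(1, 2), Fraction(1)]
weights = quadrature_weights(nodes, 0, 1)   # [1/6, 2/3, 1/6]

def p(x):
    """A sample polynomial of degree 2; its integral over [0, 1] is 5/2."""
    return 3 * x**2 - x + 2

approx = sum(w * p(x) for w, x in zip(weights, nodes))   # 5/2
```

For three equally spaced nodes on [0, 1] the weights that come out are those of Simpson's rule, and the weighted sum reproduces the integral of the sample quadratic exactly.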

Linear functionals in quantum mechanics

Linear functionals are particularly important in quantum mechanics. Quantum mechanical systems are represented by Hilbert spaces, which are anti–isomorphic to their own dual spaces. A state of a quantum mechanical system can be identified with a linear functional. For more information see bra–ket notation.

Distributions

In the theory of generalized functions, certain kinds of generalized functions called distributions can be realized as linear functionals on spaces of test functions.

Properties

- Any linear functional L is either trivial (equal to 0 everywhere) or surjective onto the scalar field. Indeed, just as the image of a vector subspace under a linear transformation is a subspace, so is the image of V under L. However, the only subspaces (i.e., k-subspaces) of k are {0} and k itself.

- A linear functional on a topological vector space is continuous if and only if its kernel is closed (Rudin 1991, Theorem 1.18).

- Linear functionals with the same kernel are proportional.

- The absolute value of any linear functional is a seminorm on its vector space.

Visualizing linear functionals

In finite dimensions, a linear functional can be visualized in terms of its level sets. In three dimensions, the level sets of a linear functional are a family of mutually parallel planes; in higher dimensions, they are parallel hyperplanes. This method of visualizing linear functionals is sometimes introduced in general relativity texts, such as Gravitation by Misner, Thorne & Wheeler (1973).

Dual vectors and bilinear forms

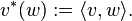

Every non-degenerate bilinear form on a finite-dimensional vector space V gives rise to an isomorphism from V to V*. Specifically, denoting the bilinear form on V by ⟨ , ⟩ (for instance, in Euclidean space ⟨v, w⟩ = v · w is the dot product of v and w), there is a natural isomorphism $V \to V^* : v \mapsto v^*$ given by

$$v^*(w) := \langle v, w \rangle.$$

The inverse isomorphism is given by $V^* \to V : f \mapsto f^*$, where f* is the unique element of V for which, for all w ∈ V,

$$\langle f^*, w \rangle = f(w).$$

The above defined vector v* ∈ V* is said to be the dual vector of v ∈ V.
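The correspondence between vectors and dual vectors can be made concrete in coordinates. The sketch below assumes V = R² and a bilinear form given by a 2×2 matrix G through ⟨v, w⟩ = vᵀGw; the matrix G and the names `bilinear`, `to_dual` and `from_dual` are hypothetical, introduced only for illustration.

```python
from fractions import Fraction

def bilinear(G, v, w):
    """<v, w> = v^T G w for a 2x2 matrix G given as a list of rows."""
    return sum(v[i] * G[i][j] * w[j] for i in range(2) for j in range(2))

def to_dual(G, v):
    """Coefficients (a row vector) of the functional v* : w -> <v, w>."""
    return [sum(v[i] * G[i][j] for i in range(2)) for j in range(2)]

def from_dual(G, f):
    """The vector f* with <f*, w> = f(w) for all w; solves G^T f* = f."""
    det = G[0][0] * G[1][1] - G[0][1] * G[1][0]
    if det == 0:
        raise ValueError("the bilinear form is degenerate")
    a, b = G[0][0], G[1][0]   # first row of G^T
    c, d = G[0][1], G[1][1]   # second row of G^T
    # Cramer's rule for the 2x2 system G^T u = f.
    return [Fraction(f[0] * d - b * f[1], det), Fraction(a * f[1] - c * f[0], det)]

G = [[2, 1], [1, 1]]          # non-degenerate: determinant is 1
v = [1, 2]
w = [5, -1]

v_star = to_dual(G, v)        # [4, 3], i.e. v*(w) = 4*w[0] + 3*w[1]
recovered = from_dual(G, v_star)
same_value = bilinear(G, v, w) == sum(c * wi for c, wi in zip(v_star, w))
```

Applying `from_dual` to the output of `to_dual` returns the original vector, which reflects that the two maps are mutually inverse when the form is non-degenerate.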

In an infinite-dimensional Hilbert space, analogous results hold by the Riesz representation theorem. There is a mapping V → V* into the continuous dual space V*. However, when the scalars are complex, this mapping is antilinear rather than linear.

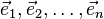

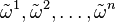

Bases in finite dimensions

Basis of the dual space in finite dimensions

Let the vector space V have a basis  , not necessarily orthogonal. Then the dual space V* has a basis

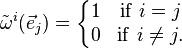

, not necessarily orthogonal. Then the dual space V* has a basis  called the dual basis defined by the special property that

called the dual basis defined by the special property that

Or, more succinctly,

where δ is the Kronecker delta. Here the superscripts of the basis functionals are not exponents but are instead contravariant indices.

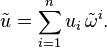

A linear functional  belonging to the dual space

belonging to the dual space  can be expressed as a linear combination of basis functionals, with coefficients ("components") ui,

can be expressed as a linear combination of basis functionals, with coefficients ("components") ui,

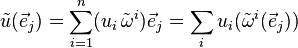

Then, applying the functional  to a basis vector ej yields

to a basis vector ej yields

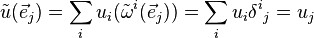

due to linearity of scalar multiples of functionals and pointwise linearity of sums of functionals. Then

that is

This last equation shows that an individual component of a linear functional can be extracted by applying the functional to a corresponding basis vector.
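A coordinate version of this computation can be sketched in Python. The example assumes V = R² with functionals written as rows of coefficients, so that the dual basis consists of the rows of the inverse of the matrix whose columns are the basis vectors; the names `dual_basis_2d` and `apply` and the sample basis are hypothetical.

```python
from fractions import Fraction

def dual_basis_2d(e1, e2):
    """Rows of the inverse of the 2x2 matrix whose columns are e1 and e2."""
    det = e1[0] * e2[1] - e2[0] * e1[1]
    if det == 0:
        raise ValueError("e1 and e2 do not form a basis")
    w1 = [Fraction(e2[1], det), Fraction(-e2[0], det)]
    w2 = [Fraction(-e1[1], det), Fraction(e1[0], det)]
    return w1, w2

def apply(functional, v):
    """Evaluate a functional (row of coefficients) on a vector."""
    return sum(c * x for c, x in zip(functional, v))

e1, e2 = [1, 0], [1, 1]           # a basis that is not orthogonal
w1, w2 = dual_basis_2d(e1, e2)    # w1 = [1, -1], w2 = [0, 1]

# Defining property of the dual basis: w_i(e_j) is 1 if i == j, else 0.
kronecker = [[apply(w, e) for e in (e1, e2)] for w in (w1, w2)]

# A functional with components 3 and 5 relative to the dual basis.
u = [3 * a + 5 * b for a, b in zip(w1, w2)]
components = [apply(u, e1), apply(u, e2)]   # recovers [3, 5]
```

Evaluating `u` on each basis vector returns exactly the coefficients used to build it, which is the component-extraction property described above.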

The dual basis and inner product

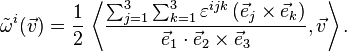

When the space V carries an inner product, it is possible to write explicitly a formula for the dual basis of a given basis. Let V have a (not necessarily orthogonal) basis $e_1, \dots, e_n$. In three dimensions (n = 3), the dual basis can be written explicitly as

$$\tilde{\omega}^i(v) = \frac{1}{2} \left\langle \frac{\sum_{j=1}^{3} \sum_{k=1}^{3} \varepsilon^{ijk} \, (e_j \times e_k)}{e_1 \cdot (e_2 \times e_3)}, \, v \right\rangle$$

for i = 1, 2, 3, where ε is the Levi-Civita symbol and ⟨ , ⟩ the inner product (or dot product) on V.
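The three-dimensional case can be checked with a short Python sketch. It assumes V = R³ with the ordinary dot product and integer coordinates, and uses the fact that each dual basis element is then represented by the cross product of the other two basis vectors, taken in cyclic order and divided by the scalar triple product. The function names and the sample basis are hypothetical.

```python
from fractions import Fraction

def cross(a, b):
    """Cross product of two vectors in R^3."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def dot(a, b):
    """Dot product of two vectors."""
    return sum(x * y for x, y in zip(a, b))

def dual_basis_3d(e1, e2, e3):
    """Vectors representing the dual basis via the dot product (integer input)."""
    volume = dot(e1, cross(e2, e3))          # scalar triple product
    if volume == 0:
        raise ValueError("the three vectors do not form a basis")
    pairs = [(e2, e3), (e3, e1), (e1, e2)]   # cyclic order
    return [[Fraction(c, volume) for c in cross(p, q)] for p, q in pairs]

basis = [[1, 0, 0], [1, 1, 0], [1, 1, 1]]    # not orthogonal
duals = dual_basis_3d(*basis)                # [[1, -1, 0], [0, 1, -1], [0, 0, 1]]

# Pairing each dual element with each basis vector gives the identity pattern.
table = [[dot(w, e) for e in basis] for w in duals]
```

The `table` of pairings is the 3×3 identity, which confirms the defining property of the dual basis for this particular choice of vectors.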

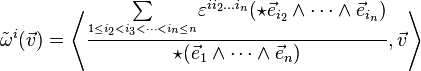

In higher dimensions, this generalizes to an analogous formula in which the Hodge star operator $\star$ takes the place of the cross product.


References

- Bishop, Richard; Goldberg, Samuel (1980), "Chapter 4", Tensor Analysis on Manifolds, Dover Publications, ISBN 0-486-64039-6

- Halmos, Paul (1974), Finite dimensional vector spaces, Springer, ISBN 0-387-90093-4

- Lax, Peter (1996), Linear algebra, Wiley-Interscience, ISBN 978-0-471-11111-5

- Misner, Charles W.; Thorne, Kip S.; Wheeler, John A. (1973), Gravitation, W. H. Freeman, ISBN 0-7167-0344-0

- Rudin, Walter (1991), Functional Analysis, McGraw-Hill Science/Engineering/Math, ISBN 978-0-07-054236-5

- Schutz, Bernard (1985), "Chapter 3", A first course in general relativity, Cambridge, UK: Cambridge University Press, ISBN 0-521-27703-5

