Kushner equation

In filtering theory, the Kushner equation[1] (after Harold Kushner) is an equation for the conditional probability density of the state of a stochastic nonlinear dynamical system, given noisy measurements of the state. It therefore provides the solution of the nonlinear filtering problem in estimation theory. The equation is sometimes referred to as the Stratonovich–Kushner[2][3][4][5] (or Kushner–Stratonovich) equation. The correct equation in terms of Itō calculus was first derived by Kushner, although a more heuristic Stratonovich version of it had already appeared in Stratonovich's works in the late 1950s. The derivation in terms of Itō calculus is due to Richard Bucy.[6]

Overview

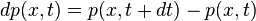

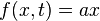

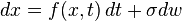

Assume the state of the system evolves according to

and a noisy measurement of the system state is available:

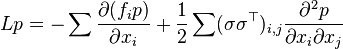

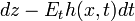

where w, v are independent Wiener processes. Then the conditional probability density p(x, t) of the state at time t is given by the Kushner equation:

where  is the Kolmogorov Forward operator and

is the Kolmogorov Forward operator and  is the variation of the conditional probability.

is the variation of the conditional probability.

The term  is the innovation i.e. the difference between the measurement and its expected value.

is the innovation i.e. the difference between the measurement and its expected value.
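The structure of the equation (a forward-operator prediction term plus an innovation-driven correction) can be illustrated numerically. The following is a minimal sketch, not a production filter: it assumes a scalar model $dx = f(x)\,dt + \sigma\,dw$, $dz = h(x)\,dt + \eta\,dv$, a uniform grid, central finite differences for the forward operator, and a plain explicit Euler step. The function names `kushner_step` and `run_demo`, the grid bounds, and all parameter values are illustrative assumptions.

```python
import numpy as np


def kushner_step(p, x, dz, dt, f, h, sigma, eta):
    """Advance the grid density p by one explicit Euler step of the scalar Kushner equation."""
    dx = x[1] - x[0]
    # Kolmogorov forward operator: L p = -d(f p)/dx + 0.5 * sigma^2 * d^2 p / dx^2
    drift = -np.gradient(f(x) * p, dx)
    diffusion = np.zeros_like(p)
    diffusion[1:-1] = 0.5 * sigma**2 * (p[2:] - 2.0 * p[1:-1] + p[:-2]) / dx**2
    # Conditional expectation E_t[h] and innovation dz - E_t[h] dt
    h_x = h(x)
    h_mean = np.sum(h_x * p) * dx
    innovation = dz - h_mean * dt
    p_new = p + (drift + diffusion) * dt + p * (h_x - h_mean) * innovation / eta**2
    # Explicit Euler does not guarantee positivity or exact normalisation,
    # so clip negative values and renormalise (an assumption of this sketch).
    p_new = np.clip(p_new, 0.0, None)
    return p_new / (np.sum(p_new) * dx)


def run_demo(seed=0, n_steps=1000, dt=1e-3):
    """Simulate dx = -x dt + dw, dz = x dt + 0.5 dv and filter it on a grid."""
    rng = np.random.default_rng(seed)
    f = lambda x: -x          # illustrative drift
    h = lambda x: x           # illustrative measurement function
    sigma, eta = 1.0, 0.5
    x_grid = np.linspace(-6.0, 6.0, 241)
    p = np.exp(-0.5 * x_grid**2)                 # standard normal prior
    p /= np.sum(p) * (x_grid[1] - x_grid[0])
    x_true = rng.normal()
    for _ in range(n_steps):
        dw, dv = rng.normal(scale=np.sqrt(dt), size=2)
        dz = h(x_true) * dt + eta * dv           # measurement increment over [t, t + dt]
        x_true += f(x_true) * dt + sigma * dw    # Euler-Maruyama step of the state
        p = kushner_step(p, x_grid, dz, dt, f, h, sigma, eta)
    return x_grid, p, x_true
```

Calling `x_grid, p, x_true = run_demo()` returns the final density on the grid, from which the conditional mean can be approximated as `np.sum(x_grid * p) * (x_grid[1] - x_grid[0])`. The time step is kept small relative to the squared grid spacing because the explicit diffusion step is only conditionally stable.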

Kalman-Bucy filter

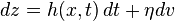

One can simply use the Kushner equation to derive the Kalman-Bucy filter for a linear diffusion process. Suppose we have  and

and  . The Kushner equation will be given by

. The Kushner equation will be given by

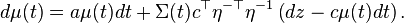

where  is the mean of the conditional probability at time

is the mean of the conditional probability at time  . Multiplying by

. Multiplying by  and integrating over it, we obtain the variation of the mean

and integrating over it, we obtain the variation of the mean

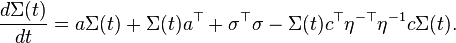

Likewise, the variation of the variance  is given by

is given by

The conditional probability is then given at every instant by a normal distribution  .

.
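The mean and variance equations of the linear case can be integrated directly. The sketch below assumes a scalar system $dx = a x\,dt + \sigma\,dw$, $dz = c x\,dt + \eta\,dv$, for which the variance equation reduces to $d\Sigma/dt = 2a\Sigma + \sigma^2 - c^2\Sigma^2/\eta^2$, and uses a simple Euler discretisation. The function names `simulate_linear` and `kalman_bucy` and the parameter values are illustrative assumptions, not part of the original derivation.

```python
import numpy as np


def simulate_linear(a, c, sigma, eta, x0, dt, n_steps, rng):
    """Euler-Maruyama simulation of dx = a x dt + sigma dw, dz = c x dt + eta dv."""
    x = np.empty(n_steps + 1)
    dz = np.empty(n_steps)
    x[0] = x0
    for k in range(n_steps):
        dw, dv = rng.normal(scale=np.sqrt(dt), size=2)
        dz[k] = c * x[k] * dt + eta * dv              # measurement increment
        x[k + 1] = x[k] + a * x[k] * dt + sigma * dw  # state update
    return x, dz


def kalman_bucy(dz, dt, a, c, sigma, eta, mu0, var0):
    """Euler discretisation of the scalar conditional mean and variance equations."""
    mu = np.empty(len(dz) + 1)
    var = np.empty(len(dz) + 1)
    mu[0], var[0] = mu0, var0
    for k, dz_k in enumerate(dz):
        gain = var[k] * c / eta**2                    # Sigma c^T eta^{-T} eta^{-1} in the scalar case
        mu[k + 1] = mu[k] + a * mu[k] * dt + gain * (dz_k - c * mu[k] * dt)
        var[k + 1] = var[k] + (2.0 * a * var[k] + sigma**2 - (c * var[k] / eta) ** 2) * dt
    return mu, var


# Illustrative parameter values (assumptions of this sketch)
rng = np.random.default_rng(0)
a, c, sigma, eta = -1.0, 1.0, 1.0, 0.5
x, dz = simulate_linear(a, c, sigma, eta, x0=0.0, dt=1e-3, n_steps=5000, rng=rng)
mu, var = kalman_bucy(dz, 1e-3, a, c, sigma, eta, mu0=0.0, var0=1.0)
```

The variance recursion does not depend on the measurements, so `var` settles to the positive root of $2a\Sigma + \sigma^2 - c^2\Sigma^2/\eta^2 = 0$ regardless of the noise realisation, while `mu` tracks the simulated state `x` through the innovation term.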

References

- [1] Kushner, H. J. (1964). On the differential equations satisfied by conditional probability densities of Markov processes, with applications. J. SIAM Control Ser. A, 2(1), pp. 106–119.

- [2] Stratonovich, R. L. (1959). Optimum nonlinear systems which bring about a separation of a signal with constant parameters from noise. Radiofizika, 2:6, pp. 892–901.

- [3] Stratonovich, R. L. (1959). On the theory of optimal non-linear filtering of random functions. Theory of Probability and its Applications, 4, pp. 223–225.

- [4] Stratonovich, R. L. (1960). Application of the Markov processes theory to optimal filtering. Radio Engineering and Electronic Physics, 5:11, pp. 1–19.

- [5] Stratonovich, R. L. (1960). Conditional Markov Processes. Theory of Probability and its Applications, 5, pp. 156–178.

- [6] Bucy, R. S. (1965). Nonlinear filtering theory. IEEE Transactions on Automatic Control, 10, p. 198.

![dp(x,t) = L[p(x,t)] dt + p(x,t) [h(x,t)-E_t h(x,t) ]^T \eta^{-\top}\eta^{-1} [dz-E_t h(x,t) dt].](../I/m/0c409256e104da80d101b0d3379a52ac.png)

![dp(x,t) = L[p(x,t)] dt + p(x,t) [c x- c \mu(t)]^T \eta^{-\top}\eta^{-1} [dz-c \mu(t) dt],](../I/m/90845e322894dc25ce14bf126ac27208.png)