Holm–Bonferroni method

In statistics, the Holm–Bonferroni method [1] is a method used to counteract the problem of multiple comparisons. It is intended to control the familywise error rate and offers a simple test that is uniformly more powerful than the Bonferroni correction. It is one of the earliest uses of stepwise algorithms in simultaneous inference.

It is named after Sture Holm, who invented the method in 1978, and Carlo Emilio Bonferroni.

Introduction

When several hypotheses are considered in the same test, the problem of multiplicity arises. Intuitively, the more hypotheses we check, the higher the probability of witnessing a rare result by chance. With 10 independent tests, each at a significance level of 0.05, the probability of committing one or more type I errors is greater than 0.4 ($1 - 0.95^{10} \approx 0.401$) if all the null hypotheses are in fact true. The Holm–Bonferroni method is one of many approaches that control the overall probability of one or more type I errors (the familywise error rate) by adjusting the rejection criterion of each individual hypothesis or comparison.
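The size of the multiplicity problem can be checked with a few lines of arithmetic. The sketch below assumes that the tests are independent and that every null hypothesis is true; the function name `familywise_error_rate` is only illustrative.

```python
def familywise_error_rate(m: int, alpha: float) -> float:
    """Probability of at least one type I error among m independent tests,
    each run at level alpha, when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** m


# The uncorrected error rate grows quickly with the number of tests.
for m in (1, 5, 10, 20):
    print(m, round(familywise_error_rate(m, 0.05), 4))
```

For 10 tests at level 0.05 the value is just above 0.40, which is the figure quoted in the paragraph above.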

Formulation

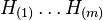

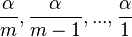

The method is as follows:

- Let

be a family of hypotheses and

be a family of hypotheses and  the corresponding P-values.

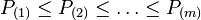

the corresponding P-values. - Start by ordering the p-values (from lowest to highest)

and let the associated hypotheses be

and let the associated hypotheses be

- For a given significance level

, let

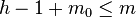

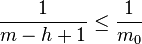

, let  be the minimal index such that

be the minimal index such that

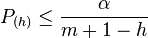

- Reject the null hypotheses

and do not reject

and do not reject

- If

then do not reject any of the null hypotheses and if no such

then do not reject any of the null hypotheses and if no such  exist then reject all of the null hypotheses.

exist then reject all of the null hypotheses.

The Holm–Bonferroni method ensures that $\text{FWER} \le \alpha$, where FWER is the familywise error rate.
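The step-down rule described in this section can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the function name `holm_bonferroni` and the sample p-values are hypothetical, and ties between p-values are broken by input order.

```python
from typing import List, Sequence


def holm_bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Return a list whose i-th entry is True when hypothesis i is rejected."""
    m = len(p_values)
    # Indices of the hypotheses, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for k, i in enumerate(order, start=1):
        if p_values[i] > alpha / (m + 1 - k):
            break  # k is the minimal failing index: retain this and all later ones
        reject[i] = True
    return reject


# Hypothetical p-values, given in the original (unsorted) order.
print(holm_bonferroni([0.001, 0.2, 0.012, 0.04]))  # [True, False, True, False]
```

The result is reported in the original order of the hypotheses, so the caller does not need to undo the sorting.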

Proof that Holm-Bonferroni controls the FWER

Let $H_{(1)}, \ldots, H_{(m)}$ be a family of hypotheses, and $P_{(1)} \le P_{(2)} \le \ldots \le P_{(m)}$ be the sorted p-values. Let $I_0$ be the set of indices corresponding to the (unknown) true null hypotheses, having $m_0$ members.

Let us assume that we wrongly reject a true hypothesis. We have to prove that the probability of this event is at most $\alpha$. Let $h$ be the index of the first rejected true hypothesis (first in the ordering given by the Holm–Bonferroni test). Then $H_{(1)}, \ldots, H_{(h-1)}$ are all rejected false hypotheses, so $h - 1 + m_0 \le m$. From there, we get $\frac{1}{m - h + 1} \le \frac{1}{m_0}$ (1). Since $H_{(h)}$ is rejected, we have $P_{(h)} \le \frac{\alpha}{m + 1 - h}$ by definition of the test. Using (1), the right hand side is at most $\frac{\alpha}{m_0}$. Thus, if we wrongly reject a true hypothesis, there has to be a true hypothesis with p-value at most $\frac{\alpha}{m_0}$.

So let us define $A = \left\{ P_i \le \frac{\alpha}{m_0} \text{ for some } i \in I_0 \right\}$. Whatever the (unknown) set of true hypotheses $I_0$ is, we have $\Pr(A) \le \alpha$ (by the Bonferroni inequalities). Therefore, the probability of rejecting a true hypothesis is at most $\alpha$.
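The final step of the proof can be written out explicitly. Here $A$ denotes the event that some true null hypothesis has a p-value of at most $\alpha / m_0$, $I_0$ is the set of true null hypotheses and $m_0$ is its size. The derivation assumes that each p-value is valid under its null, that is, $\Pr(P_i \le t) \le t$ for every $i \in I_0$ and every $t \in [0, 1]$; this is the usual assumption, although the proof does not state it.

```latex
\Pr(A)
  = \Pr\Bigl( \bigcup_{i \in I_0} \bigl\{ P_i \le \tfrac{\alpha}{m_0} \bigr\} \Bigr)
  \le \sum_{i \in I_0} \Pr\bigl( P_i \le \tfrac{\alpha}{m_0} \bigr)
  \le m_0 \cdot \frac{\alpha}{m_0}
  = \alpha
```

The first inequality is the Bonferroni (Boole) inequality, which needs no assumption about the dependence between the p-values.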

Proof that Holm-Bonferroni controls the FWER using the closure principle

The Holm–Bonferroni method can be viewed as a closed testing procedure,[2] with the Bonferroni method applied locally to each of the intersections of null hypotheses.

It is a shortcut procedure, since in practice the number of comparisons to be made is $m$ or fewer, while the number of all intersections of null hypotheses to be tested is of order $2^m$.

The closure principle states that a hypothesis $H_i$ in a family of hypotheses $H_1, \ldots, H_m$ is rejected, while controlling the familywise error rate at level $\alpha$, if and only if every intersection hypothesis that includes $H_i$ is rejected by a local test at level $\alpha$.

In the Holm–Bonferroni procedure, we first test $H_{(1)}$. If it is not rejected, then the intersection of all null hypotheses $\bigcap_{i=1}^{m} H_i$ is not rejected either. This intersection includes each of the elementary hypotheses $H_1, \ldots, H_m$, so each of them belongs to at least one intersection hypothesis that is not rejected, and thus we reject none of the elementary hypotheses.

If $H_{(1)}$ is rejected at level $\alpha / m$, then all the intersection sub-families that contain it are rejected too, thus $H_{(1)}$ is rejected. This is because $P_{(1)}$ is the smallest p-value in each of these intersection sub-families and the size of each sub-family is at most $m$, so that its Bonferroni threshold is at least $\alpha / m$.

The same rationale applies to $H_{(2)}$. However, since $H_{(1)}$ is already rejected, it is sufficient to reject all the intersection sub-families that contain $H_{(2)}$ but not $H_{(1)}$. Once $P_{(2)} \le \frac{\alpha}{m - 1}$ holds, all the intersections that contain $H_{(2)}$ are rejected.

The same applies for each $1 \le i \le m$.
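The shortcut can be checked numerically against a brute-force closed test. The sketch below enumerates every non-empty intersection of null hypotheses, applies a local Bonferroni test to each, and compares the outcome with the step-down rule. The function names and the p-values are hypothetical, and the brute-force version is only practical for small families because it visits all $2^m - 1$ intersections.

```python
from itertools import combinations
from typing import List, Sequence


def closed_bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Closed testing with a local Bonferroni test on every intersection."""
    m = len(p_values)
    reject = [True] * m
    for size in range(1, m + 1):
        for subset in combinations(range(m), size):
            # Local Bonferroni test: reject the intersection if min p <= alpha / |S|.
            if min(p_values[i] for i in subset) > alpha / size:
                # A retained intersection blocks the rejection of all its members.
                for i in subset:
                    reject[i] = False
    return reject


def holm_step_down(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Step-down shortcut: at most m comparisons instead of 2**m - 1."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for k, i in enumerate(order, start=1):
        if p_values[i] > alpha / (m + 1 - k):
            break
        reject[i] = True
    return reject


p = [0.001, 0.2, 0.012, 0.04]  # hypothetical p-values
print(closed_bonferroni(p) == holm_step_down(p))  # True
```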

Example

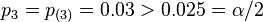

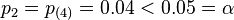

Consider four null hypotheses $H_1, \ldots, H_4$ with unadjusted p-values $p_1 = 0.01$, $p_2 = 0.04$, $p_3 = 0.03$ and $p_4 = 0.005$, to be tested at significance level $\alpha = 0.05$. Since the procedure is step-down, we first test $H_4 = H_{(1)}$, which has the smallest p-value $p_4 = p_{(1)} = 0.005$. The p-value is compared to $\alpha / 4 = 0.0125$, the null hypothesis is rejected and we continue to the next one. Since $p_1 = p_{(2)} = 0.01 < 0.0167 \approx \alpha / 3$, we reject $H_1 = H_{(2)}$ as well and continue. The next hypothesis $H_3$ is not rejected since $p_3 = p_{(3)} = 0.03 > 0.025 = \alpha / 2$. We stop testing and conclude that $H_1$ and $H_4$ are rejected and $H_2$ and $H_3$ are not rejected while controlling the familywise error rate at level $\alpha = 0.05$. Note that even though $p_2 = p_{(4)} = 0.04 < 0.05 = \alpha$ applies, $H_2$ is not rejected. This is because the testing procedure stops at the first hypothesis that is not rejected.
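The example can be traced step by step with a short script. It takes the unadjusted p-values of the example to be 0.01, 0.04, 0.03 and 0.005 for the first to fourth hypotheses; the labels `H1` to `H4` and the variable names are only illustrative.

```python
alpha = 0.05
p_values = {"H1": 0.01, "H2": 0.04, "H3": 0.03, "H4": 0.005}
m = len(p_values)

rejected = []
# Visit the hypotheses from the smallest p-value to the largest (step-down).
ordered = sorted(p_values.items(), key=lambda item: item[1])
for k, (name, p) in enumerate(ordered, start=1):
    threshold = alpha / (m + 1 - k)
    if p > threshold:
        print(f"step {k}: {name}, p = {p} > {threshold:.4f}: stop")
        break
    print(f"step {k}: {name}, p = {p} <= {threshold:.4f}: reject")
    rejected.append(name)

# Everything not rejected before the stop is retained, whatever its p-value.
retained = [name for name in p_values if name not in rejected]
print("rejected:", rejected, "retained:", retained)
```

The loop stops at the third step, so the fourth hypothesis in the ordering is never compared with its own threshold.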

Extensions

The Holm–Bonferroni method is an example of a closed test procedure.[3] As such, it controls the familywise error rate for all $m$ hypotheses at level $\alpha$ in the strong sense. Each intersection is tested using the simple Bonferroni test.

Adjusted P-value

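The adjusted p-values for the Holm–Bonferroni method are:

$$\tilde{p}_{(i)} = \max_{j \le i} \left\{ (m - j + 1) \, p_{(j)} \right\}_1, \quad \text{where } \{x\}_1 \equiv \min(x, 1).$$

In the earlier example, the adjusted p-values are $\tilde{p}_1 = 0.03$, $\tilde{p}_2 = 0.06$, $\tilde{p}_3 = 0.06$ and $\tilde{p}_4 = 0.02$. Only hypotheses $H_1$ and $H_4$ are rejected at level $\alpha = 0.05$.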
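A minimal sketch of the adjusted p-value computation is given below. It multiplies the j-th smallest p-value by m - j + 1, caps the result at 1, and takes a running maximum so that the adjusted values never decrease along the ordering. The function name is hypothetical, and the input is taken to be the example's unadjusted p-values 0.01, 0.04, 0.03 and 0.005.

```python
from typing import List, Sequence


def holm_adjusted_p_values(p_values: Sequence[float]) -> List[float]:
    """Holm-adjusted p-values, returned in the original order of the input."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for j, i in enumerate(order, start=1):
        term = min((m - j + 1) * p_values[i], 1.0)  # cap each term at 1
        running_max = max(running_max, term)        # enforce monotonicity
        adjusted[i] = running_max
    return adjusted


adjusted = holm_adjusted_p_values([0.01, 0.04, 0.03, 0.005])
print([round(a, 4) for a in adjusted])  # [0.03, 0.06, 0.06, 0.02]
```

Comparing each adjusted value with the significance level gives the same decisions as the step-down rule.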

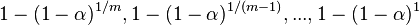

Šidák version

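When hypotheses are independent, it is possible to replace the thresholds $\frac{\alpha}{m}, \frac{\alpha}{m - 1}, \ldots, \frac{\alpha}{1}$ with:

$$1 - (1 - \alpha)^{1/m}, \; 1 - (1 - \alpha)^{1/(m-1)}, \; \ldots, \; 1 - (1 - \alpha)^{1}$$

resulting in a slightly more powerful test.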
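The gain from the Šidák thresholds can be seen by printing both sets of thresholds side by side. This is a small sketch with illustrative function names; `n` stands for the number of hypotheses still under test at a given step, that is, m + 1 - k.

```python
def bonferroni_threshold(alpha: float, n: int) -> float:
    """Per-step threshold of the Holm-Bonferroni method."""
    return alpha / n


def sidak_threshold(alpha: float, n: int) -> float:
    """Per-step threshold of the Sidak version (independent hypotheses)."""
    return 1.0 - (1.0 - alpha) ** (1.0 / n)


alpha, m = 0.05, 4
for k in range(1, m + 1):
    n = m + 1 - k
    print(k, round(bonferroni_threshold(alpha, n), 6), round(sidak_threshold(alpha, n), 6))
```

The Šidák threshold is slightly larger at every step except the last, where both equal the significance level, which is why the gain in power is small.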

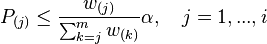

Weighted version

Let $P_{(1)}, \ldots, P_{(m)}$ be the ordered unadjusted p-values. Let $H_{(i)}$ and $0 \le w_{(i)}$ correspond to $P_{(i)}$. Reject $H_{(i)}$ as long as

$$P_{(j)} \le \frac{w_{(j)}}{\sum_{k=j}^{m} w_{(k)}} \, \alpha, \quad j = 1, \ldots, i.$$

The adjusted weighted p-values are:

$$\tilde{p}_{(i)} = \max_{j \le i} \left\{ \frac{\sum_{k=j}^{m} w_{(k)}}{w_{(j)}} \, p_{(j)} \right\}_1, \quad \text{where } \{x\}_1 \equiv \min(x, 1).$$

A hypothesis is rejected at level α if and only if its adjusted p-value is at most α. In the earlier example using equal weights, the adjusted p-values are 0.03, 0.06, 0.06, and 0.02. This is another way to see that using α = 0.05, only hypotheses one and four are rejected by this procedure.
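The weighted adjustment can be sketched in the same way as the unweighted one. The function name is hypothetical, the hypotheses are ordered by their unadjusted p-values as in the text, and the weights are assumed to be strictly positive so that the division is defined.

```python
from typing import List, Sequence


def weighted_holm_adjusted(p_values: Sequence[float],
                           weights: Sequence[float]) -> List[float]:
    """Weighted Holm-adjusted p-values, returned in the original input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    remaining = sum(weights)  # sum of the weights from the current step onward
    adjusted = [0.0] * m
    running_max = 0.0
    for i in order:
        term = min(remaining / weights[i] * p_values[i], 1.0)
        running_max = max(running_max, term)
        adjusted[i] = running_max
        remaining -= weights[i]  # drop the weight of the hypothesis just handled
    return adjusted


equal = weighted_holm_adjusted([0.01, 0.04, 0.03, 0.005], [1, 1, 1, 1])
print([round(a, 4) for a in equal])  # [0.03, 0.06, 0.06, 0.02]
```

With equal weights the ratio of weights reduces to m - j + 1, so the output matches the unweighted adjusted p-values quoted above.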

Alternatives and usage

The Holm–Bonferroni method is uniformly more powerful than the classic Bonferroni correction. Since it requires no additional assumptions, it can always be substituted for the Bonferroni correction. However, it is not the most powerful procedure available for simultaneous inference. There are many other methods intended to control the familywise error rate, and many of them are more powerful than Holm–Bonferroni. Among these are the Hochberg procedure (1988) and the Hommel procedure [4].

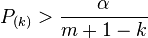

In the Hochberg procedure, rejection of $H_{(1)}, \ldots, H_{(k)}$ is made after finding the maximal index $k$ such that $P_{(k)} \le \frac{\alpha}{m + 1 - k}$. Thus, the Hochberg procedure is more powerful by construction. However, the Hochberg procedure requires the hypotheses to be independent (or to satisfy certain forms of positive dependence), while Holm–Bonferroni can be applied with no further assumptions on the data.
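The step-up search can be sketched as follows. The function name is hypothetical, and the p-values 0.01, 0.04, 0.03 and 0.005 are taken to be those of the article's example; the sketch says nothing about whether the dependence assumption holds for a given data set.

```python
from typing import List, Sequence


def hochberg(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Step-up rule: find the maximal k with P_(k) <= alpha / (m + 1 - k)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    # Scan from the largest p-value downward; the first success gives the maximal k.
    for k in range(m, 0, -1):
        if p_values[order[k - 1]] <= alpha / (m + 1 - k):
            for i in order[:k]:
                reject[i] = True  # reject H_(1), ..., H_(k)
            break
    return reject


print(hochberg([0.01, 0.04, 0.03, 0.005]))  # [True, True, True, True]
```

On these p-values the step-up rule rejects all four hypotheses, because the largest p-value, 0.04, is already at most α/1 = 0.05. The step-down Holm–Bonferroni rule stops earlier on the same data and rejects only two.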

Bonferroni contribution

Carlo Emilio Bonferroni did not take part in inventing the method described here. Holm originally called the method the "sequentially rejective Bonferroni test", and it became known as Holm-Bonferroni only after some time. Holm's motives for naming his method after Bonferroni are explained in the original paper: "The use of the Boole inequality within multiple inference theory is usually called the Bonferroni technique, and for this reason we will call our test the sequentially rejective Bonferroni test."

See also

- Multiple comparisons

- Bonferroni correction

- Familywise error rate

- Closed testing procedure

References

- Holm, S. (1979). "A simple sequentially rejective multiple test procedure". Scandinavian Journal of Statistics 6 (2): 65–70. JSTOR 4615733. MR 538597.

- Marcus, R.; Peritz, E.; Gabriel, K. R. (1976). "On closed testing procedures with special reference to ordered analysis of variance". Biometrika 63 (3): 655–660. doi:10.1093/biomet/63.3.655.

- Marcus, R.; Peritz, E.; Gabriel, K. R. (1976). "On closed testing procedures with special reference to ordered analysis of variance". Biometrika 63 (3): 655–660. doi:10.1093/biomet/63.3.655.

- Hommel, G. (1988). "A stagewise rejective multiple test procedure based on a modified Bonferroni test". Biometrika 75 (2): 383–386. doi:10.1093/biomet/75.2.383. ISSN 0006-3444.