Hewitt–Savage zero–one law

The Hewitt–Savage zero–one law is a theorem in probability theory, similar to Kolmogorov's zero–one law and the Borel–Cantelli lemma, that specifies that a certain type of event will either almost surely happen or almost surely not happen. It is sometimes known as the Hewitt–Savage law for symmetric events. It is named after Edwin Hewitt and Leonard Jimmie Savage.

Statement of the Hewitt–Savage zero–one law

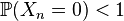

Let  be a sequence of independent and identically-distributed random variables taking values in a set

be a sequence of independent and identically-distributed random variables taking values in a set  . The Hewitt–Savage zero–one law says that any event whose occurrence or non-occurrence is determined by the values of these random variables and whose occurrence or non-occurrence is unchanged by finite permutations of the indices, has probability either 0 or 1 (a "finite" permutation is one that leaves all but finitely many of the indices fixed).

. The Hewitt–Savage zero–one law says that any event whose occurrence or non-occurrence is determined by the values of these random variables and whose occurrence or non-occurrence is unchanged by finite permutations of the indices, has probability either 0 or 1 (a "finite" permutation is one that leaves all but finitely many of the indices fixed).

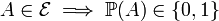

Somewhat more abstractly, define the exchangeable sigma algebra or sigma algebra of symmetric events  to be the set of events (depending on the sequence of variables

to be the set of events (depending on the sequence of variables  ) which are invariant under finite permutations of the indices in the sequence

) which are invariant under finite permutations of the indices in the sequence  . Then

. Then  .

.
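The invariance condition can be written out explicitly. The following is a sketch of one common formalization, writing $\mathcal{E}$ for the exchangeable sigma algebra of the sequence $X_1, X_2, \dots$; the symbols $B$ (a measurable set of sequences) and $\pi$ (a finite permutation) are notation introduced here for illustration.

$$
A = \{(X_1, X_2, \dots) \in B\} \in \mathcal{E}
\quad\Longleftrightarrow\quad
\{(X_{\pi(1)}, X_{\pi(2)}, \dots) \in B\} = A
\ \text{ for every finite permutation } \pi \text{ of } \mathbb{N}.
$$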

Since any finite permutation can be written as a product of transpositions, if we wish to check whether or not an event $A$ is symmetric (lies in $\mathcal{E}$), it is enough to check if its occurrence is unchanged by an arbitrary transposition $(i, j)$, $i, j \in \mathbb{N}$.
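The reduction to transpositions can be illustrated computationally. The sketch below is only an illustration, not part of the theorem: it decomposes a permutation of a finite index set into swaps, and the function names `to_transpositions` and `apply_swaps` are hypothetical. Indices start at 0 here, following Python's convention.

```python
def to_transpositions(perm):
    """Decompose a permutation of {0, ..., n-1} into transpositions.

    `perm` is a list in which perm[i] is the value placed at position i.
    Applying the returned swaps in order to the identity arrangement
    reproduces `perm`.
    """
    current = list(range(len(perm)))  # start from the identity arrangement
    swaps = []
    for i in range(len(perm)):
        if current[i] != perm[i]:
            # Positions before i are already correct, so the wanted value
            # must currently sit at some later position j > i.
            j = current.index(perm[i], i + 1)
            current[i], current[j] = current[j], current[i]
            swaps.append((i, j))
    return swaps


def apply_swaps(n, swaps):
    """Apply a list of transpositions to the identity arrangement of size n."""
    arrangement = list(range(n))
    for i, j in swaps:
        arrangement[i], arrangement[j] = arrangement[j], arrangement[i]
    return arrangement
```

For instance, `apply_swaps(4, to_transpositions([2, 0, 1, 3]))` rebuilds the permutation `[2, 0, 1, 3]` from swaps alone, which is why invariance under every transposition implies invariance under every finite permutation.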

Examples

Example 1

Let the sequence  take values in

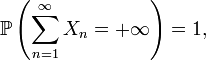

take values in  . Then the event that the series

. Then the event that the series  converges (to a finite value) is a symmetric event in

converges (to a finite value) is a symmetric event in  , since its occurrence is unchanged under transpositions (for a finite re-ordering, the convergence or divergence of the series — and, indeed, the numerical value of the sum itself — is independent of the order in which we add up the terms). Thus, the series either converges almost surely or diverges almost surely. If we assume in addition that the common expected value

, since its occurrence is unchanged under transpositions (for a finite re-ordering, the convergence or divergence of the series — and, indeed, the numerical value of the sum itself — is independent of the order in which we add up the terms). Thus, the series either converges almost surely or diverges almost surely. If we assume in addition that the common expected value ![\mathbb{E}[X_n] > 0](../I/m/94ce0ec35f1ee2ac3cd5ff4aac6f797a.png) (which essentially means that

(which essentially means that  because of the random variables' non-negativity), we may conclude that

because of the random variables' non-negativity), we may conclude that

i.e. the series diverges almost surely. This is a particularly simple application of the Hewitt–Savage zero–one law. In many situations, it can be easy to apply the Hewitt–Savage zero–one law to show that some event has probability 0 or 1, but surprisingly hard to determine which of these two extreme values is the correct one.
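One way to justify the divergence claim directly is the second Borel–Cantelli lemma, which applies because the $X_n$ are independent. The following is a sketch under the assumptions of this example (non-negative iid $X_n$ with $\mathbb{P}(X_n = 0) < 1$); the constants $\varepsilon$ and $p$ are introduced here for illustration.

$$
\begin{aligned}
&\mathbb{P}(X_n = 0) < 1 \ \Longrightarrow\ \text{there is } \varepsilon > 0 \text{ with } p := \mathbb{P}(X_n \ge \varepsilon) > 0, \\
&\sum_{n=1}^\infty \mathbb{P}(X_n \ge \varepsilon) = \sum_{n=1}^\infty p = \infty
\ \Longrightarrow\ \mathbb{P}(X_n \ge \varepsilon \text{ for infinitely many } n) = 1, \\
&X_n \ge \varepsilon \text{ for infinitely many } n
\ \Longrightarrow\ \sum_{n=1}^\infty X_n = +\infty .
\end{aligned}
$$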

Example 2

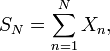

Continuing with the previous example, define

which is the position at step N of a random walk with the iid increments Xn. The event { SN = 0 infinitely often } is invariant under finite permutations. Therefore, the zero–one law is applicable and one infers that the probability of a random walk with real iid increments visiting the origin infinitely often is either one or zero. Visiting the origin infinitely often is a tail event with respect to the sequence (SN), but SN are not independent and therefore the Kolmogorov's zero–one law is not directly applicable here. This example is from pages 381 and 382 of the second edition of the probability theory book of Albert Shiryaev.
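The permutation invariance used in this example can be checked numerically on a finite stretch of a walk. The sketch below is an illustration only and makes several assumptions not in the text: the increments are ±1 steps, the seed and lengths are arbitrary, and the helper names (`partial_sums`, `zero_visit_times`, `permute_prefix`) are hypothetical. It shuffles only the first `k` increments and compares the walks from step `k` onward.

```python
import random


def partial_sums(increments):
    """Return [S_1, ..., S_N] for the increments X_1, ..., X_N."""
    sums, total = [], 0
    for x in increments:
        total += x
        sums.append(total)
    return sums


def zero_visit_times(increments):
    """Return the steps N (1-based) at which the walk is at 0."""
    return [n for n, s in enumerate(partial_sums(increments), start=1) if s == 0]


def permute_prefix(increments, k, rng):
    """Shuffle the first k increments and leave the rest in place.

    This mimics a finite permutation: only indices 1..k can move.
    """
    prefix = list(increments[:k])
    rng.shuffle(prefix)
    return prefix + list(increments[k:])


rng = random.Random(0)  # fixed seed, chosen arbitrarily for reproducibility
steps = [rng.choice((-1, 1)) for _ in range(1000)]  # assumed ±1 increments
k = 10
permuted = permute_prefix(steps, k, rng)

# From step k onward both walks occupy the same positions,
# so they share every visit to the origin after the first k steps.
same_tail = partial_sums(steps)[k - 1:] == partial_sums(permuted)[k - 1:]
late_original = [n for n in zero_visit_times(steps) if n >= k]
late_permuted = [n for n in zero_visit_times(permuted) if n >= k]
```

Because the two walks agree from step `k` onward, they differ in at most finitely many visits to the origin, so whether the origin is visited infinitely often cannot depend on the permutation. A finite simulation cannot, of course, decide whether the probability is 0 or 1.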

References

- Hewitt, E.; Savage, L. J. (1955). "Symmetric measures on Cartesian products". Trans. Amer. Math. Soc. 80: 470–501. doi:10.1090/s0002-9947-1955-0076206-8.

- Shiryaev, A. (1996). Probability Theory (Second ed.). New York: Springer-Verlag.