Hausdorff moment problem

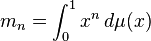

In mathematics, the Hausdorff moment problem, named after Felix Hausdorff, asks for necessary and sufficient conditions that a given sequence $\{ m_n : n = 0, 1, 2, \ldots \}$ be the sequence of moments

$$m_n = \int_0^1 x^n \, d\mu(x)$$

of some Borel measure μ supported on the closed unit interval [0, 1]. In the case $m_0 = 1$, this is equivalent to the existence of a random variable X supported on [0, 1] such that $E[X^n] = m_n$.
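
As a concrete illustration of the definition, the following Python sketch computes the first few moments of a discrete probability measure, i.e., a finite weighted sum of point masses on [0, 1]. The helper name `discrete_moments` and the two-point example are illustrative assumptions, not part of the original problem statement; exact `Fraction` arithmetic is used so that the moments are not blurred by rounding.

```python
from fractions import Fraction


def discrete_moments(atoms, weights, count):
    """Return [m_0, ..., m_{count-1}] for the measure sum_i weights[i] * delta(atoms[i]).

    Hypothetical helper: each moment is m_n = sum_i weights[i] * atoms[i]**n,
    the integral of x**n against a finite sum of point masses.
    """
    if any(not (0 <= x <= 1) for x in atoms):
        raise ValueError("atoms must lie in the closed unit interval [0, 1]")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    return [sum(w * x**n for x, w in zip(atoms, weights)) for n in range(count)]


# X is 0 or 1/2 with probability 1/2 each, so m_0 = 1 and E[X^n] = (1/2)**(n+1) for n >= 1.
atoms = [Fraction(0), Fraction(1, 2)]
weights = [Fraction(1, 2), Fraction(1, 2)]
print(discrete_moments(atoms, weights, 4))
# -> [Fraction(1, 1), Fraction(1, 4), Fraction(1, 8), Fraction(1, 16)]
```

Because the weights sum to 1, $m_0 = 1$ and the sequence is the moment sequence of the random variable X described in the comment.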

The essential difference between this and other well-known moment problems is that this one is posed on a bounded interval, whereas the Stieltjes moment problem considers the half-line [0, ∞) and the Hamburger moment problem considers the whole line (−∞, ∞).

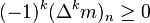

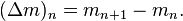

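In 1921, Hausdorff showed that $\{ m_n : n = 0, 1, 2, \ldots \}$ is such a moment sequence if and only if the sequence is completely monotonic, i.e., its difference sequences satisfy the inequality

$$(-1)^k (\Delta^k m)_n \geq 0$$

for all $n, k \geq 0$. Here, Δ is the difference operator given by

$$(\Delta m)_n = m_{n+1} - m_n,$$

and $\Delta^k$ denotes its k-fold iterate, with $\Delta^0 m = m$.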
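
Hausdorff's condition quantifies over all $n, k \geq 0$, so no finite computation can check it exhaustively; a finite prefix $m_0, \ldots, m_N$ only determines the values with $n + k \leq N$. The sketch below, whose helper names are hypothetical, applies the difference operator repeatedly to such a prefix and reports whether every determined value of $(-1)^k (\Delta^k m)_n$ is non-negative. A `True` result is therefore consistent with, but does not prove, the sequence being a moment sequence; a `False` result does rule it out.

```python
from fractions import Fraction


def forward_difference(seq):
    """(Delta m)_n = m_{n+1} - m_n; the result is one term shorter than seq."""
    return [b - a for a, b in zip(seq, seq[1:])]


def completely_monotone_prefix(m):
    """Check (-1)^k (Delta^k m)_n >= 0 for every n, k that the finite prefix m determines.

    Only a necessary test: the full condition ranges over all n, k >= 0.
    """
    diff = list(m)  # Delta^0 m = m
    sign = 1        # (-1)^k for the current order k
    while diff:
        if any(sign * d < 0 for d in diff):
            return False
        diff = forward_difference(diff)
        sign = -sign
    return True


# Moments of the uniform distribution on [0, 1]: m_n = 1/(n + 1).
uniform = [Fraction(1, n + 1) for n in range(12)]
print(completely_monotone_prefix(uniform))  # True

# m_n = 2**n fails already at k = 1, n = 0: -(m_1 - m_0) = -1 < 0.
print(completely_monotone_prefix([2**n for n in range(12)]))  # False
```

The uniform prefix passes because its signed differences equal $\int_0^1 x^n (1-x)^k \, dx > 0$, while $2^n$ grows and so cannot be the moment sequence of a measure on [0, 1].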

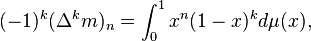

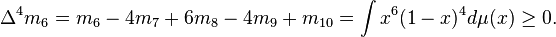

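The necessity of this condition is easily seen by the identity

$$(-1)^k (\Delta^k m)_n = \sum_{j=0}^{k} (-1)^j \binom{k}{j} m_{n+j} = \int_0^1 x^n (1-x)^k \, d\mu(x),$$

which is non-negative, being the integral of a function that is non-negative on [0, 1]. The second equality follows by substituting the moment integral for each $m_{n+j}$ and applying the binomial theorem, $\sum_{j=0}^{k} (-1)^j \binom{k}{j} x^j = (1-x)^k$. For example, it is necessary to have

$$(\Delta^4 m)_6 = m_6 - 4m_7 + 6m_8 - 4m_9 + m_{10} = \int_0^1 x^6 (1-x)^4 \, d\mu(x) \geq 0.$$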
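
The identity $(-1)^k (\Delta^k m)_n = \int_0^1 x^n (1-x)^k \, d\mu(x)$ can be checked exactly for any measure with finitely many atoms, where the integral becomes a weighted sum over the atoms. The sketch below uses a hypothetical three-atom measure, chosen only for illustration, and compares the binomial expansion of the left-hand side with that weighted sum for $n = 6$, $k = 4$, i.e., $m_6 - 4m_7 + 6m_8 - 4m_9 + m_{10}$.

```python
from fractions import Fraction
from math import comb


def signed_kth_difference(m, n, k):
    """(-1)^k (Delta^k m)_n written out as sum_{j=0}^k (-1)^j C(k, j) m_{n+j}."""
    return sum((-1) ** j * comb(k, j) * m[n + j] for j in range(k + 1))


# Hypothetical three-atom measure on [0, 1], chosen only for illustration.
atoms = [Fraction(0), Fraction(1, 3), Fraction(3, 4)]
weights = [Fraction(1, 5), Fraction(1, 2), Fraction(3, 10)]
# Moments m_0, ..., m_10 are enough for n + k = 10.
m = [sum(w * x**p for x, w in zip(atoms, weights)) for p in range(11)]

n, k = 6, 4
lhs = signed_kth_difference(m, n, k)  # m_6 - 4 m_7 + 6 m_8 - 4 m_9 + m_10
rhs = sum(w * x**n * (1 - x) ** k for x, w in zip(atoms, weights))  # integral of x^n (1-x)^k
print(lhs == rhs, lhs >= 0)  # True True
```

Exact rational arithmetic makes the equality test meaningful; with floating-point moments the two sides would agree only up to rounding error, and large k amplifies that error through cancellation.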

See also

- Total monotonicity

References

- Hausdorff, F. "Summationsmethoden und Momentfolgen. I." Mathematische Zeitschrift 9, 74-109, 1921.

- Hausdorff, F. "Summationsmethoden und Momentfolgen. II." Mathematische Zeitschrift 9, 280-299, 1921.

- Feller, W. "An Introduction to Probability Theory and Its Applications", volume II, John Wiley & Sons, 1971.

- Shohat, J. A.; Tamarkin, J. D. "The Problem of Moments", American Mathematical Society, New York, 1943.