GV-linear-code

In coding theory, one is usually concerned with bounds on the parameters of a code, such as the rate R, the relative distance, and the block length. The Gilbert–Varshamov bound gives a lower bound on the rate achievable by a general code with a given relative distance. For codes over alphabets of size less than 49, it is the best known such bound in terms of relative distance.

Gilbert–Varshamov bound theorem

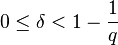

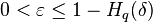

Theorem: Let $q \ge 2$. For every $0 \le \delta < 1 - \frac{1}{q}$ and $0 < \varepsilon \le 1 - H_q(\delta)$, there exists a code with rate $R \ge 1 - H_q(\delta) - \varepsilon$ and relative distance $\delta$.

Here $H_q$ is the q-ary entropy function defined as follows:

$$H_q(x) = x\log_q(q-1) - x\log_q x - (1-x)\log_q(1-x).$$
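To get a feel for the numbers in the theorem, the q-ary entropy function and the resulting rate guarantee can be evaluated numerically. The following is a minimal sketch; the function names `q_ary_entropy` and `gv_rate` are illustrative and not part of the source, and the convention $0 \log 0 = 0$ is assumed at the endpoints.

```python
import math


def q_ary_entropy(x: float, q: int) -> float:
    """H_q(x) = x*log_q(q-1) - x*log_q(x) - (1-x)*log_q(1-x), with 0*log(0) taken as 0."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")

    def xlogx(t: float) -> float:
        # Convention (assumed here): t * log_q(t) is 0 at t = 0.
        return 0.0 if t == 0.0 else t * math.log(t, q)

    return x * math.log(q - 1, q) - xlogx(x) - xlogx(1.0 - x)


def gv_rate(delta: float, q: int, eps: float = 0.0) -> float:
    """Rate guaranteed by the theorem: 1 - H_q(delta) - eps."""
    return 1.0 - q_ary_entropy(delta, q) - eps
```

For example, `gv_rate(0.11, 2)` is close to one half, so binary codes of relative distance about 0.11 and rate about 1/2 are guaranteed to exist for large block lengths.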

The above result was proved by Edgar Gilbert for general codes using a greedy method. For linear codes, Varshamov proved it using the probabilistic method applied to a random linear code. That proof is given in the following part.

High-level proof:

To show the existence of a linear code that satisfies these constraints, the probabilistic method is applied to a random linear code. Specifically, the linear code is chosen by picking a random generator matrix $G$ whose entries are chosen uniformly over the field $\mathbb{F}_q$. The Hamming distance of a linear code equals the minimum weight of a nonzero codeword. So to prove that the linear code generated by $G$ has Hamming distance at least $d$, we will show that $\mathrm{wt}(mG) \ge d$ for any $m \in \mathbb{F}_q^k \setminus \{0\}$. To do so, we bound the complementary event: the probability that the linear code generated by $G$ has Hamming distance less than $d$ is exponentially small in $n$. Then, by the probabilistic method, there exists a linear code satisfying the theorem.

Formal proof:

By the probabilistic method, to show that there exists a linear code with Hamming distance at least $d$, it suffices to show that the probability that a random linear code has distance less than $d$ is smaller than 1. We will in fact show that this probability is exponentially small in $n$.

A linear code is defined by its generator matrix, so we use a "random generator matrix" $G$ as a means of describing the randomness of the linear code. A random generator matrix $G$ of size $k \times n$ contains $kn$ elements, which are chosen independently and uniformly over the field $\mathbb{F}_q$.

Recall that in a linear code, the distance equals the minimum weight of a nonzero codeword. This is one of the basic properties of linear codes.

Let $\mathrm{wt}(y)$ denote the weight of the codeword $y$. So

$$\begin{aligned}
P &= \Pr_{\text{random }G}[\text{linear code generated by }G\text{ has distance} < d] \\
&= \Pr_{\text{random }G}[\text{there exists a codeword }y \ne 0\text{ in the linear code generated by }G\text{ such that }\mathrm{wt}(y) < d].
\end{aligned}$$

Also, if a codeword $y$ belongs to the linear code generated by $G$, then $y = mG$ for some vector $m \in \mathbb{F}_q^k$.

Therefore

$$P = \Pr_{\text{random }G}[\text{there exists a vector }m \in \mathbb{F}_q^k \setminus \{0\}\text{ such that }\mathrm{wt}(mG) < d].$$

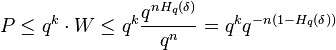

By Boole's inequality, we have:

$$P \le \sum_{m \in \mathbb{F}_q^k \setminus \{0\}} \Pr_{\text{random }G}[\mathrm{wt}(mG) < d].$$

Now, for a given message $m \in \mathbb{F}_q^k \setminus \{0\}$, we want to compute

$$W = \Pr_{\text{random }G}[\mathrm{wt}(mG) < d].$$

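Let $\Delta(m_1, m_2)$ denote the Hamming distance between two messages $m_1$ and $m_2$.

Then for any message $m$, we have $\mathrm{wt}(m) = \Delta(0, m)$.

Using this fact, we obtain the following equality:

$$W = \sum_{\text{all }y \in \mathbb{F}_q^n\text{ s.t. }\Delta(0,y) \le d - 1} \Pr_{\text{random }G}[mG = y].$$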

Due to the randomness of $G$, for a fixed nonzero $m$ the product $mG$ is a uniformly random vector from $\mathbb{F}_q^n$.

So

$$\Pr_{\text{random }G}[mG = y] = q^{-n}.$$
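The uniformity of $mG$ can be checked exhaustively for tiny parameters. The sketch below enumerates every $k \times n$ matrix over $\mathbb{F}_q$ and counts how often each vector $y = mG$ occurs. It assumes $q$ is prime, so that integers modulo $q$ model the field; the helper names `vec_mat_mul` and `distribution_of_mG` are illustrative only.

```python
from collections import Counter
from itertools import product


def vec_mat_mul(m, G, q):
    """Row vector m (length k) times a k x n matrix G, with arithmetic mod a prime q."""
    k, n = len(G), len(G[0])
    return tuple(sum(m[i] * G[i][j] for i in range(k)) % q for j in range(n))


def distribution_of_mG(m, k, n, q):
    """Count occurrences of y = mG as G ranges over all q^(k*n) matrices over F_q."""
    counts = Counter()
    for entries in product(range(q), repeat=k * n):
        # Slice the flat tuple of k*n entries into k rows of length n.
        G = [entries[i * n:(i + 1) * n] for i in range(k)]
        counts[vec_mat_mul(m, G, q)] += 1
    return counts


# Small illustrative case: q = 3, k = n = 2, fixed nonzero message m = (1, 2).
counts = distribution_of_mG(m=(1, 2), k=2, n=2, q=3)
```

Every one of the $q^n$ vectors receives the same count, $q^{kn}/q^n$, which is the statement $\Pr[mG = y] = q^{-n}$. For $m = 0$ the distribution collapses onto the zero vector, which is why the sum in Boole's inequality runs over nonzero messages only.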

Let $\mathrm{Vol}_q(r, n)$ denote the volume of a Hamming ball of radius $r$ in $\mathbb{F}_q^n$. Then, with $d = \delta n$:

$$W = \frac{\mathrm{Vol}_q(d - 1, n)}{q^n} \le \frac{q^{nH_q(\delta)}}{q^n}.$$

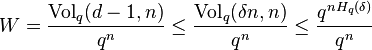

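(The latter inequality comes from the upper bound on the volume of a Hamming ball, $\mathrm{Vol}_q(\delta n, n) \le q^{nH_q(\delta)}$, which holds for $\delta \le 1 - \frac{1}{q}$.)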
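The volume bound used here can be checked numerically for concrete parameters. The sketch below computes the exact volume of a Hamming ball and compares its base-$q$ logarithm with $nH_q(\delta)$. The function names are illustrative, the radius is taken as $\lfloor \delta n \rfloor$, and the comparison is only meaningful under the assumption $\delta \le 1 - 1/q$.

```python
import math


def hamming_ball_volume(r, n, q):
    """Number of vectors in F_q^n within Hamming distance r of a fixed center."""
    return sum(math.comb(n, i) * (q - 1) ** i for i in range(r + 1))


def q_ary_entropy(x, q):
    """q-ary entropy H_q(x), with 0*log(0) taken as 0."""
    def xlogx(t):
        return 0.0 if t == 0.0 else t * math.log(t, q)
    return x * math.log(q - 1, q) - xlogx(x) - xlogx(1.0 - x)


def log_q_volume_and_bound(n, delta, q):
    """Return (log_q Vol_q(floor(delta*n), n), n * H_q(delta)).

    Working with logarithms avoids overflowing floats with q^(n*H_q(delta)).
    Assumes 0 <= delta <= 1 - 1/q, the range in which the bound is stated.
    """
    r = math.floor(delta * n)
    return math.log(hamming_ball_volume(r, n, q), q), n * q_ary_entropy(delta, q)


lhs, rhs = log_q_volume_and_bound(n=100, delta=0.1, q=2)
```

Here `lhs` should not exceed `rhs`; the gap between the two grows only logarithmically in $n$, which is why the entropy bound is tight enough for the argument.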

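Then, since there are fewer than $q^k$ nonzero messages,

$$P \le q^k \cdot W \le q^k \cdot \frac{q^{nH_q(\delta)}}{q^n} = q^{k - n(1 - H_q(\delta))}.$$

By choosing $k = (1 - H_q(\delta) - \varepsilon)n$, the above inequality becomes

$$P \le q^{-\varepsilon n}.$$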

Finally, $q^{-\varepsilon n} \ll 1$ is exponentially small in $n$, which is what we wanted to show. Hence, with positive probability, every nonzero message $m$ satisfies $\mathrm{wt}(mG) \ge d$; for $d \ge 1$ this also means that $G$ has full rank, so the code has dimension $k$. By the probabilistic method, there exists a linear code $C$ with relative distance $\delta$ and rate $R$ at least $1 - H_q(\delta) - \varepsilon$, which completes the proof.

Comments

- The Varshamov construction above is not explicit; that is, it does not specify a deterministic method for constructing a linear code that satisfies the Gilbert–Varshamov bound. The naive approach is to go over all generator matrices $G$ of size $k \times n$ over the field $\mathbb{F}_q$ and check whether the resulting linear code has the required Hamming distance. This leads to an exponential-time algorithm.

- We also have a Las Vegas construction that takes a random linear code and checks whether this code has good Hamming distance. Nevertheless, this construction also has exponential running time.
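The Las Vegas construction mentioned above can be sketched as follows. This is only an illustration under stated assumptions: $q$ is taken to be prime so that integers modulo $q$ model the field, the distance check is a brute-force pass over all $q^k - 1$ nonzero messages (hence exponential in $k$), and the names `min_weight` and `las_vegas_code` as well as the `max_tries` cap are hypothetical additions, not part of the source.

```python
import random
from itertools import product


def min_weight(G, q):
    """Minimum Hamming weight of mG over all nonzero messages m (brute force)."""
    k, n = len(G), len(G[0])
    best = n + 1
    for m in product(range(q), repeat=k):
        if not any(m):
            continue  # skip the zero message
        codeword = [sum(m[i] * G[i][j] for i in range(k)) % q for j in range(n)]
        best = min(best, sum(1 for c in codeword if c != 0))
    return best


def las_vegas_code(k, n, d, q, rng, max_tries=10_000):
    """Sample random k x n generator matrices until one has minimum weight >= d.

    Returns the matrix, or None if max_tries samples all fail. The cap is a
    safeguard added for this sketch; a pure Las Vegas loop would keep sampling.
    """
    for _ in range(max_tries):
        G = [[rng.randrange(q) for _ in range(n)] for _ in range(k)]
        if min_weight(G, q) >= d:
            return G
    return None


rng = random.Random(0)
G = las_vegas_code(k=3, n=10, d=3, q=2, rng=rng)
```

Any matrix returned is guaranteed to generate a code of dimension $k$ and distance at least $d$, because a minimum weight of at least $d \ge 1$ over nonzero messages rules out rank deficiency. The cost lies in the verification step, which enumerates exponentially many messages.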

See also

- Gilbert–Varshamov bound due to Gilbert construction for the general code

- Hamming Bound

- Probabilistic method
