Fast inverse square root

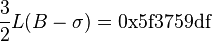

Fast inverse square root (sometimes referred to as Fast InvSqrt() or by the hexadecimal constant 0x5f3759df) is a method of calculating x−½, the reciprocal (or multiplicative inverse) of a square root for a 32-bit floating point number in IEEE 754 floating point format. The algorithm was probably developed at Silicon Graphics in the early 1990s, and an implementation appeared in 1999 in the Quake III Arena source code, but the method did not appear on public forums such as Usenet until 2002 or 2003.[1] At the time, the primary advantage of the algorithm came from avoiding computationally expensive floating point operations in favor of integer operations. Inverse square roots are used to compute angles of incidence and reflection for lighting and shading in computer graphics.

The algorithm accepts a 32-bit floating point number as the input and stores a halved value for later use. Then, treating the bits representing the floating point number as a 32-bit integer, a logical shift right of one bit is performed and the result subtracted from the "magic constant" 0x5f3759df. This is the first approximation of the inverse square root of the input. Treating the bits again as floating point it runs one iteration of Newton's method to return a more precise approximation. This computes an approximation of the inverse square root of a floating point number approximately four times faster than floating point division.

The algorithm was originally attributed to John Carmack, but an investigation showed that the code had deeper roots in both the hardware and software side of computer graphics. Adjustments and alterations passed through both Silicon Graphics and 3dfx Interactive, with Gary Tarolli's implementation for the SGI Indigo as the earliest known use. It is not known how the constant was originally derived, though investigation has shed some light on possible methods.

Motivation

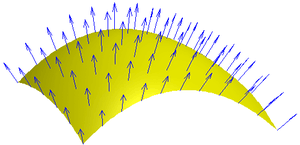

The inverse square root of a floating point number is used in calculating a normalized vector.[2] Since a 3D graphics program uses these normalized vectors to determine lighting and reflection, millions of these calculations must be done per second. Before the creation of specialized hardware to handle transform and lighting, software computations could be slow. Specifically, when the code was developed in the early 1990s, most floating point processing power lagged behind the speed of integer processing.[1]

To normalize a vector, the length of the vector is determined by calculating its Euclidean norm: the square root of the sum of squares of the vector components. When each component of the vector is divided by that length, the new vector will be a unit vector pointing in the same direction.

is the Euclidean norm of the vector, analogous to the calculation of the Euclidean distance between two points in Euclidean space.

is the Euclidean norm of the vector, analogous to the calculation of the Euclidean distance between two points in Euclidean space.

is the normalized (unit) vector. Using

is the normalized (unit) vector. Using  to represent

to represent  ,

,

, which relates the unit vector to the inverse square root of the distance components.

, which relates the unit vector to the inverse square root of the distance components.

Quake III Arena used the fast inverse square root algorithm to speed graphics processing unit computation, but the algorithm has since been implemented in some dedicated hardware vertex shaders using field-programmable gate arrays (FPGA).[3]

Overview of the code

The following code is the fast inverse square root implementation from Quake III Arena, stripped of C preprocessor directives, but including the exact original comment text:[4]

float Q_rsqrt( float number ) { long i; float x2, y; const float threehalfs = 1.5F; x2 = number * 0.5F; y = number; i = * ( long * ) &y; // evil floating point bit level hacking i = 0x5f3759df - ( i >> 1 ); // what the fuck? y = * ( float * ) &i; y = y * ( threehalfs - ( x2 * y * y ) ); // 1st iteration // y = y * ( threehalfs - ( x2 * y * y ) ); // 2nd iteration, this can be removed return y; }

In order to determine the inverse square root, an approximation for  would be determined by the software, then some numerical method would revise that approximation until it came within an acceptable error range of the actual result. Common software methods in the early 1990s drew a first approximation from a lookup table.[5] This bit of code proved faster than table lookups and approximately four times faster than regular floating point division.[6] Some loss of precision occurred, but was offset by the significant gains in performance.[7] The algorithm was designed with the IEEE 754-1985 32-bit floating point specification in mind, but investigation from Chris Lomont and later Charles McEniry showed that it could be implemented in other floating point specifications.

would be determined by the software, then some numerical method would revise that approximation until it came within an acceptable error range of the actual result. Common software methods in the early 1990s drew a first approximation from a lookup table.[5] This bit of code proved faster than table lookups and approximately four times faster than regular floating point division.[6] Some loss of precision occurred, but was offset by the significant gains in performance.[7] The algorithm was designed with the IEEE 754-1985 32-bit floating point specification in mind, but investigation from Chris Lomont and later Charles McEniry showed that it could be implemented in other floating point specifications.

The advantages in speed offered by the fast inverse square root kludge came from treating the longword[note 1] containing the floating point number as an integer then subtracting it from a specific constant, 0x5f3759df. The purpose of the constant is not immediately clear to someone viewing the code, so, like other such constants found in code, it is often called a "magic number".[1][8][9][10] This integer subtraction and bit shift results in a longword which when treated as a floating point number is a rough approximation for the inverse square root of the input number. One iteration of Newton's method is performed to gain some precision, and the code is finished. The algorithm generates reasonably accurate results using a unique first approximation for Newton's method; however, it is much slower and less accurate than using the SSE instruction rsqrtss on x86 processors also released in 1999.[11]

A worked example

As an example, consider the number x = 0.15625, for which we want to calculate 1/√x ≈ 2.52982. The first steps of the algorithm are illustrated below:

00111110001000000000000000000000 Bit pattern of both x and i 00011111000100000000000000000000 Shift right one position: (i >> 1) 01011111001101110101100111011111 The magic number 0x5f3759df 01000000001001110101100111011111 The result of 0x5f3759df - (i >> 1)

Reinterpreting this last bit pattern as a floating point number gives the approximation y = 2.61486, which has an error of about 3.4%. After the single iteration of Newton's method, the final result is y = 2.52549, in error by only 0.17%.

Working of the algorithm

The algorithm computes 1/√x by performing the following steps:

- Alias the argument x to an integer, as a way to compute an approximation of log2(x)

- use this approximation to compute an approximation of log2(1/√x)

- alias back to a float, as a way to compute an approximation of the base-2 exponential

- refine the approximation using a single iteration of the Newton's method.

Floating point representation

Since this algorithm relies heavily on the bit-level representation of single-precision floating point numbers, a short overview of this representation is provided here. In order to encode a non-zero real number x as a single precision float, the first step is to write x as a normalized binary number:

where the exponent ex is an integer, mx ∈ [0, 1), and 1.b1b2b3... is the binary representation of the “significand” (1 + mx). It should be noted that, since the single bit before the point in the significand is always 1, it need not be stored. From this form, three unsigned integers are computed:

- Sx, the “sign bit”, is 0 if x > 0, and 1 if x < 0 (1 bit)

- Ex = ex + B is the “biased exponent”, where B = 127 is the “exponent bias”[note 2] (8 bits)

- Mx = mx × L, where L = 223[note 3] (23 bits)

These fields are then packed, left to right, into a 32 bit container.

As an example, consider again the number x = 0.15625 = 0.001012. Normalizing x yields:

and thus, the three unsigned integer fields are:

- S = 0

- E = −3 + 127 = 124 = 011111002

- M = 0.25 × 223 = 2097152 = 010000000000000000000002

these fields are packed as shown in the figure below:

Aliasing to an integer as an approximate logarithm

If one had to calculate 1/√x without a computer or a calculator, a table of logarithms would be useful, together with the identity logb(1/√x) = −½ logb(x), which is valid for every base b. The fast inverse square root is based on this identity, and on the fact that aliasing a float32 to an integer gives a rough approximation of its logarithm. Here is how:

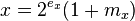

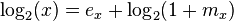

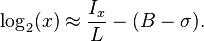

If x is a positive normal number:

then we have

but since mx ∈ [0, 1), the logarithm on the right hand side can be approximated by[12]

where σ is a free parameter used to tune the approximation. For example, σ = 0 yields exact results at both ends of the interval, while σ ≈ 0.0430357 yields the optimal approximation (the best in the sense of the uniform norm of the error).

Thus we have the approximation

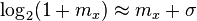

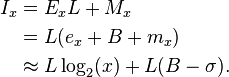

On the other hand, interpreting the bit-pattern of x as an integer yields[note 4]

It then appears that Ix is a scaled and shifted piecewise-linear approximation of log2(x), as illustrated in the figure on the right. In other words, log2(x) is approximated by

First approximation of the result

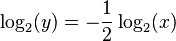

The calculation of y = 1/√x is based on the identity

Using the approximation of the logarithm above, applied to both x and y, the above equation gives:

which is written in the code as

i = 0x5f3759df - ( i >> 1 );

The first term above is the “magic number”

from which it can be inferred σ ≈ 0.0450466. The second term, ½ Ix, is calculated by shifting the bits of Ix one position to the right.[13]

Newton's method

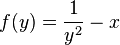

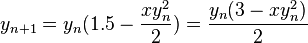

After performing those integer operations, the algorithm once again treats the longword as a floating point number (y = *(float*)&i;) and performs a floating point multiplication operation (y = y*(1.5f - xhalf*y*y);). The floating point operation represents a single iteration of Newton's method of finding roots for a given equation. For this example,

is the inverse square root, or, as a function of y,

is the inverse square root, or, as a function of y,

.

.

- As

represents a general expression of Newton's method with

represents a general expression of Newton's method with  as the first approximation,

as the first approximation,

is the particularized expression where

is the particularized expression where  and

and  .

.

- Hence

y = y*(1.5f - xhalf*y*y);is the same as

The first approximation is generated above through the integer operations and input into the last two lines of the function. Repeated iterations of the algorithm, using the output of the function ( ) as the input of the next iteration, cause the algorithm to converge on the root with increasing precision.[14] For the purposes of the Quake III engine, only one iteration was used. A second iteration remained in the code but was commented out.[10]

) as the input of the next iteration, cause the algorithm to converge on the root with increasing precision.[14] For the purposes of the Quake III engine, only one iteration was used. A second iteration remained in the code but was commented out.[10]

Accuracy

As noted above, the approximation is surprisingly accurate. The graph on the right plots the error of the function (that is, the error of the approximation after it has been improved by running one iteration of Newton's method), for inputs starting at 0.01, where the standard library gives 10.0 as a result, while InvSqrt() gives 9.982522, making the difference 0.017479, or 0.175%. The absolute error only drops from then on, while the relative error stays within the same bounds across all orders of magnitude.

History and investigation

The source code for Quake III was not released until QuakeCon 2005, but copies of the fast inverse square root code appeared on Usenet and other forums as early as 2002 or 2003.[1] Initial speculation pointed to John Carmack as the probable author of the code, but he demurred and suggested it was written by Terje Mathisen, an accomplished assembly programmer who had previously helped id Software with Quake optimization. Mathisen had written an implementation of a similar bit of code in the late 1990s, but the original authors proved to be much further back in the history of 3D computer graphics with Gary Tarolli's implementation for the SGI Indigo as a possible earliest known use. Rys Sommefeldt concluded that the original algorithm was devised by Greg Walsh at Ardent Computer in consultation with Cleve Moler, the creator of MATLAB.[15] Cleve Moler learned about this trick from code written by William Kahan and K.C. Ng at Berkeley around 1986 (see the comment section at the end of fdlibm code for sqrt[16]).[17] Jim Blinn also demonstrated a simple approximation of the inverse square root in a 1997 column for IEEE Computer Graphics and Applications.[18]

It is not known precisely how the exact value for the magic number was determined. Chris Lomont developed a function to minimize approximation error by choosing the magic number R over a range. He first computed the optimal constant for the linear approximation step as 0x5f37642f, close to 0x5f3759df, but this new constant gave slightly less accuracy after one iteration of Newton's method.[19] Lomont then searched for a constant optimal even after one and two Newton iterations and found 0x5f375a86, which is more accurate than the original at every iteration stage.[19] He concluded by asking whether the exact value of the original constant was chosen through derivation or trial and error.[20] Lomont pointed out that the "magic number" for 64 bit IEEE754 size type double is 0x5fe6ec85e7de30da, but it was later shown by Matthew Robertson to be exactly 0x5fe6eb50c7b537a9.[21] Charles McEniry performed a similar but more sophisticated optimization over likely values for R. His initial brute force search resulted in the same constant that Lomont determined.[22] When he attempted to find the constant through weighted bisection, the specific value of R used in the function occurred, leading McEniry to believe that the constant may have originally been derived through "bisecting to a given tolerance".[23]

See also

Notes

- ↑ Use of the type

longreduces the portability of this code on modern systems. For the code to execute properly,sizeof(long)must be 4 bytes, otherwise negative outputs may result. Under many modern 64-bit systems,sizeof(long)is 8 bytes. - ↑ Ex should be in the range [1, 254] for x to be representable as a normal number.

- ↑ The only real numbers that can be represented exactly as floating point are those for which Mx is an integer. Other numbers can only be represented approximately by rounding them to the nearest exactly representable number.

- ↑ Sx = 0 since x > 0.

References

- ↑ 1.0 1.1 1.2 1.3 Sommefeldt, Rys (November 29, 2006). "Origin of Quake3's Fast InvSqrt()". Beyond3D. Retrieved 2009-02-12.

- ↑ Blinn 2003, p. 130.

- ↑ Middendorf 2007, pp. 155-164.

- ↑ "quake3-1.32b/code/game/q_math.c". Quake III Arena. id Software. Retrieved 2010-11-15.

- ↑ Eberly 2001, p. 504.

- ↑ Lomont 2003, p. 1.

- ↑ McEniry 2007, p. 1.

- ↑ Lomont 2003, p. 3.

- ↑ McEniry 2007, p. 2, 16.

- ↑ 10.0 10.1 Eberly 2002, p. 2.

- ↑ Ruskin, Elan (2009-10-16). "Timing square root". Some Assembly Required. Retrieved 2010-09-13.

- ↑ McEniry 2007, p. 3.

- ↑ Hennessey & Patterson 1998, p. 305.

- ↑ McEniry 2007, p. 6.

- ↑ Sommefeldt, Rys (2006-12-19). "Origin of Quake3's Fast InvSqrt() - Part Two". Beyond3D. Retrieved 2008-04-19.

- ↑ "sqrt implementation in fdlibm".

- ↑ Moler, Cleve. "Symplectic Spacewar". MATLAB Central - Cleve's Corner. MATLAB. Retrieved 21 July 2014.

- ↑ Blinn 1997, pp. 80–84.

- ↑ 19.0 19.1 Lomont 2003, p. 10.

- ↑ Lomont 2003, pp. 10–11.

- ↑ Matthew Robertson (2012-04-24). "A Brief History of InvSqrt" (PDF). UNBSJ.

- ↑ McEniry 2007, pp. 11–12.

- ↑ McEniry 2007, p. 16.

Documents

- Blinn, Jim (July 1997). "Floating Point Tricks". Computer Graphics & Applications, IEEE 17 (4): 80. doi:10.1109/38.595279.

- Blinn, Jim (2003). Jim Blinn's Corner: Notation, notation notation. Morgan Kaufmann. ISBN 1-55860-860-5.

- Eberly, David (2001). 3D Game Engine Design. Morgan Kaufmann. ISBN 978-1-55860-593-0.

- Eberly, David (2002). "Fast Inverse Square Root" (PDF). Geometric Tools. Retrieved 2009-03-22.

- Hennessey, John; Patterson, David A. (1998). Computer Organization and Design (2nd ed.). San Francisco, CA: Morgan Kaufmann Publishers. ISBN 978-1-55860-491-9.

- Kushner, David (August 2002). "The wizardry of Id". IEEE Spectrum 39 (8): 42–47. doi:10.1109/MSPEC.2002.1021943.

- Lomont, Chris (February 2003). "Fast Inverse Square Root" (PDF). Retrieved 2009-02-13.

- McEniry, Charles (August 2007). "The Mathematics Behind the Fast Inverse Square Root Function Code" (PDF). Retrieved 2009-02-13.

- Middendorf, Lars; Mühlbauer, Felix; Umlauf, George; Bodba, Christophe (June 1, 2007). "Embedded Vertex Shader in FPGA". In Rettberg, Achin. Embedded System Design: Topics, Techniques and Trends. IFIP TC10 Working Conference:International Embedded Systems Symposium (IESS). et al. Irvine, California: Springer. ISBN 978-0-387-72257-3.

- Striegel, Jason (December 4, 2008). "Quake's fast inverse square root". Hackszine. O'Reilly Media. Archived from the original on 2009-02-15. Retrieved 2013-01-07.

External links

- A Brief History of InvSqrt by Matthew Robertson

- 0x5f3759df, further investigations into accuracy and generalizability of the algorithm by Christian Plesner Hansen

- Origin of Quake3's Fast InvSqrt()

- Quake III Arena source code

- Margolin, Tomer (27 August 2005). "Magical Square Root Implementation In Quake III". CodeMaestro. The Coding Experience.

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||

| ||||||||||||||||||||||||||||||||||||