Eigenvalue perturbation

In mathematics, an eigenvalue perturbation problem is that of finding the eigenvectors and eigenvalues of a system that is perturbed from one with known eigenvectors and eigenvalues. This is useful for studying how sensitive the original system's eigenvectors and eigenvalues are to changes in the system. This type of analysis was popularized by Lord Rayleigh in his investigation of harmonic vibrations of a string perturbed by small inhomogeneities.[1]

The derivations in this article are essentially self-contained and can be found in many texts on numerical linear algebra[2] or numerical functional analysis.

Example

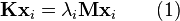

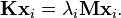

Suppose we have solutions to the generalized eigenvalue problem,

where  and

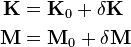

and  are matrices. That is, we know the eigenvalues λ0i and eigenvectors x0i for i = 1, ..., N. Now suppose we want to change the matrices by a small amount. That is, we want to find the eigenvalues and eigenvectors of

are matrices. That is, we know the eigenvalues λ0i and eigenvectors x0i for i = 1, ..., N. Now suppose we want to change the matrices by a small amount. That is, we want to find the eigenvalues and eigenvectors of

where

with the perturbations  and

and  much smaller than

much smaller than  and

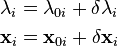

and  respectively. Then we expect the new eigenvalues and eigenvectors to be similar to the original, plus small perturbations:

respectively. Then we expect the new eigenvalues and eigenvectors to be similar to the original, plus small perturbations:
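A small numerical instance may help fix ideas. The sketch below assumes hypothetical $2 \times 2$ matrices (`K0`, `M0`, the perturbation directions `dK`, `dM` and the scale `eps` are illustrative choices, not taken from the text) and solves both the unperturbed and the perturbed problems exactly: for $2 \times 2$ matrices, $\det(\mathbf{K} - \lambda\mathbf{M}) = 0$ is a quadratic in $\lambda$.

```python
import math


def eig2_generalized(K, M):
    """Eigenvalues of K x = lam M x for symmetric 2x2 K and positive definite 2x2 M.

    det(K - lam*M) = 0 reduces to the quadratic a*lam^2 + b*lam + c = 0.
    """
    a = M[0][0] * M[1][1] - M[0][1] ** 2
    b = -(K[0][0] * M[1][1] + K[1][1] * M[0][0] - 2.0 * K[0][1] * M[0][1])
    c = K[0][0] * K[1][1] - K[0][1] ** 2
    root = math.sqrt(b * b - 4.0 * a * c)
    return sorted([(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)])


def perturb(A, dA, eps):
    """Return A + eps * dA entrywise."""
    return [[a + eps * d for a, d in zip(ra, rd)] for ra, rd in zip(A, dA)]


# Hypothetical example data (not from the text): symmetric K0, positive definite M0,
# and symmetric perturbation directions dK, dM scaled by eps.
K0 = [[2.0, 1.0], [1.0, 2.0]]
M0 = [[2.0, 0.0], [0.0, 2.0]]
dK = [[0.3, 0.1], [0.1, -0.2]]
dM = [[0.1, 0.0], [0.0, 0.2]]
eps = 1e-3

lam0 = eig2_generalized(K0, M0)  # unperturbed eigenvalues lambda_0i
lam = eig2_generalized(perturb(K0, dK, eps), perturb(M0, dM, eps))  # perturbed lambda_i
for l0, l in zip(lam0, lam):
    print(f"lambda_0i = {l0:.6f}  lambda_i = {l:.6f}  delta = {l - l0:+.3e}")
```

For small `eps` the printed changes stay small and shrink in proportion to `eps`, which is the behavior the expansion $\lambda_i = \lambda_{0i} + \delta\lambda_i$ anticipates.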

Steps

We assume that the matrices are symmetric and positive definite, and assume we have scaled the eigenvectors such that

where δij is the Kronecker delta. Now we want to solve the equation

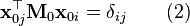
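The normalization (2) can be checked numerically. This sketch assumes hypothetical data: for $\mathbf{K}_0 = \begin{pmatrix}2&1\\1&2\end{pmatrix}$ and $\mathbf{M}_0 = 2\mathbf{I}$ the eigenvalues are 0.5 and 1.5, with eigenvector directions $(1, -1)$ and $(1, 1)$. The hypothetical helper `m_normalize` scales each direction by $1/\sqrt{\mathbf{x}^\top\mathbf{M}_0\mathbf{x}}$; afterwards the matrix of products $\mathbf{x}_{0j}^\top\mathbf{M}_0\mathbf{x}_{0i}$ should be the identity and each pair should still satisfy (1).

```python
import math


def matvec(A, x):
    """Dense matrix-vector product A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def m_normalize(x, M):
    """Scale x so that x^T M x = 1 (M symmetric positive definite)."""
    s = math.sqrt(dot(x, matvec(M, x)))
    return [v / s for v in x]


# Hypothetical example: K0 = [[2, 1], [1, 2]], M0 = 2 I has eigenvalues 0.5 and 1.5
# with eigenvector directions (1, -1) and (1, 1).
K0 = [[2.0, 1.0], [1.0, 2.0]]
M0 = [[2.0, 0.0], [0.0, 2.0]]
lam0 = [0.5, 1.5]
X0 = [m_normalize([1.0, -1.0], M0), m_normalize([1.0, 1.0], M0)]

# Normalization (2): entry [j][i] is x0j^T M0 x0i, which should equal delta_ij.
gram = [[dot(xj, matvec(M0, xi)) for xi in X0] for xj in X0]

# Equation (1): largest entry of |K0 x0i - lam0i M0 x0i| for each i.
residuals = [
    max(abs(k - l * m) for k, m in zip(matvec(K0, x), matvec(M0, x)))
    for l, x in zip(lam0, X0)
]
print("X0 =", X0)
print("gram =", gram)
print("residuals =", residuals)
```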

Substituting, we get

which expands to

Canceling from (1) leaves

Removing the higher-order terms, this simplifies to

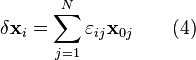
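Dropping the higher-order terms is justified because each of them is at least quadratic in the perturbation size. The sketch below tests this on hypothetical $2 \times 2$ data: it computes the exact perturbed eigenpair for perturbations `eps * dK` and `eps * dM`, forms $\delta\lambda_i$ and $\delta\mathbf{x}_i$ from it, and evaluates the residual of the linearized equation (3). Halving `eps` should cut the residual by roughly a factor of four. Matching the exact eigenvector to $\mathbf{x}_{0i}$ by scaling it to $\mathbf{x}_i^\top\mathbf{M}\mathbf{x}_i = 1$ and fixing its sign is a convention assumed here; `exact_pair` and `residual_eq3` are hypothetical helper names.

```python
import math

# Hypothetical example data; X0 holds M0-normalized eigenvectors of (K0, M0).
K0 = [[2.0, 1.0], [1.0, 2.0]]
M0 = [[2.0, 0.0], [0.0, 2.0]]
dK = [[0.3, 0.1], [0.1, -0.2]]
dM = [[0.1, 0.0], [0.0, 0.2]]
lam0 = [0.5, 1.5]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def lin(A, B, s):
    """Return A + s*B entrywise."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def exact_pair(K, M, i, ref):
    """i-th (ascending) exact eigenpair of a symmetric-definite 2x2 pencil (K, M).

    The eigenvector is scaled so that x^T M x = 1, with its sign chosen so that
    ref^T M x > 0, i.e. so that it continues the unperturbed eigenvector ref.
    """
    a = M[0][0] * M[1][1] - M[0][1] ** 2
    b = -(K[0][0] * M[1][1] + K[1][1] * M[0][0] - 2.0 * K[0][1] * M[0][1])
    c = K[0][0] * K[1][1] - K[0][1] ** 2
    root = math.sqrt(b * b - 4.0 * a * c)
    lam = sorted([(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)])[i]
    A = lin(K, M, -lam)  # singular (rank-one) matrix K - lam*M
    row = A[0] if abs(A[0][0]) + abs(A[0][1]) >= abs(A[1][0]) + abs(A[1][1]) else A[1]
    x = [-row[1], row[0]]  # orthogonal to the rows, hence a null vector
    s = math.sqrt(dot(x, matvec(M, x)))
    x = [v / s for v in x]
    return lam, (x if dot(ref, matvec(M, x)) > 0 else [-v for v in x])


def residual_eq3(eps, i=0):
    """Norm of (3)'s residual when the exact delta lambda_i, delta x_i are plugged in."""
    dKe = [[eps * v for v in row] for row in dK]
    dMe = [[eps * v for v in row] for row in dM]
    lam, x = exact_pair(lin(K0, dK, eps), lin(M0, dM, eps), i, X0[i])
    dlam = lam - lam0[i]
    dx = [p - q for p, q in zip(x, X0[i])]
    lhs = [p + q for p, q in zip(matvec(K0, dx), matvec(dKe, X0[i]))]
    rhs = [lam0[i] * p + lam0[i] * q + dlam * r
           for p, q, r in zip(matvec(M0, dx), matvec(dMe, X0[i]), matvec(M0, X0[i]))]
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lhs, rhs)))


r1, r2 = residual_eq3(1e-2), residual_eq3(5e-3)
print(f"residual at eps=1e-2: {r1:.3e}; at eps=5e-3: {r2:.3e}; ratio {r1 / r2:.2f}")
```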

When the matrix is symmetric, the unperturbed eigenvectors are orthogonal and so we use them as a basis for the perturbed eigenvectors. That is, we want to construct

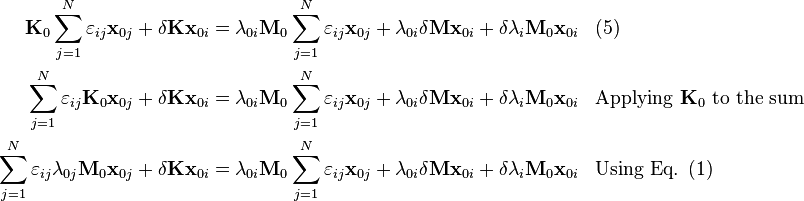

where the εij are small constants that are to be determined. Substituting (4) into (3) and rearranging gives

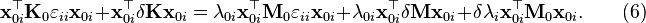
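Expansion (4) is easy to carry out because the $\mathbf{M}_0$-normalized eigenvectors are $\mathbf{M}_0$-orthonormal: the coefficient of any vector $\mathbf{v}$ along $\mathbf{x}_{0j}$ is just $\mathbf{x}_{0j}^\top\mathbf{M}_0\mathbf{v}$. The sketch below uses the hypothetical eigenvectors of $\mathbf{K}_0 = \begin{pmatrix}2&1\\1&2\end{pmatrix}$, $\mathbf{M}_0 = 2\mathbf{I}$ and an arbitrary vector `v` standing in for $\delta\mathbf{x}_i$; it recovers the coefficients and rebuilds `v` from them.

```python
def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


# Hypothetical M0-orthonormal eigenvectors of K0 = [[2, 1], [1, 2]], M0 = 2 I.
M0 = [[2.0, 0.0], [0.0, 2.0]]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def coefficients(v):
    """Coefficients c_j with v = sum_j c_j x0j, using x0j^T M0 x0k = delta_jk."""
    Mv = matvec(M0, v)
    return [dot(xj, Mv) for xj in X0]


def rebuild(coeffs):
    """Form sum_j coeffs[j] * x0j."""
    return [sum(c * xj[k] for c, xj in zip(coeffs, X0)) for k in range(len(X0[0]))]


v = [0.03, -0.07]  # arbitrary stand-in for a perturbation delta x_i
eps_coeffs = coefficients(v)
print("coefficients:", eps_coeffs)
print("rebuilt v:   ", rebuild(eps_coeffs))
```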

Because the eigenvectors are M0-orthogonal when M0 is positive definite, we can remove the summations by left multiplying by  :

:

By use of equation (1) again:

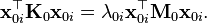

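The two terms containing $\varepsilon_{ii}$ are equal because left-multiplying (1) by $\mathbf{x}_{0i}^\top$ gives

$$\mathbf{x}_{0i}^\top\mathbf{K}_0\mathbf{x}_{0i} = \lambda_{0i}\mathbf{x}_{0i}^\top\mathbf{M}_0\mathbf{x}_{0i}.$$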

Canceling those terms in (6) leaves

Rearranging gives

But by (2), this denominator is equal to 1. Thus

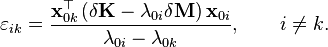

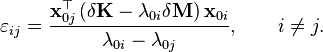
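The eigenvalue formula needs only the unperturbed eigenpair and the two perturbation matrices. The sketch below, on hypothetical $2 \times 2$ data, evaluates $\delta\lambda_i = \mathbf{x}_{0i}^\top(\delta\mathbf{K} - \lambda_{0i}\delta\mathbf{M})\mathbf{x}_{0i}$ with a hypothetical helper `delta_lambda` and compares $\lambda_{0i} + \delta\lambda_i$ with the exact eigenvalue of the perturbed problem; the discrepancy should be of order `eps` squared.

```python
import math

# Hypothetical example data; X0 holds M0-normalized eigenvectors of (K0, M0).
K0 = [[2.0, 1.0], [1.0, 2.0]]
M0 = [[2.0, 0.0], [0.0, 2.0]]
dK = [[0.3, 0.1], [0.1, -0.2]]
dM = [[0.1, 0.0], [0.0, 0.2]]
lam0 = [0.5, 1.5]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def delta_lambda(x0, l0, dK, dM):
    """First-order shift x0^T (dK - l0*dM) x0 for an M0-normalized eigenvector x0."""
    A = [[k - l0 * m for k, m in zip(rk, rm)] for rk, rm in zip(dK, dM)]
    return dot(x0, matvec(A, x0))


def eig2_generalized(K, M):
    """Exact eigenvalues (ascending) of a symmetric-definite 2x2 pencil."""
    a = M[0][0] * M[1][1] - M[0][1] ** 2
    b = -(K[0][0] * M[1][1] + K[1][1] * M[0][0] - 2.0 * K[0][1] * M[0][1])
    c = K[0][0] * K[1][1] - K[0][1] ** 2
    root = math.sqrt(b * b - 4.0 * a * c)
    return sorted([(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)])


eps = 1e-2
dKe = [[eps * v for v in row] for row in dK]  # actual perturbation delta K
dMe = [[eps * v for v in row] for row in dM]  # actual perturbation delta M
first_order = [l + delta_lambda(x, l, dKe, dMe) for l, x in zip(lam0, X0)]
K = [[k + d for k, d in zip(rk, rd)] for rk, rd in zip(K0, dKe)]
M = [[m + d for m, d in zip(rm, rd)] for rm, rd in zip(M0, dMe)]
exact = eig2_generalized(K, M)
for f, e in zip(first_order, exact):
    print(f"first order {f:.8f}   exact {e:.8f}   error {abs(f - e):.2e}")
```

Because `x0` is already $\mathbf{M}_0$-normalized, `delta_lambda` does not divide by $\mathbf{x}_{0i}^\top\mathbf{M}_0\mathbf{x}_{0i}$.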

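Then, left-multiplying equation (5) by $\mathbf{x}_{0k}^\top$ with $k \ne i$, and using (1) and (2) in the form $\mathbf{x}_{0k}^\top\mathbf{K}_0\mathbf{x}_{0j} = \lambda_{0k}\delta_{kj}$ and $\mathbf{x}_{0k}^\top\mathbf{M}_0\mathbf{x}_{0j} = \delta_{kj}$, gives $\varepsilon_{ik}\lambda_{0k} + \mathbf{x}_{0k}^\top\delta\mathbf{K}\mathbf{x}_{0i} = \lambda_{0i}\varepsilon_{ik} + \lambda_{0i}\mathbf{x}_{0k}^\top\delta\mathbf{M}\mathbf{x}_{0i}$, so that, provided $\lambda_{0i} \ne \lambda_{0k}$,

$$\varepsilon_{ik} = \frac{\mathbf{x}_{0k}^\top(\delta\mathbf{K} - \lambda_{0i}\delta\mathbf{M})\mathbf{x}_{0i}}{\lambda_{0i} - \lambda_{0k}}, \qquad i \ne k.$$

Or by changing the name of the indices:

$$\varepsilon_{ij} = \frac{\mathbf{x}_{0j}^\top(\delta\mathbf{K} - \lambda_{0i}\delta\mathbf{M})\mathbf{x}_{0i}}{\lambda_{0i} - \lambda_{0j}}, \qquad i \ne j.$$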
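The off-diagonal coefficients have the same structure, with the eigenvalue gap $\lambda_{0i} - \lambda_{0j}$ in the denominator, so the formula requires distinct unperturbed eigenvalues. A sketch on hypothetical $2 \times 2$ data follows; the perturbation matrices are left unscaled, so the results are coefficients per unit perturbation, and `epsilon_offdiag` is a hypothetical helper name.

```python
# Hypothetical example data; X0 holds M0-normalized eigenvectors of (K0, M0)
# for K0 = [[2, 1], [1, 2]] and M0 = 2 I.
dK = [[0.3, 0.1], [0.1, -0.2]]
dM = [[0.1, 0.0], [0.0, 0.2]]
zero = [[0.0, 0.0], [0.0, 0.0]]
lam0 = [0.5, 1.5]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def epsilon_offdiag(i, j, dK, dM):
    """First-order coefficient eps_ij (i != j) of x0j in the expansion (4) of delta x_i."""
    if i == j:
        raise ValueError("eps_ii comes from the normalization condition instead")
    if lam0[i] == lam0[j]:
        raise ValueError("the formula needs distinct unperturbed eigenvalues")
    A = [[k - lam0[i] * m for k, m in zip(rk, rm)] for rk, rm in zip(dK, dM)]
    return dot(X0[j], matvec(A, X0[i])) / (lam0[i] - lam0[j])


e01, e10 = epsilon_offdiag(0, 1, dK, dM), epsilon_offdiag(1, 0, dK, dM)
k01, k10 = epsilon_offdiag(0, 1, dK, zero), epsilon_offdiag(1, 0, dK, zero)  # delta M = 0
print("with delta M:   ", e01, e10)
print("without delta M:", k01, k10)
```

With $\delta\mathbf{M} = 0$ the symmetry of $\delta\mathbf{K}$ gives $\varepsilon_{ji} = -\varepsilon_{ij}$; with $\delta\mathbf{M} \ne 0$, as in the first pair, that relation generally fails.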

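To find $\varepsilon_{ii}$, require the perturbed eigenvectors to satisfy the same normalization as (2):

$$\mathbf{x}_i^\top\mathbf{M}\mathbf{x}_i = 1.$$

Expanding to first order with (2) and (4) gives $2\varepsilon_{ii} + \mathbf{x}_{0i}^\top\delta\mathbf{M}\mathbf{x}_{0i} = 0$, which implies

$$\varepsilon_{ii} = -\tfrac{1}{2}\mathbf{x}_{0i}^\top\delta\mathbf{M}\mathbf{x}_{0i}.$$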
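The value of $\varepsilon_{ii}$ is what keeps the perturbed eigenvector normalized to first order. The sketch below (hypothetical $2 \times 2$ data and hypothetical helper names) assembles $\mathbf{x}_{0i}(1 + \varepsilon_{ii}) + \sum_{j \ne i}\varepsilon_{ij}\mathbf{x}_{0j}$ and measures how far $\mathbf{x}_i^\top\mathbf{M}\mathbf{x}_i$ is from 1. The defect should fall by about a factor of four each time `eps` is halved, indicating that only second-order terms remain.

```python
# Hypothetical example data; X0 holds M0-normalized eigenvectors of (K0, M0).
M0 = [[2.0, 0.0], [0.0, 2.0]]
dK = [[0.3, 0.1], [0.1, -0.2]]
dM = [[0.1, 0.0], [0.0, 0.2]]
lam0 = [0.5, 1.5]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def first_order_vector(i, dKe, dMe):
    """x0i (1 + eps_ii) + sum_{j != i} eps_ij x0j, with eps_ii = -1/2 x0i^T dMe x0i."""
    x0 = X0[i]
    eps_ii = -0.5 * dot(x0, matvec(dMe, x0))
    A = [[k - lam0[i] * m for k, m in zip(rk, rm)] for rk, rm in zip(dKe, dMe)]
    x = [(1.0 + eps_ii) * v for v in x0]
    for j, x0j in enumerate(X0):
        if j != i:
            eps_ij = dot(x0j, matvec(A, x0)) / (lam0[i] - lam0[j])
            x = [p + eps_ij * q for p, q in zip(x, x0j)]
    return x


def normalization_defect(eps, i=0):
    """|x_i^T M x_i - 1| for the first-order eigenvector, with M = M0 + eps*dM."""
    dKe = [[eps * v for v in row] for row in dK]
    dMe = [[eps * v for v in row] for row in dM]
    M = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(M0, dMe)]
    x = first_order_vector(i, dKe, dMe)
    return abs(dot(x, matvec(M, x)) - 1.0)


d1, d2 = normalization_defect(1e-2), normalization_defect(5e-3)
print(f"defect at eps=1e-2: {d1:.3e}; at eps=5e-3: {d2:.3e}; ratio {d1 / d2:.2f}")
```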

Summary

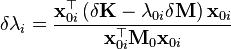

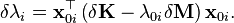

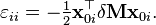

for infinitesimal δK and δM (the high order terms in (3) being negligible)
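The summary formulas for $\lambda_i$ and $\mathbf{x}_i$ can be collected into one routine and compared against an exact solution. The sketch below is a minimal illustration on hypothetical $2 \times 2$ data, not a general-purpose solver: the hypothetical `exact_pair` solves the $2 \times 2$ problem in closed form (normalized by $\mathbf{x}_i^\top\mathbf{M}\mathbf{x}_i = 1$ and sign-matched to $\mathbf{x}_{0i}$), and the hypothetical `first_order_pair` applies the summary formulas. Both errors should scale like `eps` squared.

```python
import math

# Hypothetical example data; X0 holds M0-normalized eigenvectors of (K0, M0).
K0 = [[2.0, 1.0], [1.0, 2.0]]
M0 = [[2.0, 0.0], [0.0, 2.0]]
dK = [[0.3, 0.1], [0.1, -0.2]]
dM = [[0.1, 0.0], [0.0, 0.2]]
lam0 = [0.5, 1.5]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def lin(A, B, s):
    """Return A + s*B entrywise."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def exact_pair(K, M, i, ref):
    """i-th (ascending) exact eigenpair of a symmetric-definite 2x2 pencil, x^T M x = 1."""
    a = M[0][0] * M[1][1] - M[0][1] ** 2
    b = -(K[0][0] * M[1][1] + K[1][1] * M[0][0] - 2.0 * K[0][1] * M[0][1])
    c = K[0][0] * K[1][1] - K[0][1] ** 2
    root = math.sqrt(b * b - 4.0 * a * c)
    lam = sorted([(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)])[i]
    A = lin(K, M, -lam)  # singular (rank-one) matrix K - lam*M
    row = A[0] if abs(A[0][0]) + abs(A[0][1]) >= abs(A[1][0]) + abs(A[1][1]) else A[1]
    x = [-row[1], row[0]]  # null vector of the rank-one matrix
    s = math.sqrt(dot(x, matvec(M, x)))
    x = [v / s for v in x]
    return lam, (x if dot(ref, matvec(M, x)) > 0 else [-v for v in x])


def first_order_pair(i, dKe, dMe):
    """Summary formulas for lambda_i and x_i; dKe and dMe are the actual perturbations."""
    x0, l0 = X0[i], lam0[i]
    A = lin(dKe, dMe, -l0)  # delta K - lambda_0i * delta M
    lam = l0 + dot(x0, matvec(A, x0))
    x = [(1.0 - 0.5 * dot(x0, matvec(dMe, x0))) * v for v in x0]
    for j, x0j in enumerate(X0):
        if j != i:
            coef = dot(x0j, matvec(A, x0)) / (l0 - lam0[j])
            x = [p + coef * q for p, q in zip(x, x0j)]
    return lam, x


def errors(eps, i):
    """Eigenvalue and eigenvector errors of the first-order pair at perturbation size eps."""
    dKe = [[eps * v for v in row] for row in dK]
    dMe = [[eps * v for v in row] for row in dM]
    lam_ex, x_ex = exact_pair(lin(K0, dKe, 1.0), lin(M0, dMe, 1.0), i, X0[i])
    lam_fo, x_fo = first_order_pair(i, dKe, dMe)
    return abs(lam_ex - lam_fo), max(abs(p - q) for p, q in zip(x_ex, x_fo))


for i in range(2):
    for eps in (1e-2, 5e-3):
        el, ex = errors(eps, i)
        print(f"i={i} eps={eps:.0e}: eigenvalue error {el:.2e}, eigenvector error {ex:.2e}")
```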

Results

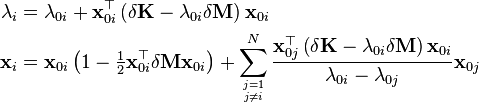

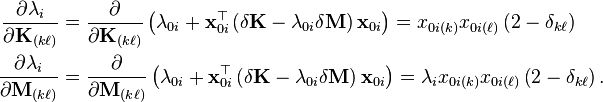

This means it is possible to efficiently do a sensitivity analysis on λi as a function of changes in the entries of the matrices. (Recall that the matrices are symmetric and so changing Kkℓ will also change Kℓk, hence the (2 − δkℓ) term.)
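The eigenvalue sensitivities $x_{0i(k)}x_{0i(\ell)}(2 - \delta_{k\ell})$ and $-\lambda_{0i}x_{0i(k)}x_{0i(\ell)}(2 - \delta_{k\ell})$ can be checked against central finite differences. The sketch below (hypothetical $2 \times 2$ data; `sym_unit` and the step `h` are illustrative choices) perturbs the symmetric pair of entries $(k, \ell)$ and $(\ell, k)$ together by $\pm h$, as the $(2 - \delta_{k\ell})$ factor assumes, and compares the derivative of the exact eigenvalue with the formulas.

```python
import math

# Hypothetical example data; X0 holds M0-normalized eigenvectors of (K0, M0).
K0 = [[2.0, 1.0], [1.0, 2.0]]
M0 = [[2.0, 0.0], [0.0, 2.0]]
lam0 = [0.5, 1.5]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def eig2_generalized(K, M):
    """Exact eigenvalues (ascending) of K x = lam M x for a symmetric-definite 2x2 pencil."""
    a = M[0][0] * M[1][1] - M[0][1] ** 2
    b = -(K[0][0] * M[1][1] + K[1][1] * M[0][0] - 2.0 * K[0][1] * M[0][1])
    c = K[0][0] * K[1][1] - K[0][1] ** 2
    root = math.sqrt(b * b - 4.0 * a * c)
    return sorted([(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)])


def lin(A, B, s):
    """Return A + s*B entrywise."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sym_unit(k, ell):
    """Symmetric direction that changes entries (k, ell) and (ell, k) together."""
    E = [[0.0, 0.0], [0.0, 0.0]]
    E[k][ell] = 1.0
    E[ell][k] = 1.0
    return E


h = 1e-6
max_err = 0.0
for i in range(2):
    x = X0[i]
    for k in range(2):
        for ell in range(2):
            E = sym_unit(k, ell)
            factor = 2.0 - (1.0 if k == ell else 0.0)  # the (2 - delta_kl) term
            fd_K = (eig2_generalized(lin(K0, E, h), M0)[i]
                    - eig2_generalized(lin(K0, E, -h), M0)[i]) / (2.0 * h)
            fd_M = (eig2_generalized(K0, lin(M0, E, h))[i]
                    - eig2_generalized(K0, lin(M0, E, -h))[i]) / (2.0 * h)
            max_err = max(max_err,
                          abs(fd_K - x[k] * x[ell] * factor),
                          abs(fd_M + lam0[i] * x[k] * x[ell] * factor))
print(f"largest mismatch with finite differences: {max_err:.1e}")
```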

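Similarly

$$\frac{\partial\mathbf{x}_i}{\partial\mathbf{K}_{(k\ell)}} = \sum_{j=1,\, j \ne i}^N \frac{(2 - \delta_{k\ell})\left(x_{0j(k)}\,x_{0i(\ell)} + x_{0j(\ell)}\,x_{0i(k)}\right)}{2(\lambda_{0i} - \lambda_{0j})}\,\mathbf{x}_{0j}$$

$$\frac{\partial\mathbf{x}_i}{\partial\mathbf{M}_{(k\ell)}} = -\frac{2 - \delta_{k\ell}}{2}\,x_{0i(k)}\,x_{0i(\ell)}\,\mathbf{x}_{0i} - \sum_{j=1,\, j \ne i}^N \frac{\lambda_{0i}(2 - \delta_{k\ell})\left(x_{0j(k)}\,x_{0i(\ell)} + x_{0j(\ell)}\,x_{0i(k)}\right)}{2(\lambda_{0i} - \lambda_{0j})}\,\mathbf{x}_{0j}$$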
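The eigenvector sensitivities can be checked the same way. In the sketch below (hypothetical $2 \times 2$ data), the hypothetical helpers `dx_dK` and `dx_dM` differentiate the summary formula for $\mathbf{x}_i$ with respect to a symmetric change of $\mathbf{K}_{(k\ell)}$ and $\mathbf{M}_{(k\ell)}$, and each result is compared with a central finite difference of the exact eigenvector, normalized by $\mathbf{x}_i^\top\mathbf{M}\mathbf{x}_i = 1$ and sign-matched to $\mathbf{x}_{0i}$ as in the derivation.

```python
import math

# Hypothetical example data; X0 holds M0-normalized eigenvectors of (K0, M0).
K0 = [[2.0, 1.0], [1.0, 2.0]]
M0 = [[2.0, 0.0], [0.0, 2.0]]
lam0 = [0.5, 1.5]
X0 = [[0.5, -0.5], [0.5, 0.5]]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def lin(A, B, s):
    """Return A + s*B entrywise."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sym_unit(k, ell):
    """Symmetric direction that changes entries (k, ell) and (ell, k) together."""
    E = [[0.0, 0.0], [0.0, 0.0]]
    E[k][ell] = 1.0
    E[ell][k] = 1.0
    return E


def exact_pair(K, M, i, ref):
    """i-th (ascending) exact eigenpair of a symmetric-definite 2x2 pencil, x^T M x = 1."""
    a = M[0][0] * M[1][1] - M[0][1] ** 2
    b = -(K[0][0] * M[1][1] + K[1][1] * M[0][0] - 2.0 * K[0][1] * M[0][1])
    c = K[0][0] * K[1][1] - K[0][1] ** 2
    root = math.sqrt(b * b - 4.0 * a * c)
    lam = sorted([(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)])[i]
    A = lin(K, M, -lam)
    row = A[0] if abs(A[0][0]) + abs(A[0][1]) >= abs(A[1][0]) + abs(A[1][1]) else A[1]
    x = [-row[1], row[0]]
    s = math.sqrt(dot(x, matvec(M, x)))
    x = [v / s for v in x]
    return lam, (x if dot(ref, matvec(M, x)) > 0 else [-v for v in x])


def dx_dK(i, k, ell):
    """First-order d x_i / d K_(k ell) for a symmetric change of K."""
    factor = 2.0 - (1.0 if k == ell else 0.0)
    x0i, out = X0[i], [0.0, 0.0]
    for j, x0j in enumerate(X0):
        if j != i:
            c = factor * (x0j[k] * x0i[ell] + x0j[ell] * x0i[k]) / (2.0 * (lam0[i] - lam0[j]))
            out = [p + c * q for p, q in zip(out, x0j)]
    return out


def dx_dM(i, k, ell):
    """First-order d x_i / d M_(k ell) for a symmetric change of M."""
    factor = 2.0 - (1.0 if k == ell else 0.0)
    x0i = X0[i]
    out = [-0.5 * factor * x0i[k] * x0i[ell] * v for v in x0i]  # eps_ii contribution
    for j, x0j in enumerate(X0):
        if j != i:
            c = -lam0[i] * factor * (x0j[k] * x0i[ell] + x0j[ell] * x0i[k]) / (2.0 * (lam0[i] - lam0[j]))
            out = [p + c * q for p, q in zip(out, x0j)]
    return out


h = 1e-6
max_err = 0.0
for i in range(2):
    for k in range(2):
        for ell in range(2):
            E = sym_unit(k, ell)
            # Central differences of the exact eigenvector, first in K, then in M.
            xp = exact_pair(lin(K0, E, h), M0, i, X0[i])[1]
            xm = exact_pair(lin(K0, E, -h), M0, i, X0[i])[1]
            fd_K = [(p - q) / (2.0 * h) for p, q in zip(xp, xm)]
            xp = exact_pair(K0, lin(M0, E, h), i, X0[i])[1]
            xm = exact_pair(K0, lin(M0, E, -h), i, X0[i])[1]
            fd_M = [(p - q) / (2.0 * h) for p, q in zip(xp, xm)]
            max_err = max(max_err,
                          max(abs(p - q) for p, q in zip(fd_K, dx_dK(i, k, ell))),
                          max(abs(p - q) for p, q in zip(fd_M, dx_dM(i, k, ell))))
print(f"largest mismatch with finite differences: {max_err:.1e}")
```

Both index orders $x_{0j(k)}x_{0i(\ell)}$ and $x_{0j(\ell)}x_{0i(k)}$ appear because a symmetric change of entry $(k, \ell)$ also changes entry $(\ell, k)$; for $k = \ell$ the factor $(2 - \delta_{k\ell})/2$ removes the double count.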

Existence of eigenvectors

Note that in the above example we assumed that both the unperturbed and the perturbed systems involved symmetric matrices, which guaranteed the existence of $N$ linearly independent eigenvectors. An eigenvalue problem involving non-symmetric matrices is not guaranteed to have $N$ linearly independent eigenvectors, though a sufficient condition is that $\mathbf{K}$ and $\mathbf{M}$ be simultaneously diagonalisable.
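A standard example shows why a full set of eigenvectors matters. The Jordan block $\begin{pmatrix}1&1\\0&1\end{pmatrix}$ has only one eigenvector direction, $(1, 0)$. Perturbing its lower-left entry by a small amount $\epsilon$ (`eps` in the code, an illustrative choice) moves the eigenvalues to $1 \pm \sqrt{\epsilon}$, so the change is proportional to $\sqrt{\epsilon}$ rather than to $\epsilon$, and no first-order expansion of the kind derived above exists. The sketch uses the ordinary eigenvalue problem ($\mathbf{M} = \mathbf{I}$) and the closed-form roots of the $2 \times 2$ characteristic polynomial; `eig2` is a hypothetical helper name.

```python
import cmath
import math


def eig2(A):
    """Eigenvalues of a real 2x2 matrix from its characteristic polynomial."""
    half_trace = (A[0][0] + A[1][1]) / 2.0
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    root = cmath.sqrt(half_trace * half_trace - det)  # complex sqrt covers eps < 0 too
    return half_trace - root, half_trace + root


splits = {}
for eps in (1e-2, 1e-4, 1e-6):
    lo, hi = eig2([[1.0, 1.0], [eps, 1.0]])  # perturbed Jordan block
    splits[eps] = abs(hi - lo)
    print(f"eps = {eps:.0e}: split = {splits[eps]:.3e}, 2*sqrt(eps) = {2.0 * math.sqrt(eps):.3e}")
```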


References

- [1] Rayleigh, J. W. S. (1894). Theory of Sound I (2nd ed.). London: Macmillan. pp. 115–118. ISBN 1-152-06023-6.

- [2] Trefethen, Lloyd N. (1997). Numerical Linear Algebra. SIAM (Philadelphia, PA). p. 258. ISBN 0-89871-361-7.

Further reading

- Li, Ren-Cang (2014). "Matrix Perturbation Theory". In Hogben, Leslie (ed.). Handbook of Linear Algebra (2nd ed.). ISBN 1466507284.

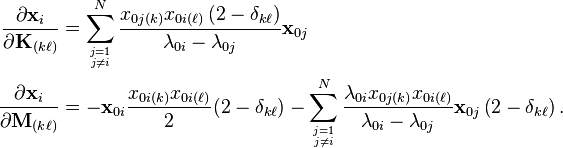

![\begin{align}

\mathbf{K}_0\mathbf{x}_{0i} &+ \delta \mathbf{K}\mathbf{x}_{0i} + \mathbf{K}_0\delta \mathbf{x}_i + \delta \mathbf{K}\delta \mathbf{x}_i = \\[6pt]

&=\lambda_{0i}\mathbf{M}_0\mathbf{x}_{0i}+\lambda_{0i}\mathbf{M}_0\delta\mathbf{x}_i + \lambda_{0i} \delta \mathbf{M} \mathbf{x}_{0i} +\delta\lambda_i\mathbf{M}_0\mathbf{x}_{0i} + \lambda_{0i} \delta \mathbf{M} \delta\mathbf{x}_i + \delta\lambda_i \delta \mathbf{M}\mathbf{x}_{0i} + \delta\lambda_i\mathbf{M}_0\delta\mathbf{x}_i + \delta\lambda_i \delta \mathbf{M} \delta\mathbf{x}_i.

\end{align}](../I/m/47c9c7ef6e65d5e1c4ca9b5956d61e75.png)