Duality gap

In optimization problems in applied mathematics, the duality gap is the difference between the primal and dual solutions. If  is the optimal dual value and

is the optimal dual value and  is the optimal primal value then the duality gap is equal to

is the optimal primal value then the duality gap is equal to  . This value is always greater than or equal to 0. The duality gap is zero if and only if strong duality holds. Otherwise the gap is strictly positive and weak duality holds.[1]

. This value is always greater than or equal to 0. The duality gap is zero if and only if strong duality holds. Otherwise the gap is strictly positive and weak duality holds.[1]

In general given two dual pairs separated locally convex spaces  and

and  . Then given the function

. Then given the function  , we can define the primal problem by

, we can define the primal problem by

If there are constraint conditions, these can be built into the function  by letting

by letting  where

where  is the indicator function. Then let

is the indicator function. Then let  be a perturbation function such that

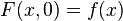

be a perturbation function such that  . The duality gap is the difference given by

. The duality gap is the difference given by

where  is the convex conjugate in both variables.[2][3][4]

is the convex conjugate in both variables.[2][3][4]

In computational optimization, another "duality gap" is often reported, which is the difference in value between any dual solution and the value of a feasible but suboptimal iterate for the primal problem. This alternative "duality gap" quantifies the discrepancy between the value of a current feasible but suboptimal iterate for the primal problem and the value of the dual problem; the value of the dual problem is, under regularity conditions, equal to the value of the convex relaxation of the primal problem: The convex relaxation is the problem arising replacing a non-convex feasible set with its closed convex hull and with replacing a non-convex function with its convex closure, that is the function that has the epigraph that is the closed convex hull of the original primal objective function.[5] [6] [7] [8] [9] [10] [11] [12][13]

References

- ↑ Borwein, Jonathan; Zhu, Qiji (2005). Techniques of Variational Analysis. Springer. ISBN 978-1-4419-2026-3.

- ↑ Radu Ioan Boţ; Gert Wanka; Sorin-Mihai Grad (2009). Duality in Vector Optimization. Springer. ISBN 978-3-642-02885-4.

- ↑ Ernö Robert Csetnek (2010). Overcoming the failure of the classical generalized interior-point regularity conditions in convex optimization. Applications of the duality theory to enlargements of maximal monotone operators. Logos Verlag Berlin GmbH. ISBN 978-3-8325-2503-3.

- ↑ Zălinescu, C. (2002). Convex analysis in general vector spaces. River Edge, NJ: World Scientific Publishing Co. Inc. pp. 106–113. ISBN 981-238-067-1. MR 1921556.

- ↑ Ahuja, Ravindra K.; Magnanti, Thomas L.; and Orlin, James B. (1993). Network Flows: Theory, Algorithms and Applications. Prentice Hall. ISBN 0-13-617549-X.

- ↑ Bertsekas, Dimitri P. (1999). Nonlinear Programming (2nd ed.). Athena Scientific. ISBN 1-886529-00-0.

- ↑ Bonnans, J. Frédéric; Gilbert, J. Charles; Lemaréchal, Claude; Sagastizábal, Claudia A. (2006). Numerical optimization: Theoretical and practical aspects. Universitext (Second revised ed. of translation of 1997 French ed.). Berlin: Springer-Verlag. pp. xiv+490. doi:10.1007/978-3-540-35447-5. ISBN 3-540-35445-X. MR 2265882.

- ↑ Hiriart-Urruty, Jean-Baptiste; Lemaréchal, Claude (1993). Convex analysis and minimization algorithms, Volume I: Fundamentals. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences] 305. Berlin: Springer-Verlag. pp. xviii+417. ISBN 3-540-56850-6. MR 1261420.

- ↑ Hiriart-Urruty, Jean-Baptiste; Lemaréchal, Claude (1993). "14 Duality for Practitioners". Convex analysis and minimization algorithms, Volume II: Advanced theory and bundle methods. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences] 306. Berlin: Springer-Verlag. pp. xviii+346. ISBN 3-540-56852-2. MR 1295240.

- ↑ Lasdon, Leon S. (2002) [Reprint of the 1970 Macmillan]. Optimization theory for large systems. Mineola, New York: Dover Publications, Inc. pp. xiii+523. ISBN 978-0-486-41999-2. MR 1888251.

- ↑ Lemaréchal, Claude (2001). "Lagrangian relaxation". In Jünger, Michael; and Naddef, Denis. Computational combinatorial optimization: Papers from the Spring School held in Schloß Dagstuhl, May 15–19, 2000. Lecture Notes in Computer Science (LNCS) 2241. Berlin: Springer-Verlag. pp. 112–156. doi:10.1007/3-540-45586-8_4. ISBN 3-540-42877-1. MR 1900016.

- ↑ Minoux, Michel (1986). Mathematical programming: Theory and algorithms. Egon Balas (forward); Steven Vajda (trans) from the (1983 Paris: Dunod) French. Chichester: A Wiley-Interscience Publication. John Wiley & Sons, Ltd. pp. xxviii+489. ISBN 0-471-90170-9. MR 868279. (2008 Second ed., in French: Programmation mathématique : Théorie et algorithmes, Éditions Tec & Doc, Paris, 2008. xxx+711 pp. ISBN 978-2-7430-1000-3. MR 2571910).

- ↑ Shapiro, Jeremy F. (1979). Mathematical programming: Structures and algorithms. New York: Wiley-Interscience [John Wiley & Sons]. pp. xvi+388. ISBN 0-471-77886-9. MR 544669.

![\inf_{x \in X} [F(x,0)] - \sup_{y^* \in Y^*} [-F^*(0,y^*)]](../I/m/881ac616d971dbc446b645e325a5cd62.png)