Doob martingale

A Doob martingale (also known as a Lévy martingale) is a mathematical construction of a stochastic process which approximates a given random variable and has the martingale property with respect to the given filtration. It may be thought of as the evolving sequence of best approximations to the random variable based on information accumulated up to a certain time.

When analyzing sums, random walks, or other additive functions of independent random variables, one can often apply the central limit theorem, law of large numbers, Chernoff's inequality, Chebyshev's inequality or similar tools. When analyzing similar objects where the differences are not independent, the main tools are martingales and Azuma's inequality.

Definition

A Doob martingale (named after Joseph L. Doob)[1] is a generic construction that is always a martingale. Specifically, consider any set of random variables

$$\vec{X} = (X_1, X_2, \dots, X_n)$$

taking values in a set $A$, for which we are interested in the function $f : A^n \to \mathbb{R}$, and define

$$B_i = E_{X_{i+1}, X_{i+2}, \dots, X_n}\left[ f(\vec{X}) \mid X_1, X_2, \dots, X_i \right], \qquad 0 \le i \le n,$$

where the above expectation is itself a random quantity, since the expectation is only taken over $X_{i+1}, X_{i+2}, \dots, X_n$, while $X_1, X_2, \dots, X_i$ are treated as random variables. It is possible to show that $B_i$ is always a martingale regardless of the properties of the $X_i$: provided $f(\vec{X})$ has finite expectation, the tower property of conditional expectation gives $E[B_{i+1} \mid X_1, \dots, X_i] = B_i$.

The sequence $B_0, B_1, \dots, B_n$ is the Doob martingale for $f$.[2]
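As a concrete illustration, the following minimal sketch computes a Doob martingale by brute-force enumeration. It assumes, purely for illustration, that the variables are independent fair bits, so that conditioning on a prefix reduces to averaging over all completions, and it takes f to be the length of the longest run of 1s. The names `doob_martingale`, `longest_run` and `is_martingale` are hypothetical helpers, not part of any library.

```python
import math
from itertools import product


def doob_martingale(f, n, prefix):
    """Value of B_i at the observed prefix (x_1, ..., x_i), where i = len(prefix).

    Assumption: X_1, ..., X_n are independent fair bits, so the conditional
    expectation is a plain average over the 2**(n - i) possible completions.
    """
    completions = list(product((0, 1), repeat=n - len(prefix)))
    return sum(f(tuple(prefix) + c) for c in completions) / len(completions)


def longest_run(x):
    """Example f: length of the longest run of consecutive 1s in x."""
    best = cur = 0
    for bit in x:
        cur = cur + 1 if bit else 0
        best = max(best, cur)
    return best


def is_martingale(f, n):
    """Check E[B_{i+1} | prefix] == B_i for every prefix of length i < n."""
    for i in range(n):
        for prefix in product((0, 1), repeat=i):
            here = doob_martingale(f, n, prefix)
            # The next bit is 0 or 1 with probability 1/2 each.
            nxt = 0.5 * (doob_martingale(f, n, prefix + (0,))
                         + doob_martingale(f, n, prefix + (1,)))
            if not math.isclose(here, nxt):
                return False
    return True


n = 4
x = (1, 1, 0, 1)  # one observed outcome
# B_0, B_1, ..., B_n along this outcome: starts at E[f], ends at f(x).
path = [doob_martingale(longest_run, n, x[:i]) for i in range(n + 1)]
print(path)
print(is_martingale(longest_run, n))
```

The first entry of `path` is the unconditional expectation of f and the last entry is f evaluated at the observed outcome, which matches the picture of successively better approximations as more coordinates are revealed. Enumeration is exponential in n, so this is only meant for very small examples.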

Application

Thus if one can bound the differences

$$|B_i - B_{i-1}|, \qquad 1 \le i \le n,$$

one can apply Azuma's inequality and show that with high probability $f(\vec{X}) = B_n$ is concentrated around its expected value

$$E[f(\vec{X})] = B_0.$$
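For reference, one standard form of Azuma's inequality (often called the Azuma–Hoeffding inequality) can be written as below. Here $B_0, B_1, \dots, B_n$ denotes the Doob martingale, and the constants $d_i$ are notation introduced only for this statement.

```latex
% Assumption: d_1, ..., d_n are constants bounding the martingale differences.
|B_i - B_{i-1}| \le d_i \quad (1 \le i \le n)
\;\Longrightarrow\;
\Pr\{ B_n - B_0 \ge \varepsilon \}
\le \exp\left( - \frac{\varepsilon^2}{2 \sum_{i=1}^n d_i^2} \right)
\quad \text{for every } \varepsilon > 0 .
```

Since $B_n$ equals the function of interest and $B_0$ equals its expectation, this is an upper-tail bound for the function itself; applying the same statement to the negated martingale gives the matching lower-tail bound.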

McDiarmid's inequality

One common way of bounding the differences and applying Azuma's inequality to a Doob martingale is called McDiarmid's inequality.[3]

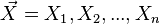

Suppose  are independent and assume that

are independent and assume that

satisfies

satisfies

(In other words, replacing the  -th coordinate

-th coordinate  by some other value changes the value of

by some other value changes the value of  by at most

by at most  .)

.)

It follows that

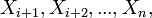

and therefore Azuma's inequality yields the following McDiarmid inequalities for any  :

:

and

and
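To make the bounded-differences condition concrete, here is a small simulation sketch comparing the two-sided McDiarmid bound with an empirical tail frequency. The example function is an assumption chosen for illustration: the number of empty bins after throwing n balls independently and uniformly into m bins. Moving a single ball changes that count by at most 1, so every bounded-difference constant can be taken to be 1. The helper names `mcdiarmid_two_sided`, `empty_bins` and `empirical_tail` are hypothetical.

```python
import math
import random


def mcdiarmid_two_sided(eps, c):
    """Two-sided McDiarmid bound 2 * exp(-2 eps^2 / sum_i c_i^2)."""
    return 2.0 * math.exp(-2.0 * eps ** 2 / sum(ci ** 2 for ci in c))


def empty_bins(balls, m):
    """f(x_1, ..., x_n): number of the m bins that receive no ball."""
    return m - len(set(balls))


def empirical_tail(n, m, eps, trials, rng):
    """Monte Carlo estimate of Pr{|f - E f| >= eps} for n balls in m bins."""
    mean = m * (1.0 - 1.0 / m) ** n  # exact expected number of empty bins
    hits = 0
    for _ in range(trials):
        balls = [rng.randrange(m) for _ in range(n)]
        if abs(empty_bins(balls, m) - mean) >= eps:
            hits += 1
    return hits / trials


rng = random.Random(0)  # fixed seed so repeated runs give the same numbers
n = m = 50
c = [1] * n  # moving one ball changes the empty-bin count by at most 1
for eps in (5, 10):
    print(eps, empirical_tail(n, m, eps, 2000, rng), mcdiarmid_two_sided(eps, c))
```

In this setting the empirical frequency should sit well below the bound, since McDiarmid's inequality uses only the worst-case effect of each coordinate and ignores the actual variance of the function.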

See also

- Markov inequality

- Chebyshev's inequality

- Bernstein inequalities (probability theory)

- Dvoretzky–Kiefer–Wolfowitz inequality

Notes

- [1] J. L. Doob, Transactions of the American Mathematical Society 47 (1940), 486, Theorem 3.7

- [2] Anupam Gupta (2011). Lecture notes. http://www.cs.cmu.edu/~avrim/Randalgs11/lectures/lect0321.pdf

- [3] McDiarmid, Colin (1989). "On the Method of Bounded Differences". Surveys in Combinatorics 141: 148–188.

References

- McDiarmid, Colin (1989). "On the Method of Bounded Differences". Surveys in Combinatorics 141: 148–188.
