Document mosaicing

Document mosaicing is a process that stitches multiple overlapping snapshot images of a document together to produce one large, high-resolution composite. The document is slid by hand under a stationary, over-the-desk camera until every part of it has passed through the camera’s field of view. As the document slides under the camera, its motion is coarsely tracked by the vision system. Snapshots are taken periodically so that successive snapshots overlap by about 50%. The system then finds the overlapping pairs and stitches them together repeatedly until all of them have been merged into a single image of the document.[1]

Document mosaicing can be divided into four main processes:

- Tracking

- Feature detection

- Establishing correspondences

- Image mosaicing

Tracking (simple correlation process)

In this process, the motion of the document as it slides under the camera is coarsely tracked by the system. Tracking is performed by a simple correlation process. In the first frame, a small patch is extracted from the center of the image as a correlation template, as shown in Figure 1. The correlation is then computed over a search region of the next frame that is four times the area of the patch. The peak in the correlation function indicates the motion of the paper. The template is resampled from this frame, and tracking continues until the template reaches the edge of the document. At that point another snapshot is taken, and the tracking process repeats until the whole document has been imaged. The snapshots are stored in an ordered list so that overlapping images can be paired in later processes.
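The tracking step can be illustrated with a minimal sketch. It assumes greyscale frames stored as nested lists and uses zero-mean normalised cross-correlation as the correlation measure; the function names, the `radius` search parameter and the synthetic frames are illustrative assumptions, not details taken from the cited system.

```python
import math

def ncc(template, frame, top, left):
    """Zero-mean normalised cross-correlation of the template with a frame patch."""
    h, w = len(template), len(template[0])
    t = [template[r][c] for r in range(h) for c in range(w)]
    p = [frame[top + r][left + c] for r in range(h) for c in range(w)]
    mt, mp = sum(t) / len(t), sum(p) / len(p)
    num = sum((a - mt) * (b - mp) for a, b in zip(t, p))
    den = math.sqrt(sum((a - mt) ** 2 for a in t) * sum((b - mp) ** 2 for b in p))
    return num / den if den else 0.0

def track(template, frame, prev_top, prev_left, radius):
    """Return (dy, dx), the shift at which the correlation peaks.

    Only positions within `radius` pixels of the previous location are searched.
    """
    h, w = len(template), len(template[0])
    best = (-2.0, 0, 0)
    for top in range(max(0, prev_top - radius), min(len(frame) - h, prev_top + radius) + 1):
        for left in range(max(0, prev_left - radius), min(len(frame[0]) - w, prev_left + radius) + 1):
            score = ncc(template, frame, top, left)
            if score > best[0]:
                best = (score, top - prev_top, left - prev_left)
    return best[1], best[2]

def make_frame(top, left, size=10):
    """Synthetic frame: blank background with one distinctive 3x3 patch."""
    frame = [[0] * size for _ in range(size)]
    for r in range(3):
        for c in range(3):
            frame[top + r][left + c] = 3 * r + c + 1
    return frame

frame0 = make_frame(3, 3)                      # patch at the image center
frame1 = make_frame(4, 5)                      # same patch after the paper moved
template = [row[3:6] for row in frame0[3:6]]   # template cut from the first frame
shift = track(template, frame1, 3, 3, radius=3)
```

Here `shift` holds the row and column displacement of the template between the two frames. A real system would resample the template from each new frame, as described above.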

Feature detection for efficient matching

Feature detection is the process of finding the transformation which aligns one image with another. There are two main approaches for feature detection.[2][3]

- Feature-based approach: Motion parameters are estimated from point correspondences. This approach is suitable when there is a plentiful supply of stable, detectable features.

- Featureless approach: When the motion between the two images is small, the motion parameters are estimated using optical flow. When the motion between the two images is large, they are estimated using generalised cross-correlation. However, this approach is computationally expensive.

Each image is segmented into a hierarchy of columns, lines and words so that organised sets of features can be matched across images. Skew angle estimation and the finding of columns, lines and words are examples of feature detection operations.

Skew angle estimation

First, the angle that the rows of text make with the image raster lines (the skew angle) is estimated. It is assumed to lie in the range ±20°. A small patch of text in the image is selected at random and rotated through the range ±20° until the variance of the pixel intensities of the patch, summed along the raster lines, is maximised.[4] See Figure 2.

To ensure that the estimated skew angle is accurate, the document mosaicing system performs this calculation on many image patches and derives the final estimate as the average of the individual angles, weighted by the variance of the pixel intensities of each patch.
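The following sketch illustrates the idea under simplifying assumptions. Each patch is represented as a list of (x, y) ink-pixel coordinates. Instead of resampling a rotated patch, the pixels are projected along tilted lines, which gives the same row sums. The 1° search step, the function names and the synthetic patches are illustrative choices rather than details from the cited work.

```python
import math

def profile_variance(ink, angle_deg):
    """Variance of the row sums when ink pixels are projected along lines
    tilted by angle_deg (equivalent to de-rotating the patch, then summing rows)."""
    a = math.radians(angle_deg)
    sums = {}
    for x, y in ink:
        row = round(y * math.cos(a) - x * math.sin(a))
        sums[row] = sums.get(row, 0) + 1
    counts = [sums.get(r, 0) for r in range(min(sums), max(sums) + 1)]
    mean = sum(counts) / len(counts)
    return sum((c - mean) ** 2 for c in counts) / len(counts)

def estimate_patch_skew(ink, max_angle=20):
    """Return (angle, variance) for the candidate angle with the largest variance."""
    candidates = range(-max_angle, max_angle + 1)   # 1 degree steps (assumed)
    return max(((a, profile_variance(ink, a)) for a in candidates),
               key=lambda pair: pair[1])

def combine(estimates):
    """Average of the per-patch angles, weighted by each patch's variance."""
    total = sum(v for _, v in estimates)
    return sum(a * v for a, v in estimates) / total

def synthetic_patch(angle_deg, baselines, width=200):
    """Ink pixels of straight text lines drawn at the given skew (test data only)."""
    slope = math.tan(math.radians(angle_deg))
    return [(x, round(y0 + x * slope)) for y0 in baselines for x in range(width)]

# Both synthetic patches were generated with a 5 degree skew.
patches = [synthetic_patch(5, [0, 20, 40]), synthetic_patch(5, [0, 15, 30, 45])]
estimates = [estimate_patch_skew(p) for p in patches]
skew = combine(estimates)
```

Weighting by variance gives patches with a sharp projection profile, typically those containing several clean text lines, more influence on the final estimate.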

Columns, lines and words finding

In this operation, the de-skewed document is segmented into a hierarchy of columns, lines and words. Sensitivity to illumination and page coloration can be removed by applying a Sobel operator to the de-skewed image and thresholding the output to obtain a binary gradient, de-skewed image.[5] See Figure 3.
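As an illustration of why the gradient image is insensitive to overall brightness, the sketch below applies the standard 3×3 Sobel kernels and a fixed threshold to a small greyscale image. The threshold value, the function name and the test image are assumptions made for the example.

```python
def sobel_binary(image, threshold):
    """Binary gradient image: 1 where the Sobel gradient magnitude exceeds threshold."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):          # border pixels are left at 0
        for c in range(1, w - 1):
            gx = (image[r - 1][c + 1] + 2 * image[r][c + 1] + image[r + 1][c + 1]
                  - image[r - 1][c - 1] - 2 * image[r][c - 1] - image[r + 1][c - 1])
            gy = (image[r + 1][c - 1] + 2 * image[r + 1][c] + image[r + 1][c + 1]
                  - image[r - 1][c - 1] - 2 * image[r - 1][c] - image[r - 1][c + 1])
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                out[r][c] = 1
    return out

# A vertical edge between a dark and a bright region.
page = [[10, 10, 10, 200, 200, 200] for _ in range(5)]
# The same page under brighter, uniform illumination.
brighter = [[value + 40 for value in row] for row in page]

edges = sobel_binary(page, threshold=100)
edges_brighter = sobel_binary(brighter, threshold=100)
```

Because the Sobel kernels sum to zero, adding a constant to every pixel leaves the gradient unchanged, so `edges` and `edges_brighter` are identical.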

The operation can be roughly separated into three steps: column segmentation, line segmentation and word segmentation.

- Columns are easily segmented from the binary gradient, de-skewed images by summing pixels vertically as shown in Figure 4.

- The baselines of each row are found in the same way as in column segmentation, but by summing horizontally.

- Finally, individual words are segmented by applying the vertical summing process within each segmented row.

These segmentations are important because the document mosaic is created by matching the lower right corners of words in pairs of overlapping images. Moreover, the segmentation operation reliably organizes each image in the list into a hierarchy of rows and columns.
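The three segmentation steps can be sketched with projection profiles. This is a minimal illustration that assumes a binary image stored as nested lists of 0 and 1; the `column_gap` and `word_gap` parameters, which decide how wide a blank run must be to count as a separator, are hypothetical tuning values.

```python
def runs(profile, min_gap=1):
    """Return (start, end) spans of non-zero entries, merging gaps narrower than min_gap."""
    spans, start = [], None
    for i, value in enumerate(list(profile) + [0]):   # trailing 0 closes the last run
        if value and start is None:
            start = i
        elif not value and start is not None:
            if spans and start - spans[-1][1] < min_gap:
                spans[-1] = (spans[-1][0], i)          # gap too narrow: merge
            else:
                spans.append((start, i))
            start = None
    return spans

def segment(image, column_gap=3, word_gap=1):
    """Split a binary image into columns, then rows, then words."""
    height, width = len(image), len(image[0])
    column_profile = [sum(image[r][c] for r in range(height)) for c in range(width)]
    page = []
    for c0, c1 in runs(column_profile, column_gap):    # vertical sums give columns
        row_profile = [sum(image[r][c0:c1]) for r in range(height)]
        column = []
        for r0, r1 in runs(row_profile):               # horizontal sums give rows
            word_profile = [sum(image[r][c] for r in range(r0, r1)) for c in range(c0, c1)]
            words = [(c0 + w0, c0 + w1) for w0, w1 in runs(word_profile, word_gap)]
            column.append({"rows": (r0, r1), "words": words})
        page.append(column)
    return page

text = [
    "##.###....##.#",
    "..............",
    "#.####....###.",
]
image = [[1 if ch == "#" else 0 for ch in line] for line in text]
page = segment(image)
```

Each word is returned as a (start, end) column span inside its row, so the lower right corner used for matching is the row's end index paired with the word's end index.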

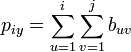

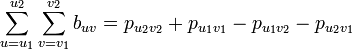

The segmentation operation involves a considerable amount of summing over the binary gradient, de-skewed image. This is done efficiently by constructing a matrix of partial sums[6] whose elements are given by

$$p_{uv} = \sum_{i=1}^{u} \sum_{j=1}^{v} b_{ij}$$

where $b_{ij}$ denotes the pixel in row $i$ and column $j$ of the binary gradient, de-skewed image. The matrix of partial sums is calculated in one pass through the image.[6]
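A matrix of partial sums (also known as a summed-area table) can be built in a single pass, after which the sum over any rectangle needs only four lookups. The sketch below is one common way to do this; it pads the table with a leading row and column of zeros, an implementation convenience that is assumed here rather than taken from the source.

```python
def partial_sums(b):
    """Return p where p[u][v] is the sum of b[i][j] over all i < u and j < v."""
    rows, cols = len(b), len(b[0])
    p = [[0] * (cols + 1) for _ in range(rows + 1)]
    for i in range(rows):
        row_total = 0
        for j in range(cols):
            row_total += b[i][j]                       # running sum along the row
            p[i + 1][j + 1] = p[i][j + 1] + row_total  # add everything above
    return p

def box_sum(p, top, left, bottom, right):
    """Sum of b over rows top..bottom-1 and columns left..right-1."""
    return p[bottom][right] - p[top][right] - p[bottom][left] + p[top][left]

b = [
    [1, 0, 1],
    [0, 1, 1],
    [1, 1, 0],
]
p = partial_sums(b)
total = box_sum(p, 0, 0, 3, 3)         # whole image
lower_right = box_sum(p, 1, 1, 3, 3)   # rows 1-2, columns 1-2
```

With the table in place, the vertical and horizontal sums needed for column, row and word segmentation become constant-time queries regardless of the size of the region.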

Establishing correspondences

The two images are now organized as a hierarchy of linked lists with the following structure:

- image = list of columns

- column = list of rows

- row = list of words

- word = length (in pixels)

At the bottom of the structure, the length of each word is recorded. These lengths are used to establish correspondences between the two images: the search is restricted to corresponding structures that contain groups of words with matching lengths.

Seed match finding

A seed match is found by comparing each row in image1 with each row in image2, word by word. If the lengths (in pixels) of two words (one from image1 and one from image2) and of their immediate neighbours agree within a predefined tolerance (5 pixels, for example), the words are assumed to match. Two rows are assumed to match if there are three or more word matches between them. The seed match finding operation terminates when two pairs of consecutive matching rows are found.
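A minimal sketch of the seed search follows, with each row represented as a list of word lengths in pixels. It interprets "two pairs of consecutive matching rows" as row a matching row b and row a+1 matching row b+1. It also skips words at the ends of a row, since they lack a neighbour on one side. Both choices, the function names and the sample data are assumptions made for illustration.

```python
def words_match(row_a, i, row_b, j, tol=5):
    """True if word i of row_a and word j of row_b, plus both neighbours, agree in length."""
    if i < 1 or j < 1 or i + 1 >= len(row_a) or j + 1 >= len(row_b):
        return False   # assumption: end words have no full neighbourhood
    return all(abs(row_a[i + k] - row_b[j + k]) <= tol for k in (-1, 0, 1))

def count_word_matches(row_a, row_b, tol=5):
    """Count words of row_a that match a distinct word of row_b."""
    used, count = set(), 0
    for i in range(len(row_a)):
        for j in range(len(row_b)):
            if j not in used and words_match(row_a, i, row_b, j, tol):
                used.add(j)
                count += 1
                break
    return count

def rows_match(row_a, row_b, tol=5, min_words=3):
    return count_word_matches(row_a, row_b, tol) >= min_words

def find_seed(rows1, rows2, tol=5):
    """Return (a, b) such that rows a, a+1 of image1 match rows b, b+1 of image2."""
    for a in range(len(rows1) - 1):
        for b in range(len(rows2) - 1):
            if rows_match(rows1[a], rows2[b], tol) and rows_match(rows1[a + 1], rows2[b + 1], tol):
                return a, b
    return None

# Word lengths per row; image2 overlaps the last two rows of image1, with small noise.
rows1 = [
    [40, 85, 30, 120, 60, 25],
    [70, 22, 95, 48, 110, 35],
    [55, 130, 28, 77, 64, 90],
]
rows2 = [
    [18, 66, 140, 33, 52],
    [23, 97, 46, 111, 36, 80],
    [128, 30, 75, 66, 91, 44],
]
seed = find_seed(rows1, rows2)
```

Comparing only word lengths keeps the search cheap: no pixel data is touched until a candidate correspondence has already been found.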

Match list building

After the seed match has been found, the next step is to build the match list, which provides the corresponding points of the two images. This is done by searching for matching pairs of rows, working outwards from the seed row.

Image mosaicing

Given the list of corresponding points in the two images, the next step is to find the transformation that relates the overlapping portions of the images. Assuming a pinhole camera model, the transformation between pixels (u, v) of image 1 and pixels (u′, v′) of image 2 is a plane-to-plane projectivity.[7]

$$
\left[\begin{array}{c} su' \\ sv' \\ s \end{array}\right]
=
\left[\begin{array}{ccc}
p_{11} & p_{12} & p_{13} \\
p_{21} & p_{22} & p_{23} \\
p_{31} & p_{32} & 1
\end{array}\right]
\left[\begin{array}{c} u \\ v \\ 1 \end{array}\right]
\qquad \text{(Eq. 1)}
$$

The parameters of the projectivity are found from four pairs of matching points. The RANSAC regression technique[8] is used to reject outlying matches and to estimate the projectivity from the remaining good matches.

The projectivity is fine-tuned using correlation at the corners of the overlapping portion in order to obtain four correspondences to sub-pixel accuracy. Image1 is then transformed into image2’s coordinate system using Eq. 1. A typical result of the process is shown in Figure 5.
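To make the four-point estimate concrete: eliminating s from Eq. 1 gives two linear equations per correspondence, so four correspondences yield an 8×8 linear system in the eight unknown parameters. The sketch below solves that system with a small Gauss-Jordan routine. It omits the RANSAC outlier rejection and the sub-pixel refinement, and its function names and test matrix are illustrative assumptions.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def projectivity(pairs):
    """Estimate the 3x3 matrix of Eq. 1 from four ((u, v), (u', v')) correspondences."""
    a, b = [], []
    for (u, v), (u2, v2) in pairs:
        # u' * (p31*u + p32*v + 1) = p11*u + p12*v + p13, and likewise for v'
        a.append([u, v, 1, 0, 0, 0, -u2 * u, -u2 * v])
        b.append(u2)
        a.append([0, 0, 0, u, v, 1, -v2 * u, -v2 * v])
        b.append(v2)
    p = solve(a, b)
    return [p[0:3], p[3:6], p[6:8] + [1.0]]

def project(P, u, v):
    """Map pixel (u, v) through the projectivity P, dividing out the scale s."""
    s = P[2][0] * u + P[2][1] * v + P[2][2]
    return ((P[0][0] * u + P[0][1] * v + P[0][2]) / s,
            (P[1][0] * u + P[1][1] * v + P[1][2]) / s)

# Synthetic check: generate four correspondences from a known matrix and recover it.
P_true = [[1.0, 0.1, 50.0], [-0.05, 1.0, 10.0], [0.0002, 0.0001, 1.0]]
corners = [(0, 0), (100, 0), (100, 100), (0, 100)]
pairs = [(pt, project(P_true, *pt)) for pt in corners]
P_est = projectivity(pairs)
```

In a RANSAC loop, `projectivity` would be called on random four-point samples from the match list, and each candidate would be scored by how many of the remaining matches `project` maps to within a pixel tolerance.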

Coping with many images

Finally, the whole page is composed by mapping all the images into the coordinate system of an “anchor” image, which is normally the one nearest the page center. The transformations to the anchor frame are calculated by concatenating the pair-wise transformations found earlier. The raw document mosaic is shown in Figure 6.
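Concatenating pair-wise transformations amounts to multiplying 3×3 matrices. The sketch below assumes that `pairwise[i]` maps image i into the coordinate system of image i+1, matching the direction used in the previous section; images after the anchor are reached by inverting the relevant matrices. The names and the translation-only test data are illustrative.

```python
def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def inverse(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]

def transforms_to_anchor(pairwise, anchor):
    """Return one matrix per image, mapping that image into the anchor's frame.

    pairwise[i] is assumed to map image i into image i+1's coordinates.
    """
    n = len(pairwise) + 1
    result = [None] * n
    result[anchor] = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for k in range(anchor - 1, -1, -1):     # images before the anchor
        result[k] = matmul(result[k + 1], pairwise[k])
    for k in range(anchor + 1, n):          # images after the anchor
        result[k] = matmul(result[k - 1], inverse(pairwise[k - 1]))
    return result

def translation(tx, ty):
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]

# Three images in a row, each shifted 10 pixels from the previous one.
pairwise = [translation(10, 0), translation(10, 0)]
to_anchor = transforms_to_anchor(pairwise, anchor=1)
```

Choosing an anchor near the page center keeps the chains short, which limits how far registration errors accumulate as matrices are multiplied together.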

However, non-consecutive images may also overlap. This problem can be solved by building hierarchical sub-mosaics. As shown in Figure 7, image1 and image2 are registered, as are image3 and image4, creating two sub-mosaics. These two sub-mosaics are later stitched together in another mosaicing process.

Applied areas

Document mosaicing can be applied in various areas, such as:

- Text segmentation of images of documents[5]

- Document Recognition[4]

- Interaction with paper on the digital desk[9]

- Video mosaics for virtual environments[10]

- Image registration techniques[3]

Relevant research papers

- T. S. Huang, A. N. Netravali. Motion and structure from feature correspondences: A review, Proceedings of the IEEE 82 (2) (1994), 252–268.

- D.G. Lowe. Perceptual Organization and Visual Recognition. Kluwer Academic Publishers, Boston, 1985.

- M. Irani, S. Peleg. Improving resolution by image registration, CVGIP: Graphical Models and Image Processing 53 (3) (1991), 231 – 239.

- P. Shivakumara, G. Hemantha Kumar, D. S. Guru, and P. Nagabhushan. Sliding window based approach for document image mosaicing. Image Vision Comput. 24, 1 (January 2006), 94–100.

- Camera-Based Document Image Mosaicing. (n.d.). Image (Rochester, N.Y.), 1.

- Kumar, G. H., Shivakumara, P., Guru, D. S., & Nagabhushan. Document image mosaicing: A novel approach. 29 (June), 329–341. www.ias.ac.in/sadhana/Pdf2004Jun/Pe1149.pdf

- Sato, T., Ikeda, S., Kanbara, M., Iketani, A., Nakajima, N., Yokoya, N., & Yamada, K. (n.d.). High-resolution Video Mosaicing for Documents and Photos by Estimating Camera Motion. Mosaic A Journal For The Interdisciplinary Study Of Literature.

References

- ↑ 1.0 1.1 Anthony, Zappalá; Andrew Gee; Michael Taylor (1999). "Document mosaicing". Image and Vision Computing 17 (8): 589–595. doi:10.1016/S0262-8856(98)00178-4.

- ↑ S., Mann; R.W. Picard (1995). "Video orbits of the projective group: A new perspective on image mosaicing". Technical Report (Perceptual Computing Section), MIT Media Laboratory (338).

- ↑ 3.0 3.1 L.G., Brown (1992). "A survey of image registration techniques". ACM Computing Surveys (24): 325–376.

- ↑ 4.0 4.1 D.S., Bloomberg; G.E. Kopec, L. Dasari (1995). "Measuring document image skew and orientation". SPIE 2422 (Document Recognition II): 302–315.

- ↑ 5.0 5.1 M.J., Taylor; J. Lee (1997). "Interactive text segmentation of greyscale images of documents". Technical Report EPC, Rank Xerox Research Centre 104 (Document Recognition II): 302–315.

- ↑ 6.0 6.1 F.P., Preparata; M.I. Shamos (1985). "Computational Geometry: An Introduction". Springer–Verlag.

- ↑ J.L., Mundy; A. Zisserman (1992). "Appendix-Projective geometry for machine vision". Geometric Invariance in Computer Vision, MIT Press, Cambridge MA.

- ↑ Martin A. Fischler and Robert C. Bolles of SRI International (1981). "Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography". Communications of the ACM 24 (6): 381–395. doi:10.1145/358669.358692.

- ↑ P., Wellner (1993). "Interacting with paper on the digital desk". Communications of the ACM 36 (7): 87–97.

- ↑ R., Szeliski (1996). "Video mosaics for virtual environments". IEEE Computer Graphics and Applications 16 (2): 22–30. doi:10.1109/38.486677.
