Craps principle

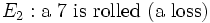

In probability theory, the craps principle is a theorem about event probabilities under repeated independent and identically distributed (iid) trials. Let $E_1$ and $E_2$ denote two mutually exclusive events which might occur on a given trial. Then for each trial, the conditional probability that $E_1$ occurs given that $E_1$ or $E_2$ occurs is

$$\operatorname{P}\left[E_1\mid E_1\cup E_2\right]=\frac{\operatorname{P}[E_1]}{\operatorname{P}[E_1]+\operatorname{P}[E_2]}$$

The events $E_1$ and $E_2$ need not be collectively exhaustive.
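As a minimal sketch of the statement, the ratio $\operatorname{P}[E_1]/(\operatorname{P}[E_1]+\operatorname{P}[E_2])$ can be evaluated with exact rational arithmetic. The function name `craps_principle` and the example probabilities below are illustrative assumptions, not part of the source.

```python
from fractions import Fraction


def craps_principle(p1, p2):
    """Return P[E1 | E1 or E2] for mutually exclusive events E1 and E2.

    p1 and p2 are the per-trial probabilities P[E1] and P[E2].
    The name and signature are hypothetical, chosen for illustration.
    """
    p1, p2 = Fraction(p1), Fraction(p2)
    if p1 < 0 or p2 < 0 or p1 + p2 > 1:
        raise ValueError("p1 and p2 must be non-negative and sum to at most 1")
    if p1 + p2 == 0:
        raise ValueError("the conditioning event E1 or E2 has probability zero")
    return p1 / (p1 + p2)


# Illustrative values: P[E1] = 1/5, P[E2] = 3/10, so neither event occurs
# with probability 1/2 (the events are not collectively exhaustive).
print(craps_principle(Fraction(1, 5), Fraction(3, 10)))  # prints 2/5
```

Only the ratio of the two probabilities matters here: scaling both by the same factor leaves the result unchanged.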

Proof

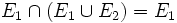

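Since $E_1$ and $E_2$ are mutually exclusive,

$$\operatorname{P}[E_1\cup E_2]=\operatorname{P}[E_1]+\operatorname{P}[E_2]$$

Also due to mutual exclusion,

$$E_1\cap(E_1\cup E_2)=E_1$$

By the definition of conditional probability,

$$\operatorname{P}[E_1\cap(E_1\cup E_2)]=\operatorname{P}\left[E_1\mid E_1\cup E_2\right]\operatorname{P}\left[E_1\cup E_2\right]$$

Combining these three yields the desired result.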

Application

If the trials are repetitions of a game between two players, and the events are

$E_1$: player 1 wins

$E_2$: player 2 wins

then the craps principle gives the respective conditional probabilities of each player winning a certain repetition, given that someone wins (i.e., given that a draw does not occur). In fact, the result is only affected by the relative marginal probabilities of winning $\operatorname{P}[E_1]$ and $\operatorname{P}[E_2]$; in particular, the probability of a draw is irrelevant.

Stopping

If the game is played repeatedly until someone wins, then the conditional probability above is also the probability that the corresponding player wins the game, since drawn repetitions only postpone the outcome.
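The stopping interpretation can be checked empirically with a small simulation. This is a sketch under stated assumptions: the per-round win probabilities 0.2 and 0.3, the helper names `play_until_win` and `estimate_win_probability`, and the seed are all hypothetical choices made for illustration.

```python
import random


def play_until_win(p1, p2, rng):
    """Repeat iid rounds until a player wins; return the winner (1 or 2)."""
    while True:
        u = rng.random()
        if u < p1:
            return 1  # event E1: player 1 wins this round
        if u < p1 + p2:
            return 2  # event E2: player 2 wins this round
        # Otherwise the round is a draw and the game is replayed.


def estimate_win_probability(p1, p2, games=100_000, seed=0):
    """Estimate the probability that player 1 eventually wins the game."""
    rng = random.Random(seed)
    wins = sum(play_until_win(p1, p2, rng) == 1 for _ in range(games))
    return wins / games


p1, p2 = 0.2, 0.3  # assumed per-round win probabilities; draws occur otherwise
estimate = estimate_win_probability(p1, p2)
exact = p1 / (p1 + p2)  # value given by the craps principle
print(f"simulated: {estimate:.3f}, craps principle: {exact:.3f}")
```

The simulated frequency should lie close to the value from the formula, with the gap shrinking as `games` grows.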

Etymology

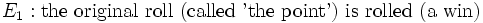

If the game being played is craps, then this principle can greatly simplify the computation of the probability of winning in a certain scenario. Specifically, if the first roll is a 4, 5, 6, 8, 9, or 10, then the dice are repeatedly re-rolled until one of two events occurs:

Since  and

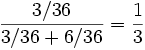

and  are mutually exclusive, the craps principle applies. For example, if the original roll was a 4, then the probability of winning is

are mutually exclusive, the craps principle applies. For example, if the original roll was a 4, then the probability of winning is
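The same calculation can be repeated for every possible point. The sketch below assumes two fair six-sided dice, takes $E_1$ to be re-rolling the point (a win) and $E_2$ to be rolling a 7 (a loss), and uses the illustrative name `win_probability_given_point`.

```python
from fractions import Fraction
from itertools import product

# Count the ways to roll each total with two fair six-sided dice (assumed fair).
ways = {}
for a, b in product(range(1, 7), repeat=2):
    ways[a + b] = ways.get(a + b, 0) + 1


def win_probability_given_point(point):
    """Craps principle with E1 = 'point is rolled' and E2 = '7 is rolled'."""
    p_point = Fraction(ways[point], 36)  # P[E1]
    p_seven = Fraction(ways[7], 36)      # P[E2]
    return p_point / (p_point + p_seven)


for point in (4, 5, 6, 8, 9, 10):
    print(point, win_probability_given_point(point))
```

For points 4, 5, 6, 8, 9, and 10 this prints 1/3, 2/5, 5/11, 5/11, 2/5, and 1/3 respectively, so the value for a point of 4 matches the worked example.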

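This avoids having to sum the infinite series corresponding to all the possible outcomes:

$$\sum_{i=0}^{\infty}\operatorname{P}[\text{first } i \text{ rolls are ties, } (i+1)^{\text{th}} \text{ roll is 'the point'}]$$

Mathematically, we can express the probability of rolling $i$ ties followed by rolling the point:

$$\operatorname{P}[\text{first } i \text{ rolls are ties, } (i+1)^{\text{th}} \text{ roll is 'the point'}] = (1-\operatorname{P}[E_1]-\operatorname{P}[E_2])^i\operatorname{P}[E_1]$$

The summation becomes an infinite geometric series:

$$\begin{aligned}
\sum_{i=0}^{\infty} (1-\operatorname{P}[E_1]-\operatorname{P}[E_2])^i\operatorname{P}[E_1]
&= \operatorname{P}[E_1] \sum_{i=0}^{\infty} (1-\operatorname{P}[E_1]-\operatorname{P}[E_2])^i \\
&= \frac{\operatorname{P}[E_1]}{1-(1-\operatorname{P}[E_1]-\operatorname{P}[E_2])} \\
&= \frac{\operatorname{P}[E_1]}{\operatorname{P}[E_1]+\operatorname{P}[E_2]}
\end{aligned}$$

which agrees with the earlier result.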
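The agreement between the series and the closed form can also be seen numerically. The following sketch assumes a point of 4 with fair dice, so $\operatorname{P}[E_1]=3/36$ and $\operatorname{P}[E_2]=6/36$; the helper name `partial_sum` is hypothetical.

```python
from fractions import Fraction

p1 = Fraction(3, 36)  # P[E1]: the point (4) is rolled, assuming fair dice
p2 = Fraction(6, 36)  # P[E2]: a 7 is rolled
tie = 1 - p1 - p2     # probability that a roll decides nothing


def partial_sum(n):
    """Sum of the first n terms: i ties followed by the point, i = 0..n-1."""
    return sum(tie**i * p1 for i in range(n))


closed_form = p1 / (p1 + p2)  # value given by the craps principle

for n in (1, 5, 20, 80):
    print(n, float(partial_sum(n)), float(closed_form))
```

The partial sums increase toward the closed-form value, and the remaining gap after `n` terms equals the closed form times `tie**n`.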

References

Pitman, Jim (1993). Probability. Berlin: Springer-Verlag. ISBN 0-387-97974-3.

$$\operatorname{P}\left[E_1\mid E_1\cup E_2\right]=\frac{\operatorname{P}[E_1]}{\operatorname{P}[E_1]+\operatorname{P}[E_2]}$$

$$\operatorname{P}[E_1\cup E_2]=\operatorname{P}[E_1]+\operatorname{P}[E_2]$$

$$\operatorname{P}[E_1\cap(E_1\cup E_2)]=\operatorname{P}\left[E_1\mid E_1\cup E_2\right]\operatorname{P}\left[E_1\cup E_2\right]$$

$$\sum_{i=0}^{\infty}\operatorname{P}[\text{first } i \text{ rolls are ties, } (i+1)^{\text{th}} \text{ roll is 'the point'}]$$

$$\operatorname{P}[\text{first } i \text{ rolls are ties, } (i+1)^{\text{th}} \text{ roll is 'the point'}] = (1-\operatorname{P}[E_1]-\operatorname{P}[E_2])^i\operatorname{P}[E_1]$$

$$\sum_{i=0}^{\infty} (1-\operatorname{P}[E_1]-\operatorname{P}[E_2])^i\operatorname{P}[E_1] = \operatorname{P}[E_1] \sum_{i=0}^{\infty} (1-\operatorname{P}[E_1]-\operatorname{P}[E_2])^i = \frac{\operatorname{P}[E_1]}{1-(1-\operatorname{P}[E_1]-\operatorname{P}[E_2])} = \frac{\operatorname{P}[E_1]}{\operatorname{P}[E_1]+\operatorname{P}[E_2]}$$