Cramér's V

In statistics, Cramér's V (sometimes referred to as Cramér's phi and denoted as φc) is a measure of association between two nominal variables, giving a value between 0 and +1 (inclusive). It is based on Pearson's chi-squared statistic and was published by Harald Cramér in 1946.[1]

Usage and interpretation

φc is the intercorrelation of two discrete variables[2] and may be used with variables having two or more levels. φc is a symmetrical measure: it does not matter which variable is placed in the columns and which in the rows. The order of the rows and columns does not matter either, so φc may be used with nominal data types or higher (ordered, numerical, etc.).

Cramér's V may also be applied to goodness-of-fit chi-squared models when there is a 1×k table (i.e., r = 1). In this case k is taken as the number of possible outcomes, and V functions as a measure of tendency towards a single outcome.
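The 1×k case can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes the expected frequencies are all equal (n / k) and that the normalising factor is n(k − 1), so that V reaches 1 when every observation falls into a single outcome. The function name `cramers_v_gof` and the counts are hypothetical.

```python
from math import sqrt

def cramers_v_gof(counts):
    """Cramér's V for a 1 x k table, ASSUMING equal expected frequencies n / k."""
    k = len(counts)
    n = sum(counts)
    expected = n / k
    # Goodness-of-fit chi-squared statistic against the uniform expectation.
    chi2 = sum((observed - expected) ** 2 / expected for observed in counts)
    # Assumed normalisation: the largest possible chi2 here is n * (k - 1).
    return sqrt(chi2 / (n * (k - 1)))

# Made-up counts for three possible outcomes.
print(cramers_v_gof([20, 20, 20]))  # evenly spread: 0.0
print(cramers_v_gof([60, 0, 0]))    # everything in one outcome: 1.0
print(cramers_v_gof([40, 15, 5]))   # somewhere in between, roughly 0.52
```

Under these assumptions the value grows from 0 towards 1 as the counts concentrate on one outcome.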

Cramér's V varies from 0 (corresponding to no association between the variables) to 1 (complete association) and can reach 1 only when the two variables are equal to each other.

φc² is the mean square canonical correlation between the variables.

In the case of a 2×2 contingency table, Cramér's V is equal to the absolute value of the phi coefficient.
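The 2×2 relationship can be checked numerically. The sketch below assumes the usual signed form of the phi coefficient, (ad − bc) / √((a+b)(c+d)(a+c)(b+d)), which can be negative, while V is never negative. The comparison is therefore made on the absolute value. The function names and cell counts are hypothetical.

```python
from math import sqrt

def phi_2x2(a, b, c, d):
    """Signed phi coefficient for the 2 x 2 table [[a, b], [c, d]]."""
    return (a * d - b * c) / sqrt((a + b) * (c + d) * (a + c) * (b + d))

def cramers_v_2x2(a, b, c, d):
    """Cramér's V for the same table; min(k - 1, r - 1) = 1, so V = sqrt(chi2 / n)."""
    n = a + b + c + d
    table = [[a, b], [c, d]]
    rows = [a + b, c + d]   # row totals
    cols = [a + c, b + d]   # column totals
    chi2 = sum(
        (table[i][j] - rows[i] * cols[j] / n) ** 2 / (rows[i] * cols[j] / n)
        for i in range(2)
        for j in range(2)
    )
    return sqrt(chi2 / n)

# Made-up counts; all four marginal totals must be positive.
phi = phi_2x2(30, 10, 15, 45)
v = cramers_v_2x2(30, 10, 15, 45)
print(abs(phi), v)  # the two values agree up to rounding error
```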

Because chi-squared values tend to increase with the number of cells, the greater the difference between r (rows) and k (columns), the more likely φc is to tend to 1 without strong evidence of a meaningful correlation.

V may be viewed as the association between two variables as a percentage of their maximum possible variation.

Calculation

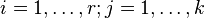

Let a sample of size $n$ of the simultaneously distributed variables $A$ and $B$, for $i = 1, \ldots, r$ and $j = 1, \ldots, k$, be given by the frequencies

$n_{ij}$ = number of times the values $(A_i, B_j)$ were observed.

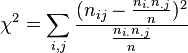

The chi-squared statistic then is:

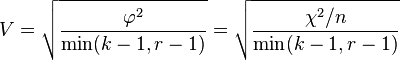
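For reference, Pearson's statistic for an r × k table of observed counts $n_{ij}$ (row $i$, column $j$, grand total $n$) is conventionally written as below. The marginal totals $n_{i\cdot}$ and $n_{\cdot j}$ are notation assumed here for illustration.

$$\chi^2 = \sum_{i=1}^{r} \sum_{j=1}^{k} \frac{\left(n_{ij} - \dfrac{n_{i\cdot}\, n_{\cdot j}}{n}\right)^2}{\dfrac{n_{i\cdot}\, n_{\cdot j}}{n}}, \qquad n_{i\cdot} = \sum_{j=1}^{k} n_{ij}, \qquad n_{\cdot j} = \sum_{i=1}^{r} n_{ij}$$

Each term compares an observed count with the count expected if the two variables were independent, $n_{i\cdot}\, n_{\cdot j} / n$.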

Cramér's V is computed by taking the square root of the chi-squared statistic divided by the sample size and by the minimum dimension minus 1:

$$V = \sqrt{\frac{\varphi^2}{\min(k-1,\, r-1)}} = \sqrt{\frac{\chi^2 / n}{\min(k-1,\, r-1)}}$$

where:

- $\varphi$ is the phi coefficient,

- $\chi^2$ is derived from Pearson's chi-squared test,

- $n$ is the grand total of observations,

- $k$ is the number of columns, and

- $r$ is the number of rows.
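The calculation described in this section can be sketched in plain Python. This is an illustrative sketch rather than a definitive implementation: the function names `chi_squared` and `cramers_v` and the example tables are hypothetical, and the code assumes every row and column total is positive and that the table has at least two rows and two columns.

```python
from math import sqrt

def chi_squared(table):
    """Pearson's chi-squared statistic for an r x k table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in cell (i, j) if the variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat

def cramers_v(table):
    """Cramér's V = sqrt((chi2 / n) / min(k - 1, r - 1))."""
    r = len(table)       # number of rows
    k = len(table[0])    # number of columns
    n = sum(sum(row) for row in table)
    return sqrt(chi_squared(table) / n / min(k - 1, r - 1))

# Made-up tables for illustration.
independent = [[10, 20], [30, 60]]                # proportional rows: no association
dependent = [[25, 0, 0], [0, 40, 0], [0, 0, 35]]  # each row fixes the column

print(cramers_v(independent))  # 0.0
print(cramers_v(dependent))    # approximately 1
```

Transposing the table leaves the result unchanged, which reflects the symmetry of the measure.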

The p-value for the significance of V is the same one that is calculated using Pearson's chi-squared test.

The formula for the variance of V=φc is known.[3]

See also

Other measures of correlation for nominal data:

- The phi coefficient

- Tschuprow's T

- The uncertainty coefficient

- The Lambda coefficient

References

- [1] Cramér, Harald (1946). Mathematical Methods of Statistics. Princeton: Princeton University Press, p. 282. ISBN 0-691-08004-6.

- [2] Sheskin, David J. (1997). Handbook of Parametric and Nonparametric Statistical Procedures. Boca Raton, FL: CRC Press.

- [3] Liebetrau, Albert M. (1983). Measures of Association. Newbury Park, CA: Sage Publications. Quantitative Applications in the Social Sciences Series No. 32, pp. 15–16.

- Cramér, H. (1999). Mathematical Methods of Statistics. Princeton University Press.

External links

- A Measure of Association for Nonparametric Statistics (Alan C. Acock and Gordon R. Stavig, pp. 1381–1386)

- Nominal Association: Phi and Cramer's V, from the homepage of Pat Dattalo.
