Coordinate descent

Coordinate descent is a non-derivative optimization algorithm. To find a local minimum of a function, one does line search along one coordinate direction at the current point in each iteration. One uses different coordinate directions cyclically throughout the procedure. On non-separable functions the algorithm may fail to find the optimum in a reasonable number of function evaluations. To improve the convergence an appropriate coordinate system can be gradually learned, such that new search coordinates obtained using PCA are as decorrelated as possible with respect to the objective function (see Adaptive coordinate descent for more details).

Description

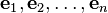

Coordinate descent is based on the idea that the minimization of a multivariable function  can be achieved by minimizing it along one direction at a time. Instead of varying descent direction according to gradient, one fixes descent direction at the outset. For instance, one chooses some basis as the search directions:

can be achieved by minimizing it along one direction at a time. Instead of varying descent direction according to gradient, one fixes descent direction at the outset. For instance, one chooses some basis as the search directions:  . One cyclically iterates through each direction, one at a time, minimizing the objective function with respect to that coordinate direction. It follows that, if

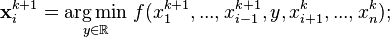

. One cyclically iterates through each direction, one at a time, minimizing the objective function with respect to that coordinate direction. It follows that, if  is given, the

is given, the  th coordinate of

th coordinate of  is given by

is given by

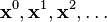

Thus, one begins with an initial guess  for a local minimum of

for a local minimum of  , and get a sequence

, and get a sequence

iteratively.

iteratively.

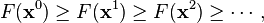

By doing line search in each iteration, We automatically have

It can be shown that this sequence has similar convergence properties as steepest descent. No improvement after one cycle of line search along coordinate directions implies a stationary point is reached.

This process is illustrated below.

Examples

Coordinate descent has problems with non-smooth functions. The following picture shows that coordinate descent iteration may get stuck at a non-stationary point if the level curves of a function are not smooth.

Applications

Coordinate descent algorithms are used in machine learning, e.g. for training linear support vector machines[1] (see LIBLINEAR) and non-negative matrix factorization.[2]

See also

- Adaptive coordinate descent

- Conjugate gradient

- Gradient descent

- Newton's method

- Mathematical optimization

- Line search

References

- ↑ Hsieh, C. J.; Chang, K. W.; Lin, C. J.; Keerthi, S. S.; Sundararajan, S. (2008). "A dual coordinate descent method for large-scale linear SVM". Proceedings of the 25th international conference on Machine learning - ICML '08 (PDF). p. 408. doi:10.1145/1390156.1390208. ISBN 9781605582054.

- ↑ Hsieh, C. J.; Dhillon, I. S. (2011). Fast coordinate descent methods with variable selection for non-negative matrix factorization (PDF). Proceedings of the 17th ACM SIGKDD international conference on Knowledge discovery and data mining - KDD '11. p. 1064. doi:10.1145/2020408.2020577. ISBN 9781450308137.

- Bezdek, J. C.; Hathaway, R. J.; Howard, R. E.; Wilson, C. A.; Windham, M. P. (1987), "Local convergence analysis of a grouped variable version of coordinate descent", Journal of Optimization theory and applications (Kluwer Academic/Plenum Publishers) 54 (3): 471–477, doi:10.1007/BF00940196

- Bertsekas, Dimitri P. (1999). Nonlinear Programming, Second Edition Athena Scientific, Belmont, Massachusetts. ISBN 1-886529-00-0.

- Canutescu, AA; Dunbrack, RL (2003), "Cyclic coordinate descent: A robotics algorithm for protein loop closure.", Protein science 12 (5): 963–72, doi:10.1110/ps.0242703, PMID 12717019.

- Luo, Zhiquan; Tseng, P. (1992), "On the convergence of the coordinate descent method for convex differentiable minimization", Journal of Optimization theory and applications (Kluwer Academic/Plenum Publishers) 72 (1): 7–35, doi:10.1007/BF00939948.

- Wu, TongTong; Lange, Kenneth (2008), "Coordinate descent algorithms for Lasso penalized regression", The Annals of Applied Statistics (Institute of Mathematical Statistics) 2 (1): 224–244, doi:10.1214/07-AOAS147.

- Richtarik, Peter; Takac, Martin (April 2011), "Iteration complexity of randomized block-coordinate descent methods for minimizing a composite function", Mathematical Programming (Springer), doi:10.1007/s10107-012-0614-z.

- Richtarik, Peter; Takac, Martin (December 2012), "Parallel coordinate descent methods for big data optimization", arXiv:1212.0873.