Commutation matrix

In mathematics, especially in linear algebra and matrix theory, the commutation matrix is used for transforming the vectorized form of a matrix into the vectorized form of its transpose. Specifically, the commutation matrix $K(m,n)$ is the $mn \times mn$ matrix which, for any $m \times n$ matrix $A$, transforms $\operatorname{vec}(A)$ into $\operatorname{vec}(A^T)$:

- $K(m,n)\,\operatorname{vec}(A) = \operatorname{vec}(A^T).$

Here $\operatorname{vec}(A)$ is the $mn \times 1$ column vector obtained by stacking the columns of $A$ on top of one another:

- $\operatorname{vec}(A) = [A_{1,1}, \ldots, A_{m,1}, A_{1,2}, \ldots, A_{m,2}, \ldots, A_{1,n}, \ldots, A_{m,n}]^T$

where $A = [A_{i,j}]$.
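The definition can be checked numerically. The following is a minimal sketch in Python with NumPy, not a reference implementation; the helper names `vec` and `commutation_matrix` are chosen here for illustration and do not come from the source. It builds the matrix as a row permutation of the identity and uses zero-based indices.

```python
import numpy as np

def vec(a):
    """Stack the columns of a 2-D array into a 1-D vector (column-major order)."""
    return a.reshape(-1, order="F")

def commutation_matrix(m, n):
    """Return the mn x mn matrix K with K @ vec(A) == vec(A.T) for any m x n A."""
    # Label each entry of an m x n matrix by its position in vec(A).
    positions = np.arange(m * n).reshape((m, n), order="F")
    # vec(A.T) visits the same entries row by row, so its k-th entry
    # is entry number perm[k] of vec(A).
    perm = positions.reshape(-1, order="C")
    # Row k of K carries its single 1 in column perm[k].
    return np.eye(m * n, dtype=int)[perm]

A = np.arange(1, 7).reshape(2, 3)   # a 2 x 3 example matrix
K = commutation_matrix(2, 3)        # 6 x 6
assert np.array_equal(K @ vec(A), vec(A.T))
```

Storing the full matrix is only for illustration; applying the index array `perm` directly to a vector gives the same result without forming an $mn \times mn$ array.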

The commutation matrix is a special type of permutation matrix, and is therefore orthogonal. Replacing $A$ with $A^T$ in the definition gives $K(n,m)\,\operatorname{vec}(A^T) = \operatorname{vec}(A)$, so $K(n,m)$ is the inverse of $K(m,n)$; by orthogonality, $K(m,n) = (K(n,m))^T$. Therefore, in the special case $m = n$, the commutation matrix is symmetric and an involution.
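These properties can be spot-checked for particular sizes. The sketch below assumes a helper `commutation_matrix` (a hypothetical name, built as a row permutation of the identity) and tests the claims for $m = 3$, $n = 4$. It is a numerical illustration, not a proof.

```python
import numpy as np

def commutation_matrix(m, n):
    """Hypothetical helper: the mn x mn matrix K with K @ vec(A) == vec(A.T)."""
    # perm[k] is the position in vec(A) of the k-th entry of vec(A.T).
    perm = np.arange(m * n).reshape((m, n), order="F").reshape(-1)
    return np.eye(m * n, dtype=int)[perm]

m, n = 3, 4
K_mn = commutation_matrix(m, n)
K_nm = commutation_matrix(n, m)
identity = np.eye(m * n, dtype=int)

# Permutation matrix: exactly one 1 in every row and every column.
assert (K_mn.sum(axis=0) == 1).all() and (K_mn.sum(axis=1) == 1).all()
# Orthogonality: the transpose is the inverse.
assert np.array_equal(K_mn.T @ K_mn, identity)
# K(m,n) equals the transpose of K(n,m).
assert np.array_equal(K_mn, K_nm.T)

# Square case: symmetric and an involution.
K_nn = commutation_matrix(n, n)
assert np.array_equal(K_nn, K_nn.T)
assert np.array_equal(K_nn @ K_nn, np.eye(n * n, dtype=int))
```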

The main use of the commutation matrix, and the source of its name, is to commute the Kronecker product: for every m × n matrix A and every r × q matrix B,

- $K(r,m)\,(A \otimes B)\,K(n,q) = B \otimes A.$
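The Kronecker identity can be verified on small random integer matrices. In this sketch the helper `commutation_matrix` and the chosen sizes are illustrative assumptions; `np.kron` is NumPy's Kronecker product.

```python
import numpy as np

def commutation_matrix(m, n):
    """Hypothetical helper: the mn x mn matrix K with K @ vec(A) == vec(A.T)."""
    perm = np.arange(m * n).reshape((m, n), order="F").reshape(-1)
    return np.eye(m * n, dtype=int)[perm]

rng = np.random.default_rng(0)
m, n, r, q = 2, 3, 4, 5
A = rng.integers(-5, 6, size=(m, n))   # m x n
B = rng.integers(-5, 6, size=(r, q))   # r x q

# K(r,m) (A kron B) K(n,q) should equal B kron A.
lhs = commutation_matrix(r, m) @ np.kron(A, B) @ commutation_matrix(n, q)
rhs = np.kron(B, A)
assert np.array_equal(lhs, rhs)
```

Integer entries keep the comparison exact; with floating-point matrices, `np.allclose` would be the safer check.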

An explicit form for the commutation matrix is as follows: if $e_{r,j}$ denotes the $j$-th canonical vector of dimension $r$ (i.e. the vector with 1 in the $j$-th coordinate and 0 elsewhere) then

- $K(r,m) = \sum_{i=1}^{r} \sum_{j=1}^{m} \left(e_{r,i}\, e_{m,j}^T\right) \otimes \left(e_{m,j}\, e_{r,i}^T\right).$
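The explicit sum translates directly into a double loop. The sketch below uses hypothetical helper names (`unit_vector`, `commutation_matrix_from_sum`) and zero-based indices in place of the formula's 1-based ones. It then checks that the result maps the vectorization of an $r \times m$ matrix to that of its transpose.

```python
import numpy as np

def unit_vector(dim, j):
    """Canonical vector of length dim with a 1 in (zero-based) coordinate j."""
    e = np.zeros(dim, dtype=int)
    e[j] = 1
    return e

def commutation_matrix_from_sum(r, m):
    """Build K(r,m) as the sum over i, j of (e_{r,i} e_{m,j}^T) kron (e_{m,j} e_{r,i}^T)."""
    K = np.zeros((r * m, r * m), dtype=int)
    for i in range(r):
        for j in range(m):
            # r x m matrix with a single 1 at position (i, j).
            H = np.outer(unit_vector(r, i), unit_vector(m, j))
            K += np.kron(H, H.T)
    return K

X = np.arange(12).reshape(3, 4)        # an r x m = 3 x 4 example matrix
K = commutation_matrix_from_sum(3, 4)
# Column-major flattening plays the role of vec.
assert np.array_equal(K @ X.reshape(-1, order="F"), X.T.reshape(-1, order="F"))
```

The loop performs $rm$ Kronecker products and is meant to mirror the formula, not to be efficient.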

Example

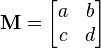

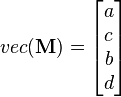

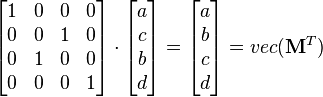

Let M be a 2x2 square matrix.

Then we have

And K(2,2) is the 4x4 square matrix that will transform vec(M) into vec(MT)
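Written out in full, the $2 \times 2$ case looks as follows. The entry labels $a, b, c, d$ are assumed here for illustration; the matrix $K(2,2)$ itself follows from the definition.

```latex
M = \begin{bmatrix} a & b \\ c & d \end{bmatrix}, \qquad
\operatorname{vec}(M) = \begin{bmatrix} a \\ c \\ b \\ d \end{bmatrix}, \qquad
\operatorname{vec}(M^{T}) = \begin{bmatrix} a \\ b \\ c \\ d \end{bmatrix},

K(2,2)\,\operatorname{vec}(M) =
\begin{bmatrix}
1 & 0 & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 1 & 0 & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} a \\ c \\ b \\ d \end{bmatrix}
=
\begin{bmatrix} a \\ b \\ c \\ d \end{bmatrix}
= \operatorname{vec}(M^{T}).
```

The matrix swaps the second and third coordinates and leaves the first and fourth in place, which is why it is symmetric and equal to its own inverse.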
