Cochran's theorem

In statistics, Cochran's theorem, devised by William G. Cochran,[1] is a theorem used to justify results relating to the probability distributions of statistics that are used in the analysis of variance.[2]

Statement

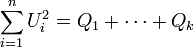

Suppose $U_1, \ldots, U_n$ are independent standard normally distributed random variables, and an identity of the form

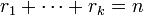

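can be written, namely $\sum_{i=1}^{n} U_i^2 = Q_1 + \cdots + Q_k$, where each $Q_i$ is a sum of squares of linear combinations of the $U$s. Further suppose that the rank condition $r_1 + \cdots + r_k = n$ holds,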

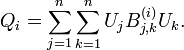

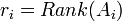

where $r_i$ is the rank of $Q_i$. Cochran's theorem states that the $Q_i$ are independent, and each $Q_i$ has a chi-squared distribution with $r_i$ degrees of freedom.[1] Here the rank of $Q_i$ means the rank of the matrix $B^{(i)}$, with elements $B^{(i)}_{j,\ell}$, in the representation of $Q_i$ as a quadratic form:

$$Q_i = \sum_{j=1}^{n} \sum_{\ell=1}^{n} U_j B^{(i)}_{j,\ell} U_\ell .$$

Less formally, it is the number of linear combinations included in the sum of squares defining $Q_i$, provided that these linear combinations are linearly independent.
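As an illustration of this informal reading, the following sketch computes the rank of a sum of squares from the coefficient rows of its linear combinations. The helper names `quadratic_form_matrix` and `matrix_rank` are hypothetical (they are not taken from any library), and exact rational arithmetic is assumed so that rounding cannot affect the rank.

```python
from fractions import Fraction


def quadratic_form_matrix(rows):
    """Return B = L^T L for Q = sum_m (sum_j L[m][j] * U_j)^2."""
    n = len(rows[0])
    return [[sum(Fraction(r[j]) * Fraction(r[k]) for r in rows)
             for k in range(n)] for j in range(n)]


def matrix_rank(matrix):
    """Rank by Gaussian elimination over the rationals (exact, no rounding)."""
    m = [[Fraction(x) for x in row] for row in matrix]
    rank = 0
    for col in range(len(m[0])):
        # Find a row at or below `rank` with a non-zero entry in this column.
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        # Clear this column in every other row.
        for r in range(len(m)):
            if r != rank and m[r][col] != 0:
                factor = m[r][col] / m[rank][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[rank])]
        rank += 1
    return rank


# Q = (U1 + U2)^2 + (U2 - U3)^2 + (U1 + 2*U2 - U3)^2.
# The third combination is the sum of the first two, so only two of the
# three are linearly independent and the rank of Q is 2, not 3.
rows = [[1, 1, 0], [0, 1, -1], [1, 2, -1]]
B = quadratic_form_matrix(rows)
rank_Q = matrix_rank(B)
```

Here the rank of $B = L^{T}L$ equals the rank of the coefficient matrix $L$, which is why counting linearly independent combinations gives the same number.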

Proof

We first show that the matrices $B^{(i)}$ can be simultaneously diagonalized and that their non-zero eigenvalues are all equal to +1. We then use the basis that diagonalizes them to simplify the characteristic functions of the $Q_i$ and to establish their independence and distributions.[3] Throughout the proof, $N$ denotes the number of variables (written $n$ in the statement).

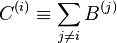

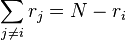

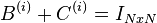

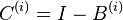

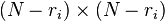

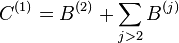

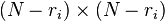

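Each of the matrices $B^{(i)}$ is symmetric with rank $r_i$ and so has exactly $r_i$ non-zero eigenvalues. For each $i$, the sum $C^{(i)} \equiv \sum_{j \ne i} B^{(j)}$ has at most rank $\sum_{j \ne i} r_j = N - r_i$. Since $B^{(i)} + C^{(i)} = I_{N \times N}$, so that $N \le r_i + \operatorname{rank} C^{(i)}$, it follows that $C^{(i)}$ has exactly rank $N - r_i$.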

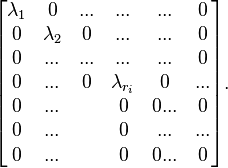

Therefore $B^{(i)}$ and $C^{(i)}$ can be simultaneously diagonalized. This can be shown by first diagonalizing $B^{(i)}$. In this basis, it is of the form:

$$\operatorname{diag}(\lambda_1, \ldots, \lambda_{r_i}, 0, \ldots, 0).$$

Thus the lower $(N - r_i)$ rows are zero. Since $C^{(i)} = I - B^{(i)}$, it follows that these rows in $C^{(i)}$ in this basis contain a right block which is an $(N - r_i) \times (N - r_i)$ unit matrix, with zeros in the rest of these rows. But since $C^{(i)}$ has rank $N - r_i$, it must be zero elsewhere. Thus it is diagonal in this basis as well. Moreover, it follows that all the non-zero eigenvalues of both $B^{(i)}$ and $C^{(i)}$ are +1.

It follows that the non-zero eigenvalues of all the $B$s are equal to +1. Moreover, the above analysis can be repeated in the diagonal basis for $C^{(1)} = B^{(2)} + \sum_{j>2} B^{(j)}$. In this basis $C^{(1)}$ is the identity of an $(N - r_1)$-dimensional vector space, so it follows that both $B^{(2)}$ and $\sum_{j>2} B^{(j)}$ are simultaneously diagonalizable in this vector space (and hence also together with $B^{(1)}$). Repeating this argument shows that all the $B$s are simultaneously diagonalizable.

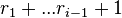

Thus there exists an orthogonal matrix $S$ such that for all $i$ between 1 and $k$, $S^{T} B^{(i)} S$ is diagonal, with 1s on the diagonal at the places between $r_1 + \cdots + r_{i-1} + 1$ and $r_1 + \cdots + r_i$ and zeros elsewhere.

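Let $U_1', \ldots, U_N'$ be the variables $U_1, \ldots, U_N$ after transformation by $S$, that is, $U' = S^{T} U$. Because $S$ is orthogonal, the $U_j'$ are again independent standard normal variables, and $Q_i = U'^{T} (S^{T} B^{(i)} S)\, U'$ is the sum of the squares $(U_j')^2$ over the $r_i$ places where $S^{T} B^{(i)} S$ has a 1 on its diagonal.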
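A small worked case may make the construction concrete. Take $N = 2$ and $k = 2$, with the following matrices chosen here purely for illustration, and write $U' = S^{T} U$ for the transformed variables:

$$B^{(1)} = \frac{1}{2}\begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}, \qquad B^{(2)} = \frac{1}{2}\begin{pmatrix} 1 & -1 \\ -1 & 1 \end{pmatrix}, \qquad S = \frac{1}{\sqrt{2}}\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}.$$

Then $B^{(1)} + B^{(2)} = I$ and $r_1 = r_2 = 1$, so the ranks add up to $N$, and

$$S^{T} B^{(1)} S = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix}, \qquad S^{T} B^{(2)} S = \begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix}.$$

In this example $Q_1 = \tfrac{1}{2}(U_1 + U_2)^2 = (U_1')^2$ and $Q_2 = \tfrac{1}{2}(U_1 - U_2)^2 = (U_2')^2$, each the square of a single standard normal variable.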

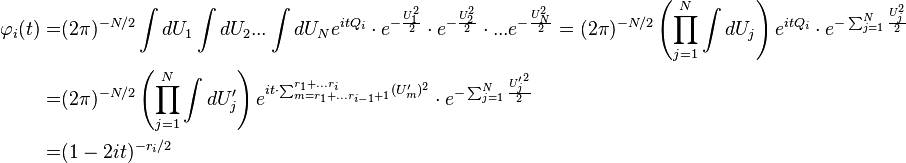

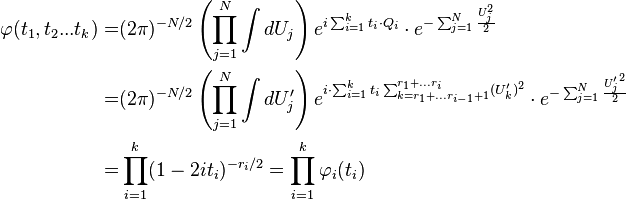

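In the transformed variables, $Q_i$ is a sum of $r_i$ squared independent standard normal variables, so the characteristic function of $Q_i$ is:

$$\varphi_i(t) = \operatorname{E}\left[e^{itQ_i}\right] = \prod_{j = r_1 + \cdots + r_{i-1} + 1}^{r_1 + \cdots + r_i} \operatorname{E}\left[e^{it (U_j')^2}\right] = (1 - 2it)^{-r_i/2}.$$

This is the Fourier transform of the chi-squared distribution with $r_i$ degrees of freedom. Therefore this is the distribution of $Q_i$.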
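The one-dimensional integral behind this formula can be written out explicitly. For a single standard normal variable, called $Z$ here for illustration, and real $t$:

$$\operatorname{E}\left[e^{itZ^2}\right] = \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}(1 - 2it) z^2}\, dz = (1 - 2it)^{-1/2},$$

using the Gaussian integral $\int_{-\infty}^{\infty} e^{-a z^2/2}\, dz = \sqrt{2\pi/a}$, which holds for $\operatorname{Re} a > 0$ (here $a = 1 - 2it$, with the principal branch of the square root). The product of $r_i$ independent factors of this kind gives $(1 - 2it)^{-r_i/2}$.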

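Moreover, the characteristic function of the joint distribution of all the $Q_i$ is:

$$\varphi(t_1, \ldots, t_k) = \operatorname{E}\left[\exp\left(i \sum_{m=1}^{k} t_m Q_m\right)\right] = \prod_{m=1}^{k} (1 - 2it_m)^{-r_m/2} = \prod_{m=1}^{k} \varphi_m(t_m),$$

where $\varphi_m$ is the characteristic function of $Q_m$. Since the joint characteristic function factorizes into the product of the marginal ones, all the $Q_i$ are statistically independent.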

Examples

Sample mean and sample variance

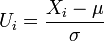

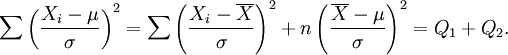

If $X_1, \ldots, X_n$ are independent normally distributed random variables with mean $\mu$ and standard deviation $\sigma$, then $U_i = (X_i - \mu)/\sigma$

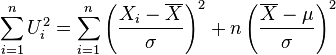

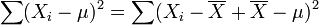

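is standard normal for each $i$. It is possible to write

$$\sum_{i=1}^{n} U_i^2 = \sum_{i=1}^{n} \left(\frac{X_i - \overline{X}}{\sigma}\right)^2 + n \left(\frac{\overline{X} - \mu}{\sigma}\right)^2$$

(here $\overline{X}$ is the sample mean). To see this identity, multiply throughout by $\sigma^2$ and note that

$$\sum_{i=1}^{n} (X_i - \mu)^2 = \sum_{i=1}^{n} (X_i - \overline{X} + \overline{X} - \mu)^2$$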

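and expand to give

$$\sum_{i=1}^{n} (X_i - \mu)^2 = \sum_{i=1}^{n} (X_i - \overline{X})^2 + \sum_{i=1}^{n} (\overline{X} - \mu)^2 + 2 \sum_{i=1}^{n} (X_i - \overline{X})(\overline{X} - \mu).$$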

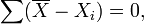

The third term is zero because it is equal to the constant $2(\overline{X} - \mu)$ times $\sum_{i=1}^{n} (X_i - \overline{X}) = 0$,

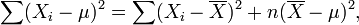

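and the second term has just $n$ identical terms added together. Thus

$$\sum_{i=1}^{n} (X_i - \mu)^2 = \sum_{i=1}^{n} (X_i - \overline{X})^2 + n (\overline{X} - \mu)^2,$$

and hence

$$\sum_{i=1}^{n} \left(\frac{X_i - \mu}{\sigma}\right)^2 = \sum_{i=1}^{n} \left(\frac{X_i - \overline{X}}{\sigma}\right)^2 + n \left(\frac{\overline{X} - \mu}{\sigma}\right)^2 = Q_1 + Q_2.$$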

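Now the rank of $Q_2$ is just 1 (it is the square of just one linear combination of the standard normal variables). The rank of $Q_1$ can be shown to be $n - 1$: in terms of the $U_i$, its matrix is $I_n - \tfrac{1}{n} \mathbf{1}\mathbf{1}^{T}$, the projection onto the orthogonal complement of the constant vector. Thus the conditions for Cochran's theorem are met.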

Cochran's theorem then states that $Q_1$ and $Q_2$ are independent, with chi-squared distributions with $n - 1$ and 1 degrees of freedom respectively. This shows that the sample mean and sample variance are independent. This can also be shown by Basu's theorem, and in fact this property characterizes the normal distribution: for no other distribution are the sample mean and sample variance independent.[4]
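The conclusion can be checked informally by simulation. The sketch below uses only the Python standard library; the sample size, parameters, seed, and number of trials are arbitrary choices made for the illustration. It compares the empirical moments of $Q_1$ and $Q_2$ with those of the chi-squared distributions and computes their sample correlation.

```python
import math
import random

random.seed(12345)  # fixed seed so the run is reproducible

n = 5                  # sample size (arbitrary choice)
mu, sigma = 2.0, 3.0   # true mean and standard deviation (arbitrary)
trials = 50_000

q1_values, q2_values = [], []
for _ in range(trials):
    xs = [random.gauss(mu, sigma) for _ in range(n)]
    xbar = sum(xs) / n
    # Q1: sum of squared standardized deviations from the sample mean.
    q1_values.append(sum(((x - xbar) / sigma) ** 2 for x in xs))
    # Q2: n times the squared standardized deviation of the sample mean.
    q2_values.append(n * ((xbar - mu) / sigma) ** 2)

mean_q1 = sum(q1_values) / trials   # chi-squared(n-1) has mean n-1
mean_q2 = sum(q2_values) / trials   # chi-squared(1) has mean 1
var_q1 = sum((a - mean_q1) ** 2 for a in q1_values) / trials  # theory: 2(n-1)
var_q2 = sum((b - mean_q2) ** 2 for b in q2_values) / trials  # theory: 2
cov = sum((a - mean_q1) * (b - mean_q2)
          for a, b in zip(q1_values, q2_values)) / trials
corr = cov / math.sqrt(var_q1 * var_q2)  # should be close to 0
```

A sample correlation near zero is only a necessary consequence of independence, not a proof of it; the simulation is a sanity check on the theorem rather than a substitute for it.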

Distributions

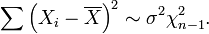

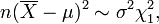

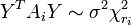

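The result for the distributions is written symbolically as

$$\sum_{i=1}^{n} (X_i - \overline{X})^2 \sim \sigma^2 \chi^2_{n-1}, \qquad n (\overline{X} - \mu)^2 \sim \sigma^2 \chi^2_{1}.$$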

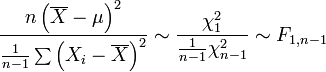

Both these random variables are proportional to the true but unknown variance $\sigma^2$. Thus their ratio does not depend on $\sigma^2$ and, because they are statistically independent, the distribution of their ratio is given by

$$\frac{n (\overline{X} - \mu)^2}{\frac{1}{n-1} \sum_{i=1}^{n} (X_i - \overline{X})^2} \sim \frac{\chi^2_{1}}{\frac{1}{n-1} \chi^2_{n-1}} \sim F_{1,n-1},$$

where $F_{1,n-1}$ is the F-distribution with 1 and $n - 1$ degrees of freedom (see also Student's t-distribution). The final step here is effectively the definition of a random variable having the F-distribution.
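The connection to Student's t-distribution can be seen on a concrete sample: the ratio above is the square of the usual one-sample t statistic. The following sketch assumes hypothetical data and a hypothetical value `mu0` for the mean, both chosen only for illustration.

```python
import math
import statistics

# Hypothetical data and hypothesized mean, chosen only for illustration.
xs = [4.1, 5.3, 3.8, 6.0, 4.9, 5.5]
mu0 = 4.5

n = len(xs)
xbar = statistics.fmean(xs)
s2 = statistics.variance(xs)  # sample variance with divisor n - 1

# Ratio from the text: n (xbar - mu)^2 / ((1/(n-1)) * sum (x_i - xbar)^2).
f_ratio = n * (xbar - mu0) ** 2 / s2

# One-sample t statistic for the same data.
t_stat = math.sqrt(n) * (xbar - mu0) / math.sqrt(s2)
```

Algebraically `f_ratio` equals `t_stat ** 2`, which matches the fact that the square of a t-distributed variable with $n - 1$ degrees of freedom has the $F_{1,n-1}$ distribution.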

Estimation of variance

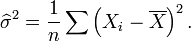

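To estimate the variance $\sigma^2$, one estimator that is sometimes used is the maximum likelihood estimator of the variance of a normal distribution

$$\widehat{\sigma}^2 = \frac{1}{n} \sum_{i=1}^{n} (X_i - \overline{X})^2.$$

Cochran's theorem shows that

$$\frac{n \widehat{\sigma}^2}{\sigma^2} \sim \chi^2_{n-1},$$

and the properties of the chi-squared distribution show that the expected value of $\widehat{\sigma}^2$ is $\sigma^2 (n - 1)/n$.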
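The expectation can be spelled out in one line, writing $\widehat{\sigma}^2 = \frac{1}{n} \sum_{i=1}^{n} (X_i - \overline{X})^2$ for the maximum likelihood estimator and using only the fact that a chi-squared variable with $m$ degrees of freedom has mean $m$:

$$\operatorname{E}\left[\widehat{\sigma}^2\right] = \frac{\sigma^2}{n} \operatorname{E}\left[\frac{n \widehat{\sigma}^2}{\sigma^2}\right] = \frac{\sigma^2}{n} (n - 1).$$

The estimator is therefore biased downward by the factor $(n - 1)/n$, which is why dividing by $n - 1$ instead of $n$ gives an unbiased estimator.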

Alternative formulation

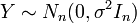

The following version is often seen when considering linear regression. Suppose that $Y \sim N_n(0, I_n)$ is a standard multivariate normal random vector (here $I_n$ denotes the n-by-n identity matrix), and that $A_1, \ldots, A_k$ are all n-by-n symmetric matrices with $\sum_{i=1}^{k} A_i = I_n$. Then, on defining $r_i = \operatorname{rank}(A_i)$, any one of the following conditions implies the other two:

- $\sum_{i=1}^{k} r_i = n$,

- $Y^{T} A_i Y \sim \chi^2_{r_i}$ (thus the $A_i$ are positive semidefinite),

- $Y^{T} A_i Y$ is independent of $Y^{T} A_j Y$ for $i \ne j$.
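A minimal sketch of how these conditions look for a concrete pair of matrices is given below. It assumes the decomposition $A_1 = \tfrac{1}{n}\mathbf{1}\mathbf{1}^{T}$ and $A_2 = I_n - A_1$ (the one underlying the sample mean and sample variance example), with `matmul` and `trace` as hypothetical helper names. It checks the matrix properties that go with the three conditions: each $A_i$ is idempotent, the products $A_i A_j$ vanish, and the ranks, computed as traces, add up to $n$.

```python
from fractions import Fraction

n = 4  # dimension (arbitrary choice for the illustration)
identity = [[Fraction(int(j == k)) for k in range(n)] for j in range(n)]

# A1 projects onto the constant vector; A2 = I - A1 is the centering matrix.
A1 = [[Fraction(1, n) for _ in range(n)] for _ in range(n)]
A2 = [[identity[j][k] - A1[j][k] for k in range(n)] for j in range(n)]


def matmul(x, y):
    """Exact product of two square matrices stored as lists of rows."""
    size = len(x)
    return [[sum(x[j][m] * y[m][k] for m in range(size))
             for k in range(size)] for j in range(size)]


def trace(x):
    """Sum of the diagonal entries."""
    return sum(x[j][j] for j in range(len(x)))


zero = [[Fraction(0)] * n for _ in range(n)]

# Each A_i is a projection: A_i squared equals A_i.
idempotent = matmul(A1, A1) == A1 and matmul(A2, A2) == A2
# The two projections annihilate each other.
orthogonal = matmul(A1, A2) == zero
# For a symmetric idempotent matrix the rank equals the trace.
r1, r2 = trace(A1), trace(A2)
```

Exact fractions are used so that the equality checks are not affected by floating-point rounding.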

See also

- Cramér's theorem, on decomposing a normal distribution

- Infinite divisibility (probability)

References

- [1] Cochran, W. G. (April 1934). "The distribution of quadratic forms in a normal system, with applications to the analysis of covariance". Mathematical Proceedings of the Cambridge Philosophical Society 30 (2): 178–191. doi:10.1017/S0305004100016595.

- [2] Bapat, R. B. (2000). Linear Algebra and Linear Models (Second ed.). Springer. ISBN 978-0-387-98871-9.

- [3] Craig, A. T. (1938). "On the Independence of Certain Estimates of Variances". Annals of Mathematical Statistics 9: 48–55.

- [4] Geary, R. C. (1936). "The Distribution of the "Student's" Ratio for the Non-Normal Samples". Supplement to the Journal of the Royal Statistical Society 3 (2): 178–184. doi:10.2307/2983669. JFM 63.1090.03. JSTOR 2983669.
