Change of basis

In linear algebra, a basis for a vector space of dimension n is a sequence of n vectors (α1, …, αn) with the property that every vector in the space can be expressed uniquely as a linear combination of the basis vectors. The matrix representations of operators are also determined by the chosen basis. Since it is often desirable to work with more than one basis for a vector space, it is of fundamental importance in linear algebra to be able to easily transform coordinate-wise representations of vectors and operators taken with respect to one basis to their equivalent representations with respect to another basis. Such a transformation is called a change of basis.

Although the terminology of vector spaces is used below and the symbol R can be taken to mean the field of real numbers, the results discussed hold whenever R is a commutative ring and vector space is everywhere replaced with free R-module.

Preliminary notions

The standard basis for Rn is the ordered sequence (e1, …, en), where ej is the element of Rn with 1 in the jth place and 0s elsewhere.

If T : Rn → Rm is a linear transformation, the m × n matrix of T is the matrix t whose jth column is T(ej) for j = 1, …, n. In this case we have T(x) = tx for all x in Rn, where we regard x as a column vector and the multiplication on the right side is matrix multiplication. It is a basic fact in linear algebra that the vector space Hom(Rn, Rm) of all linear transformations from Rn to Rm is naturally isomorphic to the space Rm × n of m × n matrices over R; that is, a linear transformation T : Rn → Rm is for all intents and purposes equivalent to its matrix t.
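The construction of t from the images T(ej) can be sketched in a few lines of Python. This is only an illustration: the map `T` below is a hypothetical example, and the helper names `standard_basis`, `matrix_of` and `apply` are not taken from any library.

```python
from fractions import Fraction

def standard_basis(n):
    """Return e_1, ..., e_n, each as a list of n exact rationals."""
    return [[Fraction(int(i == j)) for i in range(n)] for j in range(n)]

def matrix_of(T, n):
    """Matrix (list of rows) whose j-th column is T(e_j)."""
    columns = [T(e) for e in standard_basis(n)]
    m = len(columns[0])
    return [[columns[j][i] for j in range(n)] for i in range(m)]

def apply(t, x):
    """Matrix-vector product t x."""
    return [sum(row[j] * x[j] for j in range(len(x))) for row in t]

# Hypothetical linear map T : R^3 -> R^2, T(x, y, z) = (x + 2y, 3z - y).
def T(v):
    x, y, z = v
    return [x + 2 * y, 3 * z - y]

t = matrix_of(T, 3)                      # a 2 x 3 matrix
x = [Fraction(1), Fraction(2), Fraction(3)]
assert apply(t, x) == T(x)               # T(x) = t x for this x
```

The final assertion checks the identity T(x) = tx for one sample vector only; it is not a proof.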

We will also make use of the following simple observation.

Theorem Let V and W be vector spaces, let {α1, …, αn} be a basis for V, and let {γ1, …, γn} be any n vectors in W. Then there exists a unique linear transformation T : V → W with T(αj) = γj for j = 1, …, n.

This unique T is defined by T(x1α1 + … + xnαn) = x1γ1 + … + xnγn. Of course, if {γ1, …, γn} happens to be a basis for W, then T is bijective as well as linear; in other words, T is an isomorphism. If in this case we also have W = V, then T is said to be an automorphism.

Now let V be a vector space over R and suppose {α1, …, αn} is a basis for V. By definition, if ξ is a vector in V then ξ = x1α1 + … + xnαn for a unique choice of scalars x1, …, xn in R called the coordinates of ξ relative to the ordered basis {α1, …, αn}. The vector x = (x1, …, xn) in Rn is called the coordinate tuple of ξ (relative to this basis). The unique linear map φ : Rn → V with φ(ej) = αj for j = 1, …, n is called the coordinate isomorphism for V and the basis {α1, …, αn}. Thus φ(x) = ξ if and only if ξ = x1α1 + … + xnαn.

Matrix of a set of vectors

A set of vectors can be represented by a matrix in which each column consists of the components of the corresponding vector of the set. As a basis is a set of vectors, a basis can be given by a matrix of this kind. Later it will be shown that the change of basis of any object of the space is related to this matrix. For example, the coordinates of vectors transform by its inverse (vectors are therefore called contravariant objects).

Change of coordinates of a vector

First we examine the question of how the coordinates of a vector ξ, in the vector space V, change when we select another basis.

Two dimensions

Given a matrix M whose columns are the vectors of the new basis of the space (the new basis matrix), the new coordinates of a column vector v are given by the matrix product M−1v. For this reason, ordinary vectors are said to be contravariant objects.

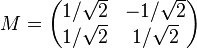

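Any finite set of vectors can be represented by a matrix whose columns are the coordinates of the given vectors. As an example in dimension 2, consider the pair of vectors obtained by rotating the standard basis counterclockwise by 45°. The matrix whose columns are the coordinates of these vectors is

$$M = \begin{pmatrix} \cos 45^\circ & -\sin 45^\circ \\ \sin 45^\circ & \cos 45^\circ \end{pmatrix} = \frac{1}{\sqrt{2}} \begin{pmatrix} 1 & -1 \\ 1 & 1 \end{pmatrix}.$$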

If we want to change any vector of the space to this new basis, we only need to left-multiply its components by the inverse of this matrix.
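As a rough numerical illustration, take the basis obtained by rotating the standard basis counterclockwise by 45°, whose matrix has columns (cos 45°, sin 45°) and (−sin 45°, cos 45°). The sketch below computes the new coordinates of the sample vector v = (1, 1); the helper names and the choice of v are assumptions made for this example.

```python
import math

def inverse_2x2(m):
    """Inverse of a 2 x 2 matrix given as a list of rows."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def apply(m, v):
    """Matrix-vector product for a 2 x 2 matrix."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]

s = 1 / math.sqrt(2)
M = [[s, -s],
     [s,  s]]          # columns are the rotated basis vectors

v = [1.0, 1.0]          # sample vector, in standard coordinates
new_coords = apply(inverse_2x2(M), v)

# v lies along the first rotated basis vector, so its new coordinates
# are approximately (sqrt(2), 0), up to floating-point rounding.
reconstructed = apply(M, new_coords)
```

Multiplying the new coordinates by M again recovers v, which is a quick sanity check that the inverse was applied on the correct side.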

Three dimensions

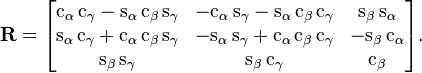

For example, let a new basis be given by its Euler angles. The matrix of the basis has as its columns the components of each basis vector; its explicit form in terms of the angles is given in the Euler angles article.

Again, any vector of the space can be changed to this new basis by left-multiplying its components by the inverse of this matrix.

General case

Suppose {α1, …, αn} and {α′1, …, α′n} are two ordered bases for V. Let φ1 and φ2 be the corresponding coordinate isomorphisms (linear maps) from Rn to V, i.e. φ1(ej) = αj and φ2(ej) = α′j for j = 1, …, n.

If x = (x1, …, xn) is the coordinate n-tuple of ξ with respect to the first basis, so that ξ = φ1(x), then the coordinate tuple of ξ with respect to the second basis is φ2−1(ξ) = φ2−1(φ1(x)). Now the map φ2−1 ∘ φ1 is an automorphism on Rn and therefore has a matrix p. Moreover, the jth column of p is φ2−1 ∘ φ1(ej) = φ2−1(αj), that is, the coordinate n-tuple of αj with respect to the second basis {α′1, …, α′n}. Thus y = φ2−1(φ1(x)) = px is the coordinate n-tuple of ξ with respect to the basis {α′1, …, α′n}.
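When V = R2 and each basis is stored as a matrix whose columns are the basis vectors, the coordinate isomorphisms are just multiplication by those matrices, so p can be computed as a product of an inverse and a matrix. The following sketch uses two hypothetical bases and exact rational arithmetic; the names `A`, `A_new` and the helpers are assumptions for illustration.

```python
from fractions import Fraction

def inverse_2x2(m):
    """Inverse of a 2 x 2 matrix given as a list of rows."""
    (a, b), (c, d) = m
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def apply(m, x):
    """Matrix-vector product m x."""
    return [sum(row[k] * x[k] for k in range(len(x))) for row in m]

# First basis: alpha_1 = (1, 0), alpha_2 = (1, 1)   (columns of A).
A = [[1, 1],
     [0, 1]]
# Second basis: alpha'_1 = (1, 1), alpha'_2 = (1, -1)   (columns of A_new).
A_new = [[1, 1],
         [1, -1]]

# phi1(x) = A x and phi2(x) = A_new x, so p is the matrix of phi2^-1 o phi1.
p = matmul(inverse_2x2(A_new), A)

x = [3, 2]            # coordinates of xi in the first basis
y = apply(p, x)       # coordinates of xi in the second basis

# Both coordinate tuples describe the same vector xi.
assert apply(A, x) == apply(A_new, y)
```

The jth column of `p` holds the coordinates of αj with respect to the second basis, in agreement with the description above.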

The matrix of a linear transformation

Now suppose T : V → W is a linear transformation, {α1, …, αn} is a basis for V and {β1, …, βm} is a basis for W. Let φ and ψ be the coordinate isomorphisms for V and W, respectively, relative to the given bases. Then the map T1 = ψ−1 ∘ T ∘ φ is a linear transformation from Rn to Rm, and therefore has a matrix t; its jth column is ψ−1(T(αj)) for j = 1, …, n. This matrix is called the matrix of T with respect to the ordered bases {α1, …, αn} and {β1, …, βm}. If η = T(ξ) and y and x are the coordinate tuples of η and ξ, then y = ψ−1(T(φ(x))) = tx. Conversely, if ξ is in V and x = φ−1(ξ) is the coordinate tuple of ξ with respect to {α1, …, αn}, and we set y = tx and η = ψ(y), then η = ψ(T1(x)) = T(ξ). That is, if ξ is in V and η is in W and x and y are their coordinate tuples, then y = tx if and only if η = T(ξ).
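A small example with spaces other than Rn may help. Take V to be the polynomials of degree at most 2 with basis (1, x, x²), W the polynomials of degree at most 1 with basis (1, x), and T differentiation. These choices, and the representation of a polynomial by its list of coefficients, are assumptions made for this sketch only.

```python
def derivative(poly):
    """Differentiate a polynomial given by coefficients [c0, c1, c2, ...]."""
    return [k * c for k, c in enumerate(poly)][1:]

def phi(x):
    """Coordinate isomorphism R^3 -> V for the basis (1, x, x^2)."""
    return list(x)

def psi_inv(poly):
    """Inverse coordinate isomorphism W -> R^2 for the basis (1, x)."""
    return (list(poly) + [0, 0])[:2]

def apply(t, x):
    """Matrix-vector product t x."""
    return [sum(row[j] * x[j] for j in range(len(x))) for row in t]

# The j-th column of t is psi^-1(T(alpha_j)).
basis_V = [phi(e) for e in ([1, 0, 0], [0, 1, 0], [0, 0, 1])]
columns = [psi_inv(derivative(alpha)) for alpha in basis_V]
t = [[columns[j][i] for j in range(3)] for i in range(2)]

# Check y = t x against eta = T(xi) for xi = 5 + 3x + 4x^2.
x = [5, 3, 4]
y = apply(t, x)
assert y == psi_inv(derivative(phi(x)))
```

Here the coordinate isomorphisms are nearly trivial because the monomial bases were chosen; with other bases `phi` and `psi_inv` would involve solving a linear system.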

Theorem Suppose U, V and W are vector spaces of finite dimension and an ordered basis is chosen for each. If T : U → V and S : V → W are linear transformations with matrices t and s, respectively, then the matrix of the linear transformation S ∘ T : U → W (with respect to the given bases) is st.

Change of basis

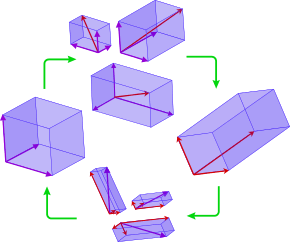

Now we ask what happens to the matrix of T : V → W when we change bases in V and W. Let {α1, …, αn} and {β1, …, βm} be ordered bases for V and W respectively, and suppose we are given a second pair of bases {α′1, …, α′n} and {β′1, …, β′m}. Let φ1 and φ2 be the coordinate isomorphisms taking the usual basis in Rn to the first and second bases for V, and let ψ1 and ψ2 be the isomorphisms taking the usual basis in Rm to the first and second bases for W.

Let T1 = ψ1−1 ∘ T ∘ φ1, and T2 = ψ2−1 ∘ T ∘ φ2 (both maps taking Rn to Rm), and let t1 and t2 be their respective matrices. Let p and q be the matrices of the change-of-coordinates automorphisms φ2−1 ∘ φ1 on Rn and ψ2−1 ∘ ψ1 on Rm.

The relationships of these various maps to one another are illustrated in the following commutative diagram.

Since we have T2 = ψ2−1 ∘ T ∘ φ2 = (ψ2−1 ∘ ψ1) ∘ T1 ∘ (φ1−1 ∘ φ2), and since composition of linear maps corresponds to matrix multiplication, it follows that

- t2 = q t1 p−1.

Since this transformation rule involves a basis matrix once and an inverse basis matrix once, these objects are said to be 1-co, 1-contra-variant.
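The formula t2 = q t1 p−1 can be checked on a concrete case. In the sketch below V = W = R2, the map T is given by a hypothetical matrix `M` in standard coordinates, and each basis is stored as a matrix whose columns are its vectors; all of the numbers and helper names are assumptions chosen for illustration.

```python
from fractions import Fraction

def inverse_2x2(m):
    """Inverse of a 2 x 2 matrix given as a list of rows."""
    (a, b), (c, d) = m
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

M = [[2, 1], [0, 3]]           # T in standard coordinates (hypothetical)
A = [[1, 1], [0, 1]]           # first basis of V, as columns
A_new = [[1, 1], [1, -1]]      # second basis of V, as columns
B = [[1, 0], [1, 1]]           # first basis of W, as columns
B_new = [[2, 1], [1, 1]]       # second basis of W, as columns

# t1 = psi1^-1 o T o phi1 and t2 = psi2^-1 o T o phi2, as matrices.
t1 = matmul(matmul(inverse_2x2(B), M), A)
t2 = matmul(matmul(inverse_2x2(B_new), M), A_new)

# Change-of-coordinates matrices on V and on W.
p = matmul(inverse_2x2(A_new), A)
q = matmul(inverse_2x2(B_new), B)

# The change-of-basis formula.
assert t2 == matmul(matmul(q, t1), inverse_2x2(p))
```

Exact rationals are used so that the equality test is not disturbed by floating-point rounding.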

The matrix of an endomorphism

An important case of the matrix of a linear transformation is that of an endomorphism, that is, a linear map from a vector space V to itself, so that W = V. We can naturally take {β1, …, βn} = {α1, …, αn} and {β′1, …, β′n} = {α′1, …, α′n}. The matrix of the linear map T is necessarily square.

Change of basis

We apply the same change of basis, so that q = p and the change of basis formula becomes

- t2 = p t1 p−1.

In this situation the invertible matrix p is called a change-of-basis matrix for the vector space V, and the equation above says that the matrices t1 and t2 are similar.

The matrix of a bilinear form

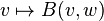

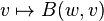

A bilinear form on a vector space V over a field R is a mapping V × V → R which is linear in both arguments. That is, B : V × V → R is bilinear if the maps

$$v \mapsto B(v, w) \quad \text{and} \quad v \mapsto B(w, v)$$

are linear for each w in V. This definition applies equally well to modules over a commutative ring with linear maps being module homomorphisms.

The Gram matrix G attached to a basis b1, …, bn is defined by

$$G_{ij} = B(b_i, b_j).$$

If v = x1b1 + … + xnbn and w = y1b1 + … + ynbn are the expressions of vectors v, w with respect to this basis, then the bilinear form is given by

$$B(v, w) = x^{\mathsf{T}} G \, y,$$

where x and y are the column vectors of coordinates of v and w.

The matrix will be symmetric if the bilinear form B is a symmetric bilinear form.

Change of basis

If P is the invertible matrix representing a change of basis from b1, …, bn to b′1, …, b′n (with the jth column of P taken to be the coordinates of b′j with respect to b1, …, bn), then the Gram matrix transforms by the matrix congruence

$$G' = P^{\mathsf{T}} G P.$$
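The congruence rule, written here as G′ = PᵀGP, can be tested on a small case. This sketch assumes that the jth column of P holds the coordinates of the new basis vector b′j with respect to the old basis, and it uses the ordinary dot product on R2 as a sample bilinear form; the bases and helper names are hypothetical.

```python
def transpose(m):
    """Transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def dot(v, w):
    """Sample symmetric bilinear form: the dot product."""
    return sum(a * b for a, b in zip(v, w))

def gram(form, basis):
    """Gram matrix with entries form(b_i, b_j)."""
    return [[form(bi, bj) for bj in basis] for bi in basis]

old_basis = [[1, 0], [1, 1]]           # b_1, b_2
P = [[1, 1],
     [1, -1]]                          # b'_1 = b_1 + b_2, b'_2 = b_1 - b_2

# New basis vectors b'_j = sum_i P[i][j] * b_i.
new_basis = [[sum(P[i][j] * old_basis[i][k] for i in range(2))
              for k in range(2)] for j in range(2)]

G = gram(dot, old_basis)
G_new = gram(dot, new_basis)

# Gram matrix of the new basis agrees with the congruence P^T G P.
assert G_new == matmul(matmul(transpose(P), G), P)
```

Both Gram matrices are symmetric here because the dot product is a symmetric bilinear form.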

Important instances

In abstract vector space theory the change of basis concept is innocuous; it seems to add little to science. Yet there are cases in associative algebras where a change of basis is sufficient to turn a caterpillar into a butterfly, figuratively speaking:

- In the split-complex number plane there is an alternative "diagonal basis". The standard hyperbola xx − yy = 1 becomes xy = 1 after the change of basis. Transformations of the plane that leave the hyperbolae in place correspond to each other, modulo a change of basis. The contextual difference is profound enough to then separate Lorentz boost from squeeze mapping. A panoramic view of the literature of these mappings can be taken using the underlying change of basis.

- With the 2 × 2 real matrices one finds the beginning of a catalogue of linear algebras due to Arthur Cayley. His associate James Cockle put forward in 1849 his algebra of coquaternions or split-quaternions, which are the same algebra as the 2 × 2 real matrices, just laid out on a different matrix basis. Once again it is the concept of change of basis that synthesizes Cayley’s matrix algebra and Cockle’s coquaternions.

- A change of basis turns a 2 × 2 complex matrix into a biquaternion.

See also

- Coordinate vector

- Integral transform, the continuous analogue of change of basis

- Active and passive transformation

External links

- MIT Linear Algebra Lecture on Change of Basis, from MIT OpenCourseWare

- Khan Academy Lecture on Change of Basis, from Khan Academy