Bussgang theorem

In mathematics, the Bussgang theorem is a theorem of stochastic analysis. The theorem states that the cross-correlation between a Gaussian signal and the same signal after it has passed through a nonlinear operation is equal, up to a constant, to the autocorrelation of the original signal. It was first published by Julian J. Bussgang in 1952 while he was at the Massachusetts Institute of Technology.[1]

Statement of the theorem

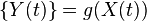

Let  be a zero-mean stationary Gaussian random process and

be a zero-mean stationary Gaussian random process and  where

where  is a nonlinear amplitude distortion.

is a nonlinear amplitude distortion.

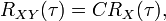

If  is the autocorrelation function of

is the autocorrelation function of  , then the cross-correlation function of

, then the cross-correlation function of  and

and  is

is

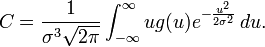

where  is a constant that depends only on

is a constant that depends only on  .

.

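It can be further shown that

$$C = \frac{1}{\sigma^3\sqrt{2\pi}} \int_{-\infty}^{\infty} u\, g(u)\, e^{-u^2/(2\sigma^2)}\, du,$$

where $\sigma^2$ is the variance of the Gaussian input process.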
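The statement can be checked numerically. The sketch below is an illustration under stated assumptions, not part of Bussgang's report: the input is taken to be a first-order autoregressive Gaussian process, the distortion is a hard limiter (the sign function), and the helper names `ar1_gaussian` and `correlation` are hypothetical. Evaluating the theorem at zero lag gives the constant as the mean of the input times its distorted version, divided by the input variance; for the hard limiter this is $\sqrt{2/\pi}/\sigma$. The estimated ratio of cross-correlation to autocorrelation should stay close to that value at every lag.

```python
import numpy as np


def ar1_gaussian(n, rho, sigma, rng):
    """Zero-mean stationary Gaussian AR(1) process with variance sigma**2.

    Assumed test signal: its autocorrelation is sigma**2 * rho**|lag|.
    """
    x = np.empty(n)
    x[0] = sigma * rng.standard_normal()
    # Innovation scale chosen so the process variance stays at sigma**2.
    innov = sigma * np.sqrt(1.0 - rho**2) * rng.standard_normal(n)
    for k in range(1, n):
        x[k] = rho * x[k - 1] + innov[k]
    return x


def correlation(a, b, lag):
    """Sample estimate of E[a(t) * b(t + lag)] for lag >= 0."""
    n = len(a) - lag
    return float(np.mean(a[:n] * b[lag:lag + n]))


rng = np.random.default_rng(0)
sigma, rho = 2.0, 0.9
x = ar1_gaussian(400_000, rho, sigma, rng)
y = np.sign(x)  # hard limiter as the nonlinear amplitude distortion

# Constant predicted for the hard limiter: E[|X|] / sigma**2.
c_theory = np.sqrt(2.0 / np.pi) / sigma

lags = [0, 1, 2, 5]
ratios = [correlation(x, y, k) / correlation(x, x, k) for k in lags]

for k, r in zip(lags, ratios):
    print(f"lag {k}: estimated ratio {r:.4f}, predicted constant {c_theory:.4f}")
```

The ratios are sample estimates, so they fluctuate around the predicted constant; the agreement tightens as the record length grows and loosens at lags where the autocorrelation itself is small.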

Application

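This theorem implies that a simplified correlator can be designed. Instead of multiplying two signals, one signal can first be passed through a simple nonlinearity such as a hard limiter, so that the cross-correlation problem reduces to the gating of one signal with another. By the theorem, the result differs from the true correlation only by a constant factor.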
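A gated correlator of this kind might be sketched as follows. The example is hypothetical: it assumes a white Gaussian reference, a received signal that is a delayed copy of the reference plus independent Gaussian noise, and a hard limiter on the reference. It also relies on the two signals being jointly Gaussian, which extends the single-process statement above. The function names `gated_correlation` and `product_correlation` are illustrative only.

```python
import numpy as np


def product_correlation(ref, sig, lag):
    """Conventional estimate of E[ref(t) * sig(t + lag)] using multiplications."""
    n = len(ref) - lag
    return float(np.mean(ref[:n] * sig[lag:lag + n]))


def gated_correlation(ref, sig, lag):
    """Estimate of E[sign(ref(t)) * sig(t + lag)] using gating only.

    The sign of `ref` decides whether each sample of `sig` is added or
    subtracted, so no sample-by-sample multiplication is needed.
    """
    n = len(ref) - lag
    gate = ref[:n] >= 0.0
    s = sig[lag:lag + n]
    return float((s[gate].sum() - s[~gate].sum()) / n)


rng = np.random.default_rng(1)
n, true_delay = 100_000, 7

ref = rng.standard_normal(n)  # assumed white Gaussian reference
# Delayed copy plus noise; np.roll wraps the first few samples, which is
# negligible at this record length.
sig = np.roll(ref, true_delay) + 0.5 * rng.standard_normal(n)

lags = range(15)
prod = np.array([product_correlation(ref, sig, k) for k in lags])
gated = np.array([gated_correlation(ref, sig, k) for k in lags])

# Hard-limiter constant sqrt(2/pi) / sigma, with sigma estimated from ref.
c = np.sqrt(2.0 / np.pi) / ref.std()

delay_prod = int(np.argmax(prod))
delay_gated = int(np.argmax(gated))
print("delay from product correlator:", delay_prod)
print("delay from gated correlator:  ", delay_gated)
print("peak values (product, gated / c):", prod[delay_prod], gated[delay_gated] / c)
```

Both estimators should peak at the same lag, and dividing the gated output by the constant brings it onto the scale of the product correlator. The gated version trades some statistical efficiency for much simpler arithmetic.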

References

- J. J. Bussgang, "Cross-correlation function of amplitude-distorted Gaussian signals", Res. Lab. Elec., Mass. Inst. Technol., Cambridge MA, Tech. Rep. 216, March 1952.

Further reading

- E.W. Bai; V. Cerone; D. Regruto (2007) "Separable inputs for the identification of block-oriented nonlinear systems", Proceedings of the 2007 American Control Conference (New York City, July 11–13, 2007) 1548–1553