Bonferroni correction

In statistics, the Bonferroni correction is a method used to counteract the problem of multiple comparisons. It is considered the simplest and most conservative method to control the familywise error rate.

It is named after Italian mathematician Carlo Emilio Bonferroni[1] for the use of Bonferroni inequalities, but modern usage is credited to Olive Jean Dunn, who first used it in a pair of articles written in 1959[2] and 1961.[3]

Informal introduction

Statistical hypothesis testing is based on rejecting the null hypothesis if the likelihood of the observed data under the null hypothesis is low. The problem of multiplicity arises because, as the number of hypotheses tested increases, so does the likelihood of witnessing a rare event, and with it the chance of rejecting a null hypothesis that is in fact true (a type I error).

The Bonferroni correction is based on the idea that if an experimenter is testing m dependent or independent hypotheses on a set of data, then one way of maintaining the familywise error rate (FWER) is to test each individual hypothesis at a statistical significance level of 1/m times what it would be if only one hypothesis were tested.

So, if the desired significance level for the whole family of tests should be (at most) α, then the Bonferroni correction would test each individual hypothesis at a significance level of α/m. For example, if a trial is testing eight hypotheses with a desired α = 0.05, then the Bonferroni correction would test each individual hypothesis at α = 0.05/8 = 0.00625.
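The arithmetic in this example can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `bonferroni_reject` and the eight p-values are hypothetical and chosen only to show the per-test threshold α/m in use.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Return the per-test threshold alpha/m and one decision per p-value."""
    m = len(p_values)
    threshold = alpha / m  # each individual test is run at level alpha/m
    return threshold, [p <= threshold for p in p_values]


# Hypothetical p-values for the eight hypotheses in the example above.
p_values = [0.001, 0.004, 0.0065, 0.012, 0.03, 0.049, 0.2, 0.7]
threshold, rejected = bonferroni_reject(p_values, alpha=0.05)
print(threshold)  # 0.00625
print(rejected)   # only the first two p-values fall below the threshold
```

Note that six of these p-values are below 0.05 and would be declared significant without correction, while only two survive the corrected threshold.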

Statistically significant simply means that a given result is unlikely to have occurred by chance assuming the null hypothesis is actually correct (i.e., no difference among groups, no effect of treatment, no relation among variables).

Definition

Let  be a family of hypotheses and

be a family of hypotheses and  the corresponding p-values. Let

the corresponding p-values. Let  be the (unknown) subset of the true null hypotheses, having

be the (unknown) subset of the true null hypotheses, having  members.

members.

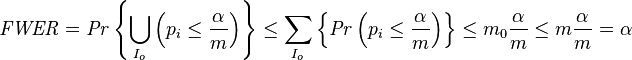

The familywise error rate is the probability of rejecting at least one of the members in  ; that is, to make one or more type I error. The Bonferroni Correction states that choosing all

; that is, to make one or more type I error. The Bonferroni Correction states that choosing all  will control the

will control the  . The proof follows from Boole's inequality:

. The proof follows from Boole's inequality:

This result does not require that the tests be independent.
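The bound can be checked numerically. The following is a minimal simulation sketch, assuming for simplicity that all m null hypotheses are true and that the tests are independent, so that each p-value is uniform on [0, 1] (the bound itself does not need independence). The function name `estimate_fwer`, the choice m = 8, and the number of trials are illustrative assumptions.

```python
import random


def estimate_fwer(m, per_test_level, trials=20000, seed=0):
    """Estimate P(at least one false rejection) when all m nulls are true."""
    rng = random.Random(seed)
    runs_with_false_rejection = 0
    for _ in range(trials):
        # Under a true null hypothesis, a p-value is uniform on [0, 1].
        p_values = [rng.random() for _ in range(m)]
        if any(p <= per_test_level for p in p_values):
            runs_with_false_rejection += 1
    return runs_with_false_rejection / trials


m, alpha = 8, 0.05
uncorrected = estimate_fwer(m, per_test_level=alpha)
corrected = estimate_fwer(m, per_test_level=alpha / m)
print(f"uncorrected FWER ~ {uncorrected:.3f}")
print(f"Bonferroni FWER  ~ {corrected:.3f}")
```

Under these assumptions the exact values are 1 − 0.95^8 ≈ 0.337 without correction and 1 − (1 − 0.05/8)^8 ≈ 0.049 with it, so the simulated estimates should land close to those figures.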

Modifications

Generalization

We have used the fact that $\sum_{i=1}^{m} \frac{\alpha}{m} = \alpha$, but the correction can be generalized and applied to any set of per-hypothesis levels $\alpha_i$ with $\sum_{i=1}^{m} \alpha_i = \alpha$, as long as the weights are defined prior to the test.
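A weighted version might look like the sketch below, which assumes the analyst has fixed, before seeing the data, one significance level per hypothesis and that these levels sum to α. The function name `weighted_bonferroni`, the particular split of α, and the p-values are all hypothetical.

```python
import math


def weighted_bonferroni(p_values, levels):
    """Reject hypothesis i when p_values[i] <= levels[i]."""
    if len(p_values) != len(levels):
        raise ValueError("need exactly one level per hypothesis")
    return [p <= level for p, level in zip(p_values, levels)]


alpha = 0.05
# Hypothetical split fixed in advance: the first hypothesis gets most of alpha.
levels = [0.03, 0.01, 0.01]
assert math.isclose(sum(levels), alpha)  # the levels must sum to alpha

p_values = [0.02, 0.02, 0.004]  # hypothetical p-values
decisions = weighted_bonferroni(p_values, levels)
print(decisions)  # [True, False, True]
```

The same p-value (0.02) is rejected for the first hypothesis but not for the second, because the two were allotted different shares of α.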

Confidence intervals

The Bonferroni correction can be used to adjust confidence intervals. If we are forming $m$ confidence intervals and wish to have an overall confidence level of $1 - \alpha$, then adjusting each individual confidence interval to the level of $1 - \frac{\alpha}{m}$ is the analogous confidence interval correction.
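As an illustration, the sketch below builds Bonferroni-adjusted intervals under the added assumption that each estimate is approximately normal with a known standard error, so that a two-sided interval is estimate ± z × standard error. The helper names and the numerical inputs are hypothetical; `statistics.NormalDist` is from the Python standard library (3.8+).

```python
from statistics import NormalDist


def bonferroni_z(m, alpha=0.05):
    """Two-sided normal critical value for each of m simultaneous intervals."""
    per_interval_alpha = alpha / m  # each interval has level 1 - alpha/m
    return NormalDist().inv_cdf(1 - per_interval_alpha / 2)


def adjusted_interval(estimate, std_error, m, alpha=0.05):
    """Normal-theory interval at individual level 1 - alpha/m."""
    z = bonferroni_z(m, alpha)
    return estimate - z * std_error, estimate + z * std_error


# Hypothetical estimates and standard errors for m = 5 parameters.
estimates = [1.2, -0.4, 0.9, 2.1, 0.0]
std_errors = [0.3, 0.2, 0.5, 0.4, 0.1]
m = len(estimates)
for est, se in zip(estimates, std_errors):
    low, high = adjusted_interval(est, se, m)
    print(f"{est:+.2f}: [{low:.3f}, {high:.3f}]")
```

With m = 5 and α = 0.05 each interval is built at the 99% level, so the critical value rises from about 1.96 to about 2.58 and every interval becomes wider.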

Simultaneous inference and selective inference

The Bonferroni correction is the most basic form of simultaneous inference, which aims to control the familywise error rate. Substantial statistical research was done in the field from the early 1960s until the late 1990s, and many improvements were proposed. Most notable are the Holm–Bonferroni method, which offers a uniformly more powerful test procedure (i.e., more powerful regardless of the values of the unobservable parameters), and the Hochberg (1988) method, which is guaranteed to be no less powerful than the Holm–Bonferroni method, and is in many cases more powerful, when the tests are independent (and also under some forms of positive dependence).

In 1995 Benjamini and Hochberg proposed controlling the false discovery rate instead of the familywise error rate and performing selective inference corrections. This approach responds to the technological advances of the late twentieth century and gives researchers better tools for large-scale inference, which was considered one of the weak points of simultaneous inference methodologies.

Alternatives

This list includes only some of the alternatives that control the familywise error rate.

Holm–Bonferroni method

A uniformly more powerful test procedure (i.e., more powerful regardless of the values of the unobservable parameters) than the Bonferroni correction.
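To make the comparison concrete, here is a minimal sketch of Holm's step-down procedure alongside the plain Bonferroni rule. It assumes the usual formulation in which the k-th smallest p-value is compared with α/(m − k + 1) and testing stops at the first failure; the function names and p-values are hypothetical.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm's step-down procedure; returns one decision per hypothesis."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    rejected = [False] * m
    for rank, i in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is compared
        # with alpha / (m - k + 1), which equals alpha / (m - rank).
        if p_values[i] > alpha / (m - rank):
            break  # first failure: this and all larger p-values are retained
        rejected[i] = True
    return rejected


def bonferroni_decisions(p_values, alpha=0.05):
    """Plain Bonferroni: every p-value is compared with alpha / m."""
    return [p <= alpha / len(p_values) for p in p_values]


p_values = [0.001, 0.004, 0.008, 0.012, 0.03, 0.049, 0.2, 0.7]  # hypothetical
bonferroni = bonferroni_decisions(p_values)
holm = holm_reject(p_values)
print(sum(bonferroni), "rejections with Bonferroni")
print(sum(holm), "rejections with Holm")
```

On these inputs Bonferroni rejects two hypotheses and Holm rejects three: the third p-value (0.008) misses 0.05/8 but passes Holm's threshold of 0.05/6. Holm's first threshold is exactly α/m, so it never rejects fewer hypotheses than Bonferroni.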

Šidák correction

A slightly more powerful test procedure than the Bonferroni correction, testing each hypothesis at level $1 - (1 - \alpha)^{1/m}$ instead of $\alpha/m$. Unlike the Bonferroni correction, it is exact only when the tests are independent.
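The size of the gain can be seen by comparing the two per-test levels directly. The sketch below uses the standard Šidák level 1 − (1 − α)^(1/m), which is derived under the assumption that the m tests are independent; the function names are illustrative.

```python
def bonferroni_level(m, alpha=0.05):
    """Per-test level under the Bonferroni correction."""
    return alpha / m


def sidak_level(m, alpha=0.05):
    """Per-test level solving 1 - (1 - level)**m = alpha (independent tests)."""
    return 1 - (1 - alpha) ** (1 / m)


for m in (2, 8, 100):
    print(f"m = {m:>3}: Bonferroni {bonferroni_level(m):.6f}, "
          f"Sidak {sidak_level(m):.6f}")
```

For m = 8 and α = 0.05 the levels are 0.00625 and roughly 0.00639, so the Šidák level is always the larger of the two but only marginally so.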

Criticisms

The Bonferroni correction can be conservative if there are a large number of tests and/or the test statistics are positively correlated. It controls only the probability of false positives. The correction ordinarily comes at the cost of increasing the probability of false negatives, and consequently of reducing statistical power. When testing a large number of hypotheses, this can result in large critical values.
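The effect on critical values and power can be illustrated with a two-sided z-test. This sketch assumes a test statistic that is normal with unit variance and a mean equal to a hypothetical standardized effect of 3.0; the function names are illustrative, and `statistics.NormalDist` is from the Python standard library (3.8+).

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def critical_z(m, alpha=0.05):
    """Two-sided z critical value when each of m tests is run at alpha/m."""
    return STD_NORMAL.inv_cdf(1 - alpha / (2 * m))


def power(m, effect, alpha=0.05):
    """P(|Z| > critical value) when Z is Normal(effect, 1)."""
    c = critical_z(m, alpha)
    return (1 - STD_NORMAL.cdf(c - effect)) + STD_NORMAL.cdf(-c - effect)


effect = 3.0  # hypothetical standardized effect size
for m in (1, 10, 100, 1000):
    print(f"m = {m:>4}: critical |z| = {critical_z(m):.2f}, "
          f"power = {power(m, effect):.2f}")
```

As m grows, the critical value climbs steadily from about 1.96, and the probability of detecting the same fixed effect falls with it.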

Another criticism concerns the concept of a family of hypotheses. The statistical community has not reached a consensus on how to define such a family; currently it is defined subjectively for each analysis. Because there is no standard definition, test results may change dramatically merely by changing which hypotheses are grouped into a family.

See also

- Bonferroni inequalities

- Familywise error rate

- Holm–Bonferroni method

- Multiple testing

References

- [1] Bonferroni, C. E. (1936). Teoria statistica delle classi e calcolo delle probabilità. Pubblicazioni del R Istituto Superiore di Scienze Economiche e Commerciali di Firenze.

- [2] Dunn, Olive Jean (1959). "Estimation of the Medians for Dependent Variables". The Annals of Mathematical Statistics. http://projecteuclid.org/download/pdf_1/euclid.aoms/1177706374

- [3] Dunn, Olive Jean (1961). "Multiple Comparisons Among Means". Journal of the American Statistical Association. http://sci2s.ugr.es/keel/pdf/algorithm/articulo/1961-Bonferroni_Dunn-JASA.pdf

- Abdi, H. (2007). "Bonferroni and Šidák corrections for multiple comparisons". In Salkind, N. J. Encyclopedia of Measurement and Statistics (PDF). Thousand Oaks, CA: Sage.

- Manitoba Centre for Health Policy (2008). "Concept: Multiple Comparisons".

- Dunn, O. J. (1961). "Multiple Comparisons Among Means". Journal of the American Statistical Association 56 (293): 52–64. doi:10.1080/01621459.1961.10482090.

- Dunnett, C. W. (1955). "A multiple comparisons procedure for comparing several treatments with a control". Journal of the American Statistical Association 50 (272): 1096–1121. doi:10.1080/01621459.1955.10501294.

- Dunnett, C. W. (1964). "New tables for multiple comparisons with a control". Biometrics 20 (3): 482–491. JSTOR 2528490.

- Perneger, Thomas V. (1998). "What's wrong with Bonferroni adjustments". British Medical Journal 316 (7139): 1236–1238. doi:10.1136/bmj.316.7139.1236. See also the Rapid Response to this suggesting much of it is mistaken.

- School of Psychology, University of New England, New South Wales, Australia, 2000, http://www.une.edu.au/WebStat/unit_materials/c5_inferential_statistics/bonferroni.html

- Weisstein, Eric W., "Bonferroni correction", MathWorld.

- Shaffer, J. P. (1995). "Multiple Hypothesis Testing". Annual Review of Psychology 46: 561–584. doi:10.1146/annurev.ps.46.020195.003021.

- Strassburger, K.; Bretz, Frank (2008). "Compatible simultaneous lower confidence bounds for the Holm procedure and other Bonferroni-based closed tests". Statistics in Medicine 27 (24): 4914–4927. doi:10.1002/sim.3338.

- Šidák, Z. (1967). "Rectangular confidence regions for the means of multivariate normal distributions". Journal of the American Statistical Association 62 (318): 626–633. doi:10.1080/01621459.1967.10482935.

- Hochberg, Yosef (1988). "A Sharper Bonferroni Procedure for Multiple Tests of Significance" (PDF). Biometrika 75 (4): 800–802. doi:10.1093/biomet/75.4.800.