Binary logarithm

In mathematics, the binary logarithm (log2 n) is the logarithm to the base 2. It is the inverse function of the power of two function. The binary logarithm of n is the power to which the number 2 must be raised to obtain the value n. That is:

$$x = \log_2 n \quad\Longleftrightarrow\quad 2^x = n.$$

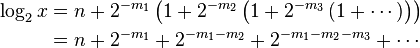

For example, the binary logarithm of 1 is 0, the binary logarithm of 2 is 1, the binary logarithm of 4 is 2, the binary logarithm of 8 is 3, the binary logarithm of 16 is 4 and the binary logarithm of 32 is 5.

The binary logarithm is closely connected to the binary numeral system. Historically, the first application of binary logarithms was in music theory, by Leonhard Euler. Other areas in which the binary logarithm is frequently used include information theory, combinatorics, computer science, bioinformatics, the design of sports tournaments, and photography.

History

A table of powers of two published by Michael Stifel in 1544 can also be interpreted (by reversing its rows) as being a table of binary logarithms.[1][2] The application of binary logarithms to music theory was established by Leonhard Euler in 1739, long before information theory and computer science became disciplines of study. As part of his work in this area, Euler included a table of binary logarithms of the integers from 1 to 8, to seven decimal digits of accuracy.[3][4]

Notation

In mathematics, the binary logarithm of a number n is written as log2 n. However, several other notations for this function have been used or proposed, especially in application areas.

Some authors write the binary logarithm as lg n.[5][6] Donald Knuth credits this notation to a suggestion of Edward Reingold,[7] but its use in both information theory and computer science dates to before Reingold was active.[8][9] The binary logarithm has also been written as log n, with a prior statement that the default base for the logarithm is 2.[10][11][12]

Another notation that is sometimes used for the same function (especially in the German language) is ld n, from Latin logarithmus duālis.[13] The ISO 31-11 and ISO 80000-2 specifications recommend yet another notation, lb n; in this specification, lg n is instead reserved for log10 n. However, the ISO notation has not come into common use.

Applications

Information theory

The number of digits (bits) in the binary representation of a positive integer n is the integral part of 1 + log2 n, i.e.[6]

$$\lfloor \log_2 n \rfloor + 1$$
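As a small illustration, the following Python sketch counts binary digits with the built-in `int.bit_length` method and compares the result with the floating-point formula. The helper name `bits_needed` is hypothetical and chosen only for this example.

```python
import math

def bits_needed(n: int) -> int:
    """Number of binary digits of a positive integer n, i.e. floor(log2 n) + 1."""
    if n <= 0:
        raise ValueError("n must be a positive integer")
    # int.bit_length() is exact for arbitrarily large integers, whereas
    # floating-point math.log2 can round incorrectly near large powers of two.
    return n.bit_length()

for n in (1, 2, 5, 8, 255, 256):
    # For these small values the floating-point formula agrees with the exact count.
    assert bits_needed(n) == math.floor(math.log2(n)) + 1
    print(n, bin(n)[2:], bits_needed(n))
```

For very large integers the exact integer method is the safer choice, since the floating-point logarithm is only an approximation.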

In information theory, the definition of the amount of self-information and information entropy is often expressed with the binary logarithm, corresponding to making the bit be the fundamental unit of information. However, the natural logarithm and the nat are also used in alternative notations for these definitions.[14]
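A minimal Python sketch of these two quantities follows, assuming the standard definitions (self-information of an event with probability p is −log2 p, and entropy is the expected self-information). The function names are hypothetical.

```python
import math
from typing import Sequence

def self_information_bits(p: float) -> float:
    """Self-information -log2(p) of an event with probability p, in bits."""
    return -math.log2(p)

def entropy_bits(probabilities: Sequence[float]) -> float:
    """Shannon entropy -sum(p * log2 p) of a distribution, in bits."""
    # Outcomes with probability 0 are skipped: they contribute nothing.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

print(self_information_bits(1 / 8))   # an event with probability 1/8
print(entropy_bits([0.5, 0.5]))       # a fair coin
print(entropy_bits([0.25] * 4))       # uniform choice among four outcomes
```

Replacing `math.log2` with `math.log` in the same functions would give the corresponding values in nats rather than bits.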

Combinatorics

Although the natural logarithm is more important than the binary logarithm in many areas of pure mathematics such as number theory and mathematical analysis, the binary logarithm has several applications in combinatorics:

- Every binary tree with n leaves has height at least $\log_2 n$, with equality when n is a power of two and the tree is a complete binary tree.[15]

- Every family of sets with n different sets has at least $\log_2 n$ elements in its union, with equality when the family is a power set.[16]

- Every partial cube with n vertices has isometric dimension at least $\log_2 n$, and at most $\tfrac{1}{2} n \log_2 n$ edges, with equality when the partial cube is a hypercube graph.[17]

- According to Ramsey's theorem, every n-vertex undirected graph has either a clique or an independent set of size logarithmic in n. The precise size that can be guaranteed is not known, but the best bounds known on its size involve binary logarithms. In particular, all graphs have a clique or independent set of size at least $\tfrac{1}{2} \log_2 n \,(1 - o(1))$ and almost all graphs do not have a clique or independent set of size larger than $2 \log_2 n \,(1 + o(1))$.[18]

- From a mathematical analysis of the Gilbert–Shannon–Reeds model of random shuffles, one can show that the number of times one needs to shuffle an n-card deck of cards, using riffle shuffles, to get a distribution on permutations that is close to uniformly random, is approximately $\tfrac{3}{2} \log_2 n$. This calculation forms the basis for a recommendation that 52-card decks should be shuffled seven times.[19]

Computational complexity

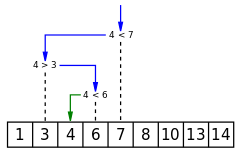

The binary logarithm also frequently appears in the analysis of algorithms,[12] not only because of the frequent use of binary number arithmetic in algorithms, but also because binary logarithms occur in the analysis of algorithms based on two-way branching.[7] If a problem initially has n choices for its solution, and each iteration of the algorithm reduces the number of choices by a factor of two, then the number of iterations needed to select a single choice is again the integral part of log2 n. This idea is used in the analysis of several algorithms and data structures. For example, in binary search, the size of the problem to be solved is halved with each iteration, and therefore roughly log2 n iterations are needed to obtain a problem of size 1, which is solved easily in constant time. Similarly, a perfectly balanced binary search tree containing n elements has height log2 n + 1.
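The halving argument can be checked empirically. The sketch below is an illustrative binary search (the function name `binary_search_steps` is hypothetical) that also counts loop iterations, so the worst case can be compared with the number of bits of n.

```python
from typing import Sequence

def binary_search_steps(items: Sequence[int], target: int) -> tuple[int, int]:
    """Search a sorted sequence; return (index or -1, number of loop iterations)."""
    lo, hi = 0, len(items) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            lo = mid + 1   # discard the lower half
        else:
            hi = mid - 1   # discard the upper half
    return -1, steps

n = 1024
data = list(range(n))
# Worst case over all successful searches in a sorted list of n items.
worst = max(binary_search_steps(data, t)[1] for t in data)
print(worst, n.bit_length())
```

In this run the worst case equals floor(log2 n) + 1, which is within one of the "roughly log2 n" estimate given above.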

However, the running time of an algorithm is usually expressed in big O notation, ignoring constant factors. Since $\log_2 n = (\log_k n)/(\log_k 2)$, where k can be any number greater than 1, algorithms that run in $O(\log_2 n)$ time can also be said to run in, say, $O(\log_{13} n)$ time. The base of the logarithm in expressions such as O(log n) or O(n log n) is therefore not important.[5] In other contexts, though, the base of the logarithm needs to be specified. For example, $O(2^{\log_2 n})$ is not the same as $O(2^{\ln n})$ because the former is equal to $O(n)$ and the latter to $O(n^{0.6931\ldots})$.
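Both effects can be seen numerically. The following Python sketch uses an arbitrary sample value of n, chosen only for illustration: changing the base multiplies a logarithm by a constant, but changing the base inside an exponent changes the power of n.

```python
import math

n = 1_000_000  # arbitrary sample size

# Changing the base rescales the logarithm by a constant factor (here log2 13).
ratio = math.log2(n) / math.log(n, 13)
print(ratio, math.log2(13))

# Inside an exponent the base is no longer a harmless constant factor.
linear = 2 ** math.log2(n)      # equals n up to rounding
sublinear = 2 ** math.log(n)    # equals n ** ln(2), about n ** 0.6931
print(linear, sublinear, n ** math.log(2))
```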

Algorithms with running time O(n log n) are sometimes called linearithmic.[20] Some examples of algorithms with running time O(log n) or O(n log n) are:

- Average time quicksort and other comparison sort algorithms[21]

- Searching in balanced binary search trees[22]

- Exponentiation by squaring[23]

- Longest increasing subsequence[24]

Binary logarithms also occur in the exponents of the time bounds for some divide and conquer algorithms, such as the Karatsuba algorithm for multiplying n-bit numbers in time $O(n^{\log_2 3})$.[25]
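The exponent in the Karatsuba bound can be traced to the algorithm's recurrence. As a sketch, assume the usual formulation in which one n-bit multiplication is replaced by three multiplications of roughly n/2-bit numbers plus a linear amount of work for additions and shifts (this recurrence is not spelled out in the text above). Unrolling it over the $\log_2 n$ levels of recursion gives:

$$T(n) = 3\,T(n/2) + O(n) \quad\Longrightarrow\quad T(n) = O\!\left(3^{\log_2 n}\right) = O\!\left(n^{\log_2 3}\right) \approx O\!\left(n^{1.585}\right)$$

The middle step uses the identity $3^{\log_2 n} = n^{\log_2 3}$, which follows from taking binary logarithms of both sides.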

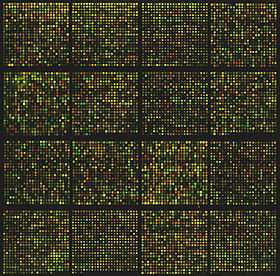

Bioinformatics

In the analysis of microarray data in bioinformatics, expression rates of genes are often compared by using the binary logarithm of the ratio of expression rates. By using base 2 for the logarithm, a doubled expression rate can be described by a log ratio of 1, a halved expression rate can be described by a log ratio of −1, and an unchanged expression rate can be described by a log ratio of zero, for instance.[26] Data points obtained in this way are often visualized as a scatterplot in which one or both of the coordinate axes are binary logarithms of intensity ratios, or in visualizations such as the MA plot and RA plot which rotate and scale these log ratio scatterplots.[27]
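A minimal Python sketch of the log ratio follows. The function name and the sample expression values are hypothetical and do not come from any real data set.

```python
import math

def log2_ratio(treated: float, control: float) -> float:
    """Binary logarithm of the ratio of two positive expression rates."""
    if treated <= 0 or control <= 0:
        raise ValueError("expression rates must be positive")
    return math.log2(treated / control)

print(log2_ratio(200.0, 100.0))  # doubled expression rate
print(log2_ratio(50.0, 100.0))   # halved expression rate
print(log2_ratio(100.0, 100.0))  # unchanged expression rate
```

The symmetry of the scale is the practical benefit: a doubling and a halving have the same magnitude with opposite signs.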

Music theory

In music theory, the interval or perceptual difference between two tones is determined by the ratio of their frequencies. Intervals coming from rational number ratios with small numerators and denominators are perceived as particularly euphonious. The simplest and most important of these intervals is the octave, a frequency ratio of 2:1. The number of octaves by which two tones differ is the binary logarithm of their frequency ratio.[28]

In order to study tuning systems and other aspects of music theory requiring finer distinctions between tones, it is helpful to have a measure of the size of an interval that is finer than an octave and is additive (as logarithms are) rather than multiplicative (as frequency ratios are). That is, if tones x, y, and z form a rising sequence of tones, then the measure of the interval from x to y plus the measure of the interval from y to z should equal the measure of the interval from x to z. Such a measure is given by the cent, which divides the octave into 1200 equal intervals (12 semitones of 100 cents each). Mathematically, given tones with frequencies $f_1$ and $f_2$, the number of cents in the interval from $f_1$ to $f_2$ is[28]

$$\left| 1200 \log_2 \frac{f_1}{f_2} \right|$$

The millioctave is defined in the same way, but with a multiplier of 1000 instead of 1200.
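As an illustration, the sketch below computes interval sizes in cents and millioctaves, assuming the size is the absolute value of the multiplier times the binary logarithm of the frequency ratio. The function name and the sample frequencies are chosen for this example only.

```python
import math

def interval_size(f1: float, f2: float, units_per_octave: float = 1200.0) -> float:
    """Size of the interval between frequencies f1 and f2 (cents by default)."""
    return abs(units_per_octave * math.log2(f2 / f1))

octave = interval_size(440.0, 880.0)   # frequency ratio 2:1
fifth = interval_size(440.0, 660.0)    # frequency ratio 3:2
millioctaves = interval_size(440.0, 880.0, units_per_octave=1000.0)
print(octave, round(fifth, 3), millioctaves)

# Additivity: a fifth (3:2) followed by a fourth (4:3) spans an octave (2:1).
fourth = interval_size(660.0, 880.0)
print(round(fifth + fourth, 6))
```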

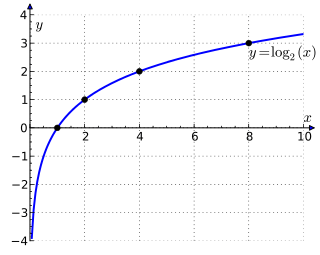

Sports scheduling

In competitive games and sports involving two players or teams in each game or match, the binary logarithm indicates the number of rounds necessary in a single-elimination tournament in order to determine a winner. For example, a tournament of 4 players requires log2(4) = 2 rounds to determine the winner, a tournament of 32 teams requires log2(32) = 5 rounds, etc. In this case, for n players/teams where n is not a power of 2, log2 n is rounded up since it will be necessary to have at least one round in which not all remaining competitors play. For example, log2(6) is approximately 2.585, which rounds up to 3, indicating that a tournament of 6 teams requires 3 rounds (either two teams sit out the first round, or one team sits out the second round). The same number of rounds is also necessary to determine a clear winner in a Swiss-system tournament.[29]
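The rounded-up logarithm can be computed without floating point. The following Python sketch (with a hypothetical function name) relies on the fact that, for n ≥ 1, the bit length of n − 1 equals the binary logarithm of n rounded up.

```python
def rounds_needed(competitors: int) -> int:
    """Rounds in a single-elimination tournament: log2(n) rounded up."""
    if competitors < 1:
        raise ValueError("need at least one competitor")
    # (n - 1).bit_length() equals ceil(log2 n) for every integer n >= 1.
    return (competitors - 1).bit_length()

for n in (4, 6, 32):
    print(n, rounds_needed(n))
```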

Photography

In photography, exposure values are measured in terms of the binary logarithm of the amount of light reaching the film or sensor, in accordance with the Weber–Fechner law describing a logarithmic response of the human visual system to light. A single stop of exposure is one unit on a base-2 logarithmic scale.[30][31] More precisely, the exposure value of a photograph is defined as

$$\mathrm{EV} = \log_2 \frac{N^2}{t}$$

where $N$ is the f-number measuring the aperture of the lens during the exposure, and $t$ is the number of seconds of exposure.
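A short Python sketch of this definition follows, assuming the standard formula EV = log2(N²/t), with N the f-number and t the exposure time in seconds. The function name and sample settings are illustrative.

```python
import math

def exposure_value(f_number: float, seconds: float) -> float:
    """Exposure value log2(N**2 / t) for f-number N and exposure time t in seconds."""
    if f_number <= 0 or seconds <= 0:
        raise ValueError("f-number and exposure time must be positive")
    return math.log2(f_number ** 2 / seconds)

ev_base = exposure_value(4.0, 1 / 4)    # f/4 at 1/4 second
ev_faster = exposure_value(4.0, 1 / 8)  # same aperture, half the exposure time
print(ev_base, ev_faster, ev_faster - ev_base)
```

Halving the exposure time raises the exposure value by exactly one, which is the "one stop" step described above.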

Binary logarithms (expressed as stops) are also used in densitometry, to express the dynamic range of light-sensitive materials or digital sensors.[32]

Calculation

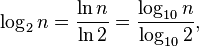

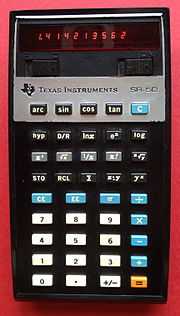

Conversion from other bases

An easy way to calculate log2 n on calculators that do not have a log2 function is to use the natural logarithm (ln) or the common logarithm (log) functions, which are found on most scientific calculators. The specific change of logarithm base formulae for this are:[31][33]

$$\log_2 n = \frac{\ln n}{\ln 2} = \frac{\log_{10} n}{\log_{10} 2}$$

or approximately

$$\log_2 n \approx 1.442695 \ln n \approx 3.321928 \log_{10} n$$
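The same change of base can be written in Python, treating `math.log` and `math.log10` as stand-ins for the calculator's ln and log keys. The constant and function names below are hypothetical.

```python
import math

LOG2_E = 1 / math.log(2)      # about 1.442695
LOG2_10 = 1 / math.log10(2)   # about 3.321928

def log2_from_ln(n: float) -> float:
    """Binary logarithm via the natural logarithm."""
    return math.log(n) * LOG2_E

def log2_from_log10(n: float) -> float:
    """Binary logarithm via the common (base-10) logarithm."""
    return math.log10(n) * LOG2_10

for n in (2.0, 10.0, 1000.0):
    print(n, log2_from_ln(n), log2_from_log10(n), math.log2(n))
```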

Integer rounding

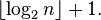

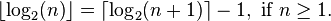

The binary logarithm can be made into a function from integers to integers by rounding it up or down. These two forms of integer binary logarithm are related by this formula:[34]

$$\lfloor \log_2 n \rfloor = \lceil \log_2 (n + 1) \rceil - 1, \quad \text{if } n \ge 1.$$

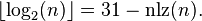

The definition can be extended by defining $\lfloor \log_2 0 \rfloor = -1$. Extended in this way, this function is related to the number of leading zeros of the 32-bit unsigned binary representation of x, nlz(x):[34]

$$\lfloor \log_2 x \rfloor = 31 - \operatorname{nlz}(x)$$
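The following Python sketch illustrates the relationship, assuming the convention that the rounded-down logarithm of 0 is −1 and the relation floor(log2 x) = 31 − nlz(x) for 32-bit unsigned x. Python has no fixed-width integers, so `nlz32` is a hypothetical helper that emulates a 32-bit leading-zero count.

```python
def nlz32(x: int) -> int:
    """Number of leading zero bits in the 32-bit unsigned representation of x."""
    if not 0 <= x < 2 ** 32:
        raise ValueError("x must fit in 32 unsigned bits")
    return 32 - x.bit_length()

def floor_log2(x: int) -> int:
    """floor(log2 x) for 32-bit unsigned x, extended so that floor_log2(0) == -1."""
    return 31 - nlz32(x)

def ceil_log2(x: int) -> int:
    """ceil(log2 x) for x >= 1, using ceil(log2 x) = floor(log2(x - 1)) + 1."""
    if x < 1:
        raise ValueError("x must be at least 1")
    return floor_log2(x - 1) + 1

for x in (1, 5, 8, 9):
    print(x, nlz32(x), floor_log2(x), ceil_log2(x))
```

The extension to 0 is what lets the rounded-up form be computed from the rounded-down form even when x is 1.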

The integer binary logarithm can be interpreted as the zero-based index of the most significant 1 bit in the input. In this sense it is the complement of the find first set operation, which finds the index of the least significant 1 bit. Many hardware platforms include support for finding the number of leading zeros, or equivalent operations, which can be used to quickly find the binary logarithm; see find first set for details. The fls and flsl functions in the Linux kernel[35] and in some versions of the libc software library also compute the binary logarithm (rounded down to an integer, plus one).

Recursive approximation

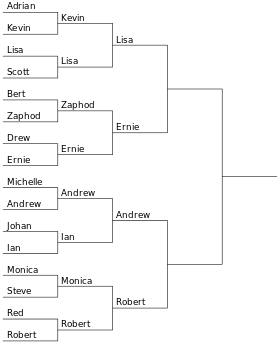

For a general positive real number, the binary logarithm may be computed in two parts:[36]

- Compute the integer part, $\lfloor \log_2 x \rfloor$ (called the characteristic of the logarithm)

- Compute the fractional part (the mantissa of the logarithm)

Computing the integer part is straightforward. For any x > 0, there exists a unique integer n such that $2^n \le x < 2^{n+1}$, or equivalently $1 \le 2^{-n}x < 2$. Now the integer part of the logarithm is simply n, and the fractional part is $\log_2(2^{-n}x)$.[36] In other words:

$$\log_2 x = n + \log_2 y \quad \text{where } y = 2^{-n}x \text{ and } y \in [1, 2)$$

The fractional part of the result is $\log_2 y$, and can be computed recursively, using only elementary multiplication and division.[36] To compute the fractional part:

1. Start with a real number $y$ in the half-open interval $[1, 2)$. If $y = 1$, then we are done and the fractional part is zero.

2. Otherwise, square $y$ repeatedly until the result $z$ lies in the interval $[2, 4)$. Let $m$ be the number of squarings needed. That is, $z = y^{2^m}$ with $m$ chosen such that $z$ is in $[2, 4)$.

3. Taking the logarithm of both sides and doing some algebra:

$$\begin{aligned} \log_2 z &= 2^m \log_2 y \\ \log_2 y &= \frac{\log_2 z}{2^m} = \frac{1 + \log_2(z/2)}{2^m} = 2^{-m} + 2^{-m} \log_2(z/2) \end{aligned}$$

4. Once again $z/2$ is a real number in the interval $[1, 2)$. Return to step 1, and compute the binary logarithm of $z/2$ using the same method recursively.

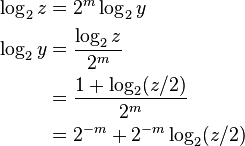

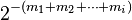

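The result of this is expressed by the following formulas, in which $m_i$ is the number of squarings required in the i-th recursion of the algorithm:

$$\begin{aligned} \log_2 x &= n + 2^{-m_1}\left(1 + 2^{-m_2}\left(1 + 2^{-m_3}\left(1 + \cdots\right)\right)\right) \\ &= n + 2^{-m_1} + 2^{-m_1-m_2} + 2^{-m_1-m_2-m_3} + \cdots \end{aligned}$$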

In the special case where the fractional part in step 1 is found to be zero, this is a finite sequence terminating at some point. Otherwise, it is an infinite series which converges according to the ratio test, since each term is strictly less than the previous one (since every $m_i > 0$). For practical use, this infinite series must be truncated to reach an approximate result. If the series is truncated after the i-th term, then the error in the result is less than $2^{-(m_1 + m_2 + \cdots + m_i)}$.
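The procedure can be sketched in Python as follows. This is an illustrative floating-point rendering rather than the implementation from the cited note: the function name, the iterative (not recursive) structure and the `terms` truncation parameter are assumptions made for this example.

```python
import math

def log2_by_squaring(x: float, terms: int = 20) -> float:
    """Approximate log2(x) for x > 0 using multiplication, division and comparison."""
    if x <= 0:
        raise ValueError("x must be positive")

    # Integer part n: scale x to y = 2**(-n) * x with 1 <= y < 2.
    n = 0
    y = x
    while y >= 2.0:
        y /= 2.0
        n += 1
    while y < 1.0:
        y *= 2.0
        n -= 1

    result = float(n)
    weight = 1.0  # running value of 2**-(m_1 + ... + m_i)
    for _ in range(terms):
        if y == 1.0:
            break  # the fractional part is exactly zero, so the sum is finite
        # Square y until it reaches [2, 4); each squaring halves the weight.
        while y < 2.0:
            y *= y
            weight /= 2.0
        result += weight  # add the term 2**-(m_1 + ... + m_i)
        y /= 2.0          # z / 2 lies in [1, 2) again
    return result

for x in (0.3, 3.0, 10.0):
    print(x, log2_by_squaring(x), math.log2(x))
```

Because every pass performs at least one squaring, truncating after 20 terms leaves an error below 2⁻²⁰ in exact arithmetic; floating-point rounding adds a small further error.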

An alternative algorithm that computes a single bit of the output in each iteration, using a sequence of shift and comparison operations to determine which bit to output, is also possible.[37]

Software library support

The log2 function is included in the standard C mathematical functions. The default version of this function takes double precision arguments but variants of it allow the argument to be single-precision or to be a long double.[38]

References

- ↑ Groza, Vivian Shaw; Shelley, Susanne M. (1972), Precalculus mathematics, New York: Holt, Rinehart and Winston, p. 182, ISBN 978-0-03-077670-0.

- ↑ Stifel, Michael (1544), Arithmetica integra (in Latin), p. 31. A copy of the same table with two more entries appears on p. 237, and another copy extended to negative powers appears on p. 249b.

- ↑ Euler, Leonhard (1739), "Chapter VII. De Variorum Intervallorum Receptis Appellationibus", Tentamen novae theoriae musicae ex certissimis harmoniae principiis dilucide expositae (in Latin), Saint Petersburg Academy, pp. 102–112.

- ↑ Tegg, Thomas (1829), "Binary logarithms", London encyclopaedia; or, Universal dictionary of science, art, literature and practical mechanics: comprising a popular view of the present state of knowledge, Volume 4, pp. 142–143.

- ↑ 5.0 5.1 Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L.; Stein, Clifford (2001) [1990], Introduction to Algorithms (2nd ed.), MIT Press and McGraw-Hill, pp. 34, 53–54, ISBN 0-262-03293-7.

- ↑ 6.0 6.1 Sedgewick, Robert; Wayne, Kevin Daniel (2011), Algorithms, Addison-Wesley Professional, p. 185, ISBN 9780321573513.

- ↑ 7.0 7.1 Knuth, Donald E. (1997), The Art of Computer Programming, Volume 1: Fundamental Algorithms (3rd ed.), Addison-Wesley Professional, ISBN 9780321635747, p. 11. The same notation was in the 1973 2nd edition of the same book (p. 23) but without the credit to Reingold.

- ↑ Trucco, Ernesto (1956), "A note on the information content of graphs", Bull. Math. Biophys. 18: 129–135, doi:10.1007/BF02477836, MR 0077919.

- ↑ Mitchell, John N. (1962), "Computer multiplication and division using binary logarithms", IRE Transactions on Electronic Computers, EC-11 (4): 512–517, doi:10.1109/TEC.1962.5219391.

- ↑ Fiche, Georges; Hebuterne, Gerard (2013), Mathematics for Engineers, John Wiley & Sons, p. 152, ISBN 9781118623336, "In the following, and unless otherwise stated, the notation log x always stands for the logarithm to the base 2 of x".

- ↑ Cover, Thomas M.; Thomas, Joy A. (2012), Elements of Information Theory (2nd ed.), John Wiley & Sons, p. 33, ISBN 9781118585771, "Unless otherwise specified, we will take all logarithms to base 2".

- ↑ 12.0 12.1 Goodrich, Michael T.; Tamassia, Roberto (2002), Algorithm Design: Foundations, Analysis, and Internet Examples, John Wiley & Sons, p. 23, "One of the interesting and sometimes even surprising aspects of the analysis of data structures and algorithms is the ubiquitous presence of logarithms ... As is the custom in the computing literature, we omit writing the base b of the logarithm when b = 2."

- ↑ For instance, see Bauer, Friedrich L. (2009), Origins and Foundations of Computing: In Cooperation with Heinz Nixdorf MuseumsForum, Springer Science & Business Media, p. 54, ISBN 9783642029929.

- ↑ Van der Lubbe, Jan C. A. (1997), Information Theory, Cambridge University Press, p. 3, ISBN 9780521467605.

- ↑ Leiss, Ernst L. (2006), A Programmer's Companion to Algorithm Analysis, CRC Press, p. 28, ISBN 9781420011708.

- ↑ Equivalently, a family with k distinct elements has at most $2^k$ distinct sets, with equality when it is a power set.

- ↑ Eppstein, David (2005), "The lattice dimension of a graph", European Journal of Combinatorics 26 (5): 585–592, arXiv:cs.DS/0402028, doi:10.1016/j.ejc.2004.05.001, MR 2127682.

- ↑ Graham, Ronald L.; Rothschild, Bruce L.; Spencer, Joel H. (1980), Ramsey Theory, Wiley-Interscience, p. 78.

- ↑ Bayer, Dave; Diaconis, Persi (1992), "Trailing the dovetail shuffle to its lair", The Annals of Applied Probability 2 (2): 294–313, doi:10.1214/aoap/1177005705, JSTOR 2959752, MR 1161056.

- ↑ Sedgewick & Wayne (2011), p. 186.

- ↑ Cormen et al., p. 156; Goodrich & Tamassia, p. 238.

- ↑ Cormen et al., p. 276; Goodrich & Tamassia, p. 159.

- ↑ Cormen et al., pp. 879–880; Goodrich & Tamassia, p. 464.

- ↑ Edmonds, Jeff (2008), How to Think About Algorithms, Cambridge University Press, p. 302, ISBN 9781139471756.

- ↑ Cormen et al., p. 844; Goodrich & Tamassia, p. 279.

- ↑ Causton, Helen; Quackenbush, John; Brazma, Alvis (2009), Microarray Gene Expression Data Analysis: A Beginner's Guide, John Wiley & Sons, pp. 49–50, ISBN 9781444311563.

- ↑ Eidhammer, Ingvar; Barsnes, Harald; Eide, Geir Egil; Martens, Lennart (2012), Computational and Statistical Methods for Protein Quantification by Mass Spectrometry, John Wiley & Sons, p. 105, ISBN 9781118493786.

- ↑ 28.0 28.1 Campbell, Murray; Greated, Clive (1994), The Musician's Guide to Acoustics, Oxford University Press, p. 78, ISBN 9780191591679.

- ↑ France, Robert (2008), Introduction to Physical Education and Sport Science, Cengage Learning, p. 282, ISBN 9781418055295.

- ↑ Allen, Elizabeth; Triantaphillidou, Sophie (2011), The Manual of Photography, Taylor & Francis, p. 228, ISBN 9780240520377.

- ↑ 31.0 31.1 Davis, Phil (1998), Beyond the Zone System, CRC Press, p. 17, ISBN 9781136092947.

- ↑ Zwerman, Susan; Okun, Jeffrey A. (2012), Visual Effects Society Handbook: Workflow and Techniques, CRC Press, p. 205, ISBN 9781136136146.

- ↑ Bauer, Craig P. (2013), Secret History: The Story of Cryptology, CRC Press, p. 332, ISBN 9781466561861.

- ↑ 34.0 34.1 Warren Jr., Henry S. (2002). Hacker's Delight. Addison Wesley. p. 215. ISBN 978-0-201-91465-8.

- ↑ fls, Linux kernel API, kernel.org, retrieved 2010-10-17.

- ↑ 36.0 36.1 36.2 Majithia, J. C.; Levan, D. (1973), "A note on base-2 logarithm computations", Proceedings of the IEEE 61 (10): 1519–1520, doi:10.1109/PROC.1973.9318.

- ↑ Kostopoulos, D. K. (1991), "An algorithm for the computation of binary logarithms", IEEE Transactions on Computers 40 (11): 1267–1270, doi:10.1109/12.102831.

- ↑ "7.12.6.10 The log2 functions", ISO/IEC 9899:1999 specification, p. 226.