Bayesian game

In game theory, a Bayesian game is one in which players have incomplete information about the characteristics of the other players (for example, their payoffs). Following John C. Harsanyi's framework,[1] a Bayesian game can be modelled by introducing Nature as a player in the game. Nature assigns to each player a random variable whose possible values are that player's types, and associates probabilities or a probability density function with those types; in the course of the game, Nature randomly chooses a type for each player according to the probability distribution over that player's type space. Modelling a Bayesian game in this way turns a game of incomplete information into a game of imperfect information (one in which the history of the game is not available to all players). The type of a player determines that player's payoff function, and the probability associated with a type is the probability that the player is of that type. In a Bayesian game, the incompleteness of information means that at least one player is unsure of the type (and so the payoff function) of another player.

Such games are called Bayesian because of the probabilistic analysis inherent in the game. Players have initial beliefs about the type of each player (where a belief is a probability distribution over the possible types for a player) and can update their beliefs according to Bayes' rule as play takes place, i.e. the belief a player holds about another player's type might change on the basis of the actions that other player has taken. The lack of information held by players and the modelling of beliefs mean that such games are also used to analyse imperfect information scenarios.
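The belief update described above can be sketched in a few lines of Python. This is only an illustration: the type names, the prior and the action probabilities are hypothetical numbers chosen for the example, and `bayes_update` is a helper name introduced here, not part of any library.

```python
from fractions import Fraction


def bayes_update(prior, likelihood, action):
    """Posterior belief over types after observing `action`.

    prior:      dict type -> P(type)
    likelihood: dict type -> dict action -> P(action | type)
    """
    joint = {t: prior[t] * likelihood[t].get(action, 0) for t in prior}
    total = sum(joint.values())
    if total == 0:
        # The observed action has probability zero, so Bayes' rule is silent.
        raise ValueError("Bayes' rule does not apply to a zero-probability action")
    return {t: p / total for t, p in joint.items()}


# Hypothetical prior and type-dependent behaviour (assumptions for illustration).
prior = {"strong": Fraction(1, 4), "weak": Fraction(3, 4)}
likelihood = {
    "strong": {"fight": Fraction(4, 5), "yield": Fraction(1, 5)},
    "weak": {"fight": Fraction(1, 5), "yield": Fraction(4, 5)},
}

posterior = bayes_update(prior, likelihood, "fight")
print(posterior)
```

Under these assumed numbers, observing "fight" raises the probability placed on the "strong" type from 1/4 to 4/7.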

Specification of games

The normal form representation of a non-Bayesian game (a game of complete information) is a specification of the strategy spaces and payoff functions of players. A strategy for a player is a complete plan of action that covers every contingency of the game, even if that contingency can never arise. The strategy space of a player is thus the set of all strategies available to that player. A payoff function is a function from the set of strategy profiles to the set of payoffs (normally the set of real numbers), where a strategy profile is a vector specifying a strategy for every player.

In a Bayesian game, it is necessary to specify the strategy spaces, type spaces, payoff functions and beliefs for every player. A strategy for a player is a complete plan of action that covers every contingency that might arise for every type that player might be. A strategy must specify not only the actions of the player given the type that he is, but also the actions that he would take if he were of another type. Strategy spaces are defined as above. A type space for a player is just the set of all possible types of that player. The beliefs of a player describe the uncertainty of that player about the types of the other players. Each belief is the probability of the other players having particular types, given the type of the player with that belief (i.e. the belief is $p(t_{-i} \mid t_i)$). A payoff function is a 2-place function of strategy profiles and types. If a player has payoff function $U(x, y)$ and he has type $t$, the payoff he receives is $U(x^*, t)$, where $x^*$ is the strategy profile played in the game (i.e. the vector of strategies played).

One of the formal definitions of such a game looks like the following:

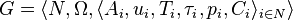

The game is defined as $G = \langle N, \Omega, \langle A_i, u_i, T_i, \tau_i, p_i, C_i \rangle_{i \in N} \rangle$, where

- $N$ is the set of players.
- $\Omega$ is the set of the states of nature. For instance, in a card game, it can be any order of the cards.
- $A_i$ is the set of actions for player $i$. Let $A = A_1 \times A_2 \times \dotsb \times A_N$.
- $T_i$ is the set of types of player $i$, decided by the function $\tau_i \colon \Omega \to T_i$. So for each state of nature, the game will have different types of players. The outcome of a player is what determines that player's type. Players with the same outcome belong to the same type.
- $C_i \subseteq A_i \times T_i$ defines the available actions for player $i$ of some type in $T_i$.
- $u_i \colon \Omega \times A \to \mathbb{R}$ is the payoff function for player $i$. More formally, let $L = \{(\omega, a_1, \dotsc, a_N) \mid \omega \in \Omega,\ (a_i, \tau_i(\omega)) \in C_i \text{ for all } i\}$, and $u_i \colon L \to \mathbb{R}$.
- $p_i$ is the probability distribution over $\Omega$ for each player $i$, that is to say, each player may have a different view of the probability distribution over the states of nature. In the game, they never know the exact state of nature.

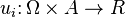

The pure strategy $s_i \colon T_i \to A_i$ should satisfy $(s_i(t_i), t_i) \in C_i$ for all $t_i$. So the strategy for each player depends only on his type, since he may not have any knowledge about other players' types. The expected payoff to player $i$ for such a strategy profile is $u_i(S) = E_{\omega \sim p_i}[u_i(\omega, s_1(\tau_1(\omega)), \dotsc, s_N(\tau_N(\omega)))]$.
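The expected payoff formula can be evaluated directly for a small finite game. The sketch below assumes a hypothetical two-state, two-player game in which the first player learns the state and the second does not; the state names, type labels, payoffs and the function name `expected_payoff` are all assumptions made for illustration.

```python
from fractions import Fraction


def expected_payoff(u_i, p_i, tau, strategies):
    """E over omega ~ p_i of u_i(omega, s_1(tau_1(omega)), ..., s_N(tau_N(omega)))."""
    total = Fraction(0)
    for omega, prob in p_i.items():
        # Every player acts on his own type tau_j(omega), never on omega itself.
        actions = tuple(s[t[omega]] for s, t in zip(strategies, tau))
        total += prob * u_i(omega, actions)
    return total


# Hypothetical data: beliefs of the uninformed player (index 1) over two states.
p_1 = {"good": Fraction(1, 2), "bad": Fraction(1, 2)}
# Type functions: player 0 observes the state, player 1 has a single type "U".
tau = [{"good": "G", "bad": "B"}, {"good": "U", "bad": "U"}]
# Pure strategies, written as maps from own type to action.
strategies = [{"G": "invest", "B": "wait"}, {"U": "invest"}]


def u_1(omega, actions):
    """Player 1 earns 2 if both invest, -1 if he invests alone, 0 if he waits."""
    if actions[1] == "wait":
        return 0
    return 2 if actions[0] == "invest" else -1


value = expected_payoff(u_1, p_1, tau, strategies)
print(value)
```

With these assumed numbers the uninformed player invests alongside the informed player in the good state (payoff 2) and alone in the bad state (payoff -1), so his expected payoff is 1/2.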

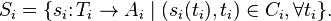

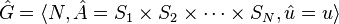

Let $S_i$ be the set of pure strategies of player $i$, $S_i = \{ s_i \colon T_i \to A_i \mid (s_i(t_i), t_i) \in C_i \text{ for all } t_i \}$.

A Bayesian equilibrium of the game $G$ is defined to be a (possibly mixed strategy) Nash equilibrium of the normal-form game $\hat{G}$ that has the same set of players $N$, the pure-strategy sets $S_i$ as action sets, and the expected payoffs $u_i(S)$ as payoff functions. So for any finite game $G$, Bayesian equilibria always exist.
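For a small finite game, the reduction to an ordinary normal-form game can be carried out by brute force: enumerate every map from types to actions, compute expected payoffs, and keep the profiles from which no player gains by deviating. The sketch below only searches pure strategies (the existence result above concerns possibly mixed equilibria, so a pure one need not exist in general), and the game, the common prior and all function names are hypothetical choices for illustration.

```python
from fractions import Fraction
from itertools import product


def pure_strategies(types, actions):
    """Enumerate every map type -> action as a dict (one pure strategy each)."""
    return [dict(zip(types, choice)) for choice in product(actions, repeat=len(types))]


def expected_payoff(i, profile, priors, tau, payoff):
    """Player i's expected payoff under his own prior priors[i] over states."""
    total = Fraction(0)
    for omega, prob in priors[i].items():
        acts = tuple(profile[j][tau[j][omega]] for j in range(len(profile)))
        total += prob * payoff(i, omega, acts)
    return total


def pure_bayesian_equilibria(strategy_sets, priors, tau, payoff):
    """Pure-strategy Nash equilibria of the induced normal-form game."""
    found = []
    for profile in product(*strategy_sets):
        def gains_by_deviating(i):
            current = expected_payoff(i, profile, priors, tau, payoff)
            return any(
                expected_payoff(i, profile[:i] + (alt,) + profile[i + 1:],
                                priors, tau, payoff) > current
                for alt in strategy_sets[i]
            )
        if not any(gains_by_deviating(i) for i in range(len(strategy_sets))):
            found.append(profile)
    return found


# Hypothetical game: player 1 knows the state, player 0 does not.
prior = {"a": Fraction(2, 3), "b": Fraction(1, 3)}
priors = [prior, prior]  # common prior (an assumption of this example)
tau = [{"a": "u", "b": "u"}, {"a": "a", "b": "b"}]


def payoff(i, omega, acts):
    if i == 0:  # player 0 wants to match player 1's action
        return 1 if acts[0] == acts[1] else 0
    target = "X" if omega == "a" else "Y"  # player 1 wants to match the state
    return 1 if acts[1] == target else 0


strategy_sets = [
    pure_strategies(["u"], ["X", "Y"]),
    pure_strategies(["a", "b"], ["X", "Y"]),
]
equilibria = pure_bayesian_equilibria(strategy_sets, priors, tau, payoff)
print(equilibria)
```

In this assumed game the informed player follows the state, and the uninformed player plays X because state "a" is the more likely one, which gives a single pure equilibrium. Enumeration grows exponentially in the number of types, so this approach is only practical for toy examples.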

Signaling

Signaling games constitute an example of Bayesian games. In such a game, the informed party (the “agent”) knows their type, whereas the uninformed party (the “principal”) does not know the (agent’s) type. In some such games, it is possible for the principal to deduce the agent's type based on the actions the agent takes (in the form of a signal sent to the principal) in what is known as a “separating equilibrium”.

A specific example of a signaling game is a model of the job market. The players are the applicant (agent) and the employer (principal). There are two types of applicant, skilled and unskilled. The employer does not know which type the applicant is, but does know that 90% of applicants are unskilled and 10% are skilled (type 'skilled' has a probability of 0.1 and type 'unskilled' has a probability of 0.9).

The employer's action space is the set of natural numbers, representing wages—these are used to form a contract based on how productive the applicant is expected to be. Paying larger wages to skilled workers will generate larger payoffs for employers, while wages given to unskilled workers will have a less pronounced effect. The payoff of the employer is determined thus by the skill of the applicant (if the applicant accepts a contract) and the wage paid. Crucially, the employer chooses his or her action (the wage offered) according to his or her belief as to how skilled the applicant is and this belief is largely determined through signals sent by the applicant.

The applicant's action space consists of two actions: either obtain a university education or abstain from university. It is less costly for a skilled worker to obtain an education, as he or she may receive scholarships, find classes less taxing, and so on. University education therefore serves as a signal, a means with which the applicant may communicate to the employer that he or she is, in fact, skilled.

One strategy the employer may use is to give all applicants a wage such that skilled applicants may attend university (due to its lower cost) but which is insufficient to provide university education for unskilled applicants. This creates a separating equilibrium: skilled applicants can now signify their skill by going to university, and unskilled applicants cannot. The employer can observe which workers are able to go to university, and can then maximize his or her payoff by providing high wages to skilled workers and low wages to unskilled.
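One common way to make the separating condition concrete is to compare each type's net payoff from attending university with the payoff from abstaining. The sketch below uses that simplified incentive check, which is an assumption of this example rather than the only way to formalise the model; the wages, education costs and the function name `is_separating` are hypothetical.

```python
def is_separating(wage_educated, wage_uneducated, cost_skilled, cost_unskilled):
    """Check whether only the skilled type wants to obtain an education.

    Assumes the employer pays `wage_educated` to applicants with a degree and
    `wage_uneducated` to applicants without one, and that each type simply
    compares the wage net of its own education cost.
    """
    skilled_attends = wage_educated - cost_skilled >= wage_uneducated
    unskilled_abstains = wage_uneducated >= wage_educated - cost_unskilled
    return skilled_attends and unskilled_abstains


# Hypothetical numbers: education is cheap for the skilled, expensive otherwise.
separating = is_separating(wage_educated=10, wage_uneducated=4,
                           cost_skilled=3, cost_unskilled=8)
# If education is only mildly costly for the unskilled, they imitate the skilled.
imitation = is_separating(wage_educated=10, wage_uneducated=4,
                          cost_skilled=3, cost_unskilled=5)
print(separating, imitation)
```

When the check succeeds, a university education reveals the applicant's type, so the employer's belief moves from the prior of 0.1 to certainty that an educated applicant is skilled.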

Bayesian Nash equilibrium

In a non-Bayesian game, a strategy profile is a Nash equilibrium if every strategy in that profile is a best response to every other strategy in the profile; i.e., there is no strategy that a player could play that would yield a higher payoff, given all the strategies played by the other players. In a Bayesian game (where players are modeled as risk-neutral), rational players are seeking to maximize their expected payoff, given their beliefs about the other players (in the general case, where players may be risk averse or risk-loving, the assumption is that players are expected utility-maximizing).

A Bayesian Nash equilibrium is defined as a strategy profile, together with beliefs specified for each player about the types of the other players, such that each player's strategy maximizes that player's expected payoff given their beliefs about the other players' types and given the strategies played by the other players.
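One standard way to write this condition is shown below. The notation is an assumption made here for illustration: $s_i^*$ is player $i$'s equilibrium strategy (a map from types to actions), $A_i$ is the action set, $t_{-i}$ denotes the types of the other players, and $p(t_{-i} \mid t_i)$ is the belief of player $i$ when of type $t_i$.

```latex
% For every player i and every type t_i, the prescribed action is a best
% response in expectation over the other players' types:
\[
  s_i^*(t_i) \in \arg\max_{a_i \in A_i}
  \sum_{t_{-i}} p(t_{-i} \mid t_i)\,
  u_i\bigl(a_i,\, s_{-i}^*(t_{-i});\, t_i,\, t_{-i}\bigr)
\]
```

The condition is imposed type by type, which is why a strategy has to specify an action even for types the player does not turn out to be.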

This solution concept yields an abundance of equilibria in dynamic games, when no further restrictions are placed on players' beliefs. This makes Bayesian Nash equilibrium an incomplete tool with which to analyze dynamic games of incomplete information.

Perfect Bayesian equilibrium

Bayesian Nash equilibrium results in some implausible equilibria in dynamic games, where players move sequentially rather than simultaneously. Implausible equilibria can arise here in the same way that implausible Nash equilibria arise in games of perfect and complete information, for example through incredible threats and promises. In perfect and complete information games, such equilibria might be eliminated by applying subgame perfect Nash equilibrium. However, it is not always possible to avail oneself of this solution concept in incomplete information games, because such games contain non-singleton information sets. Since subgames must contain complete information sets, sometimes there is only one subgame (the entire game), and so every Nash equilibrium is trivially subgame perfect. Even if a game does have more than one subgame, the inability of subgame perfection to cut through information sets can result in implausible equilibria not being eliminated.

To refine the equilibria generated by the Bayesian Nash solution concept or subgame perfection, one can apply the perfect Bayesian equilibrium (PBE) solution concept. PBE is in the spirit of subgame perfection in that it demands that subsequent play be optimal. However, it also places player beliefs on decision nodes, which enables moves in non-singleton information sets to be dealt with more satisfactorily.

So far in discussing Bayesian games, it has been assumed that information is perfect (or if imperfect, play is simultaneous). In examining dynamic games, however, it might be necessary to have the means to model imperfect information. PBE affords this means: players place beliefs on nodes occurring in their information sets, which means that the information set can be generated by nature (in the case of incomplete information) or by other players (in the case of imperfect information).

Belief systems

The beliefs held by players in Bayesian games can be approached more rigorously in PBE. A belief system is an assignment of probabilities to every node in the game such that the sum of probabilities in any information set is 1. The beliefs of a player are exactly those probabilities of the nodes in all the information sets at which that player has the move (a player's beliefs might be specified as a function from the union of his information sets to [0,1]). A belief system is consistent for a given strategy profile if and only if the probability assigned by the system to every node is computed as the probability of that node being reached given the strategy profile, conditional on its information set being reached, i.e. by Bayes' rule.
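The consistency requirement can be illustrated by normalising reach probabilities within each information set. In the sketch below the node labels, Nature's probabilities and the sender's mixed strategy are hypothetical, and `consistent_beliefs` is a helper name introduced for this example.

```python
from fractions import Fraction


def consistent_beliefs(information_set, reach_probability):
    """Beliefs over the nodes of one information set derived by Bayes' rule.

    Returns None when the information set is reached with probability zero,
    in which case Bayes' rule places no restriction on the beliefs.
    """
    total = sum(reach_probability[node] for node in information_set)
    if total == 0:
        return None
    return {node: reach_probability[node] / total for node in information_set}


# Hypothetical game: Nature draws "strong" with probability 1/4, "weak" with 3/4.
# Assumed sender strategy: strong always signals, weak signals with probability 1/3.
reach = {
    ("strong", "signal"): Fraction(1, 4) * 1,
    ("weak", "signal"): Fraction(3, 4) * Fraction(1, 3),
    ("strong", "silent"): Fraction(1, 4) * 0,
    ("weak", "silent"): Fraction(3, 4) * Fraction(2, 3),
}

after_signal = consistent_beliefs([("strong", "signal"), ("weak", "signal")], reach)
after_silence = consistent_beliefs([("strong", "silent"), ("weak", "silent")], reach)
print(after_signal, after_silence)
```

Under these assumed numbers the receiver is evenly split between the two types after a signal and is certain the sender is weak after silence.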

Sequential rationality

The notion of sequential rationality is what determines the optimality of subsequent play in PBE. A strategy profile is sequentially rational at a particular information set for a particular belief system if and only if the expected payoff of the player whose information set it is (i.e. who has the move at that information set) is maximal given the strategies played by all the other players and given the beliefs that the system assigns to the nodes of that information set. A strategy profile is sequentially rational for a particular belief system if it satisfies the above for every information set.
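At a single information set, the check amounts to comparing the belief-weighted expected payoff of the chosen action with that of every alternative. The sketch below assumes the payoffs already account for how play continues afterwards; the node names, payoffs and the function name `is_sequentially_rational` are hypothetical.

```python
from fractions import Fraction


def is_sequentially_rational(beliefs, payoff, actions, chosen):
    """True if `chosen` maximises expected payoff at this information set.

    beliefs: dict node -> probability placed on that node
    payoff:  dict node -> dict action -> payoff to the player moving here
    """
    def expected(action):
        return sum(prob * payoff[node][action] for node, prob in beliefs.items())

    return expected(chosen) == max(expected(action) for action in actions)


# Hypothetical receiver payoffs: fighting pays off only against a weak sender.
payoff = {
    "strong": {"fight": -2, "yield": 0},
    "weak": {"fight": 2, "yield": 0},
}
actions = ["fight", "yield"]

mostly_weak = {"strong": Fraction(1, 4), "weak": Fraction(3, 4)}
mostly_strong = {"strong": Fraction(3, 4), "weak": Fraction(1, 4)}

fight_when_weak = is_sequentially_rational(mostly_weak, payoff, actions, "fight")
fight_when_strong = is_sequentially_rational(mostly_strong, payoff, actions, "fight")
print(fight_when_weak, fight_when_strong)
```

The same action is optimal under one belief and suboptimal under the other, which is why sequential rationality is always stated relative to a belief system.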

Definition

A perfect Bayesian equilibrium is a strategy profile and a belief system such that the strategies are sequentially rational given the belief system and the belief system is consistent, wherever possible, given the strategy profile.

It is necessary to stipulate the 'wherever possible' clause because some information sets might not be reached with the given strategy profile, and hence Bayes' rule cannot be employed to calculate the probabilities at the nodes in those sets. Such information sets are said to be off the equilibrium path, and any beliefs can be assigned to them. Stronger notions of consistency further restrict the beliefs that can be assigned to off-equilibrium information sets to "reasonable" ones.

Example

Information in the game on the left is imperfect since player 2 does not know what player 1 does when he comes to play. If both players are rational and both know that both players are rational and everything that is known by any player is known to be known by every player (i.e. player 1 knows player 2 knows that player 1 is rational and player 2 knows this, etc. ad infinitum – common knowledge), play in the game will be as follows according to perfect Bayesian equilibrium:

Player 2 cannot observe player 1's move. Player 1 would like to fool player 2 into thinking he has played U when he has actually played D so that player 2 will play D' and player 1 will receive 3. In fact, there is a perfect Bayesian equilibrium where player 1 plays D and player 2 plays U' and player 2 holds the belief that player 1 will definitely play D (i.e. player 2 places a probability of 1 on the node reached if player 1 plays D). In this equilibrium, every strategy is rational given the beliefs held and every belief is consistent with the strategies played. In this case, the perfect Bayesian equilibrium is the only Nash equilibrium.

References

1. Harsanyi, John C., 1967/1968. "Games with Incomplete Information Played by Bayesian Players, I-III." Management Science 14 (3): 159-183 (Part I), 14 (5): 320-334 (Part II), 14 (7): 486-502 (Part III).