Autoencoder

| Machine learning and data mining |

|---|

|

| Problems |

| Clustering |

|

| Dimensionality reduction |

| Structured prediction |

| Anomaly detection |

|

| Neural nets |

| Theory |

|

|

An autoencoder, autoassociator or Diabolo network[1]:19 is an artificial neural network used for learning efficient codings.[2][3] The aim of an auto-encoder is to learn a compressed, distributed representation (encoding) for a set of data, typically for the purpose of dimensionality reduction. Autoencoder is based on the concept of Sparse coding proposed in a seminal paper by Olshausen et al. [4] in 1996.

Overview

Architecturally, the simplest form of the autoencoder is a feedforward, non-recurrent neural net that is very similar to the multilayer perceptron (MLP), with an input layer, an output layer and one or more hidden layers connecting them. The difference with the MLP is that in an autoencoder, the output layer has equally many nodes as the input layer, and instead of training it to predict some target value y given inputs x, an autoencoder is trained to reconstruct its own inputs x. I.e., the training algorithm can be summarized as

- For each input x,

- Do a feed-forward pass to compute activations at all hidden layers, then at the output layer to obtain an output x̂

- Measure the deviation of x̂ from the input x (typically using squared error, i)

- Backpropagate the error through the net and perform weight updates.

(This algorithm trains one sample at a time, but batch learning is also possible.)

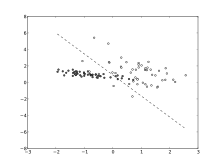

If the hidden layers are narrower (have fewer nodes) than the input/output layers, then the activations of the final hidden layer can be regarded as a compressed representation of the input. All the usual activation functions from MLPs can be used in autoencoders; if linear activations are used, or only a single sigmoid hidden layer, then the optimal solution to an auto-encoder is strongly related to principal component analysis (PCA).[5] When the hidden layers are larger than the input layer, an autoencoder can potentially learn the identity function and become useless; however, experimental results have shown that such autoencoders might still learn useful features in this case.[1]:19

Auto-encoders can also be used to learn overcomplete feature representations of data. They are the precursor to Deep belief networks.

Training

An auto-encoder is often trained using one of the many backpropagation variants (conjugate gradient method, steepest descent, etc.). Though often reasonably effective, there are fundamental problems with using backpropagation to train networks with many hidden layers. Once the errors get backpropagated to the first few layers, they are minuscule, and quite ineffectual. This causes the network to almost always learn to reconstruct the average of all the training data. Though more advanced backpropagation methods (such as the conjugate gradient method) help with this to some degree, it still results in very slow learning and poor solutions. This problem is remedied by using initial weights that approximate the final solution. The process to find these initial weights is often called pretraining.

A pretraining technique developed by Geoffrey Hinton for training many-layered "deep" auto-encoders involves treating each neighboring set of two layers like a restricted Boltzmann machine for pre-training to approximate a good solution and then using a backpropagation technique to fine-tune.[6]

References

- ↑ 1.0 1.1 Bengio, Y. (2009). "Learning Deep Architectures for AI". Foundations and Trends in Machine Learning 2. doi:10.1561/2200000006.

- ↑ Modeling word perception using the Elman network, Liou, C.-Y., Huang, J.-C. and Yang, W.-C., Neurocomputing, Volume 71, 3150–3157 (2008), doi:10.1016/j.neucom.2008.04.030

- ↑ Autoencoder for Words, Liou, C.-Y., Cheng, C.-W., Liou, J.-W., and Liou, D.-R., Neurocomputing, Volume 139, 84–96 (2014), doi:10.1016/j.neucom.2013.09.055

- ↑ Olshausen, Bruno A. "Emergence of simple-cell receptive field properties by learning a sparse code for natural images." Nature 381.6583 (1996): 607-609.

- ↑ Bourlard, H.; Kamp, Y. (1988). "Auto-association by multilayer perceptrons and singular value decomposition". Biological Cybernetics 59 (4–5): 291–294. doi:10.1007/BF00332918. PMID 3196773.

- ↑ Reducing the Dimensionality of Data with Neural Networks (Science, 28 July 2006, Hinton & Salakhutdinov)