Alternant matrix

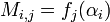

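In linear algebra, an alternant matrix is a matrix in which each column is obtained by applying one function to a common list of inputs, with a different function used for each column. An alternant determinant is the determinant of a square alternant matrix. Given functions $f_1, \dots, f_n$ and inputs $\alpha_1, \dots, \alpha_m$, such a matrix of size $m \times n$ may be written out as

$$M = \begin{bmatrix} f_1(\alpha_1) & f_2(\alpha_1) & \cdots & f_n(\alpha_1) \\ f_1(\alpha_2) & f_2(\alpha_2) & \cdots & f_n(\alpha_2) \\ \vdots & \vdots & \ddots & \vdots \\ f_1(\alpha_m) & f_2(\alpha_m) & \cdots & f_n(\alpha_m) \end{bmatrix}$$

or more succinctly

$$M_{i,j} = f_j(\alpha_i)$$

for all indices $i$ and $j$. (Some authors use the transpose of the above matrix.)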
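As a minimal sketch of this definition, the following Python snippet builds an alternant matrix from a list of functions and a list of inputs. The helper name `alternant_matrix` and the sample functions are illustrative assumptions, not part of any library.

```python
def alternant_matrix(funcs, alphas):
    """Return the m x n matrix whose (i, j) entry is funcs[j](alphas[i])."""
    # One row per input alpha_i, one column per function f_j.
    return [[f(a) for f in funcs] for a in alphas]


# Three sample functions (n = 3) evaluated at two inputs (m = 2).
funcs = [lambda x: 1, lambda x: x, lambda x: x * x + 1]
alphas = [2, 5]

M = alternant_matrix(funcs, alphas)
print(M)  # [[1, 2, 5], [1, 5, 26]]
```

Swapping the two loops would produce the transposed convention that some authors use.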

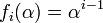

Examples of alternant matrices include Vandermonde matrices, for which $f_j(\alpha) = \alpha^{j-1}$, and Moore matrices, for which $f_j(\alpha) = \alpha^{q^{j-1}}$.
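To illustrate the Vandermonde case, the sketch below generates the power functions $f_j(x) = x^{j-1}$ and evaluates them at a few integer inputs. The helper names `alternant_matrix` and `vandermonde_functions` are hypothetical and chosen only for this example. A Moore matrix would follow the same pattern but needs finite-field arithmetic, which is not shown here.

```python
def alternant_matrix(funcs, alphas):
    """Return the matrix whose (i, j) entry is funcs[j](alphas[i])."""
    return [[f(a) for f in funcs] for a in alphas]


def vandermonde_functions(n):
    """Return f_1, ..., f_n with f_j(x) = x**(j - 1)."""
    # The default argument e=exponent freezes each exponent inside its lambda.
    return [lambda x, e=exponent: x ** e for exponent in range(n)]


alphas = [2, 3, 5]
V = alternant_matrix(vandermonde_functions(3), alphas)
print(V)  # [[1, 2, 4], [1, 3, 9], [1, 5, 25]]
```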

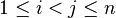

If $n = m$ and the functions $f_j(x)$ are all polynomials, there are some additional results: if $\alpha_i = \alpha_j$ for any $i < j$, then the determinant of any alternant matrix is zero (as a row is then repeated), so the determinant, viewed as a polynomial in the $\alpha_i$, is divisible by $(\alpha_j - \alpha_i)$ for all $1 \le i < j \le n$. As such, if one takes

$$V = \begin{bmatrix} 1 & \alpha_1 & \cdots & \alpha_1^{n-1} \\ 1 & \alpha_2 & \cdots & \alpha_2^{n-1} \\ \vdots & \vdots & \ddots & \vdots \\ 1 & \alpha_n & \cdots & \alpha_n^{n-1} \end{bmatrix}$$

(a Vandermonde matrix), then $\prod_{i < j} (\alpha_j - \alpha_i) = \det V$ divides such polynomial alternant determinants. The ratio $\frac{\det M}{\det V}$ is called a bialternant. The case where each function has the form $f_j(x) = x^{m_j}$ gives the classical definition of the Schur polynomials.
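The divisibility statement can be checked numerically on a small case. The sketch below, using hypothetical helper names `det` and `power_alternant`, compares the alternant for the exponents (0, 1, 3) with the Vandermonde matrix for the exponents (0, 1, 2) at integer inputs, and computes their ratio. It is an illustration under these assumptions rather than a general-purpose implementation: the cofactor expansion is exact for integers but only practical for small matrices.

```python
def det(matrix):
    """Exact determinant by cofactor expansion along the first row."""
    if len(matrix) == 0:
        return 1  # determinant of the empty matrix
    total = 0
    for col, entry in enumerate(matrix[0]):
        # Minor: drop the first row and the current column.
        minor = [row[:col] + row[col + 1:] for row in matrix[1:]]
        total += (-1) ** col * entry * det(minor)
    return total


def power_alternant(exponents, alphas):
    """Alternant matrix for the functions f_j(x) = x**exponents[j]."""
    return [[a ** e for e in exponents] for a in alphas]


alphas = [1, 2, 3]
V = power_alternant([0, 1, 2], alphas)  # Vandermonde matrix
M = power_alternant([0, 1, 3], alphas)  # another polynomial alternant

det_V, det_M = det(V), det(M)

# Product of (alpha_j - alpha_i) over all pairs i < j.
product = 1
for i in range(len(alphas)):
    for j in range(i + 1, len(alphas)):
        product *= alphas[j] - alphas[i]

bialternant = det_M // det_V
print(det_V, product, det_M, bialternant)  # 2 2 12 6
```

Here the ratio 6 equals 1 + 2 + 3, in line with the fact that the bialternant for these exponents is the Schur polynomial $\alpha_1 + \alpha_2 + \alpha_3$.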

Alternant matrices are used in coding theory in the construction of alternant codes.

References

- Thomas Muir (1960). A treatise on the theory of determinants. Dover Publications. pp. 321–363.

- A. C. Aitken (1956). Determinants and Matrices. Oliver and Boyd Ltd. pp. 111–123.

- Richard P. Stanley (1999). Enumerative Combinatorics. Cambridge University Press. pp. 334–342.