AVL tree

| AVL tree | ||

|---|---|---|

| Type | Tree | |

| Invented | 1962 | |

| Invented by | Georgy Adelson-Velsky and E. M. Landis | |

| Time complexity in big O notation | ||

| Average | Worst case | |

| Space | O(n) | O(n) |

| Search | O(log n) | O(log n) |

| Insert | O(log n) | O(log n) |

| Delete | O(log n) | O(log n) |

In computer science, an AVL tree (Georgy Adelson-Velsky and Landis' tree, named after the inventors) is a self-balancing binary search tree. It was the first such data structure to be invented.[1] In an AVL tree, the heights of the two child subtrees of any node differ by at most one; if at any time they differ by more than one, rebalancing is done to restore this property. Lookup, insertion, and deletion all take O(log n) time in both the average and worst cases, where n is the number of nodes in the tree prior to the operation. Insertions and deletions may require the tree to be rebalanced by one or more tree rotations.

The AVL tree is named after its two Soviet inventors, Georgy Adelson-Velsky and E. M. Landis, who published it in their 1962 paper "An algorithm for the organization of information".[2]

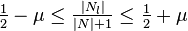

AVL trees are often compared with red-black trees because both support the same set of operations and take O(log n) time for the basic operations. For lookup-intensive applications, AVL trees are faster than red-black trees because they are more rigidly balanced.[3] Similar to red-black trees, AVL trees are height-balanced. Both are in general not weight-balanced nor μ-balanced for any  ;[4] that is, sibling nodes can have hugely differing numbers of descendants.

;[4] that is, sibling nodes can have hugely differing numbers of descendants.

Operations

Basic operations of an AVL tree involve carrying out the same actions as would be carried out on an unbalanced binary search tree, but modifications are followed by zero or more operations called tree rotations, which help to restore the height balance of the subtrees.

Searching

Searching for a specific key in an AVL Tree can be done the same way as that of a normal unbalanced Binary Search Tree.

Traversal

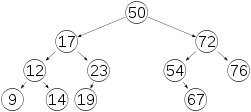

Once a node has been found in a balanced tree, the next or previous nodes can be explored in amortized constant time. Some instances of exploring these "nearby" nodes require traversing up to log(n) links (particularly when moving from the rightmost leaf of the root's left subtree to the root or from the root to the leftmost leaf of the root's right subtree; in the example AVL tree, moving from node 14 to the next but one node 19 takes 4 steps). However, exploring all n nodes of the tree in this manner would use each link exactly twice: one traversal to enter the subtree rooted at that node, another to leave that node's subtree after having explored it. And since there are n−1 links in any tree, the amortized cost is found to be 2×(n−1)/n, or approximately 2.

Insertion

After inserting a node, it is necessary to check each of the node's ancestors for consistency with the rules of AVL ("retracing"). The balance factor is calculated as follows:

balanceFactor = height(left subtree) - height(right subtree)

Since with a single insertion the height of an AVL subtree cannot increase by more than one, the temporary balance factor of a node will be in the range from −2 to +2. For each node checked, if the balance factor remains in the range from −1 to +1 then only corrections of the balance factor, but no rotations are necessary. However, if the balance factor becomes less than −1 or greater than +1, the subtree rooted at this node is unbalanced.

- Description of the Rotations

Let us first assume the balance factor of a node P is 2 (as opposed to the other possible unbalanced value −2). This case is depicted in the left column of the illustration with P:=5. We then look at the left subtree (the higher one) with root N. If this subtree does not lean to the right - i.e. N has balance factor 1 (or, when deletion also 0) - we can rotate the whole tree to the right to get a balanced tree. This is labelled as the "Left Left Case" in the illustration with N:=4. If the subtree does lean to the right - i.e. N:=3 has balance factor −1 - we first rotate the subtree to the left and end up the previous case. This second case is labelled as "Left Right Case" in the illustration.

If the balance factor of the node P is −2 (this case is depicted in the right column of the illustration P:=3) we can mirror the above algorithm. I.e. if the root N of the (higher) right subtree has balance factor −1 (or, when deletion also 0) we can rotate the whole tree to the left to get a balanced tree. This is labelled as the "Right Right Case" in the illustration with N:=4. If the root N:=5 of the right subtree has balance factor 1 ("Right Left Case") we can rotate the subtree to the right to end up in the "Right Right Case".

The whole retracing loop for an insertion looks like this:

// N is the child of P whose height increases by 1. do { if (N == left_child(P)) { if (balance_factor(P) == 1) { // The left column in the picture // Temporary balance_factor(P) == 2 ==> rebalancing is required. if (balance_factor(N) == -1) { // Left Right Case rotate_left(N); // Reduce to Left Left Case } // Left Left Case rotate_right(P); break; // Leave the loop } if (balance_factor(P) == -1) { balance_factor(P) = 0; // N’s height increase is absorbed at P. break; // Leave the loop } balance_factor(P) = 1; // Height increases at P } else { // N == right_child(P), the child whose height increases by 1. if (balance_factor(P) == -1) { // The right column in the picture // Temporary balance_factor(P) == -2 ==> rebalancing is required. if (balance_factor(N) == 1) { // Right Left Case rotate_right(N); // Reduce to Right Right Case } // Right Right Case rotate_left(P); break; // Leave the loop } if (balance_factor(P) == 1) { balance_factor(P) = 0; // N’s height increase is absorbed at P. break; // Leave the loop } balance_factor(P) = -1; // Height increases at P } N = P; P = parent(N); } while (P != null); // Possibly up to the root

After a rotation a subtree has the same height as before, so retracing can stop. In order to restore the balance factors of all nodes, first observe that all nodes requiring correction lie along the path used during the initial insertion. If the above procedure is applied to nodes along this path, starting from the bottom (i.e. the inserted node), then every node in the tree will again have a balance factor of −1, 0, or 1.

The time required is O(log n) for lookup, plus a maximum of O(log n) retracing levels on the way back to the root, so the operation can be completed in O(log n) time.

Deletion

Let node X be the node with the value we need to delete, and let node Y be a node in the tree we need to find to take node X's place, and let node Z be the actual node we take out of the tree.

Steps to consider when deleting a node in an AVL tree are the following:

- If node X is a leaf or has only one child, skip to step 5 with Z:=X.

- Otherwise, determine node Y by finding the largest node in node X's left subtree (the in-order predecessor of X − it does not have a right child) or the smallest in its right subtree (the in-order successor of X − it does not have a left child).

- Exchange all the child and parent links of node X with those of node Y. In this step, the in-order sequence between nodes X and Y is temporarily disturbed, but the tree structure doesn't change.

- Choose node Z to be all the child and parent links of old node Y = those of new node X.

- If node Z has a subtree (which then is a leaf) attach it to Z's parent.

- If node Z was the root (its parent is null), update root.

- Delete node Z.

- Retrace the path back up the tree (starting with node Z's parent) to the root, adjusting the balance factors as needed.

Since with a single deletion the height of an AVL subtree cannot decrease by more than one, the temporary balance factor of a node will be in the range from −2 to +2.

If the balance factor becomes ±2 then the subtree is unbalanced and needs to be rotated. The various cases of rotations are depicted in section "Insertion".

The whole retracing loop for a deletion looks like this:

// N is the child of P whose height decreases by 1. do { if (N == right_child(P)) { if (balance_factor(P) == 1) { // The left column in the picture // Temporary balance_factor(P) == 2 ==> rebalancing is required. S = left_child(P); // Sibling of N B = balance_factor(S); if (B == -1) { // Left Right Case rotate_left(S); // Reduce to Left Left Case } // Left Left Case rotate_right(P); if (B == 0) // (in the picture the small blue (0) at node 4) break; // Height does not change: Leave the loop } if (balance_factor(P) == 0) { balance_factor(P) = 1; // N’s height decrease is absorbed at P. break; // Leave the loop } balance_factor(P) = 0; // Height decreases at P } else { // N == left_child(P), the child whose height decreases by 1. if (balance_factor(P) == -1) { // The right column in the picture // Temporary balance_factor(P) == -2 ==> rebalancing is required. S = right_child(P); // Sibling of N B = balance_factor(S); if (B == 1) { // Right Left Case rotate_right(S); // Reduce to Right Right Case } // Right Right Case rotate_left(P); if (B == 0) // (in the picture the small blue (0) at node 4) break; // Height does not change: Leave the loop } if (balance_factor(P) == 0) { balance_factor(P) = -1; // N’s height decrease is absorbed at P. break; // Leave the loop } balance_factor(P) = 0; // Height decreases at P } N = P; P = parent(N); } while (P != null); // Possibly up to the root

The retracing can stop if the balance factor becomes ±1 indicating that the height of that subtree has remained unchanged. This can also result from a rotation when the higher child tree has a balance factor of 0.

If the balance factor becomes 0 then the height of the subtree has decreased by one and the retracing needs to continue. This can also result from a rotation.

The time required is O(log n) for lookup, plus a maximum of O(log n) retracing levels on the way back to the root, so the operation can be completed in O(log n) time.

Comparison to other structures

Both AVL trees and red-black trees are self-balancing binary search trees and they are very similar mathematically.[5] The operations to balance the trees are different, but both occur on the average in O(1) with maximum in O(log n). The real difference between the two is the limiting height.

For a tree of size  :

:

- An AVL tree's height is strictly less than:[6][7]

- where

is the golden ratio.

is the golden ratio.

- A red-black tree's height is at most

[8]

[8]

AVL trees are more rigidly balanced than red-black trees, leading to slower insertion and removal but faster retrieval.

See also

- Trees

- Tree rotation

- Red-black tree

- Splay tree

- Scapegoat tree

- B-tree

- T-tree

- List of data structures

References

- ↑ Robert Sedgewick, Algorithms, Addison-Wesley, 1983, ISBN 0-201-06672-6, page 199, chapter 15: Balanced Trees.

- ↑ Georgy Adelson-Velsky, G.; E. M. Landis (1962). "An algorithm for the organization of information". Proceedings of the USSR Academy of Sciences (in Russian) 146: 263–266. English translation by Myron J. Ricci in Soviet Math. Doklady, 3:1259–1263, 1962.

- ↑ Pfaff, Ben (June 2004). "Performance Analysis of BSTs in System Software" (PDF). Stanford University.

- ↑ AVL trees are not weight-balanced? (meaning: AVL trees are not μ-balanced?)

Thereby: A Binary Tree is called -balanced, with

-balanced, with  , if for every node

, if for every node  , the inequality

, the inequality

is minimal with this property.

is minimal with this property.  is the number of nodes below the tree with

is the number of nodes below the tree with  as root (including the root) and

as root (including the root) and  is the left child node of

is the left child node of  .

. -

- ↑ In fact, each AVL tree can be colored red-black.

- ↑ Burkhard, Walt (Spring 2012). "AVL Dictionary Data Type Implementation". Advanced Data Structures (PDF). La Jolla: A.S. Soft Reserves, UC San Diego. p. 103.

- ↑ Knuth, Donald E. (2000). Sorting and searching (2. ed., 6. printing, newly updated and rev. ed.). Boston [u.a.]: Addison-Wesley. p. 460. ISBN 0-201-89685-0.

- ↑ Proof of asymptotic bounds

Further reading

- Donald Knuth. The Art of Computer Programming, Volume 3: Sorting and Searching, Third Edition. Addison-Wesley, 1997. ISBN 0-201-89685-0. Pages 458–475 of section 6.2.3: Balanced Trees.

External links

| The Wikibook Algorithm Implementation has a page on the topic of: AVL tree |

| Wikimedia Commons has media related to AVL-trees. |

- xdg library by Dmitriy Vilkov: Serializable straight C-implementation could easily be taken from this library under GNU-LGPL and AFL v2.0 licenses.

- Description from the Dictionary of Algorithms and Data Structures

- Python Implementation

- Single C header file by Ian Piumarta

- AVL Tree Demonstration

- AVL tree applet – all operations

- Fast and efficient implementation of AVL Trees

- PHP Implementation

- AVL Threaded Tree PHP Implementation

- C++ implementation which can be used as an array

- Self balancing AVL tree with Concat and Split operations

| ||||||||||||||||||||||

| ||||||||||||||||||||||||||||||