3SUM

| Is there an algorithm to solve the 3SUM problem in time  , for any , for any  ? ? |

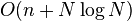

In computational complexity theory, the 3SUM problem asks if a given set of  numbers contains three elements that sum to zero. A generalized version, rSUM, asks the same question of r numbers. 3SUM can be easily solved in

numbers contains three elements that sum to zero. A generalized version, rSUM, asks the same question of r numbers. 3SUM can be easily solved in  time, and matching

time, and matching  lower bounds are known in some specialized models of computation (Erickson 1999). Slightly faster randomized algorithms are known that exploit computational-model parallelism on a RAM and in the external-memory and cache-oblivious models (Baran, Demaine & Pǎtraşcu 2008).

lower bounds are known in some specialized models of computation (Erickson 1999). Slightly faster randomized algorithms are known that exploit computational-model parallelism on a RAM and in the external-memory and cache-oblivious models (Baran, Demaine & Pǎtraşcu 2008).

When the elements are integers in the range ![[-N, \dots, N]](../I/m/da3f7476859a5d9f432c2f646840986b.png) , 3SUM can be solved in

, 3SUM can be solved in  time by representing the input set

time by representing the input set  as a bit vector, computing the set

as a bit vector, computing the set  of all pairwise sums as a discrete convolution using the Fast Fourier transform, and finally comparing this set to

of all pairwise sums as a discrete convolution using the Fast Fourier transform, and finally comparing this set to  .

.

Quadratic algorithm

Suppose the input array is ![S[0..n-1]](../I/m/3316b7a59be3cf01e464b00065393266.png) . 3SUM can be solved in

. 3SUM can be solved in  time on average by inserting each number

time on average by inserting each number ![S[i]](../I/m/91a746da7fa21b438545fa78016f5555.png) into a hash table, and then for each index

into a hash table, and then for each index  and

and  , checking whether the hash table contains the integer

, checking whether the hash table contains the integer ![-(S[i]+S[j])](../I/m/5594e919fa77850e6cdeddb9d19d54d2.png) .

.

Alternatively, the algorithm below first sorts the input array and then tests all possible pairs in a careful order that avoids the need to binary search for the pairs in the sorted list, achieving worst-case  time, as follows.[1]

time, as follows.[1]

sort(S);

for i=0 to n-3 do

a = S[i];

start = i+1;

end = n-1;

while (start < end) do

b = S[start];

c = S[end];

if (a+b+c == 0) then

output a, b, c;

// Continue search for all triplet combinations summing to zero.

start = start + 1

end = end - 1

else if (a+b+c > 0) then

end = end - 1;

else

start = start + 1;

end

end

end

The following example shows this algorithm's execution on a small sorted array. Current values of a are shown in green, values of b and c are shown in red.

-25 -10 -7 -3 2 4 8 10 (a+b+c==-25) -25 -10 -7 -3 2 4 8 10 (a+b+c==-22) . . . -25 -10 -7 -3 2 4 8 10 (a+b+c==-7) -25 -10 -7 -3 2 4 8 10 (a+b+c==-7) -25 -10 -7 -3 2 4 8 10 (a+b+c==-3) -25 -10 -7 -3 2 4 8 10 (a+b+c==2) -25 -10 -7 -3 2 4 8 10 (a+b+c==0)

The correctness of the algorithm can be seen as follows. Suppose we have a solution a + b + c = 0. Since the pointers only move in one direction, we can run the algorithm until the leftmost pointer points to a. Run the algorithm until either one of the remaining pointers points to b or c, whichever occurs first. Then the algorithm will run until the last pointer points to the remaining term, giving the affirmative solution.

Variants

Non-zero sum

Instead of looking for numbers whose sum is 0, it is possible to look for numbers whose sum is any constant C in the following way:

- Subtract C/3 from all elements of the input array.

- In the modified array, find 3 elements whose sum is 0.

a+b=c

Instead of requiring a+b+c=0, we can find numbers that satisfy a+b=c in the following way (assuming all elements are integers):

- Create a copy of the original array where each element x becomes 10x+1.

- Create a copy of the original array where each x becomes -10x-2.

- In the concatenation of these two arrays, find 3 elements whose sum is 0.

Correctness proof:

- If in the original array there are elements for which a+b=c, then the algorithm will find (10a+1), (10b+1) and (-10c-2), whose sum is 0.

- Conversely, any triple found by the algorithm must have exactly two elements from the first copy (10a+1), (10b+1), and a single element from the second copy (-10c-2), since other combinations cannot have a sum of 0. Hence, any triple found will necessarily match an a+b=c triple in the original array

In a very similar way, a solver for a+b=c can be used to solve a+b+c=0.

3 different arrays

Instead of searching for the 3 numbers in a single array, we can search for them in 3 different arrays. I.e., given three arrays X, Y and Z, find three numbers a∈X, b∈Y, c∈Z, such that a+b+c=0. Call the 1-array variant 3SUMx1 and the 3-array variant 3SUMx3.

Given a solver for 3SUMx1, the 3SUMx3 problem can be solved in the following way (assuming all elements are integers):

- For every element in X, set: X[i] ← X[i]*10+1.

- For every element in Y, set: Y[i] ← Y[i]*10+2.

- For every element in Z, set: Z[i] ← Z[i]*10-3.

- Let S be a concatenation of the arrays X, Y and Z.

- Use the 3SUMx1 oracle to find three elements a'∈S, b'∈S, c'∈S such that a'+b'+c'=0.

- Because the LSD (least significant digit) of the sum is 0, the LSDs of a', b' and c' must be 1, 2 and 7 (in any order). Suppose wlog that the LSD of a' is 1, b' is 2 and c' is 7.

- Return a ← (a'-1)/10, b ← (b'-2)/10, c ← (c'+3)/10.

By the way we transformed the arrays, it is guaranteed that a∈X, b∈Y, c∈Z.[2]

Convolution sum

Instead of looking for arbitrary elements of the array such that:

the convolution 3sum problem (Conv3SUM) looks for elements in specific locations:[3]

Reduction from Conv3SUM to 3SUM

Given a solver for 3SUM, the Conv3SUM problem can be solved in the following way.[3]

- Define a new array T, such that for every index i:

![T[i]=2n S[i]+i](../I/m/7cdc3af6f2a0534ad2118aee35f78163.png) (where n is the number of elements in the array, and the indices run from 0 to n-1).

(where n is the number of elements in the array, and the indices run from 0 to n-1). - Solve 3SUM on the array T.

Correctness proof:

- If in the original array there is a triple with

![S[i+j]=S[i]+S[j]](../I/m/f478576b734746c2da4b3d0afb02166a.png) , then

, then ![T[i+j]=2n S[i+j]+i+j = (2n S[i] + i) + (2n S[j] + j)=T[i]+T[j]](../I/m/eb79f425fd57b452d5ddd791ea29895b.png) , so this solution will be found by 3SUM on T.

, so this solution will be found by 3SUM on T. - Conversely, if in the new array there is a triple with

![T[k]=T[i]+T[j]](../I/m/e46ed4acd830df0c825abc58ead7fb8b.png) , then

, then ![2n S[k] + k = 2n(S[i]+S[j]) + (i+j)](../I/m/2fcf05f32eda8e82540d0fbf6a46c645.png) . Because

. Because  , necessarily

, necessarily ![S[k] = S[i]+S[j]](../I/m/9b8831b6e1f658bb44da626b383932e1.png) and

and  , so this is a valid solution for Conv3SUM on S.

, so this is a valid solution for Conv3SUM on S.

Reduction from 3SUM to Conv3SUM

Given a solver for Conv3SUM, the 3SUM problem can be solved in the following way.[3][4]

The reduction uses a hash function. As a first approximation, assume that he have a linear hash function, i.e. a function h such that:

Suppose that all elements are integers in the range: 0...N-1, and that the function h maps each element to an element in the smaller range of indices: 0...n-1. Create a new array T and send each element of S to its hash value in T, i.e., for every x in S:

Initially, suppose that the mappings are unique (i.e. each cell in T accepts only a single element from S). Solve Conv3SUM on T. Now:

- If there is a solution for 3SUM:

, then:

, then: ![T[h(z)]=T[h(x)]+T[h(y)]](../I/m/2b0c0d0dfccc1c58da9db6180ac8f675.png) and

and  , so this solution will be found by the Conv3SUM solver on T.

, so this solution will be found by the Conv3SUM solver on T. - Conversely, if a Conv3SUM is found on T, then obviously it corresponds to a 3SUM solution on S since T is just a permutation of S.

This idealized solution doesn't work, because any hash function might map several distinct elements of S to the same cell of T. The trick is to create an array T* by selecting a single random element from each cell of T, and run Conv3SUM on T*. If a solution is found, then it is a correct solution for 3SUM on S. If no solution is found, then create a different random T* and try again. Suppose there are at most R elements in each cell of T. Then the probability of finding a solution (if a solution exists) is the probability that the random selection will select the correct element from each cell, which is  . By running Conv3SUM

. By running Conv3SUM  times, the solution will be found with a high probability.

times, the solution will be found with a high probability.

Unfortunately, we do not have linear perfect hashing, so we have to use an almost linear hash function, i.e. a function h such that:

or

or

This requires to duplicate the elements of S when copying them into T, i.e., put every element  both in

both in ![T[h(x)]](../I/m/280b4c1c3c5b8a85dc0eac6f73797a9c.png) (as before) and in

(as before) and in ![T[h(x)]-1](../I/m/600e97e1888a96e5a558f0145c89633f.png) . So each cell will have 2R elements, and we will have to run Conv3SUM

. So each cell will have 2R elements, and we will have to run Conv3SUM  times.

times.

3SUM-hardness

While 3SUM can easily be solved in quadratic time,  , it was conjectured to be unsolvable in subquadratic expected time. This was known as the 3SUM Conjecture.

, it was conjectured to be unsolvable in subquadratic expected time. This was known as the 3SUM Conjecture.

A problem is called 3SUM-hard if solving it in subquadratic time implies a subquadratic-time algorithm for 3SUM. The concept of 3SUM-hardness was introduced by Gajentaan & Overmars (1995) in analysis of algorithms in computational geometry, including problems like: Given a set of lines in the plane, are there three that meet in a point?; or: Given a set of triangles in the plane, does their union have a hole? Also certain visibility and motion planning problems were shown to be in the class.

By now there are a multitude of problems that fall into this category. An example is the decision version of X + Y sorting: given sets of numbers X and Y of n elements each, are there n² distinct x + y for x ∈ X, y ∈ Y?[5]

The original 3SUM conjecture were recently refuted by an algorithm that solves 3SUM in time  .[6] However, it is still conjectured that 3SUM is unsolvable in

.[6] However, it is still conjectured that 3SUM is unsolvable in  expected time.[4]

expected time.[4]

Notes

- ↑ Visibility Graphs and 3-Sum by Michael Hoffmann

- ↑ For a reduction in the other direction, see Variants of the 3-sum problem.

- ↑ 3.0 3.1 3.2 Patrascu, M. (2010). Towards polynomial lower bounds for dynamic problems. Proceedings of the 42nd ACM symposium on Theory of computing - STOC '10. p. 603. doi:10.1145/1806689.1806772. ISBN 9781450300506.

- ↑ 4.0 4.1 Kopelowitz, Tsvi; Pettie, Seth; Porat, Ely (2014). "3SUM Hardness in (Dynamic) Data Structures". arXiv:1407.6756 [cs.DS].

- ↑ Demaine, Erik; Erickson, Jeff; O'Rourke, Joseph (20 August 2006). "Problem 41: Sorting X + Y (Pairwise Sums)". The Open Problems Project. Retrieved 23 September 2014.

- ↑ Gronlund, A.; Pettie, S. (2014). Threesomes, Degenerates, and Love Triangles. 2014 IEEE 55th Annual Symposium on Foundations of Computer Science. p. 621. doi:10.1109/FOCS.2014.72. ISBN 978-1-4799-6517-5.

References

- Baran, Ilya; Demaine, Erik D.; Pătraşcu, Mihai (2008), "Subquadratic algorithms for 3SUM", Algorithmica 50 (4): 584–596, doi:10.1007/s00453-007-9036-3.

- Demaine, Erik D.; Mitchell, Joseph S. B.; O'Rourke, Joseph (July 2005), Problem 11: 3SUM Hard Problems, The Open Problems Project.

- Erickson, Jeff (1999), "Lower bounds for linear satisfiability problems", Chicago Journal of Theoretical Computer Science (MIT Press) 1999.

- Gajentaan, Anka; Overmars, Mark H. (1995), "On a class of O(n2) problems in computational geometry", Computational Geometry: Theory and Applications 5 (3): 165–185, doi:10.1016/0925-7721(95)00022-2.

- King, James (2004), A survey of 3SUM-hard problems (PDF).

![T[h(x)] = x](../I/m/2313b51ca9eb67c402d09dbc06088cbe.png)