Šidák correction

In statistics, the Šidák correction, or Dunn–Šidák correction, is a method used to counteract the problem of multiple comparisons. It is a simple method to control the familywise error rate: it is probabilistically exact when the individual tests are independent of each other and conservative when they are positively dependent, although it can be liberal under negative dependence. It was published in 1967[1] by the statistician and probabilist Zbyněk Šidák.[2]

Usage

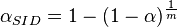

- If the test statistics are independent of each other, then testing each of $m$ hypotheses at level $\alpha_{SID} = 1 - (1 - \alpha)^{1/m}$ is Šidák's multiple testing procedure.

- This test is more powerful than Bonferroni, but the gain is small: for $\alpha = 0.05$ and $m = 10$ and $m = 10^{12}$, Bonferroni gives per-test levels of 0.005 and $5 \times 10^{-14}$, whereas Šidák gives 0.005116 and $5.129 \times 10^{-14}$, respectively. The main merit of the correction is that it is probabilistically exact when the tests are independent of each other. The Bonferroni level $\alpha/m$ is an easier-to-compute approximation to the Šidák level.

- The method may be used to construct a trapezoidal joint confidence region for the mean and the variance of data drawn from a normal distribution, since the sample mean and the sample variance are independent.
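The per-test levels quoted in the list above can be reproduced with a few lines of Python. This is a minimal sketch, not a reference implementation: the function names `bonferroni_level` and `sidak_level` are illustrative, and the use of `math.log1p` and `math.expm1` is a choice made here because evaluating $1 - (1 - \alpha)^{1/m}$ directly loses precision when $m$ is as large as $10^{12}$.

```python
import math


def bonferroni_level(alpha: float, m: int) -> float:
    """Per-test level under the Bonferroni correction: alpha / m."""
    return alpha / m


def sidak_level(alpha: float, m: int) -> float:
    """Per-test level under the Sidak correction: 1 - (1 - alpha)**(1/m).

    Rewritten as -expm1(log1p(-alpha) / m), which is algebraically the
    same expression but stays accurate when the result is tiny.
    """
    return -math.expm1(math.log1p(-alpha) / m)


# Compare the two corrections for the values of m used in the text.
for m in (10, 10**12):
    print(m, bonferroni_level(0.05, m), sidak_level(0.05, m))
```

In both cases the Šidák level is slightly larger than the Bonferroni level, which is the small gain in power described above.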

Proof

The Šidák correction is derived by assuming that the individual tests are independent. Let the significance threshold for each of the $n$ tests be $\alpha_1$; then the probability that at least one of the tests is significant under this threshold is 1 minus the probability that none of them is significant. Since the tests are assumed to be independent, the probability that none of them is significant is the product of the probabilities that each of them is not significant, $(1 - \alpha_1)^n$, so the probability that at least one is significant is $1 - (1 - \alpha_1)^n$. Our intention is for this probability to equal $\alpha$, the significance level for the entire series of tests. By solving for $\alpha_1$, we obtain

$$\alpha_1 = 1 - (1 - \alpha)^{1/n}.$$
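The outcome of this derivation, a per-test threshold of $1 - (1 - \alpha)^{1/n}$, can be checked empirically. The sketch below is a small Monte Carlo simulation under stated assumptions: all null hypotheses are true, the tests are independent, and each p-value is uniform on [0, 1] (as for a continuous test statistic). The names `sidak_level` and `simulate_fwer`, the choice of five tests, the trial count, and the seed are all illustrative.

```python
import random


def sidak_level(alpha: float, n: int) -> float:
    """Per-test threshold 1 - (1 - alpha)**(1/n)."""
    return 1.0 - (1.0 - alpha) ** (1.0 / n)


def simulate_fwer(alpha: float, n: int, trials: int, seed: int = 0) -> float:
    """Estimate the familywise error rate of n independent true-null tests."""
    rng = random.Random(seed)
    threshold = sidak_level(alpha, n)
    false_alarms = 0
    for _ in range(trials):
        # Under a true null hypothesis a p-value is uniform on [0, 1];
        # the family errs if any of its n p-values falls below the threshold.
        if any(rng.random() < threshold for _ in range(n)):
            false_alarms += 1
    return false_alarms / trials


estimate = simulate_fwer(alpha=0.05, n=5, trials=100_000)
print(f"estimated familywise error rate: {estimate:.4f}")
```

With independent tests the estimate should land close to the nominal 0.05, up to simulation noise.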

Example

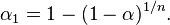

For example, to test two independent hypotheses on the same data at the 0.05 significance level, instead of using a p-value threshold of 0.05, one would use a stricter threshold equal to $1 - (1 - 0.05)^{1/2} \approx 0.0253$. Notably, one can derive valid confidence intervals matching the test decision using the Šidák correction by using $100(1 - \alpha)^{1/n}\%$ confidence intervals.
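Working through the arithmetic for this two-test case may help. Writing $\alpha_1$ for the per-test threshold, with $\alpha = 0.05$ and $n = 2$, the threshold and the matching confidence level come out as follows (values rounded):

```latex
\alpha_1 = 1 - (1 - 0.05)^{1/2} = 1 - \sqrt{0.95} \approx 1 - 0.97468 = 0.02532,
\qquad
100\,(1 - 0.05)^{1/2}\,\% \approx 97.47\%.
```

Each of the two intervals is therefore built at roughly the 97.47% level rather than the usual 95%, so that both cover simultaneously with probability 0.95 when the tests are independent.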

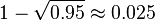

The Bonferroni correction is a safeguard against multiple tests of statistical significance on the same data falsely giving the appearance of significance, as 1 out of every 20 hypothesis tests of a true null hypothesis is expected to be significant at the α = 0.05 level purely due to chance. Furthermore, the probability of getting at least one significant result with n independent tests at this level of significance is $1 - 0.95^n$ (1 minus the probability of not getting a significant result with n tests).
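To see how quickly the uncorrected error rate grows, the probability of at least one significant result can be tabulated for a few values of n. This is a minimal sketch assuming independent tests of true null hypotheses at the 0.05 level; the function name `prob_any_significant` and the chosen values of n are illustrative.

```python
def prob_any_significant(alpha: float, n: int) -> float:
    """Chance that at least one of n independent true-null tests
    is significant at level alpha: 1 - (1 - alpha)**n."""
    return 1.0 - (1.0 - alpha) ** n


# Tabulate the uncorrected familywise error rate for several family sizes.
for n in (1, 5, 10, 20, 50):
    print(f"n = {n:2d}: {prob_any_significant(0.05, n):.3f}")
```

With 10 tests the chance of a spurious finding is already about 40%, and with 20 tests it is about 64%.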

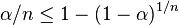

The Šidák correction gives a stronger bound than the Bonferroni correction, because, for $n \geq 1$, $1 - (1 - \alpha)^{1/n} \geq \alpha/n$. But the Šidák correction requires the additional condition of independence. Previously, because the Šidák correction requires fractional powers (i.e. roots), the computationally simpler Bonferroni correction was often preferred instead. Now that computing fractional powers is trivial, the preference for the Bonferroni method is due in part to tradition or unfamiliarity with the Šidák method. Additionally, the results of the two methods are highly similar for conventional significance levels (between 0.01 and 0.10).
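The closeness of the two methods at conventional significance levels can be checked numerically. The sketch below compares the Bonferroni threshold α/n with the Šidák threshold 1 − (1 − α)^(1/n) on a small grid; the function names and the grid of α and n values are illustrative choices, not taken from the source.

```python
def bonferroni_level(alpha: float, n: int) -> float:
    """Per-test threshold under Bonferroni: alpha / n."""
    return alpha / n


def sidak_level(alpha: float, n: int) -> float:
    """Per-test threshold under Sidak: 1 - (1 - alpha)**(1/n)."""
    return 1.0 - (1.0 - alpha) ** (1.0 / n)


rows = []
for alpha in (0.01, 0.05, 0.10):
    for n in (2, 10, 100):
        b = bonferroni_level(alpha, n)
        s = sidak_level(alpha, n)
        # The ratio s / b shows how much larger the Sidak threshold is.
        rows.append((alpha, n, b, s, s / b))
        print(f"alpha={alpha:.2f} n={n:3d} bonferroni={b:.6f} sidak={s:.6f} ratio={s / b:.4f}")
```

On this grid the Šidák threshold is never smaller than the Bonferroni one, and it exceeds it by less than 6%.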

See also

- Multiple comparisons

- Bonferroni correction

- Familywise error rate

- Closed testing procedure

References

- [1] Šidák, Z. K. (1967). "Rectangular Confidence Regions for the Means of Multivariate Normal Distributions". Journal of the American Statistical Association 62 (318): 626–633. doi:10.1080/01621459.1967.10482935.

- [2] Seidler, J.; Vondráček, J. Í.; Saxl, I. (2000). "The life and work of Zbyněk Šidák (1933–1999)". Applications of Mathematics 45 (5): 321. doi:10.1023/A:1022238410461.