Wolfe conditions

In the unconstrained minimization problem, the Wolfe conditions are a set of inequalities for performing inexact line search, especially in quasi-Newton methods, first published by Philip Wolfe in 1969.[1][2]

In these methods the idea is to find

for some smooth  . Each step often involves approximately solving the subproblem

. Each step often involves approximately solving the subproblem

where  is the current best guess,

is the current best guess,  is a search direction, and

is a search direction, and  is the step length.

is the step length.

The inexact line searches provide an efficient way of computing an acceptable step length  that reduces the objective function 'sufficiently', rather than minimizing the objective function over

that reduces the objective function 'sufficiently', rather than minimizing the objective function over  exactly. A line search algorithm can use Wolfe conditions as a requirement for any guessed

exactly. A line search algorithm can use Wolfe conditions as a requirement for any guessed  , before finding a new search direction

, before finding a new search direction  .

.

Armijo rule and curvature

Denote a univariate function  restricted to the direction

restricted to the direction  as

as  . A step length

. A step length  is said to satisfy the Wolfe conditions if the following two inequalities hold:

is said to satisfy the Wolfe conditions if the following two inequalities hold:

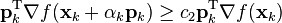

- i)

,

, - ii)

,

,

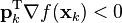

with  . (In examining condition (ii), recall that to ensure that

. (In examining condition (ii), recall that to ensure that  is a descent direction, we have

is a descent direction, we have  .)

.)

is usually chosen to be quite small while

is usually chosen to be quite small while  is much larger; Nocedal gives example values of

is much larger; Nocedal gives example values of  [3]

and

[3]

and  for Newton or quasi-Newton methods and

for Newton or quasi-Newton methods and  for the nonlinear conjugate gradient method. Inequality i) is known as the Armijo rule [4] and ii) as the curvature condition; i) ensures that the step length

for the nonlinear conjugate gradient method. Inequality i) is known as the Armijo rule [4] and ii) as the curvature condition; i) ensures that the step length  decreases

decreases  'sufficiently', and ii) ensures that the slope has been reduced sufficiently.

'sufficiently', and ii) ensures that the slope has been reduced sufficiently.

Strong Wolfe condition on curvature

The Wolfe conditions, however, can result in a value for the step length that is not close to a minimizer of  . If we modify the curvature condition to the following,

. If we modify the curvature condition to the following,

- iia)

then i) and iia) together form the so-called strong Wolfe conditions, and force  to lie close to a critical point of

to lie close to a critical point of  .

.

The principal reason for imposing the Wolfe conditions in an optimization algorithm where  is to ensure convergence of the gradient to zero. In particular, if the cosine of the angle between

is to ensure convergence of the gradient to zero. In particular, if the cosine of the angle between  and the gradient,

and the gradient,

is bounded away from zero and the i) and ii) hold, then  .

.

An additional motivation, in the case of a quasi-Newton method is that if  , where the matrix

, where the matrix  is updated by the BFGS or DFP formula, then if

is updated by the BFGS or DFP formula, then if  is positive definite ii) implies

is positive definite ii) implies  is also positive definite.

is also positive definite.

References

- "Line Search Methods". Numerical Optimization. Springer Series in Operations Research and Financial Engineering. 2006. pp. 30–32. doi:10.1007/978-0-387-40065-5_3. ISBN 978-0-387-30303-1.

- "Quasi-Newton Methods". Numerical Optimization. Springer Series in Operations Research and Financial Engineering. 2006. pp. 135–163. doi:10.1007/978-0-387-40065-5_6. ISBN 978-0-387-30303-1.

- ↑ Wolfe, P. (1969). "Convergence Conditions for Ascent Methods". SIAM Review 11 (2): 226–000. doi:10.1137/1011036. JSTOR 2028111.

- ↑ Wolfe, P. (1971). "Convergence Conditions for Ascent Methods. II: Some Corrections". SIAM Review 13 (2): 185–000. doi:10.1137/1013035.

- ↑ Nocedal, Jorge; Wright, Stephen (1999). Numerical Optimization.

- ↑ Armijo, Larry (1966). "Minimization of functions having Lipschitz continuous first partial derivatives". Pacific J. Math. 16 (1): 1–3.

| |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||