Transfer matrix

From Wikipedia, the free encyclopedia

This article is about the transfer matrix in wavelet theory. For the transfer matrix method in statistical physics, see Transfer-matrix method. For the transfer matrix method in optics, see Transfer-matrix method (optics).

In applied mathematics, the transfer matrix is a formulation in terms of a block-Toeplitz matrix of the two-scale equation, which characterizes refinable functions. Refinable functions play an important role in wavelet theory and finite element theory.

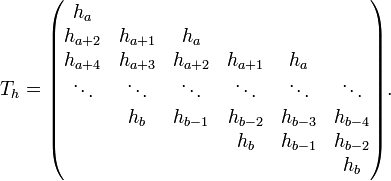

For the mask  , which is a vector with component indexes from

, which is a vector with component indexes from  to

to  ,

the transfer matrix of

,

the transfer matrix of  , we call it

, we call it  here, is defined as

here, is defined as

More verbosely

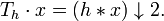

The effect of $T_h$ can be expressed in terms of the downsampling operator "$\downarrow$":

$$T_h \cdot x = (h * x) \downarrow 2.$$

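The definition and the downsampling identity can be checked with a short NumPy sketch. It assumes the mask is given as a plain array together with the index `a` of its first coefficient. The helper names `transfer_matrix` and `conv_downsample` are hypothetical and chosen for illustration only. The last lines also check the symmetry $T_h \cdot x = T_x \cdot h$ for a vector on the same index range.

```python
import numpy as np


def transfer_matrix(h, a=0):
    """Build T_h with (T_h)[j, k] = h_{2j-k} for j, k in a..b.

    `h` holds the coefficients h_a, ..., h_b and `a` is the index of h[0];
    coefficients outside a..b count as zero.
    """
    h = np.asarray(h)
    n = len(h)
    b = a + n - 1
    T = np.zeros((n, n), dtype=h.dtype)
    for j in range(a, b + 1):
        for k in range(a, b + 1):
            i = 2 * j - k  # mask index that lands in row j, column k
            if a <= i <= b:
                T[j - a, k - a] = h[i - a]
    return T


def conv_downsample(h, x):
    """Return (h * x) downsampled by 2 for h and x on the same index range a..b.

    The full convolution covers indexes 2a..2b; keeping every second entry,
    starting at the even index 2a, leaves the entries with indexes 2j, j = a..b.
    """
    return np.convolve(h, x)[::2]


h = np.array([1.0, 3.0, 3.0, 1.0]) / 8  # example mask with indexes 0..3
x = np.array([2.0, -1.0, 0.5, 4.0])     # example vector on the same range
T = transfer_matrix(h)
print(np.allclose(T @ x, conv_downsample(h, x)))   # T_h x = (h * x) down 2 -> True
print(np.allclose(T @ x, transfer_matrix(x) @ h))  # T_h x = T_x h -> True
```

Shifting the mask leaves the matrix unchanged. For example, `transfer_matrix(h, a=5)` returns the same array as `transfer_matrix(h)`, so `a` only matters for interpreting the indexes.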
Properties

-

.

. - If you drop the first and the last column and move the odd-indexed columns to the left and the even-indexed columns to the right, then you obtain a transposed Sylvester matrix.

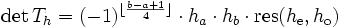

- The determinant of a transfer matrix is essentially a resultant.

- More precisely:

- Let

be the even-indexed coefficients of

be the even-indexed coefficients of  (

( ) and let

) and let  be the odd-indexed coefficients of

be the odd-indexed coefficients of  (

( ).

). - Then

, where

, where  is the resultant.

is the resultant. - This connection allows for fast computation using the Euclidean algorithm.

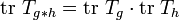

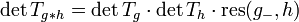

- For the determinant of the transfer matrix of convolved mask holds

- where

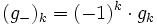

denotes the mask with alternating signs, i.e.

denotes the mask with alternating signs, i.e.  .

.

- If

, then

, then  .

.

- This is a concretion of the determinant property above. From the determinant property one knows that

is singular whenever

is singular whenever  is singular. This property also tells, how vectors from the null space of

is singular. This property also tells, how vectors from the null space of  can be converted to null space vectors of

can be converted to null space vectors of  .

.

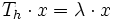

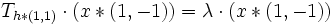

- If $x$ is an eigenvector of $T_h$ with respect to the eigenvalue $\lambda$, i.e.

  $$T_h \cdot x = \lambda \cdot x,$$

  then $x * (1, -1)$ is an eigenvector of $T_{h*(1,1)}$ with respect to the same eigenvalue, i.e.

  $$T_{h*(1,1)} \cdot \big(x * (1, -1)\big) = \lambda \cdot \big(x * (1, -1)\big).$$

- Let

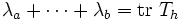

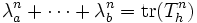

be the eigenvalues of

be the eigenvalues of  , which implies

, which implies  and more generally

and more generally  . This sum is useful for estimating the spectral radius of

. This sum is useful for estimating the spectral radius of  . There is an alternative possibility for computing the sum of eigenvalue powers, which is faster for small

. There is an alternative possibility for computing the sum of eigenvalue powers, which is faster for small  .

.

- Let $C_k h$ be the periodization of $h$ with respect to period $2^k - 1$. That is, $C_k h$ is a circular filter, which means that its component indexes are residue classes with respect to the modulus $2^k - 1$. On residue classes modulo the odd number $2^n - 1$ the upsampling operator $\uparrow$ acts by $(x \uparrow 2)_{[2j]} = x_{[j]}$, which is well defined because $2$ is invertible modulo $2^n - 1$. With this operator it holds that

  $$\operatorname{tr}(T_h^n) = \left(C_n h * (C_n h \uparrow 2) * (C_n h \uparrow 2^2) * \cdots * (C_n h \uparrow 2^{n-1})\right)_{[0]_{2^n-1}}.$$

- Rather than $n - 1$ convolutions, only about $2 \log_2 n$ are necessary when the strategy of efficient computation of powers (exponentiation by squaring) is applied. The product $P_m$ of the first $m$ factors satisfies $P_{2m} = P_m * (P_m \uparrow 2^m)$ and $P_{m+1} = C_n h * (P_m \uparrow 2)$. The approach can be sped up further using the fast Fourier transform.

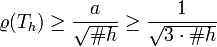

- From the previous statement we can derive an estimate of the spectral radius of

. It holds

. It holds

- where

is the size of the filter and if all eigenvalues are real, it is also true that

is the size of the filter and if all eigenvalues are real, it is also true that  ,

,- where

.

.
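The determinant identities in the list above can be checked numerically with a small NumPy sketch. It makes three simplifying choices:

- Masks are real and indexed from 0.
- The resultant is computed directly as the determinant of the Sylvester matrix (rows of the first polynomial first, coefficients from highest to lowest degree), not with the fast Euclidean algorithm.
- The helper names `sylvester_resultant`, `det_via_resultant` and `alternate` are hypothetical.

The final lines also check the eigenvector property for $h * (1, 1)$.

```python
import numpy as np


def transfer_matrix(h):
    """(T_h)[j, k] = h_{2j-k} for a real mask h indexed from 0."""
    n = len(h)
    T = np.zeros((n, n))
    for j in range(n):
        for k in range(n):
            if 0 <= 2 * j - k < n:
                T[j, k] = h[2 * j - k]
    return T


def sylvester_resultant(f, g):
    """Resultant of f(z) = sum f[i] z^i and g(z) = sum g[i] z^i.

    Determinant of the Sylvester matrix for the formal degrees
    len(f) - 1 and len(g) - 1 (rows of f first, highest degree first).
    """
    f, g = np.asarray(f, dtype=float), np.asarray(g, dtype=float)
    p, q = len(f) - 1, len(g) - 1
    if p + q == 0:
        return 1.0  # two constants: the Sylvester matrix is empty
    S = np.zeros((p + q, p + q))
    for r in range(q):  # q shifted copies of f
        S[r, r:r + p + 1] = f[::-1]
    for r in range(p):  # p shifted copies of g
        S[q + r, r:r + q + 1] = g[::-1]
    return np.linalg.det(S)


def det_via_resultant(h):
    """(-1)^floor((b-a)/4) * h_a * h_b * res(h_e, h_o) for h indexed from 0."""
    h = np.asarray(h, dtype=float)
    if len(h) < 2:
        raise ValueError("the identity needs at least two coefficients")
    b = len(h) - 1
    h_e, h_o = h[0::2], h[1::2]  # even- and odd-indexed coefficients
    return (-1) ** (b // 4) * h[0] * h[-1] * sylvester_resultant(h_e, h_o)


def alternate(g):
    """g_-: the mask with alternating signs, (g_-)_k = (-1)^k g_k."""
    g = np.asarray(g, dtype=float)
    return g * (-1.0) ** np.arange(len(g))


rng = np.random.default_rng(0)
h = rng.standard_normal(7)
g = rng.standard_normal(4)

# det T_h versus the resultant formula
print(np.isclose(np.linalg.det(transfer_matrix(h)), det_via_resultant(h)))

# det T_{g*h} = det T_g * det T_h * res(h, g_-)
lhs = np.linalg.det(transfer_matrix(np.convolve(g, h)))
rhs = (np.linalg.det(transfer_matrix(g)) * np.linalg.det(transfer_matrix(h))
       * sylvester_resultant(h, alternate(g)))
print(np.isclose(lhs, rhs))

# Eigenvector property: T_{h*(1,1)} (x * (1,-1)) = lambda (x * (1,-1))
lam, vecs = np.linalg.eig(transfer_matrix(h))
y = np.convolve(vecs[:, 0], [1.0, -1.0])
print(np.allclose(transfer_matrix(np.convolve(h, [1.0, 1.0])) @ y, lam[0] * y))
```

Computing the resultant through a dense determinant keeps the sketch short. It costs cubic time and gives up the speed advantage that the Euclidean algorithm offers.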
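The periodization formula for $\operatorname{tr}(T_h^n)$ can be compared with a direct matrix power. The sketch below is a plain transcription under stated assumptions:

- The mask is real, with first index `a` (default 0).
- Circular convolution is done directly in $O(m^2)$ rather than with the fast Fourier transform.
- The $n - 1$ convolutions are performed one after another, without the square-and-multiply strategy.
- `periodize`, `circ_upsample`, `circ_conv` and `trace_power` are hypothetical helper names.

The last lines check the spectral radius bounds for an example mask whose eigenvalues happen to be real.

```python
import numpy as np


def transfer_matrix(h):
    """(T_h)[j, k] = h_{2j-k} for a real mask h indexed from 0."""
    n = len(h)
    T = np.zeros((n, n))
    for j in range(n):
        for k in range(n):
            if 0 <= 2 * j - k < n:
                T[j, k] = h[2 * j - k]
    return T


def periodize(h, m, a=0):
    """Fold the mask h (first index a) onto the residue classes modulo m."""
    c = np.zeros(m)
    for i, coeff in enumerate(h):
        c[(a + i) % m] += coeff
    return c


def circ_upsample(c, s):
    """Circular upsampling: (c up s)[s*j mod m] = c[j]; needs gcd(s, m) = 1."""
    m = len(c)
    out = np.zeros(m)
    for j in range(m):
        out[(s * j) % m] = c[j]
    return out


def circ_conv(x, y):
    """Circular convolution of two vectors of equal length m (direct O(m^2))."""
    m = len(x)
    out = np.zeros(m)
    for i in range(m):
        for j in range(m):
            out[(i + j) % m] += x[i] * y[j]
    return out


def trace_power(h, n, a=0):
    """tr(T_h^n) as entry [0] of C_n h * (C_n h up 2) * ... * (C_n h up 2^(n-1))."""
    m = 2 ** n - 1                # period 2^n - 1
    c = periodize(h, m, a)        # C_n h
    acc = c.copy()
    for i in range(1, n):         # n - 1 circular convolutions
        acc = circ_conv(acc, circ_upsample(c, 2 ** i))
    return acc[0]


h = np.array([1.0, 3.0, 3.0, 1.0]) / 8  # example mask, coefficients sum to 1
T = transfer_matrix(h)
for n in range(1, 6):
    print(n, trace_power(h, n), np.trace(np.linalg.matrix_power(T, n)))

# Spectral radius bounds with a = ||C_2 h||_2 (period 2^2 - 1 = 3)
a_norm = np.linalg.norm(periodize(h, 3))
rho = max(abs(np.linalg.eigvals(T)))
# The upper bound rho <= a needs real eigenvalues, which holds for this mask
print(a_norm / np.sqrt(len(h)) <= rho <= a_norm)
```

For this example mask the eigenvalues of $T_h$ are $1/8, 1/8, 1/4$ and $1/2$. So $\varrho(T_h) = 1/2$ lies between $a/\sqrt{\# h} \approx 0.29$ and $a = \sqrt{22}/8 \approx 0.59$.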

See also

- Transfer matrix method

- Hurwitz determinant

References

- Strang, Gilbert (1996). "Eigenvalues of $(\downarrow 2)H$ and convergence of the cascade algorithm". IEEE Transactions on Signal Processing 44. pp. 233–238.

- Thielemann, Henning (2006). Optimally matched wavelets (PhD thesis). (contains proofs of the above properties)

This article is issued from Wikipedia. The text is available under the Creative Commons Attribution/Share Alike; additional terms may apply for the media files.