Trajectory optimization

Trajectory optimization is the process of designing a trajectory that minimizes or maximizes some measure of performance within prescribed constraint boundaries. While not exactly the same, the goal of solving a trajectory optimization problem is essentially the same as solving an optimal control problem.[1]

The selection of flight profiles that yield the greatest performance plays a substantial role in the preliminary design of flight vehicles, since the use of ad-hoc profile or control policies to evaluate competing configurations may inappropriately penalize the performance of one configuration over another. Thus, to guarantee the selection of the best vehicle design, it is important to optimize the profile and control policy for each configuration early in the design process.

Consider this example. For tactical missiles, the flight profiles are determined by the thrust and load factor (lift) histories. These histories can be controlled by a number of means including such techniques as using an angle of attack command history or an altitude/downrange schedule that the missile must follow. Each combination of missile design factors, desired missile performance, and system constraints results in a new set of optimal control parameters.[2]

History

Trajectory optimization began in earnest in the 1950s as digital computers became available for the computation of trajectories. The first efforts were based on optimal control approaches which grew out of the calculus of variations developed at the University of Chicago in the first half of the 20th century most notably by Gilbert Ames Bliss. Pontryagin[3] in Russia and Bryson[4] in America were prominent researchers in the development of optimal control. Early application of trajectory optimization had to do with the optimization of rocket thrust profiles in:

- a vacuum and

- in an atmosphere.

From the early work, much of the givens about rocket propulsion optimization were discovered. Another successful application was the climb to altitude trajectories for the early jet aircraft. Because of the high drag associated with the transonic drag region and the low thrust of early jet aircraft, trajectory optimization was the key to maximizing climb to altitude performance. Optimal control based trajectories were responsible for some of the world records. In these situations, the pilot followed a Mach versus altitude schedule based on optimal control solutions.

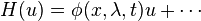

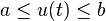

In the early phase of trajectory optimization; many of the solutions were plagued by the issue of singular subarcs. For such problems, the term in the Hamiltonian linearly multiplying the control variable goes to zero for a finite time and it becomes impossible to directly solve for the optimal control. The Hamiltonian is of the form:  and the control is restricted to being between an upper and a lower bound:

and the control is restricted to being between an upper and a lower bound:  . To minimize

. To minimize  , we need to make

, we need to make  as big or as small as possible, depending on the sign of

as big or as small as possible, depending on the sign of  , specifically:

, specifically:

If  is positive at some times, negative at others and is only zero instantaneously, then the solution is straightforward and is a bang-bang control that switches from

is positive at some times, negative at others and is only zero instantaneously, then the solution is straightforward and is a bang-bang control that switches from  to

to  at times when

at times when  switches from negative to positive.

switches from negative to positive.

The case when  remains at zero for a finite length of time

remains at zero for a finite length of time  is called the singular control case and the optimal trajectory follows the singular subarc.

is called the singular control case and the optimal trajectory follows the singular subarc.

In this case, one is left with a family of feasible solutions. At that point, the investigators had to numerically evaluate each member of the family to determine the optimal solution. A breakthrough occurred with a condition sometimes referred to as the Kelley condition. While not a sufficient condition, this provided an additional necessary condition that allowed downselection to a trajectory that is usually the optimal singular control.[5]

An example of a problem with singular control is the optimization of the thrust of a missile flying at a constant altitude and which is launched at low speed. Here the problem is one of a bang-bang control at maximum possible thrust until the singular arc is reached. Then the solution to the singular control provides a lower variable thrust until burnout. At that point bang-bang control provides that the control or thrust go to its minimum value of zero. This solution is the foundation of the boost-sustain rocket motor profile widely used today to maximize missile performance.

Many of the early triumphs of trajectory optimization have moved into the background knowledge of the modern flight mechanicist and the origins of these discoveries are not widely known.

Solution techniques

The techniques available to solve optimization problems fall into two broad categories: the optimal control methodology that allows solution by either analytical or numerical procedures, and an approximation to the optimal-control problem through the use of nonlinear programming that allows solution by numerical procedures. The former technique is "indirect" in that it finds a solution where the total differential of the performance measure is zero. The latter technique is "direct" in that it finds a solution where the performance measure is smaller (or greater) than that of any other solution in the neighborhood. Direct and indirect methods can be blended by an application of the covector mapping principle of Ross and Fahroo.[6]

The optimal control problem is an infinite dimensional problem while the nonlinear programming approach approximates the problem by a finite dimensional problem. Trajectory optimization shares the same optimization algorithms as other optimization problems. The numerical optimal control methodology can produce the best answers but converging to a solution is difficult. Convergence is rapid when the initial guess is good, otherwise the search may fail. The ascent trajectories for the US space program (Gemini and Apollo) were designed using numerical optimal control. The very tight tolerances associated with space launchers allowed optimal control to be a useful tool. For systems with less controlled environments such as missiles, numerical optimal control would not prove as useful.

The analytic solution of the optimal control often involves extensive approximations but can still produce useful algorithms. An example is given in Ohlmeyer & Phillips.[7] In this example, linear assumptions are made and yet the algorithm can produce near optimal trajectories. Another example of an analytic solution is the "Iterative Guidance Mode (IGM)", the guidance algorithm used by the two exo-atmospheric stages of the Saturn V rocket. The IGM algorithm is an analytical calculus-of-variations solution of the two-point boundary value problem posed by the ascent of the rocket to prescribed orbit-injection conditions. The analytical solution requires that gravitational acceleration be approximated as a constant vector, and an iteration of the solution is required to improve the accuracy of this approximation.

Many numerical procedures exist to solve parameter optimization problems. The simplest procedures use the gradient descent technique, sometimes also known as the method of steepest descent. Second-order methods are also available to improve the rate of convergence, for example, the Newton–Raphson iteration, which requires the evaluation of the Hessian matrix. Quasi-Newton or variable-metric methods avoid the evaluation of the Hessian matrix by using iterative evaluation of first-order information to approximate the Hessian matrix. The nonlinear programming methods such as BFGS and SQP may be used to solve the finite dimensional problem. An effective and robust nonlinear programming method[8] employing the Simplex algorithm was developed in the 1970s. It was first used to determine quasi-optimum reentry trajectories for the Space Shuttle and has subsequently been used to solve a wide variety of rocket trajectory optimization problems. The nonlinear programming approach is generally more robust in terms of finding a solution than numerical optimal control, but many of the gradient or Newton-Raphson methods require "smoothness" in the function algorithms to be successful. Smoothness is continuity in the first derivative. The smoothness requirement imposes a burden on flight trajectory analysts in that most highly detailed trajectory simulations do not exhibit smoothness. This restriction was a problem in the early days of trajectory optimization when computer computation speed was an issue. Often, special approximate trajectory models had to be used to work with non-linear programming models. As computation time has become cheap compared to manpower, direct sample methods have evolved as the optimization algorithms of choice. These algorithms may require orders of magnitude increases in the number of functional samples but exhibit robustness to non-smoothness in the trajectory code. Examples include: genetic algorithms,[9][10] stochastic sampling methods, and hill climbing algorithms. An overview of the state of the art in numerical methods is given in Betts.[11]

Multi-level optimization

When dealing with complex payoff functions that are pertinent to realistic engineering problems, an alternative method is one of the multi-level techniques. These approaches allow models to be used in the optimization in a tiered approach by the passing of constraints to the lower levels and selecting the optimal value of the constraint value in the upper levels. An early paper in this area presents this idea for the optimization of the performance of a missile.[12]

Software

Examples of trajectory optimization programs include:

- ASTOS (AeroSpace Trajectory Optimization and Simulation)

- Copernicus Trajectory Design and Optimization System

- DIDO

- GMAT (General Mission Analysis Tool)

- JModelica.org (Modelica-based open source platform for dynamic optimization)

- LTOTO (Low Thrust Orbit Transfer Optimization) tool from Astos Solutions

- OTIS (Optimal Trajectories by Implicit Simulation)

- POST (Program to Optimize Simulated Trajectories) ,

- SORT (Simulation and Optimization of Rocket Trajectories)

- ZOOM, Conceptual Design and Analysis of Rocket Configurations and Trajectories)

A collection of low thrust trajectory optimization tools, including members of the Low Thrust Trajectory Tool (LTTT) set, can be found here: LTTT Suite Optimization Tools.

References

- ↑ Ross, I. M. A Primer on Pontryagin's Principle in Optimal Control, Collegiate Publishers, San Francisco, 2009.

- ↑ Phillips, C.A, "Energy Management for a Multiple Pulse Missile", AIAA Paper 88-0334, Jan., 1988

- ↑ L.S. Pontyragin, The Mathematical Theory of Optimal Processes, New York, Intersciences, 1962

- ↑ Bryson, Ho,Applied Optimal Control, Blaisdell Publishing Company, 1969, p 246.)

- ↑ H.J. Kelley, R.E. Kopp, and H.G. Moyer, "Singular Extremals", Topics in Optimization, G. Leitmann (ed.) Vol. II Chapter 2 New York, Academic Press, 1966

- ↑ I. M. Ross and M. Karpenko, "A Review of Pseudospectral Optimal Control: From Theory to Flight," Annual Reviews in Control, Vol. 36, pp. 182-197, 2012.

- ↑ Ohlmeyer, E.J., Phillips, C.A., Generalized Vector Explicit Guidance Journal of Guidance, Control, and Dynamics 2006; 0731-5090 vol.29 no.2 (261-268)

- ↑ D.F. Williams and W.B. Tucker, "Computation of Quasi-Optimal Reentry Trajectories Using The Simplex Algorithm of Linear Programming", Report M-240-1208, Northrop Services, Inc., Huntsville, Alabama, April 1973.

- ↑ Swarm Guidance Using a Multi-Objective Co-Evolutionary On-Line Evolutionary Algorithm; E.J. Hughes, IEEE Congress on Evolutionary Computation 2004, vol.2 (2357-2363)

- ↑ A hybrid multiagent approach for global trajectory optimization; M. Vasile and M. Locatelli, J. Global Optim., Springer 2009, vol.44, no.4, (461-479)

- ↑ Survey of Numerical Methods for Trajectory Optimization;John T. Betts Journal of Guidance, Control, and Dynamics 1998;0731-5090 vol.21 no.2 (193-207)

- ↑ Trajectory Optimization for a Missile Using a Multitier Approach; C.A. Phillips, J.C. Drake, JOURNAL OF SPACECRAFT AND ROCKETS ; Vol. 37, No. 5, September–October 2000