Rayleigh quotient

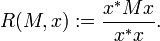

In mathematics, for a given complex Hermitian matrix $M$ and nonzero vector $x$, the Rayleigh quotient[1] $R(M, x)$ is defined as:[2][3]

$$R(M, x) := \frac{x^{*} M x}{x^{*} x}.$$
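The definition translates directly into code. The following is a minimal sketch in plain Python, with matrices stored as lists of rows. The function name `rayleigh_quotient` and the example matrix are illustrative choices, not taken from any library. The sketch assumes $M$ is Hermitian, so it returns only the real part of the quotient.

```python
def rayleigh_quotient(M, x):
    """Return R(M, x) = (x* M x) / (x* x).

    M is a square matrix given as a list of rows and x a nonzero vector;
    entries may be int, float or complex. M is assumed to be Hermitian, in
    which case the quotient is real, so only its real part is returned.
    """
    n = len(x)
    Mx = [sum(M[i][j] * x[j] for j in range(n)) for i in range(n)]
    numerator = sum(x[i].conjugate() * Mx[i] for i in range(n))     # x* M x
    denominator = sum((xi.conjugate() * xi).real for xi in x)       # x* x
    if denominator == 0:
        raise ValueError("x must be a nonzero vector")
    return (numerator / denominator).real


# A 2x2 Hermitian matrix: M[1][0] is the complex conjugate of M[0][1].
M = [[2, 1j], [-1j, 3]]
x = [1, 1j]
print(rayleigh_quotient(M, x))                             # 1.5
print(rayleigh_quotient(M, [(2 - 3j) * xi for xi in x]))   # 1.5 again (scale invariance)
```

Computing $x^{*}x$ as $\sum_i \bar{x}_i x_i$ rather than through `abs` keeps integer-valued examples exact.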

For real matrices and vectors, the condition of being Hermitian reduces to that of being symmetric, and the conjugate transpose $x^{*}$ to the usual transpose $x'$. Note that $R(M, cx) = R(M, x)$ for any nonzero real scalar $c$ (the same holds for any nonzero complex scalar, since $(cx)^{*} M (cx) = |c|^2 \, x^{*} M x$). Recall that a Hermitian (or real symmetric) matrix has real eigenvalues. It can be shown that, for a given matrix, the Rayleigh quotient reaches its minimum value $\lambda_{\min}$ (the smallest eigenvalue of $M$) when $x$ is $v_{\min}$ (the corresponding eigenvector). Similarly, $R(M, x) \leq \lambda_{\max}$ and $R(M, v_{\max}) = \lambda_{\max}$. The Rayleigh quotient is used in the min-max theorem to get exact values of all eigenvalues. It is also used in eigenvalue algorithms to obtain an eigenvalue approximation from an eigenvector approximation. Specifically, this is the basis for Rayleigh quotient iteration.
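To illustrate how the quotient supplies eigenvalue estimates, here is a sketch of Rayleigh quotient iteration for a real symmetric matrix. It is written in plain Python with a hand-written Gaussian elimination so that no external library is assumed; all function names are hypothetical, and a production code would use a library linear solver and handle more breakdown cases. Each step uses the current quotient $\mu = R(M, x)$ as a shift, solves $(M - \mu I)\,y = x$, and normalizes $y$.

```python
import math


def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


def rayleigh(M, x):
    """Rayleigh quotient x'Mx / x'x for a real symmetric M."""
    return sum(a * b for a, b in zip(x, matvec(M, x))) / sum(a * a for a in x)


def normalize(x):
    norm = math.sqrt(sum(a * a for a in x))
    return [a / norm for a in x]


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting.

    Raises ZeroDivisionError if A is exactly singular.
    """
    n = len(b)
    aug = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (aug[i][n] - s) / aug[i][i]
    return z


def rayleigh_quotient_iteration(M, x0, max_iter=50, tol=1e-12):
    """Refine an approximate eigenvector x0 of a real symmetric M; returns (mu, x)."""
    n = len(x0)
    x = normalize(x0)
    mu = rayleigh(M, x)
    for _ in range(max_iter):
        shifted = [[M[i][j] - (mu if i == j else 0.0) for j in range(n)] for i in range(n)]
        try:
            y = solve(shifted, x)
        except ZeroDivisionError:
            break  # M - mu I is exactly singular, so mu is already an eigenvalue
        x = normalize(y)
        new_mu = rayleigh(M, x)
        converged = abs(new_mu - mu) < tol
        mu = new_mu
        if converged:
            break
    return mu, x


M = [[2.0, 1.0], [1.0, 3.0]]
mu, v = rayleigh_quotient_iteration(M, [1.0, 0.0])
print(mu)  # approximately (5 - sqrt(5)) / 2, the smaller eigenvalue of M
```

For this $2 \times 2$ example, whose eigenvalues are $(5 \pm \sqrt{5})/2$, the iteration started from $x = (1, 0)$ settles on the smaller eigenvalue within a few steps. Which eigenvalue is reached depends on the starting vector.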

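The range of the Rayleigh quotient (the set of its values over all nonzero vectors $x$) is called the numerical range; for a Hermitian matrix it is the closed interval between the smallest and the largest eigenvalue.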

Special case of covariance matrices

An empirical covariance matrix $M$ can be represented as the product $A'A$ of the data matrix $A$ pre-multiplied by its transpose $A'$. Being a real symmetric matrix of this form, $M$ has non-negative eigenvalues and orthogonal (or orthogonalisable) eigenvectors, which can be demonstrated as follows.

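Firstly, that the eigenvalues $\lambda_i$ are non-negative:

$$M v_i = A'A v_i = \lambda_i v_i$$
$$\Rightarrow v_i' A'A v_i = \lambda_i \, v_i' v_i$$
$$\Rightarrow \|A v_i\|^2 = \lambda_i \|v_i\|^2$$
$$\Rightarrow \lambda_i = \frac{\|A v_i\|^2}{\|v_i\|^2} \geq 0.$$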

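Secondly, that the eigenvectors $v_i$ are orthogonal to one another:

$$M v_i = \lambda_i v_i$$
$$\Rightarrow v_j' M v_i = \lambda_i \, v_j' v_i$$
$$\Rightarrow (M v_j)' v_i = \lambda_i \, v_j' v_i \quad \text{(using } M' = M\text{)}$$
$$\Rightarrow \lambda_j \, v_j' v_i = \lambda_i \, v_j' v_i$$
$$\Rightarrow (\lambda_j - \lambda_i) \, v_j' v_i = 0$$
$$\Rightarrow v_j' v_i = 0$$

(if the eigenvalues are different; in the case of multiplicity, the basis can be orthogonalized).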
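Both properties can be checked numerically. This sketch (plain Python; function names are our own) forms $M = A'A$ for a small example data matrix, computes the eigenpairs of the resulting $2 \times 2$ symmetric matrix in closed form, and checks that the eigenvalues are non-negative, that $\lambda_i = \|A v_i\|^2 / \|v_i\|^2$, and that the eigenvectors are orthogonal. The data are arbitrary and not centered, which does not matter here because the argument applies to any matrix of the form $A'A$.

```python
import math


def gram(A):
    """Return M = A'A for a data matrix A given as a list of rows."""
    d = len(A[0])
    return [[sum(row[i] * row[j] for row in A) for j in range(d)] for i in range(d)]


def symmetric_2x2_eig(M):
    """Closed-form eigenpairs (lambda, v) of a real symmetric 2x2 matrix [[a, b], [b, c]]."""
    (a, b), (_, c) = M
    if b == 0:
        # Already diagonal: the coordinate axes are eigenvectors.
        return [(a, (1.0, 0.0)), (c, (0.0, 1.0))]
    mean, radius = (a + c) / 2, math.hypot((a - c) / 2, b)
    # For b != 0, v = (b, lambda - a) satisfies (M - lambda I) v = 0.
    return [(lam, (b, lam - a)) for lam in (mean + radius, mean - radius)]


def norm_ratio(A, v):
    """||A v||^2 / ||v||^2, which equals lambda when v is an eigenvector of A'A."""
    Av = [sum(a * b for a, b in zip(row, v)) for row in A]
    return sum(c * c for c in Av) / sum(c * c for c in v)


A = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # three observations of two variables
M = gram(A)                                # [[35.0, 44.0], [44.0, 56.0]]
(l1, v1), (l2, v2) = symmetric_2x2_eig(M)
print(l1, l2)                              # both non-negative
print(v1[0] * v2[0] + v1[1] * v2[1])       # close to 0: the eigenvectors are orthogonal
print(norm_ratio(A, v1), norm_ratio(A, v2))  # reproduce l1 and l2
```

For nearly rank-deficient data, rounding can make the smallest computed eigenvalue a tiny negative number even though the exact value is non-negative.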

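To now establish that the Rayleigh quotient is maximised by the eigenvector with the largest eigenvalue, take the eigenvectors $v_i$ to be normalized ($v_i' v_i = 1$) and consider decomposing an arbitrary vector $x$ on this orthonormal basis:

$$x = \sum_{i=1}^{n} \alpha_i v_i,$$

where $\alpha_i = x' v_i$ is the coordinate of $x$ orthogonally projected onto $v_i$, so

$$R(M, x) = \frac{x' A'A x}{x' x}$$

can be written

$$R(M, x) = \frac{\left(\sum_{j=1}^{n} \alpha_j v_j\right)' A'A \left(\sum_{i=1}^{n} \alpha_i v_i\right)}{\left(\sum_{j=1}^{n} \alpha_j v_j\right)' \left(\sum_{i=1}^{n} \alpha_i v_i\right)},$$

which, by orthonormality of the eigenvectors, becomes:

$$R(M, x) = \frac{\sum_{i=1}^{n} \alpha_i^2 \lambda_i}{\sum_{i=1}^{n} \alpha_i^2} = \sum_{i=1}^{n} \lambda_i \frac{(x' v_i)^2}{(x' x)(v_i' v_i)}.$$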

The last representation establishes that the Rayleigh quotient is the sum of the squared cosines of the angles formed by the vector $x$ and each eigenvector $v_i$, weighted by the corresponding eigenvalues.
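As a numerical check of this representation, the sketch below (plain Python, hypothetical helper names) takes a symmetric matrix whose eigenpairs are known in closed form and compares $R(M, x)$ with the eigenvalue-weighted sum of squared cosines. The squared cosines are non-negative and sum to 1, which is what makes the maximization a problem over a simplex.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def rayleigh(M, x):
    """x'Mx / x'x for a real symmetric M given as a list of rows."""
    Mx = [dot(row, x) for row in M]
    return dot(x, Mx) / dot(x, x)


def cosine_weights(x, eigenvectors):
    """Squared cosines (x'v_i)^2 / ((x'x)(v_i'v_i)) of the angles between x and each v_i."""
    return [dot(x, v) ** 2 / (dot(x, x) * dot(v, v)) for v in eigenvectors]


# M has eigenvalues 3 and 1 with eigenvectors (1, 1) and (1, -1). They are left
# unnormalized on purpose, since the squared cosines do not depend on their scale.
M = [[2.0, 1.0], [1.0, 2.0]]
eigenvalues = [3.0, 1.0]
eigenvectors = [[1.0, 1.0], [1.0, -1.0]]

x = [3.0, -0.5]
p = cosine_weights(x, eigenvectors)
expansion = sum(lam * p_i for lam, p_i in zip(eigenvalues, p))
print(rayleigh(M, x), expansion)  # both approximately 1.6757 (= 31 / 18.5)
print(sum(p))                     # the weights sum to 1, up to rounding
```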

If a vector $x$ maximizes $R(M, x)$, then any scalar multiple $kx$ (for $k \neq 0$) also maximizes $R$, so the problem can be reduced to the Lagrange problem of maximizing $\sum_{i=1}^{n} \alpha_i^2 \lambda_i$ under the constraint that $\sum_{i=1}^{n} \alpha_i^2 = 1$.

Let $p_i = \alpha_i^2$. This then becomes a linear program: maximize $\sum_{i=1}^{n} p_i \lambda_i$ subject to $p_i \geq 0$ and $\sum_{i=1}^{n} p_i = 1$. A linear function on this domain (a simplex) always attains its maximum at one of its corners. A maximum point will have $p_1 = 1$ and $p_i = 0$ for all $i > 1$ (when the eigenvalues are ordered by decreasing magnitude).

Thus, as advertised, the Rayleigh quotient is maximised by the eigenvector with the largest eigenvalue.

Formulation using Lagrange multipliers

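Alternatively, this result can be arrived at by the method of Lagrange multipliers. Since $R$ is unchanged by rescaling $x$, it suffices to consider unit vectors, on which $R(M, x) = x' M x$. The problem is then to find the critical points of the function

$$R(M, x) = x' M x,$$

subject to the constraint $\|x\|^2 = x' x = 1$, i.e. to find the critical points of

$$\mathcal{L}(x) = x' M x - \lambda \left(x' x - 1\right),$$

where $\lambda$ is a Lagrange multiplier. The stationary points of $\mathcal{L}(x)$ occur at

$$\frac{d\mathcal{L}(x)}{dx} = 0$$
$$\Rightarrow 2 x' M' - 2 \lambda x' = 0$$
$$\Rightarrow M x = \lambda x$$

and

$$R(M, x) = \frac{x' M x}{x' x} = \lambda \frac{x' x}{x' x} = \lambda.$$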

Therefore, the eigenvectors $x_1, \ldots, x_n$ of $M$ are the critical points of the Rayleigh quotient and their corresponding eigenvalues $\lambda_1, \ldots, \lambda_n$ are the stationary values of $R$.
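For a symmetric $M$, differentiating the quotient directly gives $\nabla_x R(M, x) = \frac{2}{x'x}\left(Mx - R(M, x)\,x\right)$, which vanishes exactly when $Mx = R(M, x)\,x$, i.e. at eigenvectors. The sketch below (plain Python, names are our own) evaluates this formula and cross-checks it against a central finite difference.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(M, x):
    return [dot(row, x) for row in M]


def rayleigh(M, x):
    return dot(x, matvec(M, x)) / dot(x, x)


def rayleigh_gradient(M, x):
    """Analytic gradient of R(M, x) for symmetric M: 2 (Mx - R(M, x) x) / (x'x)."""
    r, xx = rayleigh(M, x), dot(x, x)
    return [2.0 * (mx - r * xi) / xx for mx, xi in zip(matvec(M, x), x)]


def numeric_gradient(M, x, h=1e-6):
    """Central finite-difference approximation of the same gradient."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        grad.append((rayleigh(M, up) - rayleigh(M, down)) / (2 * h))
    return grad


M = [[2.0, 1.0], [1.0, 2.0]]
print(rayleigh_gradient(M, [1.0, 1.0]))  # [0.0, 0.0]: (1, 1) is an eigenvector, a critical point
print(rayleigh_gradient(M, [1.0, 0.0]))  # [0.0, 2.0]: not an eigenvector, so not critical
print(numeric_gradient(M, [1.0, 0.0]))   # close to [0.0, 2.0]
```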

This property is the basis for principal component analysis and canonical correlation.
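As an illustration of the principal component connection, the following sketch estimates the first principal direction of a small two-dimensional point set by power iteration on the scatter matrix $M = A'A$ of the centered data; at convergence, the Rayleigh quotient of the returned unit vector equals the largest eigenvalue. Power iteration is a swapped-in technique chosen for brevity, not a method described above, and the data and function names are hypothetical. Dividing $M$ by $n - 1$ to obtain the sample covariance would rescale the eigenvalues but leave the eigenvectors unchanged.

```python
import math


def scatter_matrix(points):
    """Center the data and return M = A'A, where the rows of A are the centered points."""
    n, d = len(points), len(points[0])
    means = [sum(p[j] for p in points) / n for j in range(d)]
    A = [[p[j] - means[j] for j in range(d)] for p in points]
    return [[sum(row[i] * row[j] for row in A) for j in range(d)] for i in range(d)]


def power_iteration(M, iters=200):
    """Approximate the dominant eigenpair of a symmetric positive semi-definite M.

    The start vector is an arbitrary choice: it must not be orthogonal to the
    dominant eigenvector, and convergence assumes the largest eigenvalue is simple.
    """
    d = len(M)
    v = [float(i + 1) for i in range(d)]
    for _ in range(iters):
        w = [sum(M[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(a * a for a in w))
        v = [a / norm for a in w]
    Mv = [sum(M[i][j] * v[j] for j in range(d)) for i in range(d)]
    # v has unit length, so v'Mv is its Rayleigh quotient.
    return sum(a * b for a, b in zip(v, Mv)), v


points = [(2.0, 2.0), (-2.0, -2.0), (1.0, -1.0), (-1.0, 1.0)]
M = scatter_matrix(points)        # [[10.0, 6.0], [6.0, 10.0]]
lam, v = power_iteration(M)
print(lam, v)                     # about 16.0 and (0.7071, 0.7071)
```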

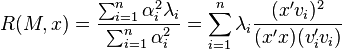

Use in Sturm–Liouville theory

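Sturm–Liouville theory concerns the action of the linear operator

$$L(y) = \frac{1}{w(x)}\left(-\frac{d}{dx}\left[p(x)\frac{dy}{dx}\right] + q(x)\,y\right)$$

on the inner product space defined by

$$\langle y_1, y_2 \rangle = \int_a^b w(x)\, y_1(x)\, y_2(x)\, dx$$

of functions satisfying some specified boundary conditions at a and b. In this case the Rayleigh quotient is

$$\frac{\langle y, Ly \rangle}{\langle y, y \rangle} = \frac{\int_a^b y(x)\left(-\frac{d}{dx}\left[p(x)\frac{dy}{dx}\right] + q(x)\,y(x)\right) dx}{\int_a^b w(x)\, y(x)^2\, dx}.$$

This is sometimes presented in an equivalent form, obtained by separating the integral in the numerator and using integration by parts:

$$\frac{\langle y, Ly \rangle}{\langle y, y \rangle} = \frac{\int_a^b y(x)\left(-\frac{d}{dx}\left[p(x)\,y'(x)\right]\right) dx + \int_a^b q(x)\, y(x)^2\, dx}{\int_a^b w(x)\, y(x)^2\, dx}$$

$$= \frac{-y(x)\left[p(x)\,y'(x)\right]\Big|_a^b + \int_a^b y'(x)\left[p(x)\,y'(x)\right] dx + \int_a^b q(x)\, y(x)^2\, dx}{\int_a^b w(x)\, y(x)^2\, dx}$$

$$= \frac{-p(x)\,y(x)\,y'(x)\Big|_a^b + \int_a^b \left[p(x)\,y'(x)^2 + q(x)\, y(x)^2\right] dx}{\int_a^b w(x)\, y(x)^2\, dx}.$$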
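The integrated-by-parts form can be evaluated numerically for a given trial function. The sketch below (plain Python with composite Simpson quadrature; function names are hypothetical) takes the simplest case $p = w = 1$, $q = 0$ on $[0, \pi]$ with boundary conditions $y(0) = y(\pi) = 0$, i.e. $-y'' = \lambda y$, whose smallest eigenvalue is $1$ with eigenfunction $\sin x$. The eigenfunction gives a quotient of $1$, while the trial function $x(\pi - x)$ gives $10/\pi^2 \approx 1.0132$, slightly above the smallest eigenvalue, consistent with the minimum property of the matrix case.

```python
import math


def simpson(f, a, b, n=1000):
    """Composite Simpson's rule on [a, b] with n subintervals (n is rounded up to even)."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


def sturm_liouville_rayleigh(y, dy, p, q, w, a, b):
    """Rayleigh quotient of a trial function y (derivative dy) in the integrated-by-parts
    form: (-p y y' |_a^b + int (p y'^2 + q y^2) dx) / int w y^2 dx."""
    boundary = -(p(b) * y(b) * dy(b) - p(a) * y(a) * dy(a))
    numerator = boundary + simpson(lambda x: p(x) * dy(x) ** 2 + q(x) * y(x) ** 2, a, b)
    denominator = simpson(lambda x: w(x) * y(x) ** 2, a, b)
    return numerator / denominator


def one(x):
    return 1.0


def zero(x):
    return 0.0


# -y'' = lambda y on [0, pi] with y(0) = y(pi) = 0: p = w = 1, q = 0.
exact = sturm_liouville_rayleigh(math.sin, math.cos, one, zero, one, 0.0, math.pi)
trial = sturm_liouville_rayleigh(lambda x: x * (math.pi - x), lambda x: math.pi - 2 * x,
                                 one, zero, one, 0.0, math.pi)
print(exact)  # about 1.0, the smallest eigenvalue
print(trial)  # about 10 / pi**2 = 1.0132, slightly above it
```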

Generalization

For a given pair $(A, B)$ of matrices, and a given non-zero vector $x$, the generalized Rayleigh quotient is defined as:

$$R(A, B; x) := \frac{x^{*} A x}{x^{*} B x}.$$

The generalized Rayleigh quotient can be reduced to the Rayleigh quotient $R(D, C^{*} x)$ through the transformation $D = C^{-1} A \left(C^{*}\right)^{-1}$, where $C C^{*}$ is the Cholesky decomposition of the Hermitian positive-definite matrix $B$. Indeed, $x^{*} B x = (C^{*} x)^{*} (C^{*} x)$ and $x^{*} A x = (C^{*} x)^{*} D \,(C^{*} x)$.
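The reduction can be verified numerically in the real symmetric case, where $C^{*}$ is simply $C'$. This sketch (plain Python; the Cholesky routine and other helpers are hand-written for self-containment, and the matrices are arbitrary examples) computes $C$, forms $D = C^{-1} A (C')^{-1}$, and checks that $R(A, B; x) = R(D, C'x)$.

```python
import math


def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(col) for col in zip(*A)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cholesky(B):
    """Lower-triangular C with C C' = B, for a real symmetric positive-definite B."""
    n = len(B)
    C = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = B[i][j] - sum(C[i][k] * C[j][k] for k in range(j))
            if i == j:
                if s <= 0:
                    raise ValueError("B is not positive definite")
                C[i][i] = math.sqrt(s)
            else:
                C[i][j] = s / C[j][j]
    return C


def lower_triangular_inverse(C):
    """Invert a lower-triangular C column by column with forward substitution."""
    n = len(C)
    inv = [[0.0] * n for _ in range(n)]
    for col in range(n):
        for i in range(n):
            rhs = (1.0 if i == col else 0.0) - sum(C[i][k] * inv[k][col] for k in range(i))
            inv[i][col] = rhs / C[i][i]
    return inv


def generalized_rayleigh(A, B, x):
    return dot(x, matvec(A, x)) / dot(x, matvec(B, x))


A = [[2.0, 1.0], [1.0, 3.0]]
B = [[4.0, 2.0], [2.0, 3.0]]      # symmetric positive definite
x = [1.0, -1.0]

C = cholesky(B)
C_inv = lower_triangular_inverse(C)
D = matmul(matmul(C_inv, A), transpose(C_inv))   # D = C^{-1} A (C')^{-1}
y = matvec(transpose(C), x)                       # y = C'x
print(generalized_rayleigh(A, B, x))              # 1.0
print(dot(y, matvec(D, y)) / dot(y, y))           # 1.0 up to rounding
```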

See also

- Field of values

References

- [1] Also known as the Rayleigh–Ritz ratio; named after Walther Ritz and Lord Rayleigh.

- [2] Horn, R. A. and C. R. Johnson. 1985. Matrix Analysis. Cambridge University Press. pp. 176–180.

- [3] Parlett, B. N. 1998. The Symmetric Eigenvalue Problem. SIAM, Classics in Applied Mathematics.

Further reading

- Shi Yu, Léon-Charles Tranchevent, Bart De Moor, Yves Moreau. Kernel-based Data Fusion for Machine Learning: Methods and Applications in Bioinformatics and Text Mining, Ch. 2, Springer, 2011.
