Racetrack memory


Racetrack memory, or domain-wall memory (DWM), is an experimental non-volatile memory device under development at IBM's Almaden Research Center by a team led by Stuart Parkin.[1] In early 2008, a 3-bit version was successfully demonstrated.[2] If it is developed successfully, racetrack memory would offer a storage density higher than that of comparable solid-state memory devices such as flash memory and similar to that of conventional disk drives, along with much higher read/write performance. It is one of a number of new technologies aiming to become a universal memory.

Description

Racetrack memory uses a spin-coherent electric current to move magnetic domains along a nanoscopic permalloy wire about 200 nm across and 100 nm thick. As current is passed through the wire, the domains pass by magnetic read/write heads positioned near the wire, and the write heads alter the domains to record patterns of bits. A racetrack memory device is made up of many such wires and read/write elements. In its general operational concept, racetrack memory is similar to the earlier bubble memory of the 1960s and 1970s. Delay-line memory, such as the mercury delay lines of the 1940s and 1950s used in the UNIVAC and EDSAC computers, is a still earlier form of similar technology. Like bubble memory, racetrack memory uses electrical currents to "push" a magnetic pattern through a substrate. Dramatic improvements in magnetic detection, based on the development of spintronic magnetoresistive-sensing materials and devices, allow the use of much smaller magnetic domains and hence far higher bit densities.
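In operation, each wire behaves like a shift register (the term used in the 2008 demonstration paper by Hayashi et al.): a bit must first be shifted under a head before it can be read or written. The following is a minimal Python sketch under simplifying assumptions: one wire, one combined read/write head, and an idealized shift of exactly one bit position per current pulse. The class `Racetrack` and its methods are hypothetical names for illustration, not a real device interface.

```python
class Racetrack:
    """Toy model of a single racetrack wire acting as a shift register.

    Hypothetical simplification: the domains form a fixed row of bits and one
    combined read/write head sits over whichever index was accessed last.
    Each current pulse moves the whole pattern by one position.
    """

    def __init__(self, n_bits: int) -> None:
        if n_bits <= 0:
            raise ValueError("a track must hold at least one bit")
        self.bits = [0] * n_bits  # magnetization of each domain, as 0 or 1
        self.under_head = 0       # index of the domain currently at the head

    def _shift_to(self, index: int) -> int:
        """Move the pattern so `index` is under the head; return pulses used."""
        if not 0 <= index < len(self.bits):
            raise IndexError("bit index outside the track")
        pulses = abs(index - self.under_head)
        self.under_head = index
        return pulses

    def read(self, index: int) -> tuple[int, int]:
        """Return (bit value, number of shift pulses needed to reach it)."""
        pulses = self._shift_to(index)
        return self.bits[index], pulses

    def write(self, index: int, value: int) -> int:
        """Store `value` at `index`; return the number of shift pulses used."""
        if value not in (0, 1):
            raise ValueError("value must be 0 or 1")
        pulses = self._shift_to(index)
        self.bits[index] = value
        return pulses


track = Racetrack(8)
track.write(5, 1)  # 5 pulses: domain 5 travels to the head
track.read(5)      # (1, 0): already under the head
track.read(2)      # (0, 3): shift back three positions
```

In this model the pulse count grows with the distance between successive accesses, which is why sequential access along a track is cheaper than random access.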

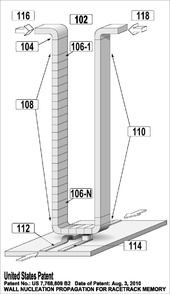

Production wires are expected to scale down to around 50 nm. There are two ways to arrange racetrack memory. The simplest is a series of flat wires arranged in a grid with read and write heads arranged nearby. A more widely studied arrangement uses U-shaped wires arranged vertically over a grid of read/write heads on an underlying substrate. This allows the wires to be much longer without increasing their 2D footprint, although the need to move individual domains farther along the wires before they reach the read/write heads results in slower random access times. This does not present a real performance bottleneck, because both arrangements offer about the same throughput. The primary concern in terms of construction is therefore practical: whether the 3D vertical arrangement can be mass-produced.

Comparison to other memory devices

Current projections suggest that racetrack memory will offer performance on the order of 20–32 ns to read or write a random bit. This compares to about 10,000,000 ns (10 ms) for a hard drive, or 20–30 ns for conventional DRAM. The authors of the primary work also discuss ways to improve access times with the use of a "reservoir", reducing them to about 9.5 ns. Aggregate throughput, with or without the reservoir, is on the order of 250–670 Mbit/s for racetrack memory, compared to 12,800 Mbit/s for a single DDR3 DRAM, 1,000 Mbit/s for high-performance hard drives, and a much slower 30–100 Mbit/s for flash memory devices. The only current technology that offers a clear latency benefit over racetrack memory is SRAM, at about 0.2 ns, but it is more expensive and currently has a feature size of about 45 nm with a cell area of about 140 F².[3][4]
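As a rough check on these comparisons, the latency ratios can be computed directly from the quoted figures. This is a minimal Python sketch; the constants are the approximate values given in the text, and the helper name `speedup` is hypothetical.

```python
# Approximate random-access latencies quoted above, in nanoseconds.
RACETRACK_NS = (20, 32)     # projected range for racetrack memory
HARD_DRIVE_NS = 10_000_000  # about 10 ms
SRAM_NS = 0.2


def speedup(slow_ns: float, fast_ns: float) -> float:
    """How many times faster an access taking fast_ns is than one taking slow_ns."""
    return slow_ns / fast_ns


# Racetrack versus a hard drive, at both ends of the projected range.
hdd_vs_racetrack = [speedup(HARD_DRIVE_NS, t) for t in RACETRACK_NS]
# SRAM versus racetrack, at both ends of the projected range.
sram_vs_racetrack = [speedup(t, SRAM_NS) for t in RACETRACK_NS]
```

With these figures a random racetrack access is roughly 312,500 to 500,000 times faster than a hard-drive access, while SRAM remains about 100 to 160 times faster than racetrack.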

Flash memory, in particular, is a highly asymmetrical device. Although read performance is fairly fast, especially compared to a hard drive, writing is much slower. Flash memory works by "trapping" electrons in the chip surface and requires a burst of high voltage to remove this charge and reset the cell. To do this, charge is accumulated in a device known as a charge pump, which takes a relatively long time to charge up. In the case of NOR flash memory, which allows random bit-wise access like racetrack memory, read times are on the order of 70 ns, while write times are much slower, about 2,500 ns. To address this concern, NAND flash memory allows reading and writing only in large blocks, but this means that the time to access any random bit is greatly increased, to about 1,000 ns. In addition, the burst of high voltage physically degrades the cell, so most flash devices allow on the order of 100,000 writes to any particular bit before their operation becomes unpredictable. Wear leveling and other techniques can spread these writes across many cells, but only if the underlying data can be rearranged.
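Wear leveling works by remapping logical addresses to physical cells so that repeated writes to one address do not keep landing on the same cell. The following is a minimal Python sketch of one simple dynamic scheme, assuming each rewrite goes to the least-worn free block and the stale copy is then released. The class `WearLeveler` is hypothetical and far simpler than a real flash controller; its remapping table is what lets the data be rearranged.

```python
class WearLeveler:
    """Toy dynamic wear leveling over a small pool of physical blocks."""

    def __init__(self, n_physical: int) -> None:
        self.wear = [0] * n_physical        # program/erase count per block
        self.free = set(range(n_physical))  # blocks holding no live data
        self.mapping: dict[int, int] = {}   # logical block -> physical block

    def write(self, logical: int) -> int:
        """Write `logical` to the least-worn free block; return that block."""
        if not self.free:
            raise RuntimeError("no free physical block available")
        # Prefer the least-worn block; break ties by block number.
        target = min(self.free, key=lambda p: (self.wear[p], p))
        self.free.remove(target)
        old = self.mapping.get(logical)
        if old is not None:
            self.free.add(old)  # the stale copy can be erased and reused
        self.wear[target] += 1
        self.mapping[logical] = target
        return target


leveler = WearLeveler(4)
for _ in range(100):
    leveler.write(0)  # the same logical address, rewritten 100 times
print(leveler.wear)   # [25, 25, 25, 25] instead of 100 writes to one block
```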

The key determinant of the cost of any memory device is the physical size of the storage medium, because of the way memory devices are fabricated. In the case of solid-state devices like flash memory or DRAM, a large "wafer" of silicon is processed into many individual devices, which are then cut apart and packaged. Packaging costs about $1 per device, so as density increases and the number of bits per device rises with it, the packaging cost per bit falls proportionally. In the case of hard drives, data is stored on a number of rotating platters, and the cost of the device is strongly related to the number of platters. Increasing the density allows the number of platters to be reduced for any given amount of storage.
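The effect of a fixed per-device cost can be shown with simple arithmetic. This is a minimal Python sketch that models only the roughly $1 packaging cost quoted above, ignoring wafer processing and every other cost; the function name is hypothetical.

```python
PACKAGING_COST_USD = 1.0  # approximate per-device figure from the text


def packaging_cost_per_bit(bits_per_device: int,
                           packaging_cost: float = PACKAGING_COST_USD) -> float:
    """Share of the fixed packaging cost carried by each stored bit."""
    if bits_per_device <= 0:
        raise ValueError("a device must store at least one bit")
    return packaging_cost / bits_per_device


# Doubling the bits per device halves the packaging cost carried by each bit.
one_gibit = packaging_cost_per_bit(2**30)
two_gibit = packaging_cost_per_bit(2**31)
```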

In most cases, memory devices store one bit in any given location, so they are typically compared in terms of "cell size", a cell storing one bit. Cell size itself is given in units of F², where F is the design rule, usually representing the metal line width. Flash and racetrack both store multiple bits per cell, but the comparison can still be made. For instance, modern hard drives appear to be rapidly approaching their current theoretical limit of around 650 nm²/bit,[5] which is set primarily by the ability to read and write tiny patches of the magnetic surface. DRAM has a cell size of about 6 F²; SRAM is much worse at 120 F². NAND flash memory is currently the densest form of non-volatile memory in widespread use, with a cell size of about 4.5 F², but it stores three bits per cell for an effective size of 1.5 F². NOR flash memory is slightly less dense, at an effective 4.75 F², accounting for 2-bit operation on a 9.5 F² cell.[4] In the vertical (U-shaped) racetrack, about 10–20 bits are stored per cell, which itself can have a physical size of at least about 20 F². In addition, bits at different positions on the "track" would take different times (from ~10 ns to nearly a microsecond, or 10 ns/bit) to be accessed by the read/write sensor, because the "track" is moved at a fixed rate (~100 m/s) past the sensor.
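The cell-size figures above reduce to dividing physical cell area by the number of bits stored, and the conversion in reference [5] follows from 1 inch = 2.54 × 10⁷ nm. This is a minimal Python sketch using the quoted numbers; the function names are hypothetical, and the access-time helper assumes, as the text does, a constant 10 ns per bit position.

```python
NM_PER_INCH = 2.54e7  # exact: 1 inch = 25.4 mm


def effective_cell_f2(cell_f2: float, bits_per_cell: float) -> float:
    """Area per stored bit in units of F^2."""
    return cell_f2 / bits_per_cell


def nm2_per_bit(tbit_per_in2: float) -> float:
    """Convert an areal density in Tbit/in^2 to the area of one bit in nm^2."""
    return NM_PER_INCH**2 / (tbit_per_in2 * 1e12)


def access_time_ns(shift_positions: int, ns_per_bit: float = 10.0) -> float:
    """Time to bring a bit to the sensor, assuming a constant shift rate."""
    return shift_positions * ns_per_bit


nand = effective_cell_f2(4.5, 3)         # 1.5 F^2 (three bits per cell)
nor = effective_cell_f2(9.5, 2)          # 4.75 F^2 (two bits per cell)
# Best case for racetrack, since 20 F^2 is a lower bound on the cell size.
racetrack = (effective_cell_f2(20, 20),  # 1.0 F^2 with 20 bits per cell
             effective_cell_f2(20, 10))  # 2.0 F^2 with 10 bits per cell
hdd_limit = nm2_per_bit(1.0)             # about 645 nm^2, i.e. roughly 650
```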

Racetrack memory is one of a number of new technologies aiming to replace flash memory and potentially offer a "universal" memory device applicable to a wide variety of roles. Other leading contenders include magnetoresistive random-access memory (MRAM), phase-change memory (PCRAM) and ferroelectric RAM (FeRAM). Most of these technologies offer densities similar to flash memory, in most cases worse, and their primary advantage is the lack of write-endurance limits like those in flash memory. Field-MRAM offers excellent performance, with access times as short as 3 ns, but requires a large 25–40 F² cell. It might see use as an SRAM replacement, but not as a mass storage device. The highest density of any of these devices is offered by PCRAM, which has a cell size of about 5.8 F², similar to flash memory, as well as fairly good performance around 50 ns. Nevertheless, none of these comes close to competing with racetrack memory in overall terms, especially density. For example, at about 10 ns per bit, 50 ns allows about five bits to be accessed in a racetrack memory device, resulting in an effective cell size of 20/5 = 4 F², easily exceeding the performance-density product of PCRAM. On the other hand, without sacrificing bit density, the same 20 F² area can also fit 2.5 alternative 2-bit, 8 F² memory cells (such as resistive RAM (RRAM) or spin-torque transfer MRAM), each of which operates much faster (~10 ns).
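The density argument at the end of this paragraph is arithmetic on figures from the text: a racetrack cell of about 20 F² whose bits are reachable at about 10 ns each, and alternative cells of 8 F² holding 2 bits. This is a minimal Python sketch with hypothetical function names, counting only the racetrack bits reachable within a given time budget.

```python
RACETRACK_CELL_F2 = 20.0  # lower-bound physical cell size quoted above
NS_PER_BIT = 10.0         # time to shift one bit position past the sensor


def racetrack_f2_per_bit(time_budget_ns: float) -> float:
    """Effective area per bit if only bits reachable within the budget count."""
    bits = int(time_budget_ns // NS_PER_BIT)
    if bits == 0:
        raise ValueError("budget too short to reach any bit")
    return RACETRACK_CELL_F2 / bits


def alternative_f2_per_bit(cell_f2: float = 8.0, bits_per_cell: int = 2) -> float:
    """Effective area per bit for a 2-bit RRAM or STT-MRAM style cell."""
    return cell_f2 / bits_per_cell


racetrack_50ns = racetrack_f2_per_bit(50)  # 5 bits in 20 F^2 -> 4.0 F^2
alternative = alternative_f2_per_bit()    # 2 bits in 8 F^2  -> 4.0 F^2
```

Both come out at 4 F² per bit, which is the sense in which the alternative cells match racetrack density at a 50 ns budget while each alternative cell responds in about 10 ns.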

A difficulty for this technology arises from the need for high current density (>10⁸ A/cm²); a 30 nm × 100 nm cross-section would require >3 mA. The resulting power draw would be higher than that of, for example, spin-torque transfer memory or flash memory.
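The current figure follows from multiplying the required current density by the wire's cross-sectional area. This is a minimal Python sketch with the unit conversion spelled out; the function name is hypothetical.

```python
NM_TO_CM = 1e-7  # 1 nm = 1e-7 cm


def drive_current_ma(current_density_a_per_cm2: float,
                     width_nm: float, thickness_nm: float) -> float:
    """Current in mA needed to reach the given density in a rectangular wire."""
    area_cm2 = (width_nm * NM_TO_CM) * (thickness_nm * NM_TO_CM)
    return current_density_a_per_cm2 * area_cm2 * 1e3  # A -> mA


# 1e8 A/cm^2 through a 30 nm x 100 nm cross-section (3e-11 cm^2)
current = drive_current_ma(1e8, 30, 100)  # about 3 mA
```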

Development difficulties

One limitation of the early experimental devices was that the magnetic domains could be pushed only slowly through the wires, requiring current pulses on the order of microseconds to move them successfully. This was unexpected, and led to performance roughly equal to that of hard drives, as much as 1,000 times slower than predicted. Recent research at the University of Hamburg has traced this problem to microscopic imperfections in the crystal structure of the wires, at which the domains became "stuck". Using an X-ray microscope to directly image the boundaries between the domains, the researchers found that domain walls could be moved by pulses as short as a few nanoseconds when these imperfections were absent. This corresponds to a domain-wall velocity of about 110 m/s.[6]

The voltage required to drive the domains along the racetrack would be proportional to the length of the wire. The current density must be sufficiently high to push the domain walls (as in electromigration).
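The proportionality to length follows from Ohm's law for a wire of uniform cross-section A: the drive current I = JA flows through a resistance R = ρL/A, so V = IR = ρJL, and at a fixed current density J the voltage grows linearly with the length L. This is a minimal Python sketch assuming simple ohmic behaviour; the text gives no resistivity, so ρ is a parameter and the value used below is a hypothetical placeholder, as is the function name.

```python
def drive_voltage_v(current_density_a_per_m2: float,
                    resistivity_ohm_m: float,
                    length_m: float) -> float:
    """Voltage across an ohmic wire: V = I*R = (J*A) * (rho*L/A) = rho*J*L."""
    return resistivity_ohm_m * current_density_a_per_m2 * length_m


J = 1e12    # 1e8 A/cm^2 expressed in A/m^2
RHO = 2e-7  # hypothetical resistivity in ohm-metres, for illustration only
short_wire = drive_voltage_v(J, RHO, 10e-6)  # 10 um wire
long_wire = drive_voltage_v(J, RHO, 20e-6)   # 20 um wire, twice as long
```

Doubling the wire length doubles the required voltage whatever value of ρ is assumed.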

See also

- Bubble memory

- Giant magnetoresistance (GMR) effect

- Magnetoresistive random-access memory (MRAM)

- Spintronics

- Spin transistor

References

- [1] Spintronics Devices Research, Magnetic Racetrack Memory Project.

- [2] Masamitsu Hayashi et al., "Current-Controlled Magnetic Domain-Wall Nanowire Shift Register", Science, vol. 320, no. 5873, pp. 209–211, April 2008, doi:10.1126/science.1154587.

- [3] "ITRS 2011". Retrieved 8 November 2012.

- [4] Parkin et al., "Magnetic Domain-Wall Racetrack Memory", Science, vol. 320, p. 190 (11 April 2008), doi:10.1126/science.1145799.

- [5] 1 Tbit/in² is approximately 650 nm²/bit.

- [6] "'Racetrack' memory could gallop past the hard disk".

External links

- Redefining the Architecture of Memory

- IBM Moves Closer to New Class of Memory (YouTube video)

- IBM Racetrack Memory Project
