Probability-generating function

In probability theory, the probability-generating function of a discrete random variable is a power series representation (the generating function) of the probability mass function of the random variable. Probability-generating functions are often employed for their succinct description of the sequence of probabilities Pr(X = i) in the probability mass function for a random variable X, and to make available the well-developed theory of power series with non-negative coefficients.

Definition

Univariate case

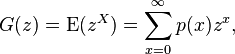

If X is a discrete random variable taking values in the non-negative integers {0,1, ...}, then the probability-generating function of X is defined as [1]

where p is the probability mass function of X. Note that the subscripted notations GX and pX are often used to emphasize that these pertain to a particular random variable X, and to its distribution. The power series converges absolutely at least for all complex numbers z with |z| ≤ 1; in many examples the radius of convergence is larger.
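As a small illustration of the univariate definition, the sketch below evaluates G(z) = Σ p(x) z^x for a distribution with finite support. The helper name `pgf` and the example distribution (a fair die relabelled to take the values 0 to 5) are assumptions made for this example, not part of the source.

```python
from fractions import Fraction


def pgf(pmf, z):
    """Evaluate G(z) = sum_x p(x) * z**x, where pmf[x] = Pr(X = x)."""
    return sum(p * z**x for x, p in enumerate(pmf))


# Hypothetical example: a fair die relabelled to take the values 0..5.
die = [Fraction(1, 6)] * 6

g_at_one = pgf(die, 1)                 # the probabilities sum to one
g_at_half = pgf(die, Fraction(1, 2))   # value inside the unit disc
```

Exact rational arithmetic is used here only so that the normalization G(1) = 1 can be checked without rounding error; floating-point probabilities would work the same way.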

Multivariate case

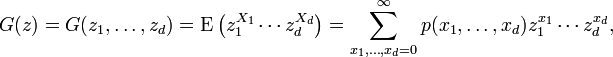

If X = (X1,...,Xd ) is a discrete random variable taking values in the d-dimensional non-negative integer lattice {0,1, ...}d, then the probability-generating function of X is defined as

where p is the probability mass function of X. The power series converges absolutely at least for all complex vectors z = (z1,...,zd ) ∈ ℂd with max{|z1|,...,|zd |} ≤ 1.
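The multivariate definition can be sketched the same way, with each probability p(x_1, ..., x_d) weighting the monomial z_1^{x_1} ⋯ z_d^{x_d}. The function name `pgf_multi` and the two-coin example are assumptions for illustration only.

```python
from fractions import Fraction
from math import prod


def pgf_multi(pmf, z):
    """Evaluate G(z_1..z_d) = sum_x p(x) * prod_i z_i**x_i.

    pmf maps tuples (x_1, ..., x_d) to probabilities.
    """
    return sum(p * prod(zi**xi for zi, xi in zip(z, x)) for x, p in pmf.items())


# Hypothetical example: two independent fair coin flips, d = 2.
coins = {(a, b): Fraction(1, 4) for a in (0, 1) for b in (0, 1)}

total = pgf_multi(coins, (1, 1))                            # normalization
value = pgf_multi(coins, (Fraction(1, 2), Fraction(1, 3)))  # a point with max |z_i| <= 1
```

Because the two coordinates in this example are independent, the result factorizes as ((1 + z_1)/2)((1 + z_2)/2).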

Properties

Power series

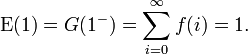

Probability-generating functions obey all the rules of power series with non-negative coefficients. In particular, G(1−) = 1, where G(1−) = limz→1G(z) from below, since the probabilities must sum to one. So the radius of convergence of any probability-generating function must be at least 1, by Abel's theorem for power series with non-negative coefficients.

Probabilities and expectations

The following properties allow the derivation of various basic quantities related to X:

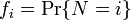

1. The probability mass function of X is recovered by taking derivatives of G

2. It follows from Property 1 that if random variables X and Y have probability generating functions that are equal, GX = GY, then pX = pY. That is, if X and Y have identical probability-generating functions, then they have identical distributions.

3. The normalization of the probability density function can be expressed in terms of the generating function by

The expectation of X is given by

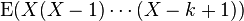

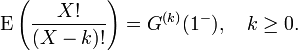

More generally, the kth factorial moment,  of X is given by

of X is given by

So the variance of X is given by

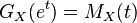

4.  where X is a random variable,

where X is a random variable,  is the probability generating function (of X) and

is the probability generating function (of X) and  is the moment-generating function (of X) .

is the moment-generating function (of X) .
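The properties above can be checked on a small case. The sketch below stores a probability-generating function with finite support as a list of coefficients (so `G[k]` is Pr(X = k)), differentiates it term by term, and reads off Pr(X = 1) = G'(0)/1!, the mean G'(1), the variance G''(1) + G'(1) − G'(1)², and the relation G(e^t) = M(t). The helper names and the Binomial(2, 1/2) example are assumptions for illustration.

```python
import math
from fractions import Fraction


def derivative(coeffs):
    """Coefficients of the term-by-term derivative of sum_k coeffs[k] * z**k."""
    return [k * c for k, c in enumerate(coeffs)][1:]


def nth_derivative(coeffs, n):
    """Apply `derivative` n times."""
    for _ in range(n):
        coeffs = derivative(coeffs)
    return coeffs


def evaluate(coeffs, z):
    """Evaluate the polynomial sum_k coeffs[k] * z**k."""
    return sum(c * z**k for k, c in enumerate(coeffs))


# Hypothetical example: Binomial(2, 1/2), with pmf 1/4, 1/2, 1/4 on {0, 1, 2}.
G = [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)]

p1 = evaluate(nth_derivative(G, 1), 0) / math.factorial(1)  # Pr(X = 1)
mean = evaluate(derivative(G), 1)                           # E(X) = G'(1)
second = evaluate(nth_derivative(G, 2), 1)                  # E(X(X-1)) = G''(1)
variance = second + mean - mean**2

# Relation to the moment-generating function: G(e^t) = E(e^{tX}).
t = 0.3
pgf_at_exp = evaluate(G, math.exp(t))
mgf = sum(float(p) * math.exp(t * x) for x, p in enumerate(G))
```

For a distribution with finite support the left limits G'(1⁻) and G''(1⁻) are ordinary values of polynomials, which is why plain evaluation at 1 suffices in this sketch.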

Functions of independent random variables

Probability-generating functions are particularly useful for dealing with functions of independent random variables. For example:

- If X1, X2, ..., Xn is a sequence of independent (and not necessarily identically distributed) random variables, and

- where the ai are constants, then the probability-generating function is given by

- For example, if

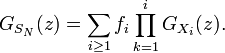

- then the probability-generating function, GSn(z), is given by

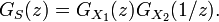

- It also follows that the probability-generating function of the difference of two independent random variables S = X1 − X2 is

- Suppose that N is also an independent, discrete random variable taking values on the non-negative integers, with probability-generating function GN. If the X1, X2, ..., XN are independent and identically distributed with common probability-generating function GX, then

- This can be seen, using the law of total expectation, as follows:

- This last fact is useful in the study of Galton–Watson processes.

- Suppose again that N is also an independent, discrete random variable taking values on the non-negative integers, with probability-generating function GN and probability density

. If the X1, X2, ..., XN are independent, but not identically distributed random variables, where

. If the X1, X2, ..., XN are independent, but not identically distributed random variables, where  denotes the probability generating function of

denotes the probability generating function of  , then

, then

- For identically distributed Xi this simplifies to the identity stated before. The general case is sometimes useful to obtain a decomposition of SN by means of generating functions.
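For distributions with finite support, the rules in this list reduce to polynomial arithmetic on coefficient lists: the product of generating functions is a convolution of the probability sequences, and the random-sum rule G_N(G_X(z)) is a polynomial composition. The following is a minimal sketch under that assumption. The names `poly_mul`, `poly_compose` and `random_sum_pgf`, and the example distributions, are hypothetical.

```python
from fractions import Fraction


def poly_mul(a, b):
    """Coefficients of the product of two polynomials (a convolution)."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def poly_compose(outer, inner):
    """Coefficients of outer(inner(z)), computed with Horner's rule."""
    result = [outer[-1]]
    for c in reversed(outer[:-1]):
        result = poly_mul(result, inner)
        result[0] += c
    return result


def random_sum_pgf(f, pgfs):
    """Coefficients of sum_i f[i] * prod_{k<=i} pgfs[k-1]; the i = 0 product is 1.

    Assumes len(pgfs) >= len(f) - 1.
    """
    degree = sum(len(g) - 1 for g in pgfs[:len(f) - 1])
    total = [0] * (degree + 1)
    partial = [1]
    for i, fi in enumerate(f):
        if i > 0:
            partial = poly_mul(partial, pgfs[i - 1])
        for k, c in enumerate(partial):
            total[k] += fi * c
    return total


# Hypothetical example: X_i are fair Bernoulli variables, N is uniform on {0, 1, 2}.
bernoulli = [Fraction(1, 2), Fraction(1, 2)]
G_N = [Fraction(1, 3)] * 3

sum_two = poly_mul(bernoulli, bernoulli)               # pgf of X_1 + X_2
compound = poly_compose(G_N, bernoulli)                # G_N(G_X(z))
general = random_sum_pgf(G_N, [bernoulli, bernoulli])  # sum over i of f_i * product
```

In this example `compound` and `general` agree, which illustrates how the formula for non-identically distributed summands reduces to the composition rule when all summands share one distribution.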

Examples

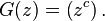

- The probability-generating function of a constant random variable, i.e. one with Pr(X = c) = 1, is

- The probability-generating function of a binomial random variable, the number of successes in n trials, with probability p of success in each trial, is

- Note that this is the n-fold product of the probability-generating function of a Bernoulli random variable with parameter p.

- The probability-generating function of a negative binomial random variable on {0,1,2 ...}, the number of failures until the rth success with probability of success in each trial p, is

- (Convergence for

).

).

- Note that this is the r-fold product of the probability generating function of a geometric random variable with parameter 1−p on {0,1,2 ...}.

- The probability-generating function of a Poisson random variable with rate parameter λ is
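The standard closed forms for these examples, [(1 − p) + pz]^n for the binomial, (p / (1 − (1 − p)z))^r for the negative binomial and e^{λ(z − 1)} for the Poisson distribution, can be compared numerically with a truncated version of the defining series Σ p(k) z^k. The sketch below does this at one point z inside the region of convergence. The parameter values, the truncation lengths and the helper name `series_pgf` are arbitrary choices for illustration.

```python
import math


def series_pgf(pmf, z, terms):
    """Truncated defining series: sum of pmf(k) * z**k for k = 0 .. terms-1."""
    return sum(pmf(k) * z**k for k in range(terms))


# Arbitrary illustrative parameters.
n, p, r, lam, z = 5, 0.3, 3, 2.0, 0.7


def binom_pmf(k):
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def negbin_pmf(k):
    # k failures before the r-th success
    return math.comb(k + r - 1, k) * p**r * (1 - p) ** k


def poisson_pmf(k):
    return math.exp(-lam) * lam**k / math.factorial(k)


binom_series = series_pgf(binom_pmf, z, n + 1)    # finite support, exact sum
negbin_series = series_pgf(negbin_pmf, z, 200)    # truncated infinite series
poisson_series = series_pgf(poisson_pmf, z, 60)   # truncated infinite series

binom_closed = ((1 - p) + p * z) ** n
negbin_closed = (p / (1 - (1 - p) * z)) ** r
poisson_closed = math.exp(lam * (z - 1))
```

With these parameters the neglected tails are far below floating-point precision, so each series value should match its closed form to within rounding error.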

Related concepts

The probability-generating function is an example of a generating function of a sequence: see also formal power series. It is occasionally called the z-transform of the probability mass function.

Other generating functions of random variables include the moment-generating function, the characteristic function and the cumulant generating function.

Notes

References

- Johnson, N.L.; Kotz, S.; Kemp, A.W. (1993). Univariate Discrete Distributions (2nd ed.). Wiley. ISBN 0-471-54897-9. (Section 1.B9)
