Potential method

In computational complexity theory, the potential method is a technique for analyzing the amortized time and space complexity of a data structure, a measure of its performance over sequences of operations that smooths out the cost of infrequent but expensive operations.[1][2]

Definition of amortized time

In the potential method, a function Φ is chosen that maps states of the data structure to non-negative numbers. If S is a state of the data structure, Φ(S) may be thought of intuitively as an amount of potential energy stored in that state;[1][2] alternatively, Φ(S) may be thought of as representing the amount of disorder in state S or its distance from an ideal state. The potential value prior to the operation of initializing a data structure is defined to be zero.

Let o be any individual operation within a sequence of operations on some data structure, with $S_{\text{before}}$ denoting the state of the data structure prior to operation o and $S_{\text{after}}$ denoting its state after operation o has completed. Then, once Φ has been chosen, the amortized time for operation o is defined to be

$$T_{\text{amortized}}(o) = T_{\text{actual}}(o) + C \cdot \bigl(\Phi(S_{\text{after}}) - \Phi(S_{\text{before}})\bigr),$$

where C is a non-negative constant of proportionality (in units of time) that must remain fixed throughout the analysis. That is, the amortized time is defined to be the actual time taken by the operation plus C times the difference in potential caused by the operation.[1][2]
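
The definition can be sketched as a small helper. This is a minimal illustration, not code from the cited sources: the function name `amortized_time`, its parameter names, and the sample numbers are all assumptions chosen for the example.

```python
def amortized_time(actual_time, phi_before, phi_after, c=1.0):
    """Amortized time of one operation under the potential method.

    actual_time -- time the operation really took
    phi_before  -- potential of the state before the operation
    phi_after   -- potential of the state after the operation
    c           -- the fixed non-negative constant of proportionality C
    """
    if c < 0:
        raise ValueError("c must be non-negative")
    return actual_time + c * (phi_after - phi_before)


# A cheap operation that raises the potential is charged more than it costs...
cheap = amortized_time(actual_time=1.0, phi_before=4.0, phi_after=6.0)

# ...while an expensive operation that releases potential is charged less.
expensive = amortized_time(actual_time=9.0, phi_before=8.0, phi_after=2.0)
```

With these made-up values both operations come out at the same amortized time, which is the smoothing effect the definition is designed to produce.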

Relation between amortized and actual time

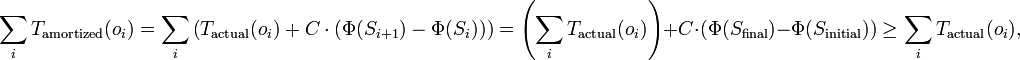

Despite its artificial appearance, the total amortized time of a sequence of operations provides a valid upper bound on the actual time for the same sequence of operations. That is, for any sequence of operations $O = o_1, o_2, \dots, o_m$, the total amortized time

$$T_{\text{amortized}}(O) = \sum_{i=1}^{m} T_{\text{amortized}}(o_i)$$

is always at least as large as the total actual time

$$T_{\text{actual}}(O) = \sum_{i=1}^{m} T_{\text{actual}}(o_i).$$

In more detail, writing $S_0$ for the initial state and $S_i$ for the state after operation $o_i$,

$$T_{\text{amortized}}(O) = \sum_{i=1}^{m} \Bigl(T_{\text{actual}}(o_i) + C \cdot \bigl(\Phi(S_i) - \Phi(S_{i-1})\bigr)\Bigr) = T_{\text{actual}}(O) + C \cdot \bigl(\Phi(S_m) - \Phi(S_0)\bigr) \ge T_{\text{actual}}(O),$$

where the sequence of potential function values forms a telescoping series in which all terms other than the initial and final potential function values cancel in pairs, and where the final inequality arises from the assumptions that $\Phi(S_m) \ge 0$ and $\Phi(S_0) = 0$. Therefore, amortized time can be used to provide accurate predictions about the actual time of sequences of operations, even though the amortized time for an individual operation may vary widely from its actual time.
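
The telescoping argument can be checked numerically on a small trace. The sketch below is illustrative only: the function name `totals` and the sample costs and potential values are invented for the example, with the initial potential set to zero and every later potential non-negative, as the argument requires.

```python
def totals(actual_times, potentials, c=1):
    """Return (total actual time, total amortized time) for a trace.

    actual_times[i] is the actual time of the (i+1)-th operation.
    potentials[0] is the potential of the initial state, and
    potentials[i+1] is the potential after the (i+1)-th operation.
    """
    if len(potentials) != len(actual_times) + 1:
        raise ValueError("need one more potential value than operations")
    amortized = [
        t + c * (potentials[i + 1] - potentials[i])
        for i, t in enumerate(actual_times)
    ]
    return sum(actual_times), sum(amortized)


# Hypothetical trace: five operations, potential starts at zero.
actual_times = [1, 1, 5, 1, 2]
potentials = [0, 2, 4, 1, 3, 2]

total_actual, total_amortized = totals(actual_times, potentials)

# Only the first and last potential values survive the telescoping sum.
difference = total_amortized - total_actual  # equals potentials[-1] - potentials[0]
```

Individual amortized times in this trace differ from the actual ones in both directions, yet the totals differ only by the final potential.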

Amortized analysis of worst-case inputs

Typically, amortized analysis is used in combination with a worst-case assumption about the input sequence. With this assumption, if X is a type of operation that may be performed by the data structure, and n is an integer defining the size of the given data structure (for instance, the number of items that it contains), then the amortized time for operations of type X is defined to be the maximum, among all possible sequences of operations on data structures of size n and all operations $o_i$ of type X within the sequence, of the amortized time for operation $o_i$.

With this definition, the time to perform a sequence of operations may be estimated by multiplying the amortized time for each type of operation in the sequence by the number of operations of that type.
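
As a small illustration of this estimate, the sketch below multiplies per-type amortized times by operation counts. The operation names, the unit costs, and the function name `estimate_total_time` are hypothetical and chosen only for the example.

```python
def estimate_total_time(amortized_per_type, counts):
    """Estimate the time of a sequence from per-type amortized times.

    amortized_per_type -- worst-case amortized time for each operation type
    counts             -- how many operations of each type the sequence has
    """
    return sum(amortized_per_type[op] * k for op, k in counts.items())


# Hypothetical structure with two operation types, in arbitrary time units.
amortized_per_type = {"insert": 3, "lookup": 1}
counts = {"insert": 1000, "lookup": 5000}

estimate = estimate_total_time(amortized_per_type, counts)
```

Because each per-type figure is a worst-case amortized time, the result is an upper bound on the actual time of the whole sequence rather than an exact value.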

Example

A dynamic array is a data structure for maintaining an array of items, allowing both random access to positions within the array and the ability to increase the array size by one. It is available in Java as the "ArrayList" type and in Python as the "list" type. A dynamic array may be implemented by a data structure consisting of an array A of items, of some length N, together with a number n ≤ N giving the number of positions within the array that have been used so far. With this structure, random accesses to the dynamic array may be implemented by accessing the same cell of the internal array A, and when n < N an operation that increases the dynamic array size may be implemented simply by incrementing n. However, when n = N, it is necessary to resize A, and a common strategy for doing so is to double its size, replacing A by a new array of length 2n.[3]
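
A minimal sketch of such a structure follows. It is not the implementation used by Java or Python; the class and method names are illustrative, and two details are assumptions of this sketch rather than part of the description above: the internal array starts with length 0, and the first resize allocates one cell, since doubling a length of zero would allocate nothing.

```python
class DynamicArray:
    """Doubling dynamic array: internal array A of length N, with n cells used."""

    def __init__(self):
        self._items = []  # internal array A; its length is the capacity N
        self._size = 0    # n, the number of positions used so far

    def __len__(self):
        return self._size

    @property
    def capacity(self):
        return len(self._items)

    def _check(self, index):
        if not 0 <= index < self._size:
            raise IndexError("index out of range")

    def get(self, index):
        self._check(index)
        return self._items[index]

    def set(self, index, value):
        self._check(index)
        self._items[index] = value

    def append(self, value):
        """Increase the array size by one, doubling A when it is full."""
        if self._size == self.capacity:
            # Assumption: grow an empty array to one cell, otherwise double.
            new_items = [None] * max(1, 2 * self._size)
            for i in range(self._size):  # copy every old value: O(n) work
                new_items[i] = self._items[i]
            self._items = new_items
        self._items[self._size] = value
        self._size += 1


arr = DynamicArray()
for k in range(10):
    arr.append(k * k)
```

After these ten appends the internal array has been reallocated at sizes 0, 1, 2, 4, and 8, so most appends only write one cell and increment n.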

This structure may be analyzed using a potential function Φ = 2n − N. Since the resizing strategy always causes A to be at least half-full, this potential function is always non-negative, as desired. When an increase-size operation does not lead to a resize operation, Φ increases by 2, a constant. Therefore, the constant actual time of the operation and the constant increase in potential combine to give a constant amortized time for an operation of this type.

However, when an increase-size operation causes a resize, n = N just beforehand, so the potential is Φ = 2n − n = n; immediately after the resize the new internal array has length 2n and the potential drops to zero. Allocating a new internal array A and copying all of the values from the old internal array to the new one takes O(n) actual time, but (with an appropriate choice of the constant of proportionality C) this is entirely cancelled by the decrease of n in the potential function, again leaving a constant total amortized time for the operation.
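
As a worked check of the two cases, assume for illustration that writing one cell and copying one cell each cost one unit of time, and take C = 1. These unit costs are assumptions of this example, not figures from the cited sources; other constants change only the final constant. Here n and N denote the values before the operation, and ΔΦ is the potential after the whole operation (including the increment of n) minus the potential before it.

$$
\begin{aligned}
\text{no resize } (n < N):\quad & T_{\text{actual}} = 1, && \Delta\Phi = \bigl(2(n+1) - N\bigr) - (2n - N) = 2, && T_{\text{amortized}} = 1 + 2 = 3,\\
\text{resize } (n = N):\quad & T_{\text{actual}} = n + 1, && \Delta\Phi = \bigl(2(n+1) - 2n\bigr) - (2n - n) = 2 - n, && T_{\text{amortized}} = (n + 1) + (2 - n) = 3.
\end{aligned}
$$

Under these assumptions the amortized time is 3 units in both cases, independent of n.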

The other operations of the data structure (reading and writing array cells without changing the array size) do not cause the potential function to change and have the same constant amortized time as their actual time.[2]

Therefore, with this choice of resizing strategy and potential function, the potential method shows that all dynamic array operations take constant amortized time. Combining this with the inequality relating amortized time and actual time over sequences of operations, this shows that any sequence of n dynamic array operations takes O(n) actual time in the worst case, despite the fact that some of the individual operations may themselves take a linear amount of time.[2]
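
The conclusion can be checked with a short simulation that tracks only n and N. The sketch assumes a unit cost per cell written or copied, C = 1, and an initially empty internal array whose first resize allocates one cell; these choices and the function name `simulate_appends` are illustrative assumptions.

```python
def simulate_appends(m, c=1):
    """Simulate m increase-size operations on a doubling dynamic array.

    Returns one (actual, potential_after, amortized) tuple per operation,
    using the potential function 2n - N.
    """
    n, capacity = 0, 0  # n used cells, N = capacity; the potential starts at 0
    rows = []
    for _ in range(m):
        phi_before = 2 * n - capacity
        actual = 1                  # writing the new item
        if n == capacity:
            actual += n             # copying n old items during the resize
            capacity = max(1, 2 * n)
        n += 1
        phi_after = 2 * n - capacity
        rows.append((actual, phi_after, actual + c * (phi_after - phi_before)))
    return rows


m = 1000
rows = simulate_appends(m)
total_actual = sum(actual for actual, _, _ in rows)
max_actual = max(actual for actual, _, _ in rows)
max_amortized = max(amortized for _, _, amortized in rows)
min_potential = min(phi for _, phi, _ in rows)
```

In this run the most expensive single operation copies hundreds of items, while `max_amortized` stays at 3, so `total_actual` is at most 3m.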

Applications

The potential function method is commonly used to analyze Fibonacci heaps, a form of priority queue in which removing an item takes logarithmic amortized time, and all other operations take constant amortized time.[4] It may also be used to analyze splay trees, a self-adjusting form of binary search tree with logarithmic amortized time per operation.[5]

References

1. Goodrich, Michael T.; Tamassia, Roberto (2002), "1.5.1 Amortization Techniques", Algorithm Design: Foundations, Analysis and Internet Examples, Wiley, pp. 36–38.

2. Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L.; Stein, Clifford (2001) [1990], "17.3 The potential method", Introduction to Algorithms (2nd ed.), MIT Press and McGraw-Hill, pp. 412–416, ISBN 0-262-03293-7.

3. Goodrich and Tamassia, 1.5.2 Analyzing an Extendable Array Implementation, pp. 139–141; Cormen et al., 17.4 Dynamic tables, pp. 416–424.

4. Cormen et al., Chapter 20, "Fibonacci Heaps", pp. 476–497.

5. Goodrich and Tamassia, Section 3.4, "Splay Trees", pp. 185–194.