Polynomial SOS

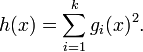

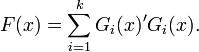

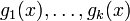

In mathematics, a form (i.e. a homogeneous polynomial) h(x) of degree 2m in the real n-dimensional vector x is sum of squares of forms (SOS) if and only if there exist forms  of degree m such that

of degree m such that

Explicit sufficient conditions for a form to be SOS have been found.[1] However every real nonnegative form can be approximated as closely as desired (in the  -norm of its coefficient vector) by a sequence of forms

-norm of its coefficient vector) by a sequence of forms  that are SOS.[2]

that are SOS.[2]

Square matricial representation (SMR)

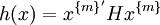

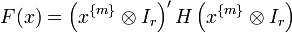

To establish whether a form h(x) is SOS amounts to solving a convex optimization problem. Indeed, any h(x) can be written as

where  is a vector containing a base for the forms of degree m in x (such as all monomials of degree m in x), the prime ′ denotes the transpose, H is any symmetric matrix satisfying

is a vector containing a base for the forms of degree m in x (such as all monomials of degree m in x), the prime ′ denotes the transpose, H is any symmetric matrix satisfying

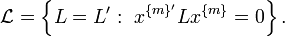

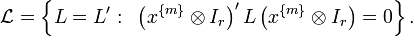

and  is a linear parameterization of the linear space

is a linear parameterization of the linear space

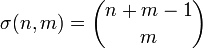

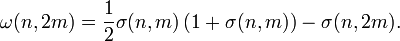

The dimension of the vector  is given by

is given by

whereas the dimension of the vector  is given by

is given by

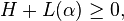

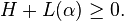

Then, h(x) is SOS if and only if there exists a vector  such that

such that

meaning that the matrix  is positive-semidefinite. This is a linear matrix inequality (LMI) feasibility test, which is a convex optimization problem. The expression

is positive-semidefinite. This is a linear matrix inequality (LMI) feasibility test, which is a convex optimization problem. The expression  was introduced in [1] with the name square matricial representation (SMR) in order to establish whether a form is SOS via an LMI. This representation is also known as Gram matrix (see [2] and references therein).

was introduced in [1] with the name square matricial representation (SMR) in order to establish whether a form is SOS via an LMI. This representation is also known as Gram matrix (see [2] and references therein).

Examples

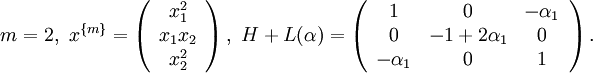

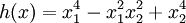

- Consider the form of degree 4 in two variables

. We have

. We have

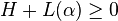

Since there exists α such that

, namely

, namely  , it follows that h(x) is SOS.

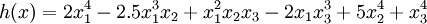

, it follows that h(x) is SOS. - Consider the form of degree 4 in three variables

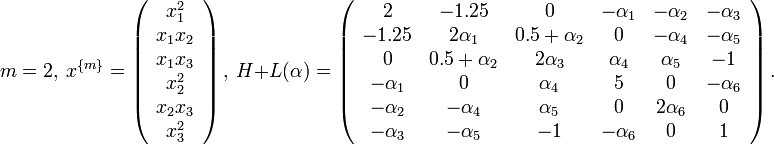

. We have

. We have

Since

for

for  , it follows that h(x) is SOS.

, it follows that h(x) is SOS.

Matrix SOS

A matrix form F(x) (i.e., a matrix whose entries are forms) of dimension r and degree 2m in the real n-dimensional vector x is SOS if and only if there exist matrix forms  of degree m such that

of degree m such that

Matrix SMR

To establish whether a matrix form F(x) is SOS amounts to solving a convex optimization problem. Indeed, similarly to the scalar case any F(x) can be written according to the SMR as

where  is the Kronecker product of matrices, H is any symmetric matrix satisfying

is the Kronecker product of matrices, H is any symmetric matrix satisfying

and  is a linear parameterization of the linear space

is a linear parameterization of the linear space

The dimension of the vector  is given by

is given by

Then, F(x) is SOS if and only if there exists a vector  such that the following LMI holds:

such that the following LMI holds:

The expression  was introduced in [3] in order to establish whether a matrix form is SOS via an LMI.

was introduced in [3] in order to establish whether a matrix form is SOS via an LMI.

References

[1] G. Chesi, A. Tesi, A. Vicino, and R. Genesio, On convexification of some minimum distance problems, 5th European Control Conference, Karlsruhe (Germany), 1999.

[2] M. Choi, T. Lam, and B. Reznick, Sums of squares of real polynomials, in Proc. of Symposia in Pure Mathematics, 1995.

[3] G. Chesi, A. Garulli, A. Tesi, and A. Vicino, Robust stability for polytopic systems via polynomially parameter-dependent Lyapunov functions, in 42nd IEEE Conference on Decision and Control, Maui (Hawaii), 2003.