Multiclass classification

- Not to be confused with multi-label classification.

In machine learning, multiclass or multinomial classification is the problem of classifying instances into one of three or more classes. (Classifying instances into one of two classes is called binary classification.)

While some classification algorithms naturally permit the use of more than two classes, others are by nature binary algorithms; these can, however, be turned into multinomial classifiers by a variety of strategies.

Multiclass classification should not be confused with multi-label classification, where multiple classes are to be predicted for each problem instance.
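The distinction can be made concrete by comparing how the targets look in each setting. The following is a minimal illustrative sketch; the class names, the example labels, and the helper `to_indicator` are all hypothetical and exist only to show the shape of the data.

```python
# Hypothetical class vocabulary, used only for illustration.
classes = ["cat", "dog", "bird"]

# Multiclass: every instance carries exactly one label.
multiclass_labels = ["dog", "cat", "bird"]

# Multi-label: every instance carries a set of labels (possibly empty).
multilabel_labels = [{"cat", "dog"}, set(), {"bird"}]


def to_indicator(label_set, classes):
    """Encode a set of labels as a 0/1 vector over the class vocabulary."""
    return [1 if c in label_set else 0 for c in classes]


# A multiclass target is the special case where each row contains a single 1.
multiclass_rows = [to_indicator({label}, classes) for label in multiclass_labels]
multilabel_rows = [to_indicator(labels, classes) for labels in multilabel_labels]
```

In the multiclass encoding every row sums to one, whereas a multi-label row may contain any number of ones.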

General strategies

One-vs.-all

The one-vs.-all strategy (also called one-vs.-rest, and abbreviated OvA or OvR) trains a single classifier per class to distinguish that class from all other classes. Prediction then applies every binary classifier to the sample and chooses the class whose classifier reports the highest confidence score (e.g., the highest probability of a classifier such as naive Bayes).

In pseudocode, the training algorithm for an OvA learner constructed from a binary classification learner L is as follows:

- Inputs:

  - L, a learner (training algorithm for binary classifiers)

  - samples X

  - labels y where yᵢ ∈ {1, …, K} is the label for the sample Xᵢ

- Output:

  - a list of classifiers fₖ for k ∈ {1, …, K}

- Procedure:

  - For each k in {1, …, K}:

    - Construct a new label vector y' where y'ᵢ = 1 where yᵢ = k, 0 (or -1) elsewhere

    - Apply L to X, y' to obtain fₖ
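The training procedure above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not a reference implementation: `centroid_learner` is a hypothetical stand-in for the binary learner L (any learner that returns a real-valued scoring function would do), and the names `train_ova` and `predict_ova` are illustrative. Prediction picks the class whose classifier gives the highest score, as described at the start of this section.

```python
def centroid_learner(X, y_binary):
    """Hypothetical binary learner L.

    Returns a scorer f(x) that is larger the closer x lies to the mean of
    the positive samples relative to the mean of the negative samples.
    """
    def mean(rows):
        return [sum(col) / len(rows) for col in zip(*rows)]

    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    pos = mean([x for x, t in zip(X, y_binary) if t == 1])
    neg = mean([x for x, t in zip(X, y_binary) if t == 0])
    return lambda x: sq_dist(x, neg) - sq_dist(x, pos)


def train_ova(learner, X, y, K):
    """Train one binary classifier per class k in {1, ..., K}."""
    classifiers = []
    for k in range(1, K + 1):
        # Relabel: 1 where the original label equals k, 0 elsewhere.
        y_prime = [1 if yi == k else 0 for yi in y]
        classifiers.append(learner(X, y_prime))
    return classifiers


def predict_ova(classifiers, x):
    """Return the label (1-based) whose classifier scores x highest."""
    scores = [f(x) for f in classifiers]
    return 1 + max(range(len(scores)), key=scores.__getitem__)


# Toy data, invented for illustration: three well-separated clusters.
X = [[0, 0], [0, 1], [5, 5], [5, 6], [10, 0], [10, 1]]
y = [1, 1, 2, 2, 3, 3]

classifiers = train_ova(centroid_learner, X, y, K=3)
predictions = [predict_ova(classifiers, x) for x in ([0, 0.5], [5, 5.5], [10, 0.5])]
```

Passing the learner as an argument mirrors the pseudocode, where L is an input: the OvA wrapper itself is independent of how each binary classifier is fitted, provided each one returns a comparable real-valued score.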

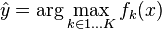

To make a decision, apply all K classifiers to an unseen sample x and predict the label k whose classifier reports the highest confidence score: