Jack function

In mathematics, the Jack function, introduced by Henry Jack, is a homogeneous, symmetric polynomial which generalizes the Schur and zonal polynomials, and is in turn generalized by the Heckman–Opdam polynomials and Macdonald polynomials.

Definition

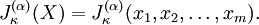

The Jack function  of integer partition

of integer partition  , parameter

, parameter  and

arguments

and

arguments  can be recursively defined as

follows:

can be recursively defined as

follows:

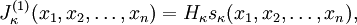

- For m=1

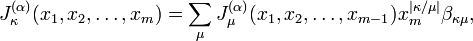

- For m>1

where the summation is over all partitions  such that the skew partition

such that the skew partition  is a horizontal strip, namely

is a horizontal strip, namely

(

( must be zero or otherwise

must be zero or otherwise  ) and

) and

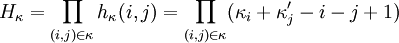

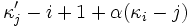

where  equals

equals  if

if  and

and  otherwise. The expressions

otherwise. The expressions  and

and  refer to the conjugate partitions of

refer to the conjugate partitions of  and

and  , respectively. The notation

, respectively. The notation  means that the product is taken over all coordinates

means that the product is taken over all coordinates  of boxes in the Young diagram of the partition

of boxes in the Young diagram of the partition  .

.

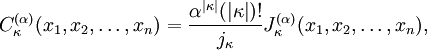

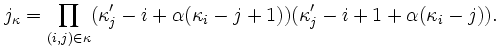
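The recursion can be sketched directly in code. The following is a minimal illustration, not an efficient algorithm (its running time grows exponentially with the number of variables, unlike the method of Demmel and Koev cited in the references). It assumes a numeric parameter $\alpha > 0$ and numeric arguments, uses exact rational arithmetic, and treats the Jack function of the empty partition as 1. The names `jack_J`, `beta`, `horizontal_strips` and `conjugate` are hypothetical helpers chosen for this example.

```python
from fractions import Fraction
from itertools import product


def conjugate(part):
    """Conjugate partition: the column lengths of the Young diagram."""
    return [sum(1 for p in part if p > j) for j in range(part[0])] if part else []


def horizontal_strips(kappa, m):
    """Yield every mu with kappa/mu a horizontal strip and at most m - 1 parts."""
    k = list(kappa) + [0] * (m - len(kappa))  # pad kappa to exactly m parts
    ranges = [range(k[i + 1], k[i] + 1) for i in range(m - 1)]
    for mu in product(*ranges):
        yield tuple(p for p in mu if p > 0)  # drop trailing zeros


def beta(kappa, mu, alpha):
    """Coefficient beta_{kappa mu} from the recursive definition."""
    kc, mc = conjugate(kappa), conjugate(mu)
    mc_padded = mc + [0] * (len(kc) - len(mc))

    def B(nu, nuc, i, j):  # (i, j) are 1-based box coordinates
        if kc[j - 1] == mc_padded[j - 1]:
            return nuc[j - 1] - i + alpha * (nu[i - 1] - j + 1)
        return nuc[j - 1] - i + 1 + alpha * (nu[i - 1] - j)

    num = Fraction(1)
    for i, row in enumerate(kappa, start=1):
        for j in range(1, row + 1):
            num *= B(kappa, kc, i, j)
    den = Fraction(1)
    for i, row in enumerate(mu, start=1):
        for j in range(1, row + 1):
            den *= B(mu, mc, i, j)
    return num / den


def jack_J(kappa, alpha, xs):
    """Evaluate the J-normalized Jack function at the point xs."""
    kappa = tuple(p for p in kappa if p > 0)
    m = len(xs)
    if len(kappa) > m:  # more parts than variables
        return Fraction(0)
    if not kappa:  # empty partition
        return Fraction(1)
    if m == 1:  # base case: x^k (1 + alpha) ... (1 + (k - 1) alpha)
        k = kappa[0]
        result = Fraction(xs[0]) ** k
        for t in range(1, k):
            result *= 1 + t * alpha
        return result
    total = Fraction(0)
    for mu in horizontal_strips(kappa, m):
        power = sum(kappa) - sum(mu)  # |kappa/mu|
        total += (jack_J(mu, alpha, xs[:-1])
                  * Fraction(xs[-1]) ** power
                  * beta(kappa, mu, alpha))
    return total
```

For example, `jack_J((2,), 2, (3, 5))` evaluates $(1+\alpha)(x_1^2+x_2^2) + 2x_1x_2$ at $\alpha=2$, $x=(3,5)$, giving 132, and `jack_J((1, 1), 2, (3, 5))` evaluates $2x_1x_2$, giving 30.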

C normalization

The Jack functions form an orthogonal basis in a space of symmetric polynomials, with inner product:

![\langle f,g\rangle =\int _{{[0,2\pi ]^{n}}}f(e^{{i\theta _{1}}},\cdots ,e^{{i\theta _{n}}})\overline {g(e^{{i\theta _{1}}},\cdots ,e^{{i\theta _{n}}})}\prod _{{1\leq j<k\leq n}}|e^{{i\theta _{j}}}-e^{{i\theta _{k}}}|^{{2/\alpha }}d\theta _{1}\cdots d\theta _{n}](/2014-wikipedia_en_all_02_2014/I/media/8/8/e/f/88ef4d8dbd97cddc84c6001a0e5e4870.png)

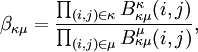

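This orthogonality property is unaffected by normalization. The normalization defined above is typically referred to as the J normalization. The C normalization is defined as

$$C_\kappa^{(\alpha)}(x_1,x_2,\ldots,x_n) = \frac{\alpha^{|\kappa|}\,(|\kappa|)!}{j_\kappa}\, J_\kappa^{(\alpha)}(x_1,x_2,\ldots,x_n),$$

where

$$j_\kappa = \prod_{(i,j)\in\kappa} \bigl(\kappa_j' - i + \alpha(\kappa_i - j + 1)\bigr)\bigl(\kappa_j' - i + 1 + \alpha(\kappa_i - j)\bigr).$$

For $\alpha = 2$, $C_\kappa^{(2)}(x_1,x_2,\ldots,x_n)$, often denoted simply $C_\kappa(x_1,x_2,\ldots,x_n)$, is known as the zonal polynomial.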
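As a hedged illustration of the C normalization, the sketch below computes only the scalar factor $\alpha^{|\kappa|}(|\kappa|)!/j_\kappa$ that converts the J normalization to the C normalization, assuming a numeric $\alpha > 0$. The function names `j_kappa` and `c_factor` are hypothetical and chosen for this example.

```python
from fractions import Fraction
from math import factorial


def conjugate(part):
    """Conjugate partition: the column lengths of the Young diagram."""
    return [sum(1 for p in part if p > j) for j in range(part[0])] if part else []


def j_kappa(kappa, alpha):
    """Product over boxes (i, j) of the two alpha-deformed hook lengths."""
    kc = conjugate(kappa)
    result = Fraction(1)
    for i, row in enumerate(kappa, start=1):
        for j in range(1, row + 1):
            upper = kc[j - 1] - i + alpha * (row - j + 1)
            lower = kc[j - 1] - i + 1 + alpha * (row - j)
            result *= upper * lower
    return result


def c_factor(kappa, alpha):
    """Scalar c with C_kappa = c * J_kappa."""
    n = sum(kappa)
    return Fraction(alpha) ** n * factorial(n) / j_kappa(kappa, alpha)
```

At $\alpha = 2$ the factors for the two partitions of 2 are `c_factor((2,), 2) == Fraction(1, 3)` and `c_factor((1, 1), 2) == Fraction(2, 3)`. Combined with $J_{(2)}^{(\alpha)} = (1+\alpha)(x_1^2+x_2^2) + 2x_1x_2$ and $J_{(1,1)}^{(\alpha)} = 2x_1x_2$ in two variables, this gives $C_{(2)} + C_{(1,1)} = (x_1+x_2)^2$.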

Connection with the Schur polynomial

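When $\alpha = 1$, the Jack function is a scalar multiple of the Schur polynomial

$$J_\kappa^{(1)}(x_1,x_2,\ldots,x_n) = H_\kappa\, s_\kappa(x_1,x_2,\ldots,x_n),$$

where

$$H_\kappa = \prod_{(i,j)\in\kappa} h_\kappa(i,j) = \prod_{(i,j)\in\kappa} (\kappa_i + \kappa_j' - i - j + 1)$$

is the product of all hook lengths of $\kappa$.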
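The hook-length product is easy to compute from the partition alone. The following is a small sketch; the function name `hook_product` is a hypothetical choice for this example.

```python
def hook_product(kappa):
    """Product of the hook lengths kappa_i + kappa'_j - i - j + 1 over all boxes."""
    # Conjugate partition: column lengths of the Young diagram.
    conj = [sum(1 for p in kappa if p > j) for j in range(kappa[0])] if kappa else []
    result = 1
    for i, row in enumerate(kappa, start=1):
        for j in range(1, row + 1):
            result *= row + conj[j - 1] - i - j + 1
    return result
```

For instance, `hook_product((2,))` and `hook_product((1, 1))` are both 2, and `hook_product((3, 2))` is 24.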

Properties

If the partition has more parts than the number of variables, then the Jack function is 0:

$$J_\kappa^{(\alpha)}(x_1,x_2,\ldots,x_m) = 0 \quad \text{if } \kappa_{m+1} > 0.$$
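As a small worked case, derived here from the recursive definition rather than taken from the cited sources, the partition $(1,1)$ has two parts, so its Jack function is nonzero in two variables but vanishes in one:

$$J_{(1,1)}^{(\alpha)}(x_1,x_2) = 2x_1x_2, \qquad J_{(1,1)}^{(\alpha)}(x_1) = 0.$$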

Matrix argument

In some texts, especially in random matrix theory, authors have found it more convenient to use a matrix argument in the Jack function. The connection is simple. If $X$ is a matrix with eigenvalues $x_1,x_2,\ldots,x_m$, then

$$J_\kappa^{(\alpha)}(X) = J_\kappa^{(\alpha)}(x_1,x_2,\ldots,x_m).$$
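Because the Jack function is symmetric in the eigenvalues, small cases can be written in terms of traces without computing the eigenvalues. The two examples below are a sketch derived from the recursive definition in the J normalization:

$$J_{(1)}^{(\alpha)}(X) = x_1 + \cdots + x_m = \operatorname{tr} X,$$

$$J_{(1,1)}^{(\alpha)}(X) = 2\sum_{1\le i<j\le m} x_i x_j = (\operatorname{tr} X)^2 - \operatorname{tr}(X^2).$$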

References

- Demmel, James; Koev, Plamen (2006), "Accurate and efficient evaluation of Schur and Jack functions", Mathematics of Computation 75 (253): 223–239, doi:10.1090/S0025-5718-05-01780-1, MR 2176397.

- Jack, Henry (1970–1971), "A class of symmetric polynomials with a parameter", Proceedings of the Royal Society of Edinburgh, Section A. Mathematics 69: 1–18, MR 0289462.

- Macdonald, I. G. (1995), Symmetric functions and Hall polynomials, Oxford Mathematical Monographs (2nd ed.), New York: Oxford University Press, ISBN 0-19-853489-2, MR 1354144.

- Stanley, Richard P. (1989), "Some combinatorial properties of Jack symmetric functions", Advances in Mathematics 77 (1): 76–115, doi:10.1016/0001-8708(89)90015-7, MR 1014073.

External links

- Software for computing the Jack function by Plamen Koev.

- MOPS: Multivariate Orthogonal Polynomials (symbolically) (Maple Package)

- SAGE documentation for Jack Symmetric Functions