Infineta Systems

| Type | Private |
|---|---|
| Industry | Networking hardware |
| Founded | California, 2008 |
| Headquarters | Santa Clara, California |
| Key people | Raj Kanaya, CEO |
| Website | www.infineta.com |

Infineta Systems was a company that made WAN optimization products for high-performance, latency-sensitive network applications. The company advertised that its Data Mobility Switch (DMS) allowed the application data rate to exceed the nominal data rate of the link. Infineta Systems ceased operations by February 2013; a liquidator was appointed, and its products were no longer manufactured, sold or distributed. Riverbed Technology purchased some of Infineta's assets from the liquidator.[1]

Company

Infineta was founded in 2008 by Raj Kanaya, the CEO, and K.V.S. Ramarao, the CTO, to serve the market then promoted under the buzzword Big Data. Ramarao concluded that the computational resources, especially I/O operations and CPU cycles, associated with data compression technologies would ultimately limit their scalability.[2] He and Kanaya founded Infineta to develop algorithms and hardware. The company had six patents pending.

Infineta was headquartered in San Jose, California and attracted $30 million in two rounds of venture funding from Alloy Ventures, North Bridge Venture Partners, and Rembrandt Venture Partners.[3][4]

Products

Infineta launched its Data Mobility Switch in June 2011 after more than two years of development and field trials. The DMS was the first WAN optimization technology to work at throughput rates of 10 Gbit/s.[5] Infineta designed the product in FPGA hardware around a multi-gigabit switch fabric to minimize latency. As a result, accelerated packets average no more than 50 microseconds of port-to-port latency. Unaccelerated packets are bridged through the system at wire speed.[6] The company decided against designs based on software, large dictionaries, and existing algorithms because it claimed that the operational overhead and latency introduced by these technologies did not permit the scaling required for data center applications, which include replication, data migration, and virtualization.[7]

The DMS works by removing redundant data from network flows, which allows the same information to be transferred across a link using only 10% to 15% of the bytes otherwise required. This kind of compression is similar to data deduplication. The effect is that either the applications generating the data respond by increasing performance, or there is a net decrease in the amount of WAN bandwidth those applications consume.
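The general idea of redundancy elimination can be illustrated with a minimal Python sketch. This is not Infineta's algorithm, which the article does not describe; it is a generic fixed-size chunk deduplicator, and the names `deduplicate`, `reconstruct`, `encoded_size`, the 64-byte chunk size, and the 4-byte reference size are all assumptions chosen for illustration. The sender replaces chunks it has already sent with short references, and the receiver rebuilds the stream from its own copy of the chunk store.

```python
import hashlib

CHUNK_SIZE = 64  # bytes per chunk; a hypothetical value for illustration


def deduplicate(stream, seen, chunk_size=CHUNK_SIZE):
    """Encode `stream` as ('raw', bytes) or ('ref', index) tokens.

    `seen` maps a chunk digest to the index at which that chunk was
    first sent; it is updated in place as new chunks appear.
    """
    tokens = []
    for offset in range(0, len(stream), chunk_size):
        chunk = stream[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).digest()
        if digest in seen:
            # Chunk already sent: emit a short reference instead.
            tokens.append(("ref", seen[digest]))
        else:
            # First occurrence: remember it and send the bytes as-is.
            seen[digest] = len(seen)
            tokens.append(("raw", chunk))
    return tokens


def reconstruct(tokens, store):
    """Rebuild the original bytes on the receiving side.

    `store` is the receiver's list of chunks, appended in the same
    order in which the sender assigned indices.
    """
    out = []
    for kind, value in tokens:
        if kind == "raw":
            store.append(value)
            out.append(value)
        else:
            out.append(store[value])
    return b"".join(out)


def encoded_size(tokens, ref_size=4):
    """Bytes on the wire, assuming each reference costs `ref_size` bytes."""
    return sum(len(v) if k == "raw" else ref_size for k, v in tokens)
```

For a highly repetitive input such as `(b"A" * 64 + b"B" * 64) * 50`, only the first two chunks travel as raw bytes and the rest become references, so the encoded size is a small fraction of the original. Real traffic is far less regular, and the reduction achieved depends entirely on how much redundancy the flows contain.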

The product was designed to address the long-standing issue of TCP performance[8] on long fat networks, so that even unreduced data can achieve throughput equivalent to the WAN bandwidth. To illustrate what this means, take the example of transferring a 2.5 GB (20 billion bit) file from New York to Chicago (15 ms one-way latency, 30 ms round-trip time) over a 1 Gbit/s link. With standard TCP, which uses a 64 KB window size, the file transfer would take about 20 minutes. At the theoretical maximum throughput of 1 Gbit/s, the transfer would take about 20 seconds. The DMS performs the transfer in 19.5 to 21 seconds.[9]
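The arithmetic behind this example can be checked with a short Python sketch. It assumes the standard window-limited bound for TCP (throughput is at most the receive window divided by the round-trip time) and takes 64 KB to mean 65,536 bytes; the function names are hypothetical and chosen only for this illustration.

```python
def window_limited_throughput(window_bytes, rtt_seconds):
    """Upper bound on TCP throughput in bits/second for a given window."""
    return window_bytes * 8 / rtt_seconds


def transfer_time(file_bits, link_bps, window_bytes, rtt_seconds):
    """Seconds needed to move `file_bits`, limited by link or window."""
    rate = min(link_bps, window_limited_throughput(window_bytes, rtt_seconds))
    return file_bits / rate


def bandwidth_delay_product_bytes(link_bps, rtt_seconds):
    """Window size in bytes needed to keep the link full."""
    return link_bps * rtt_seconds / 8


FILE_BITS = 20e9   # 2.5 GB file
LINK_BPS = 1e9     # 1 Gbit/s link
RTT = 0.03         # 30 ms round-trip time
WINDOW = 65536     # 64 KB window, no window scaling

print(window_limited_throughput(WINDOW, RTT) / 1e6, "Mbit/s")
print(transfer_time(FILE_BITS, LINK_BPS, WINDOW, RTT) / 60, "minutes")
print(bandwidth_delay_product_bytes(LINK_BPS, RTT) / 1e6, "MB window to fill the link")
```

With these inputs the window-limited rate is roughly 17.5 Mbit/s and the transfer takes roughly 19 minutes, which matches the "about 20 minutes" figure. Filling the 1 Gbit/s link at a 30 ms round-trip time would require a window of about 3.75 MB, far beyond what an unscaled 64 KB window allows.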

Competitors

Other vendors in the area of WAN optimization included Aryaka, Blue Coat Systems, Cisco Systems WAAS, Exinda, Riverbed Technology, and Silver Peak Systems.

See also

- Data migration

- WAN optimization

- Network latency

- Network congestion

References

- ↑ "Technology Migration - Contact Sales Infineta". Web page. Riverbed Technology. Retrieved June 27, 2013.

- ↑ Martynov, Maxim (11 September 2009). "Challenges for High-Speed Protocol-Independent Redundancy Eliminating Systems". Proceedings of 18th International Conference on Computer Communications and Networks, ICCCN 2009: 6. doi:10.1109/ICCCN.2009.5235389. ISSN 1095-2055.

- ↑ "San Jose-Based Infineta Systems Raises $15 Million in Second Round". Silicon Valley Wire. 2011-06-06. Retrieved 2011-07-29.

- ↑ "Infineta raises $15M to move big data across data centers — Cloud Computing News". Gigaom.com. 2011-06-06. Retrieved 2011-07-29.

- ↑ Rath, John. "Infineta Ships 10Gbps Data Mobility Switch". Retrieved June 7, 2011.

- ↑ "Typically, the entire latency budget between two servers participating in long distance live migration is around 5-6 milliseconds. Enterprises will need to find ways to optimize this latency-sensitive workflow while remaining within the necessary latency budget." - Jim Metzler, Vice President, Ashton, Metzler & Associates

- ↑ “Highly-scalable, multi-gigabit WAN optimization will play a critical role in next-generation data centers as more applications, data, and services become centralized and delivered to remote sites over a WAN.... Achieving the highest degree of performance while simplifying data center architecture around space, cooling, and power will be crucial.” Joe Skorupa, research vice president, data center convergence, Gartner.

- ↑ Jacobson, Van. "TCP Extensions for High Performance". Network Working Group, Request for Comments: 1323. ietf.org.

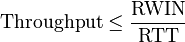

- ↑ Throughput can be calculated as $\text{Throughput} \le \frac{\text{RWIN}}{\text{RTT}}$, where RWIN is the TCP Receive Window and RTT is the round-trip latency to and from the target. The default TCP window size in the absence of window scaling is 65,536 bytes, or 524,288 bits. So for this example, Throughput = 524,288 bits / 0.03 seconds = 17,476,267 bits/second, or about 17.5 Mbit/s. Divide the bits to be transferred by the rate of transfer: 20,000,000,000 bits / 17,476,267 bits/second = 1,144.4 seconds, or about 19.1 minutes.

External links

- "Infineta Systems—WAN Optimization for Big Traffic". Company web site. Archived from the original on December 23, 2012. Retrieved June 27, 2013.