Hamburger moment problem

In mathematics, the Hamburger moment problem, named after Hans Ludwig Hamburger, is formulated as follows: given a sequence $\{ m_n : n = 0, 1, 2, \ldots \}$, does there exist a positive Borel measure $\mu$ on the real line such that

$$m_n = \int_{-\infty}^{\infty} x^n \, d\mu(x) \quad \text{for all } n = 0, 1, 2, \ldots ?$$

In other words, an affirmative answer to the problem means that $\{ m_n : n = 0, 1, 2, \ldots \}$ is the sequence of moments of some positive Borel measure $\mu$.

The Stieltjes moment problem, the Vorobyev moment problem, and the Hausdorff moment problem are similar, but replace the real line by $[0, +\infty)$ (Stieltjes and Vorobyev; Vorobyev, however, formulates the problem in terms of matrix theory) or by a bounded interval (Hausdorff).

Characterization

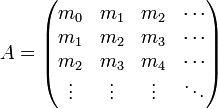

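The Hamburger moment problem is solvable (that is, $\{m_n\}$ is a sequence of moments) if and only if the corresponding Hankel kernel on the nonnegative integers

$$A = \begin{pmatrix} m_0 & m_1 & m_2 & \cdots \\ m_1 & m_2 & m_3 & \cdots \\ m_2 & m_3 & m_4 & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{pmatrix}$$

is positive definite, i.e.,

$$\sum_{j,k \ge 0} m_{j+k} \, c_j \overline{c_k} \ge 0$$

for an arbitrary sequence $\{c_j\}_{j \ge 0}$ of complex numbers with finite support (i.e. $c_j = 0$ except for finitely many values of $j$).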

The "only if" part of the claim can be verified by a direct calculation.
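The direct calculation can be sketched as follows, assuming $\mu$ is a solution and $\{c_j\}$ has finite support. The polynomial $p$ is notation introduced here only for illustration.

$$\sum_{j,k \ge 0} m_{j+k} \, c_j \overline{c_k} = \int \sum_{j,k \ge 0} c_j \overline{c_k} \, x^{j+k} \, d\mu(x) = \int |p(x)|^2 \, d\mu(x) \ge 0, \qquad p(x) = \sum_{j \ge 0} c_j x^j .$$

The middle equality uses that $x$ is real, so $\overline{x^k} = x^k$.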

We sketch an argument for the converse. Let $\mathbb{Z}^+$ be the nonnegative integers and let $F_0(\mathbb{Z}^+)$ denote the family of complex-valued sequences with finite support. The positive Hankel kernel $A$ induces a (possibly degenerate) sesquilinear product on $F_0(\mathbb{Z}^+)$. Taking the quotient by the null vectors and completing, this in turn gives a Hilbert space

$$(\mathcal{H}, \langle \cdot , \cdot \rangle)$$

whose typical element is an equivalence class denoted by $[f]$.

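Let $e_n$ be the element in $F_0(\mathbb{Z}^+)$ defined by $e_n(m) = \delta_{nm}$. One notices that

$$\langle [e_{n+1}], [e_m] \rangle = A_{m,n+1} = m_{m+n+1} = \langle [e_n], [e_{m+1}] \rangle .$$

Therefore the "shift" operator $T$ on $\mathcal{H}$, with $T[e_n] = [e_{n+1}]$, is symmetric.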

On the other hand, the desired expression

$$m_n = \int_{-\infty}^{\infty} x^n \, d\mu(x)$$

suggests that $\mu$ is the spectral measure of a self-adjoint operator. If we can find a "function model" such that the symmetric operator $T$ is multiplication by $x$, then the spectral resolution of a self-adjoint extension of $T$ proves the claim.

A function model is given by the natural isomorphism from $F_0(\mathbb{Z}^+)$ to the family of polynomials in a single real variable with complex coefficients: for $n \ge 0$, identify $e_n$ with $x^n$. In the model, the operator $T$ is multiplication by $x$ and is a densely defined symmetric operator. It can be shown that $T$ always has self-adjoint extensions. Let $\bar{T}$ be one of them and $\mu$ be its spectral measure. So

$$\langle \bar{T}^n [1], [1] \rangle = \int x^n \, d\mu(x).$$

On the other hand,

$$\langle \bar{T}^n [1], [1] \rangle = \langle T^n [e_0], [e_0] \rangle = m_n .$$

Uniqueness of solutions

The solutions form a convex set, so the problem has either infinitely many solutions or a unique solution.

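Consider the $(n + 1) \times (n + 1)$ Hankel matrix

$$\Delta_n = \begin{bmatrix} m_0 & m_1 & m_2 & \cdots & m_n \\ m_1 & m_2 & m_3 & \cdots & m_{n+1} \\ m_2 & m_3 & m_4 & \cdots & m_{n+2} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ m_n & m_{n+1} & m_{n+2} & \cdots & m_{2n} \end{bmatrix}.$$

Positivity of $A$ means that for each $n$, $\det(\Delta_n) \ge 0$. If $\det(\Delta_n) = 0$ for some $n$, then $(\mathcal{H}, \langle \cdot , \cdot \rangle)$ is finite dimensional and $T$ is self-adjoint. So in this case the solution to the Hamburger moment problem is unique and $\mu$, being the spectral measure of $T$, has finite support.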
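As an illustration of the determinant criterion, the following minimal sketch computes the determinants of the Hankel matrices with entries $m_{j+k}$ exactly, using rational arithmetic. The helper names `hankel` and `det` and the two test sequences are assumptions chosen for this example: the moments of the two-point measure $(\delta_{-1} + \delta_{1})/2$, whose determinants vanish from $n = 2$ on, and the first moments of the standard normal distribution, whose determinants stay positive.

```python
from fractions import Fraction


def hankel(moments, n):
    """Return the (n+1) x (n+1) Hankel matrix with entries m_{j+k}."""
    return [[Fraction(moments[j + k]) for k in range(n + 1)]
            for j in range(n + 1)]


def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [row[:] for row in matrix]
    size = len(a)
    result = Fraction(1)
    for col in range(size):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, size) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result  # a row swap flips the sign
        result *= a[col][col]
        for r in range(col + 1, size):
            factor = a[r][col] / a[col][col]
            for c in range(col, size):
                a[r][c] -= factor * a[col][c]
    return result


# Moments m_0..m_6 of (delta_{-1} + delta_{1}) / 2: 1 for even n, 0 for odd n.
two_point = [1 if n % 2 == 0 else 0 for n in range(7)]
# Moments m_0..m_6 of the standard normal distribution.
gaussian = [1, 0, 1, 0, 3, 0, 15]

two_point_dets = [det(hankel(two_point, n)) for n in range(4)]
gaussian_dets = [det(hankel(gaussian, n)) for n in range(4)]
print(two_point_dets)  # determinants 1, 1, 0, 0
print(gaussian_dets)   # determinants 1, 1, 2, 12
```

The vanishing determinants for the two-point sequence reflect the finite support of the measure. Exact fractions are used so that a zero determinant is not blurred by floating-point rounding.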

More generally, the solution is unique if there are constants $C$ and $D$ such that for all $n$, $|m_n| \le C D^n n!$ (Reed & Simon 1975, p. 205). This follows from the more general Carleman's condition.
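For reference, Carleman's condition for the Hamburger problem is usually stated as the divergence of the series below. The implication can be sketched roughly as follows, assuming $C, D > 0$, that every $m_{2n}$ is positive, and using the crude estimate $(2n)! \le (2n)^{2n}$:

$$\sum_{n \ge 1} m_{2n}^{-1/(2n)} = +\infty, \qquad m_{2n} \le C D^{2n} (2n)! \le C D^{2n} (2n)^{2n} \;\Longrightarrow\; m_{2n}^{-1/(2n)} \ge \frac{C^{-1/(2n)}}{2 D n} .$$

Since $C^{-1/(2n)} \to 1$, the terms are bounded below by a constant multiple of $1/n$, so the series diverges by comparison with the harmonic series.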

There are examples where the solution is not unique.
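A standard example, given here as a sketch, is the log-normal distribution. Its density $f$ and the perturbed densities $f_a$ (notation introduced for this illustration) have the same moments for every $-1 \le a \le 1$:

$$f(x) = \frac{1}{x \sqrt{2\pi}} \, e^{-(\ln x)^2 / 2} \quad (x > 0), \qquad f_a(x) = f(x) \bigl( 1 + a \sin(2\pi \ln x) \bigr), \qquad \int_0^\infty x^n f_a(x) \, dx = e^{n^2/2} .$$

Indeed, substituting $x = e^{s+n}$ gives

$$\int_0^\infty x^n f(x) \sin(2\pi \ln x) \, dx = \frac{e^{n^2/2}}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-s^2/2} \sin(2\pi s) \, ds = 0 ,$$

because $\sin(2\pi (s + n)) = \sin(2\pi s)$ for integer $n$ and the integrand is odd.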

Further results

One can see that the Hamburger moment problem is intimately related to orthogonal polynomials on the real line. The Gram–Schmidt procedure gives a basis of orthogonal polynomials in which the operator $\bar{T}$ has a tridiagonal Jacobi matrix representation. This in turn leads to a tridiagonal model of positive Hankel kernels.
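To illustrate the tridiagonal structure, the following minimal sketch applies Gram–Schmidt to the monomials $1, x, x^2$ with the inner product $\langle p, q \rangle = \sum_{j,k} p_j q_k m_{j+k}$ and then computes the matrix of multiplication by $x$ in the resulting orthonormal basis. The function names and the choice of the standard normal moments are assumptions made for this example. It works with real coefficients and a strictly positive kernel only.

```python
import math


def inner(p, q, moments):
    """<p, q> = sum_{j,k} p_j q_k m_{j+k} for real coefficient lists p, q."""
    return sum(pj * qk * moments[j + k]
               for j, pj in enumerate(p) for k, qk in enumerate(q))


def orthonormal_polynomials(moments, count):
    """Gram-Schmidt applied to 1, x, x^2, ...; returns coefficient lists."""
    basis = []
    for degree in range(count):
        v = [0.0] * degree + [1.0]  # the monomial x^degree
        for p in basis:
            coeff = inner(v, p, moments)
            padded = p + [0.0] * (len(v) - len(p))
            v = [vi - coeff * pi for vi, pi in zip(v, padded)]
        norm = math.sqrt(inner(v, v, moments))
        basis.append([vi / norm for vi in v])
    return basis


def jacobi_matrix(moments, count):
    """Matrix of multiplication by x in the orthonormal polynomial basis."""
    basis = orthonormal_polynomials(moments, count)
    shifted = [[0.0] + p for p in basis]  # coefficients of x * p(x)
    return [[inner(shifted[j], basis[k], moments) for k in range(count)]
            for j in range(count)]


# Moments m_0..m_6 of the standard normal distribution.
gaussian = [1, 0, 1, 0, 3, 0, 15]
J = jacobi_matrix(gaussian, 3)
for row in J:
    print([round(entry, 6) for entry in row])
```

For these moments the entries away from the three central diagonals vanish, and the off-diagonal entries are $1$ and $\sqrt{2}$, matching the recurrence for the Hermite polynomials.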

An explicit calculation of the Cayley transform of $T$ shows the connection with what is called the Nevanlinna class of analytic functions on the upper half plane. Passing to the non-commutative setting, this motivates Krein's formula, which parametrizes the extensions of partial isometries.

The cumulative distribution function and the probability density function can often be found by applying the inverse Laplace transform to the moment generating function

$$m(t) = \sum_{n=0}^{\infty} m_n \frac{t^n}{n!},$$

provided that this series converges.
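As a worked example, assume the $m_n$ are the moments of the standard normal distribution, so that $m_{2k} = (2k)! / (2^k k!)$ and $m_{2k+1} = 0$. The series then converges for every $t$:

$$m(t) = \sum_{k=0}^{\infty} \frac{(2k)!}{2^k k!} \cdot \frac{t^{2k}}{(2k)!} = \sum_{k=0}^{\infty} \frac{(t^2/2)^k}{k!} = e^{t^2/2} .$$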

References

- Reed, Michael; Simon, Barry (1975), Fourier Analysis, Self-Adjointness, Methods of Modern Mathematical Physics 2, Academic Press, pp. 145, 205, ISBN 0-12-585002-6

- Shohat, J. A.; Tamarkin, J. D. (1943), The Problem of Moments, New York: American Mathematical Society, ISBN 0-8218-1501-6
