Goertzel algorithm

The Goertzel algorithm is a Digital Signal Processing (DSP) technique that provides a means for efficient evaluation of individual terms of the Discrete Fourier Transform (DFT), thus making it useful in certain practical applications, such as recognition of DTMF tones produced by the buttons pushed on a telephone keypad. The algorithm was first described by Gerald Goertzel in 1958.[1]

Like the DFT, the Goertzel algorithm analyses one selectable frequency component from a discrete signal.[2][3][4] Unlike direct DFT calculations, the Goertzel algorithm applies a single real-valued coefficient at each iteration, using real-valued arithmetic for real-valued input sequences. For covering a full spectrum, the Goertzel algorithm has a higher order of complexity than Fast Fourier Transform (FFT) algorithms; but for computing a small number of selected frequency components, it is more numerically efficient. The simple structure of the Goertzel algorithm makes it well suited to small processors and embedded applications, though not limited to these.

The Goertzel algorithm can also be used "in reverse" as a sinusoid synthesis function, which requires only 1 multiplication and 1 subtraction per generated sample.[5]
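The synthesis use can be illustrated with a minimal Python sketch. It assumes the generator is the Goertzel recurrence with zero input, s(n) = 2cos(w)·s(n−1) − s(n−2), seeded with two samples of the desired sine wave. The function name `synthesize_sinusoid` and its parameters are hypothetical, chosen only for this illustration.

```python
import math

def synthesize_sinusoid(freq_cycles_per_sample, num_samples):
    """Generate sin(2*pi*f*n) for n = 0 .. num_samples-1 by recurrence."""
    w = 2.0 * math.pi * freq_cycles_per_sample
    coeff = 2.0 * math.cos(w)        # computed once, outside the loop
    # Seed the state with the two samples that precede n = 0.
    s_prev = math.sin(-w)            # s(-1)
    s_prev2 = math.sin(-2.0 * w)     # s(-2)
    out = []
    for _ in range(num_samples):
        s = coeff * s_prev - s_prev2  # one multiplication, one subtraction
        out.append(s)
        s_prev2, s_prev = s_prev, s
    return out
```

Because the recurrence is only marginally stable, rounding errors can accumulate over very long runs, so a practical generator may need to re-seed the state periodically.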

The algorithm

The main calculation in the Goertzel algorithm has the form of a digital filter, and for this reason the algorithm is often called a Goertzel filter. The filter operates on an input sequence, $x(n)$, in a cascade of two stages with a parameter, $\omega$, giving the frequency to be analysed, normalised to cycles per sample.

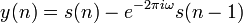

The first stage calculates an intermediate sequence, $s(n)$:

$$s(n) = x(n) + 2\cos(2\pi\omega)\,s(n-1) - s(n-2) \tag{1}$$

The second stage applies the following filter to $s(n)$, producing output sequence $y(n)$.

$$y(n) = s(n) - e^{-2\pi i\omega}\,s(n-1) \tag{2}$$

The first filter stage can be observed to be a second-order IIR filter with a direct form structure. This particular structure has the property that its internal state variables equal the past output values from that stage. Input values $x(n)$ for $n < 0$ are presumed all equal to 0. To establish the initial filter state so that evaluation can begin at sample $x(0)$, the filter states are assigned initial values $s(-2) = s(-1) = 0$. To avoid aliasing hazards, frequency $\omega$ is often restricted to the range 0 to 1/2 (see Nyquist-Shannon sampling theorem), but the results are mathematically valid outside of this range.

The second stage filter can be observed to be an FIR filter, since its calculations do not use any of its past outputs.
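The two-stage cascade can be sketched in Python as follows. This is only an illustration, assuming the stage equations take the forms s(n) = x(n) + 2cos(2πω)·s(n−1) − s(n−2) and y(n) = s(n) − e^{−2πiω}·s(n−1), with ω in cycles per sample. The function name `goertzel_cascade` is hypothetical.

```python
import cmath
import math

def goertzel_cascade(x, omega):
    """Return (s_seq, y_seq) for input x and frequency omega in cycles/sample."""
    coeff = 2.0 * math.cos(2.0 * math.pi * omega)
    rot = cmath.exp(-2j * math.pi * omega)
    s_prev = 0.0    # s(n-1), starts as s(-1) = 0
    s_prev2 = 0.0   # s(n-2), starts as s(-2) = 0
    s_seq, y_seq = [], []
    for sample in x:
        s = sample + coeff * s_prev - s_prev2   # stage 1: IIR, uses past outputs
        y = s - rot * s_prev                    # stage 2: FIR, uses only s values
        s_seq.append(s)
        y_seq.append(y)
        s_prev2, s_prev = s_prev, s             # state equals the past outputs
    return s_seq, y_seq
```

For a unit impulse input and ω = 1/4, the output sequence y(n) steps through 1, i, −1, −i, which is the impulse response of a single complex resonator at that frequency.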

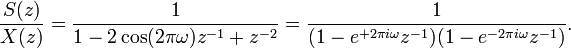

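Z transform methods can be applied to study the properties of the filter cascade. The Z transform of the first filter stage given in equation (1) is:

$$\frac{S(z)}{X(z)} = \frac{1}{1 - 2\cos(2\pi\omega)\,z^{-1} + z^{-2}} = \frac{1}{\left(1 - e^{+2\pi i\omega}z^{-1}\right)\left(1 - e^{-2\pi i\omega}z^{-1}\right)} \tag{3}$$

The Z transform of the second filter stage given in equation (2) is:

$$\frac{Y(z)}{S(z)} = 1 - e^{-2\pi i\omega}z^{-1} \tag{4}$$

The combined transfer function of the cascade of the two filter stages is then

$$\frac{S(z)}{X(z)}\cdot\frac{Y(z)}{S(z)} = \frac{Y(z)}{X(z)} = \frac{1 - e^{-2\pi i\omega}z^{-1}}{\left(1 - e^{+2\pi i\omega}z^{-1}\right)\left(1 - e^{-2\pi i\omega}z^{-1}\right)} = \frac{1}{1 - e^{+2\pi i\omega}z^{-1}} \tag{5}$$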

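This can be transformed back to an equivalent time domain sequence, and the terms unrolled back to the first input term at index $n = 0$.

$$y(n) = x(n) + e^{+2\pi i\omega}\,y(n-1) = \sum_{m=-\infty}^{n} x(m)\,e^{+2\pi i\omega(n-m)} = e^{+2\pi i\omega n}\sum_{m=0}^{n} x(m)\,e^{-2\pi i\omega m} \tag{6}$$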
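The unrolling step can be checked numerically. The sketch below assumes equation (6) has the usual form y(n) = x(n) + e^{+2πiω}·y(n−1) = e^{+2πiωn}·Σ x(m)·e^{−2πiωm}, and compares the first-order recursion with the closed-form sum. Both function names are hypothetical.

```python
import cmath
import math

def first_order_output(x, omega):
    """Run y(n) = x(n) + e^{+2*pi*i*omega} * y(n-1) and return the last y."""
    pole = cmath.exp(2j * math.pi * omega)
    y = 0j
    for sample in x:
        y = sample + pole * y
    return y

def closed_form_output(x, omega):
    """Evaluate e^{+2*pi*i*omega*n} * sum_m x(m) e^{-2*pi*i*omega*m}, n = len(x)-1."""
    n = len(x) - 1
    total = sum(x[m] * cmath.exp(-2j * math.pi * omega * m)
                for m in range(len(x)))
    return cmath.exp(2j * math.pi * omega * n) * total
```

The two functions should agree to within rounding error for any finite input, which is what lets the filter output be read as a phase-rotated partial Fourier sum.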

It can be observed that the poles of the filter's Z transform are located at $e^{+2\pi i\omega}$ and $e^{-2\pi i\omega}$, on a circle of unit radius centered on the origin of the complex Z transform plane. This property indicates that the filter process is marginally stable and potentially vulnerable to numerical error accumulation when computed using low-precision arithmetic and long input sequences.

DFT computations

For the important case of computing a DFT term, the following special restrictions are applied.

- The filtering terminates at index $n = N$, where $N$ is the number of terms in the input sequence of the DFT.

- The frequencies chosen for the Goertzel analysis are restricted to the special form

$$\omega = \frac{K}{N} \tag{7}$$

- The index number $K$ indicating the "frequency bin" of the DFT is selected from the set of index numbers

$$K \in \{0, 1, 2, \ldots, N-1\} \tag{8}$$

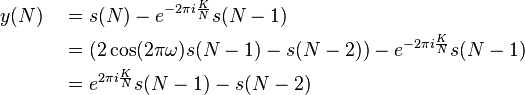

Making these substitutions into equation (6), and observing that the term $e^{+2\pi i K} = 1$, equation (6) then takes the following form.

$$y(N) = \sum_{n=0}^{N} x(n)\,e^{-2\pi i\,nK/N} \tag{9}$$

We can observe that the right side of equation (9) is extremely similar to the defining formula for DFT term $X(K)$, the DFT term for index number $K$, but not exactly the same. The summation shown in equation (9) requires $N+1$ input terms, but only $N$ input terms are available when evaluating a DFT. A simple but inelegant expedient is to extend the input sequence $x(n)$ with one more artificial value $x(N) = 0$.[6] We can see from equation (9) that the mathematical effect on the final result is the same as removing term $x(N)$ from the summation, thus delivering the intended DFT value.

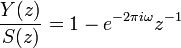

However, there is a more elegant approach that avoids the extra filter pass. From equation (1), we can note that when the extended input term $x(N) = 0$ is used in the final step,

$$s(N) = 2\cos(2\pi\omega)\,s(N-1) - s(N-2) \tag{10}$$

Thus, the algorithm can be completed as follows:

- terminate the IIR filter after processing input term $x(N-1)$,

- apply equation (10) to construct $s(N)$ from the prior outputs $s(N-1)$ and $s(N-2)$,

- apply equation (2) with the calculated $s(N)$ value, and with $s(N-1)$ produced by the final direct calculation of the filter.

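The last two mathematical operations are simplified by combining them algebraically.

$$y(N) = s(N) - e^{-2\pi i K/N}\,s(N-1) = \left(2\cos\!\left(\tfrac{2\pi K}{N}\right)s(N-1) - s(N-2)\right) - e^{-2\pi i K/N}\,s(N-1) = e^{+2\pi i K/N}\,s(N-1) - s(N-2) \tag{11}$$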

Note that stopping the filter updates at term $N-1$ and immediately applying equation (2) rather than equation (11) misses the final filter state updates, yielding a result with incorrect phase.[7]

The particular filtering structure chosen for the Goertzel algorithm is the key to its efficient DFT calculations. We can observe that only one output value $y(N)$ is used for calculating the DFT, so calculations for all the other output terms are omitted. Since the FIR filter is not calculated, the IIR stage calculations $s(0)$, $s(1)$, etc. can be discarded immediately after updating the first stage's internal state.

This seems to leave a paradox: to complete the algorithm, the FIR filter stage must be evaluated once using the final two outputs from the IIR filter stage, while for computational efficiency the IIR filter iteration discards its output values. This is where the properties of the direct-form filter structure are applied. The two internal state variables of the IIR filter provide the last two values of the IIR filter output, which are the terms required to evaluate the FIR filter stage.
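The whole procedure can be sketched in Python and compared against the DFT definition. The sketch assumes the finishing step is y(N) = e^{+2πiK/N}·s(N−1) − s(N−2), and keeps only the two state variables of the IIR stage. The names `goertzel_dft_term` and `direct_dft_term` are hypothetical.

```python
import cmath
import math

def goertzel_dft_term(x, k_term):
    """Compute DFT term X(k_term) of sequence x with the Goertzel recurrence."""
    n_terms = len(x)
    w = 2.0 * math.pi * k_term / n_terms
    coeff = 2.0 * math.cos(w)
    s_prev = 0.0    # holds s(n-1)
    s_prev2 = 0.0   # holds s(n-2)
    for sample in x:                       # IIR stage only, n = 0 .. N-1
        s = sample + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s        # older outputs are discarded
    # Single FIR evaluation from the two retained state values.
    return cmath.exp(1j * w) * s_prev - s_prev2

def direct_dft_term(x, k_term):
    """Reference value straight from the DFT definition."""
    n_terms = len(x)
    return sum(x[n] * cmath.exp(-2j * math.pi * n * k_term / n_terms)
               for n in range(n_terms))
```

The same function accepts complex input, since Python promotes the state variables to complex values automatically. The loop then costs more per sample than in the real-valued case.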

Applications

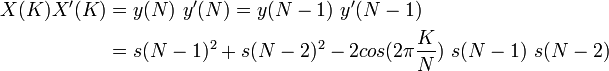

Power spectrum terms

Examining equation (6), a final IIR filter pass to calculate term $y(N)$ using a supplemental input value $x(N) = 0$ applies a complex multiplier of magnitude 1.0 to the previous term $y(N-1)$. Consequently, $y(N)$ and $y(N-1)$ represent equivalent signal power. It is equally valid to apply equation (11) and calculate the signal power from term $y(N)$, or to apply equation (2) and calculate the signal power from term $y(N-1)$. Both cases lead to the following expression for the signal power represented by DFT term $X(K)$.

$$|X(K)|^2 = |y(N)|^2 = |y(N-1)|^2 = s(N-1)^2 + s(N-2)^2 - 2\cos\!\left(\tfrac{2\pi K}{N}\right)s(N-1)\,s(N-2) \tag{12}$$

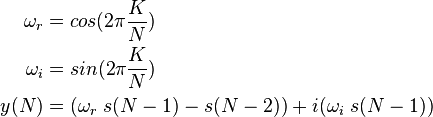

In the pseudocode below, the variables sprev and sprev2 temporarily store output history from the IIR filter, while x[n] is an indexed element of the array x which stores the input.

    Nterms defined here
    Kterm selected here

    // ω is the angular frequency in radians per sample,
    // i.e. 2π times the normalised frequency Kterm / Nterms
    ω = 2 * π * Kterm / Nterms;
    ωr = cos(ω);
    ωi = sin(ω);
    coeff = 2 * ωr;

    sprev = 0;
    sprev2 = 0;
    for each index n in range 0 to Nterms-1
        s = x[n] + coeff * sprev - sprev2;
        sprev2 = sprev;
        sprev = s;
    end

    power = sprev2 * sprev2 + sprev * sprev - coeff * sprev * sprev2;

It is possible to organise the computations so that incoming samples are delivered singly to a software object that maintains the filter state between updates, with the final power result accessed after the other processing is done.
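One way such an object might look is sketched below in Python, assuming real-valued samples. The class name `GoertzelPowerDetector` and its method names are hypothetical, not taken from any particular library.

```python
import math

class GoertzelPowerDetector:
    """Accepts samples one at a time and reports the power of one DFT bin."""

    def __init__(self, k_term, n_terms):
        self.coeff = 2.0 * math.cos(2.0 * math.pi * k_term / n_terms)
        self.s_prev = 0.0    # s(n-1)
        self.s_prev2 = 0.0   # s(n-2)

    def update(self, sample):
        """Advance the IIR stage by one real-valued input sample."""
        s = sample + self.coeff * self.s_prev - self.s_prev2
        self.s_prev2 = self.s_prev
        self.s_prev = s

    def power(self):
        """Signal power of the bin, valid after n_terms samples were fed in."""
        return (self.s_prev2 * self.s_prev2 + self.s_prev * self.s_prev
                - self.coeff * self.s_prev * self.s_prev2)
```

A caller would create one detector per frequency of interest, call `update` for every incoming sample, and read `power` after the block of samples is complete. A fresh object (or a reset of the two state variables) is needed for each new block.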

Single DFT term with real-valued arithmetic

The case of real-valued input data arises frequently, especially in embedded systems where the input streams result from direct measurements of physical processes. Compared with the illustration in the previous section, when the input data are real-valued, the filter internal state variables sprev and sprev2 are also real-valued; consequently, no complex arithmetic is required in the first IIR stage. Optimizing for real-valued arithmetic is typically as simple as applying appropriate real-valued data types for the variables.

After the calculations using input term $x(N-1)$, and filter iterations are terminated, equation (11) must be applied to evaluate the DFT term. The final calculation uses complex-valued arithmetic, but this can be converted into real-valued arithmetic by separating real and imaginary terms.

$$X(K) = \left(\cos\!\left(\tfrac{2\pi K}{N}\right)s(N-1) - s(N-2)\right) + i\,\sin\!\left(\tfrac{2\pi K}{N}\right)s(N-1) \tag{13}$$

Comparing to the power spectrum application, the only difference is the calculation used to finish.

    (Same IIR filter calculations as in the signal power implementation)

    XKreal = sprev * ωr - sprev2;
    XKimag = sprev * ωi;

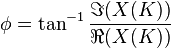

Phase detection

This application requires the same evaluation of DFT term $X(K)$, as discussed in the previous section, using a real-valued or complex-valued input stream. Then the signal phase can be evaluated as:

$$\phi = \tan^{-1}\!\left(\frac{\Im\left(X(K)\right)}{\Re\left(X(K)\right)}\right) \tag{14}$$

taking appropriate precautions for singularities, quadrant, and so forth when computing the inverse tangent function.
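A minimal Python sketch of phase detection for a real-valued input is shown below. It assumes the real and imaginary parts are formed as in the real-arithmetic finishing step above, and it uses the standard library function `math.atan2`, which resolves the quadrant from the signs of both arguments and avoids a division by zero. The function name `goertzel_phase` is hypothetical.

```python
import math

def goertzel_phase(x, k_term):
    """Estimate the phase in radians of DFT bin k_term for real-valued x."""
    n_terms = len(x)
    w = 2.0 * math.pi * k_term / n_terms
    coeff = 2.0 * math.cos(w)
    s_prev = 0.0
    s_prev2 = 0.0
    for sample in x:
        s = sample + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    xk_real = s_prev * math.cos(w) - s_prev2
    xk_imag = s_prev * math.sin(w)
    # atan2 handles all four quadrants and a zero real part.
    return math.atan2(xk_imag, xk_real)
```

When the bin contains almost no signal energy, both parts are close to zero and the returned angle is dominated by rounding noise, so a caller may want to check the power first.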

Complex signals in real arithmetic

Since complex signals decompose linearly into real and imaginary parts, the Goertzel algorithm can be computed in real arithmetic separately over the sequence of real parts, yielding $y_r(n)$; and over the sequence of imaginary parts, yielding $y_i(n)$. After that, the two complex-valued partial results can be recombined:

$$y(n) = y_r(n) + i\,y_i(n) \tag{15}$$
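The decomposition can be sketched in Python as below. The loop in `goertzel_real` uses only real arithmetic, and complex values appear only when the two partial results are combined as y_r + i·y_i. Both function names are hypothetical.

```python
import math

def goertzel_real(x_real, k_term):
    """DFT term of a real-valued sequence, computed with real arithmetic."""
    n_terms = len(x_real)
    w = 2.0 * math.pi * k_term / n_terms
    coeff = 2.0 * math.cos(w)
    s_prev = 0.0
    s_prev2 = 0.0
    for sample in x_real:
        s = sample + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return complex(s_prev * math.cos(w) - s_prev2, s_prev * math.sin(w))

def goertzel_complex(x, k_term):
    """DFT term of a complex sequence from two real-valued passes."""
    part_r = goertzel_real([v.real for v in x], k_term)   # pass over real parts
    part_i = goertzel_real([v.imag for v in x], k_term)   # pass over imaginary parts
    return part_r + 1j * part_i                           # recombine
```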

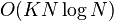

Computational complexity

- According to computational complexity theory, computing a set of $M$ DFT terms using $M$ applications of the Goertzel algorithm on a data set with $N$ values with a "cost per operation" of $K$ has complexity $O(KNM)$. (Here $K$ denotes a cost factor, not the bin index used in the earlier sections.)

- To compute a single DFT bin for a complex input sequence of length $N$, the Goertzel algorithm requires $2N$ multiplications and $4N$ additions/subtractions within the loop, as well as 4 multiplications and 4 final additions/subtractions, for a total of $2N+4$ multiplications and $4N+4$ additions/subtractions. This is repeated for each of the $M$ frequencies.

- In contrast, using an FFT on a data set with $N$ values has complexity $O(KN\log N)$.

- This is harder to apply directly because it depends on the FFT algorithm used, but a typical example is a radix-2 FFT, which requires $2\log_2 N$ multiplications and $3\log_2 N$ additions/subtractions per DFT bin, for each of the $N$ bins.

In the complexity order expressions, when the number of calculated terms $M$ is smaller than $\log N$, the advantage of the Goertzel algorithm is clear. But because FFT code is comparatively very complex, the "cost per unit of work" factor $K$ is often much larger for an FFT, and the practical advantage favours the Goertzel algorithm more than the complexity expressions indicate.

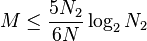

As a rule-of-thumb for determining whether a radix-2 FFT or a Goertzel algorithm is more efficient, adjust the number of terms $N$ in the data set upward to the nearest exact power of 2, calling this $N_2$, and the Goertzel algorithm is likely to be faster if

$$M \le \frac{5 N_2}{6 N}\,\log_2(N_2)$$
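The rule of thumb can be written as a small Python helper. It assumes the threshold is M ≤ (5·N₂ / (6·N))·log₂(N₂), which follows from comparing roughly 6N operations per Goertzel bin with roughly 5·log₂(N₂) operations per bin for a radix-2 FFT of length N₂. The function name `goertzel_likely_faster` is hypothetical, and the result is only a rough guide since it ignores implementation and platform effects.

```python
import math

def goertzel_likely_faster(n_terms, m_bins):
    """Rule-of-thumb comparison of Goertzel (m_bins bins) with a radix-2 FFT."""
    if n_terms < 1:
        raise ValueError("n_terms must be at least 1")
    # Round n_terms up to the nearest exact power of two.
    n2 = 1 << (n_terms - 1).bit_length()
    threshold = (5.0 * n2) / (6.0 * n_terms) * math.log2(n2)
    return m_bins <= threshold
```

For example, with 205 input terms the padded length is 256 and the threshold is about 8.3, so evaluating up to 8 bins would favour the Goertzel algorithm under this estimate.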

FFT implementations and processing platforms have a significant impact on the relative performance. Some FFT implementations[8] perform internal complex-number calculations to generate coefficients on-the-fly, significantly increasing their "cost K per unit of work." FFT and DFT algorithms can use tables of pre-computed coefficient values for better numerical efficiency, but this requires more accesses to coefficient values buffered in external memory, which can lead to increased cache contention that counters some of the numerical advantage.

Both algorithms gain approximately a factor of 2 in efficiency when using real-valued rather than complex-valued input data. However, these gains are natural for the Goertzel algorithm but will not be achieved for the FFT without using certain algorithm variants specialised for transforming real-valued data.

See also

- Bluestein's FFT algorithm (chirp-Z)

- Frequency-Shift Keying (FSK)

- Phase-Shift Keying (PSK)

References

- ↑ Goertzel, G. (January 1958), "An Algorithm for the Evaluation of Finite Trigonometric Series", American Mathematical Monthly 65 (1): 34–35, doi:10.2307/2310304

- ↑ Mock (1985)

- ↑ Chen (1996)

- ↑ Schmer (2000)

- ↑ http://cs-www.cs.yale.edu/c2/images/uploads/AudioProc-TR.pdf

- ↑ "Goertzel's Algorithm". Cnx.org. 2006-09-12. Retrieved 2014-02-03.

- ↑ "Electronic Engineering Times | Connecting the Global Electronics Community". EE Times. Retrieved 2014-02-03.

- ↑ Press; Flannery; Teukolsky; Vetterling (2007), "Chapter 12", Numerical Recipes, The Art of Scientific Computing, Cambridge University Press

- Chen, Chiouguey J. (June 1996), Modified Goertzel Algorithm in DTMF Detection Using the TMS320C80 DSP, Application Report, SPRA066, Texas Instruments

- Mock, P. (March 21, 1985), "Add DTMF Generation and Decoding to DSP-μP Designs", EDN, ISSN 0012-7515; also found in DSP Applications with the TMS320 Family, Vol. 1, Texas Instruments, 1989.

- Schmer, Gunter (May 2000), DTMF Tone Generation and Detection: An Implementation Using the TMS320C54x, Application Report, SPRA096a, Texas Instruments

- Proakis, J. G.; Manolakis, D. G. (1996), Digital Signal Processing: Principles, Algorithms, and Applications, Upper Saddle River, NJ: Prentice Hall, pp. 480–481

External links

- http://netwerkt.wordpress.com/2011/08/25/goertzel-filter/ C Code implementation of iterative Goertzel algorithm

- http://www.embedded.com/design/configurable-systems/4006427/A-DSP-algorithm-for-frequency-analysis (Java) A DSP algorithm for frequency analysis - the Chirp-Z Transform (CZT)