Geometric algebra

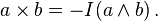

A geometric algebra (GA) is the Clifford algebra of a vector space over the field of real numbers endowed with a quadratic form. The term is also sometimes used as a collective term for the approach to classical, computational and relativistic geometry that applies these algebras. The distinguishing multiplication operation that defines the GA as a unital ring is the geometric product. Taking the geometric product among vectors can yield bivectors, trivectors, or general n-vectors. The addition operation combines these into general multivectors, which are the elements of the ring. This includes, among other possibilities, a well-defined sum of a scalar and a vector, an operation that is impossible by traditional vector addition. This operation may seem peculiar, but in geometric algebra it is seen as no more unusual than the representation of a complex number by the sum of its real and imaginary components.

Geometric algebra is distinguished from Clifford algebra in general by its restriction to real numbers and its emphasis on its geometric interpretation and physical applications. Specific examples of geometric algebras applied in physics include the algebra of physical space, the spacetime algebra, and the conformal geometric algebra. Geometric calculus, an extension of GA that includes differentiation and integration, can be further shown to incorporate other theories such as complex analysis, differential geometry, and differential forms. Because of such a broad reach with a comparatively simple algebraic structure, GA has been advocated, most notably by David Hestenes[1] and Chris Doran,[2] as the preferred mathematical framework for physics. Proponents argue that it provides compact and intuitive descriptions in many areas including classical and quantum mechanics, electromagnetic theory and relativity.[3] Others claim that in some cases the geometric algebra approach is able to sidestep a "proliferation of manifolds"[4] that arises during the standard application of differential geometry.

The geometric product was first briefly mentioned by Hermann Grassmann, who was chiefly interested in developing the closely related but more limited exterior algebra. In 1878, William Kingdon Clifford greatly expanded on Grassmann's work to form what are now usually called Clifford algebras in his honor (although Clifford himself chose to call them "geometric algebras"). For several decades, geometric algebras went somewhat ignored, greatly eclipsed by the vector calculus then newly developed to describe electromagnetism. The term "geometric algebra" was repopularized by Hestenes in the 1960s, who recognized its importance to relativistic physics.[5] Since then, geometric algebra (GA) has also found application in computer graphics and robotics.

Definition and notation

Given a finite-dimensional real quadratic space $V = \mathbb{R}^n$ with quadratic form $Q : V \to \mathbb{R}$, the geometric algebra for this quadratic space is the Clifford algebra $C\ell(V, Q)$.

The algebra product is called the geometric product. It is standard to denote the geometric product by juxtaposition. The above definition of the geometric algebra is abstract, so we summarize the properties of the geometric product by the following set of axioms. If a, b, and c are vectors, then the geometric product has the following properties:

- $(ab)c = a(bc)$ (associativity)
- $a(b + c) = ab + ac$ (distributivity over addition)
- $(a + b)c = ac + bc$ (distributivity over addition)
- $a^2 = Q(a) \in \mathbb{R}$

Note that in the final property above, the square is not necessarily positive. An important property of the geometric product is the existence of elements with a multiplicative inverse, also known as units. If $a^2 \neq 0$ for some vector $a$, then $a^{-1}$ exists and is equal to $a/a^2$. Not all the elements of the algebra are necessarily units. For example, if $u$ is a vector in $V$ such that $u^2 = 1$, the elements $1 \pm u$ have no inverse since they are zero divisors: $(1 - u)(1 + u) = 1 - uu = 1 - 1 = 0$. There may also exist nontrivial idempotent elements such as $(1 + u)/2$.
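The statements about inverses, zero divisors and idempotents can be checked numerically. The following is a minimal sketch, assuming a two-dimensional Euclidean algebra with orthonormal vectors e1 and e2; the representation of a multivector as a dictionary from basis-blade bitmasks to coefficients, and the helper names `blade_product` and `gp`, are illustrative assumptions rather than any standard library API.

```python
from fractions import Fraction


def blade_product(a, b, sig):
    """Multiply two basis blades given as bitmasks; return (sign, bitmask)."""
    swaps, t = 0, a >> 1
    while t:                      # count transpositions needed to sort the factors
        swaps += bin(t & b).count("1")
        t >>= 1
    sign = -1 if swaps % 2 else 1
    common, i = a & b, 0
    while common:                 # a repeated basis vector contracts to its square
        if common & 1:
            sign *= sig[i]
        common >>= 1
        i += 1
    return sign, a ^ b


def gp(A, B, sig):
    """Geometric product of multivectors stored as {bitmask: coefficient}."""
    out = {}
    for ba, ca in A.items():
        for bb, cb in B.items():
            s, bm = blade_product(ba, bb, sig)
            out[bm] = out.get(bm, 0) + s * ca * cb
    return {bm: c for bm, c in out.items() if c != 0}


SIG = (1, 1)                      # assumed signature: e1^2 = e2^2 = +1

# Inverse of a vector: a^{-1} = a / a^2, here with a = 3 e1 + 4 e2 and a^2 = 25.
a = {0b01: 3, 0b10: 4}
a_inv = {bm: Fraction(c, 25) for bm, c in a.items()}
unit = gp(a, a_inv, SIG)          # the scalar 1

# Zero divisors: with u = e1 (so u^2 = 1), (1 - u)(1 + u) vanishes.
zero = gp({0b00: 1, 0b01: -1}, {0b00: 1, 0b01: 1}, SIG)

# Idempotent: p = (1 + u)/2 satisfies p p = p.
p = {0b00: Fraction(1, 2), 0b01: Fraction(1, 2)}
pp = gp(p, p, SIG)
```

Exact rational arithmetic is used so that cancelling terms drop out exactly; with floating-point coefficients a tolerance would be needed instead of the `c != 0` filter.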

Inner and outer product of vectors

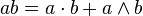

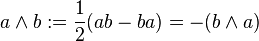

From the axioms above, we find that the geometric product of any two vectors a and b may be written as the sum of a symmetric product and an antisymmetric product:

$$ab = \frac{1}{2}(ab + ba) + \frac{1}{2}(ab - ba)$$

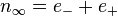

Thus we can define the inner product of vectors to be the symmetric product

$$a \cdot b := \frac{1}{2}(ab + ba) = \frac{1}{2}\left((a + b)^2 - a^2 - b^2\right)$$

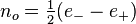

which is a real number because it is a sum of squares. The remaining antisymmetric part is the outer product (the exterior product of the contained exterior algebra):

$$a \wedge b := \frac{1}{2}(ab - ba)$$

The inner and outer products are associated with familiar concepts from standard vector algebra. Pictorially, a and b are parallel if their geometric product is equal to their inner product, whereas a and b are perpendicular if their geometric product is equal to their outer product. In a geometric algebra for which the square of any nonzero vector is positive, the inner product of two vectors can be identified with the dot product of standard vector algebra. The outer product of two vectors can be identified with the signed area enclosed by a parallelogram whose sides are the vectors. The cross product of two vectors in 3 dimensions with positive-definite quadratic form is closely related to their outer product.
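The split of the geometric product into a symmetric part ½(ab + ba) and an antisymmetric part ½(ab − ba) can be illustrated with a small computation. This is a sketch under stated assumptions: a two-dimensional Euclidean algebra, multivectors stored as dictionaries from basis-blade bitmasks to coefficients, and hypothetical helper names (`blade_product`, `gp`, `add`) chosen for illustration.

```python
from fractions import Fraction


def blade_product(a, b, sig):
    """Multiply two basis blades given as bitmasks; return (sign, bitmask)."""
    swaps, t = 0, a >> 1
    while t:                      # count transpositions needed to sort the factors
        swaps += bin(t & b).count("1")
        t >>= 1
    sign = -1 if swaps % 2 else 1
    common, i = a & b, 0
    while common:                 # a repeated basis vector contracts to its square
        if common & 1:
            sign *= sig[i]
        common >>= 1
        i += 1
    return sign, a ^ b


def gp(A, B, sig):
    """Geometric product of multivectors stored as {bitmask: coefficient}."""
    out = {}
    for ba, ca in A.items():
        for bb, cb in B.items():
            s, bm = blade_product(ba, bb, sig)
            out[bm] = out.get(bm, 0) + s * ca * cb
    return {bm: c for bm, c in out.items() if c != 0}


def add(A, B, scale=1):
    """Return A + scale * B, dropping zero coefficients."""
    out = dict(A)
    for bm, c in B.items():
        out[bm] = out.get(bm, 0) + scale * c
    return {bm: c for bm, c in out.items() if c != 0}


SIG = (1, 1)                      # assumed signature: e1^2 = e2^2 = +1
a = {0b01: 2, 0b10: 1}            # a = 2 e1 + e2
b = {0b01: 1, 0b10: 3}            # b = e1 + 3 e2

ab, ba = gp(a, b, SIG), gp(b, a, SIG)
half = Fraction(1, 2)
inner = {bm: half * c for bm, c in add(ab, ba).items()}       # symmetric part
outer = {bm: half * c for bm, c in add(ab, ba, -1).items()}   # antisymmetric part
```

Here the inner product is the scalar 5 (the ordinary dot product) and the outer product is 5 e1e2, whose coefficient is the signed area of the parallelogram spanned by a and b.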

Most instances of geometric algebras of interest have a nondegenerate quadratic form. If the quadratic form is fully degenerate, the inner product of any two vectors is always zero, and the geometric algebra is then simply an exterior algebra. Unless otherwise stated, this article will treat only nondegenerate geometric algebras.

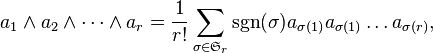

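The outer product is naturally extended as a completely antisymmetric, associative operator between any number of vectors

$$a_1 \wedge a_2 \wedge \cdots \wedge a_r = \frac{1}{r!} \sum_{\sigma \in \mathfrak{S}_r} \operatorname{sgn}(\sigma)\, a_{\sigma(1)} a_{\sigma(2)} \cdots a_{\sigma(r)},$$

where the sum is over all permutations of the indices, with $\operatorname{sgn}(\sigma)$ the sign of the permutation.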

Blades, grading, and canonical basis

A multivector that is the outer product of r independent vectors ($a_1 \wedge a_2 \wedge \cdots \wedge a_r$) is called a blade, and the blade is said to be a multivector of grade r. From the axioms, with closure, every multivector of the geometric algebra is a sum of blades.

Consider a set of r independent vectors $\{a_1, \dots, a_r\}$ spanning an r-dimensional subspace of the vector space. With these, we can define a real symmetric matrix

$$[\mathbf{A}]_{ij} = a_i \cdot a_j$$

By the spectral theorem, $\mathbf{A}$ can be diagonalized to a diagonal matrix $\mathbf{D}$ by an orthogonal matrix $\mathbf{O}$ via

$$\sum_{k,l} [\mathbf{O}]_{ik} [\mathbf{A}]_{kl} [\mathbf{O}^{\mathrm{T}}]_{lj} = [\mathbf{D}]_{ij}$$

Define a new set of vectors $\{e_1, \dots, e_r\}$, known as orthogonal basis vectors, to be those transformed by the orthogonal matrix:

$$e_i = \sum_j [\mathbf{O}]_{ij} a_j$$

Since orthogonal transformations preserve inner products, it follows that $e_i \cdot e_j = [\mathbf{D}]_{ij}$ and thus the $e_1, \dots, e_r$ are perpendicular. In other words the geometric product of two distinct vectors $e_i \neq e_j$ is completely specified by their outer product, or more generally

$$\begin{aligned}
e_1 e_2 \cdots e_r &= e_1 \wedge e_2 \wedge \cdots \wedge e_r \\
&= \left(\sum_j [\mathbf{O}]_{1j} a_j\right) \wedge \left(\sum_j [\mathbf{O}]_{2j} a_j\right) \wedge \cdots \wedge \left(\sum_j [\mathbf{O}]_{rj} a_j\right) \\
&= \det[\mathbf{O}]\, a_1 \wedge a_2 \wedge \cdots \wedge a_r
\end{aligned}$$

Therefore every blade of grade r can be written as a geometric product of r vectors. More generally, if a degenerate geometric algebra is allowed, then the orthogonal matrix is replaced by a block matrix that is orthogonal in the nondegenerate block, and the diagonal matrix has zero-valued entries along the degenerate dimensions. If the new vectors of the nondegenerate subspace are normalized according to

$$\hat{e}_i = \frac{1}{\sqrt{|e_i \cdot e_i|}}\, e_i$$

then these normalized vectors must square to +1 or −1. By Sylvester's law of inertia, the total number of +1's and the total number of −1's along the diagonal matrix is invariant. By extension, the total number p of orthonormal basis vectors that square to +1 and the total number q of orthonormal basis vectors that square to −1 is invariant. (If the degenerate case is allowed, then the total number of basis vectors that square to zero is also invariant.) We denote this algebra $\mathcal{G}(p,q)$. For example, $\mathcal{G}(3,0)$ models 3D Euclidean space, $\mathcal{G}(1,3)$ relativistic spacetime and $\mathcal{G}(4,1)$ a 3D conformal geometric algebra.

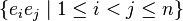

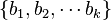

The set of all possible products of n orthogonal basis vectors with indices in increasing order, including 1 as the empty product, forms a basis for the entire geometric algebra (an analogue of the PBW theorem). For example, the following is a basis for the geometric algebra $\mathcal{G}(3,0)$:

$$\{1,\ e_1,\ e_2,\ e_3,\ e_1 e_2,\ e_1 e_3,\ e_2 e_3,\ e_1 e_2 e_3\}$$

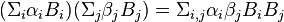

A basis formed this way is called a canonical basis for the geometric algebra, and any other orthogonal basis for V will produce another canonical basis. Each canonical basis consists of $2^n$ elements. Every multivector of the geometric algebra can be expressed as a linear combination of the canonical basis elements. If the canonical basis elements are $\{B_i \mid i \in S\}$ with S being an index set, then the geometric product of any two multivectors is

$$\left(\sum_i \alpha_i B_i\right)\left(\sum_j \beta_j B_j\right) = \sum_{i,j} \alpha_i \beta_j B_i B_j.$$
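The canonical basis can be enumerated mechanically: each element corresponds to a subset of the n orthogonal basis vectors, taken in increasing index order. The sketch below assumes the basis vectors are named e1, ..., en and uses an illustrative function name, `canonical_basis`.

```python
from itertools import combinations


def canonical_basis(n):
    """List the names of the 2**n canonical basis blades, ordered by grade."""
    names = []
    for r in range(n + 1):
        for idx in combinations(range(1, n + 1), r):
            # The empty product (r = 0) is the scalar 1.
            names.append("".join(f"e{i}" for i in idx) or "1")
    return names


basis3 = canonical_basis(3)
```

For n = 3 this yields the eight elements 1, e1, e2, e3, e1e2, e1e3, e2e3 and e1e2e3.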

Grade projection

Using a canonical basis, a graded vector space structure can be established. Elements of the geometric algebra that are simply scalar multiples of 1 are grade-0 blades and are called scalars. Nonzero multivectors that are in the span of $\{e_1, \dots, e_n\}$ are grade-1 blades and are the ordinary vectors. Multivectors in the span of $\{e_i e_j \mid 1 \leq i < j \leq n\}$ have grade 2 and are the bivectors. This terminology continues through to the last grade of n-vectors. Alternatively, grade-n blades are called pseudoscalars, grade-(n−1) blades pseudovectors, etc. Many of the elements of the algebra are not graded by this scheme since they are sums of elements of differing grade. Such elements are said to be of mixed grade. The grading of multivectors is independent of the orthogonal basis chosen originally.

A multivector $A$ may be decomposed with the grade-projection operator $\langle A \rangle_r$, which outputs the grade-r portion of A. As a result:

$$A = \sum_{r=0}^{n} \langle A \rangle_r$$

As an example, the geometric product of two vectors is $ab = a \cdot b + a \wedge b = \langle ab \rangle_0 + \langle ab \rangle_2$, since $\langle ab \rangle_0 = a \cdot b$ and $\langle ab \rangle_2 = a \wedge b$ and $\langle ab \rangle_i = 0$ for i other than 0 and 2.
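Grade projection is simple to express once a multivector is written in a canonical basis. The following is a minimal sketch, assuming multivectors are stored as dictionaries whose keys are bitmasks (bit i set means the basis vector e_(i+1) is a factor), so that the grade of a basis blade is the number of set bits; the function name `grade` is illustrative.

```python
def grade(A, r):
    """Grade projection <A>_r for a multivector stored as {bitmask: coefficient}."""
    return {bm: c for bm, c in A.items() if bin(bm).count("1") == r}


# A mixed-grade multivector in three dimensions: 1 + 2 e1 + 3 e2e3 + 4 e1e2e3.
M = {0b000: 1, 0b001: 2, 0b110: 3, 0b111: 4}
parts = [grade(M, r) for r in range(4)]

# Summing the projections over all grades recovers the multivector.
recombined = {}
for part in parts:
    recombined.update(part)

# The product ab of a = 2 e1 + e2 and b = e1 + 3 e2 equals 5 + 5 e1e2,
# so only its grade-0 and grade-2 projections are nonzero.
ab = {0b00: 5, 0b11: 5}
```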

The decomposition of a multivector $A$ may also be split into those components that are even and those that are odd:

$$A^{+} = \langle A \rangle_0 + \langle A \rangle_2 + \langle A \rangle_4 + \cdots$$

$$A^{-} = \langle A \rangle_1 + \langle A \rangle_3 + \langle A \rangle_5 + \cdots$$

This makes the algebra a $\mathbb{Z}_2$-graded algebra or superalgebra with the geometric product. Since the geometric product of two even multivectors is an even multivector, they define an even subalgebra. The even subalgebra of an n-dimensional geometric algebra is isomorphic to a full geometric algebra of (n−1) dimensions. Examples include $\mathcal{G}^{+}(2,0) \cong \mathbb{C}$ and $\mathcal{G}^{+}(3,0) \cong \mathbb{H}$.

Representation of subspaces

Geometric algebra represents subspaces of V as multivectors, and so they coexist in the same algebra with vectors from V. A k-dimensional subspace W of V is represented by taking an orthogonal basis $\{b_1, b_2, \dots, b_k\}$ and using the geometric product to form the blade $D = b_1 b_2 \cdots b_k$. There are multiple blades representing W; all those representing W are scalar multiples of D. These blades can be separated into two sets: positive multiples of D and negative multiples of D. The positive multiples of D are said to have the same orientation as D, and the negative multiples the opposite orientation.

Blades are important since geometric operations such as projections, rotations and reflections depend on the factorability via the outer product that (the restricted class of) n-blades provide but that (the generalized class of) grade-n multivectors do not when n ≥ 4.

Unit pseudoscalars

Unit pseudoscalars are blades that play important roles in GA. A unit pseudoscalar for a non-degenerate subspace W of V is a blade that is the product of the members of an orthonormal basis for W. It can be shown that if $I$ and $I'$ are both unit pseudoscalars for W, then $I = \pm I'$ and $I^2 = \pm 1$.

Suppose the geometric algebra $\mathcal{G}(n,0)$ with the familiar positive definite inner product on $\mathbb{R}^n$ is formed. Given a plane (2-dimensional subspace) of $\mathbb{R}^n$, one can find an orthonormal basis $\{b_1, b_2\}$ spanning the plane, and thus find a unit pseudoscalar $I = b_1 b_2$ representing this plane. The geometric product of any two vectors in the span of $b_1$ and $b_2$ lies in $\{\alpha_0 + \alpha_1 I \mid \alpha_i \in \mathbb{R}\}$, that is, it is the sum of a 0-vector and a 2-vector.

By the properties of the geometric product, $I^2 = b_1 b_2 b_1 b_2 = -b_1 b_2 b_2 b_1 = -1$. The resemblance to the imaginary unit is not accidental: the subspace $\{\alpha_0 + \alpha_1 I \mid \alpha_i \in \mathbb{R}\}$ is R-algebra isomorphic to the complex numbers. In this way, a copy of the complex numbers is embedded in the geometric algebra for each 2-dimensional subspace of V on which the quadratic form is definite.

It is sometimes possible to identify the presence of an imaginary unit in a physical equation. Such units arise from one of the many quantities in the real algebra that square to −1, and these have geometric significance because of the properties of the algebra and the interaction of its various subspaces.

In $\mathcal{G}(3,0)$, an exceptional case occurs. Given a canonical basis built from orthonormal $e_i$'s from V, the set of all 2-vectors is generated by

$$\{e_3 e_2,\ e_1 e_3,\ e_2 e_1\}.$$

Labelling these i, j and k (momentarily deviating from our uppercase convention), the subspace generated by 0-vectors and 2-vectors is exactly $\{\alpha_0 + i\alpha_1 + j\alpha_2 + k\alpha_3 \mid \alpha_i \in \mathbb{R}\}$. This set is seen to be a subalgebra, and furthermore is R-algebra isomorphic to the quaternions, another important algebraic system.
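The quaternion relations can be verified directly for one common labelling of the unit bivectors, i = e3e2, j = e1e3 and k = e2e1 (assumed here). The sketch below uses a hypothetical dictionary representation of multivectors, keyed by basis-blade bitmasks, in a three-dimensional Euclidean algebra; `blade_product` and `gp` are illustrative helper names.

```python
def blade_product(a, b, sig):
    """Multiply two basis blades given as bitmasks; return (sign, bitmask)."""
    swaps, t = 0, a >> 1
    while t:                      # count transpositions needed to sort the factors
        swaps += bin(t & b).count("1")
        t >>= 1
    sign = -1 if swaps % 2 else 1
    common, i = a & b, 0
    while common:                 # a repeated basis vector contracts to its square
        if common & 1:
            sign *= sig[i]
        common >>= 1
        i += 1
    return sign, a ^ b


def gp(A, B, sig):
    """Geometric product of multivectors stored as {bitmask: coefficient}."""
    out = {}
    for ba, ca in A.items():
        for bb, cb in B.items():
            s, bm = blade_product(ba, bb, sig)
            out[bm] = out.get(bm, 0) + s * ca * cb
    return {bm: c for bm, c in out.items() if c != 0}


SIG = (1, 1, 1)                   # assumed signature of G(3,0)
e1, e2, e3 = {0b001: 1}, {0b010: 1}, {0b100: 1}

i = gp(e3, e2, SIG)               # e3 e2
j = gp(e1, e3, SIG)               # e1 e3
k = gp(e2, e1, SIG)               # e2 e1
minus_one = {0b000: -1}

ijk = gp(gp(i, j, SIG), k, SIG)
```

With this labelling, i² = j² = k² = ijk = −1 and ij = k, which are Hamilton's defining relations for the quaternions.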

Dual basis

Let $\{e_1, \dots, e_n\}$ be a basis of V, i.e. a set of n linearly independent vectors that span the n-dimensional vector space V. The basis that is dual to $\{e_1, \dots, e_n\}$ is the set of elements of the dual vector space $V^{*}$ that forms a biorthogonal system with this basis, thus being the elements denoted $\{e^1, \dots, e^n\}$ satisfying

$$e^i \cdot e_j = \delta^i{}_j,$$

where δ is the Kronecker delta.

Given a nondegenerate quadratic form on V, V∗ becomes naturally identified with V, and the dual basis may be regarded as elements of V, but are not in general the same set as the original basis.

Given further a GA of V, let

$$I = e_1 \wedge \cdots \wedge e_n$$

be the pseudoscalar (which does not necessarily square to ±1) formed from the basis $\{e_1, \dots, e_n\}$. The dual basis vectors may be constructed as

$$e^i = (-1)^{i-1} (e_1 \wedge \cdots \wedge \check{e}_i \wedge \cdots \wedge e_n)\, I^{-1},$$

where the $\check{e}_i$ denotes that the ith basis vector is omitted from the product.
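A small worked example may make the construction concrete. It assumes the dual basis is built in the commonly stated form $e^i = (-1)^{i-1}(e_1 \wedge \cdots \wedge \check{e}_i \wedge \cdots \wedge e_n) I^{-1}$, and uses a non-orthogonal basis of the Euclidean plane written in terms of hypothetical orthonormal vectors $x$ and $y$ (so $x^2 = y^2 = 1$ and $xy = -yx$).

```latex
% Basis: e_1 = x, e_2 = x + y (linearly independent, not orthogonal).
\begin{aligned}
I      &= e_1 \wedge e_2 = x \wedge (x + y) = xy, \qquad I^{-1} = yx, \\
e^1    &= (+1)\, e_2\, I^{-1} = (x + y)\,yx = xyx + yyx = -y + x = x - y, \\
e^2    &= (-1)\, e_1\, I^{-1} = -\,x\,yx = y, \\[4pt]
e^1 \cdot e_1 &= (x - y)\cdot x = 1, \qquad e^1 \cdot e_2 = (x - y)\cdot(x + y) = 0, \\
e^2 \cdot e_1 &= y \cdot x = 0, \qquad\;\;\, e^2 \cdot e_2 = y \cdot (x + y) = 1 .
\end{aligned}
```

The four inner products reproduce the Kronecker delta, and the dual basis $\{x - y,\ y\}$ differs from the original basis $\{x,\ x + y\}$, as expected for a basis that is not orthonormal.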

Extensions of the inner and outer products

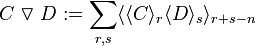

It is common practice to extend the outer product on vectors to the entire algebra. This may be done through the use of the grade projection operator:

- $C \wedge D := \sum_{r,s} \langle \langle C \rangle_r \langle D \rangle_s \rangle_{r+s}$ (the outer product)

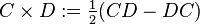

This generalization is consistent with the above definition involving antisymmetrization. Another generalization related to the outer product is the commutator product:

$$C \times D := \frac{1}{2}(CD - DC)$$

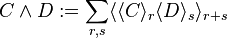

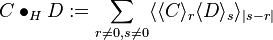

The regressive product is the dual of the outer product:[6]

$$C \vee D := \left((C I^{-1}) \wedge (D I^{-1})\right) I$$

The inner product on vectors can also be generalised, but in more than one non-equivalent way. The paper (Dorst 2002) gives a full treatment of several different inner products developed for geometric algebras and their interrelationships, and the notation is taken from there. Many authors use the same symbol as for the inner product of vectors for their chosen extension (e.g. Hestenes and Perwass). No consistent notation has emerged.

Among these several different generalizations of the inner product on vectors are:

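- $C \rfloor D := \sum_{r,s} \langle \langle C \rangle_r \langle D \rangle_s \rangle_{s-r}$ (the left contraction)
- $C \lfloor D := \sum_{r,s} \langle \langle C \rangle_r \langle D \rangle_s \rangle_{r-s}$ (the right contraction)
- $C * D := \sum_{r,s} \langle \langle C \rangle_r \langle D \rangle_s \rangle_{0}$ (the scalar product)
- $C \bullet D := \sum_{r,s} \langle \langle C \rangle_r \langle D \rangle_s \rangle_{|s-r|}$ (the "(fat) dot" product)
- $C \bullet_H D := \sum_{r \neq 0,\, s \neq 0} \langle \langle C \rangle_r \langle D \rangle_s \rangle_{|s-r|}$ (Hestenes's inner product)[7]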

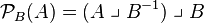

(Dorst 2002) makes an argument for the use of contractions in preference to Hestenes's inner product; they are algebraically more regular and have cleaner geometric interpretations. A number of identities incorporating the contractions are valid without restriction of their inputs. Benefits of using the left contraction as an extension of the inner product on vectors include that the identity $ab = a \cdot b + a \wedge b$ is extended to $aB = a \rfloor B + a \wedge B$ for any vector a and multivector B, and that the projection operation $\mathcal{P}_b(a) = (a \cdot b^{-1})\, b$ is extended to $\mathcal{P}_B(A) = (A \rfloor B^{-1}) \rfloor B$ for any blades A and B (with a minor modification to accommodate null B, given below).

Terminology specific to geometric algebra

Some terms are used in geometric algebra with a meaning that differs from the use of those terms in other fields of mathematics. Some of these are listed here:

- Vector

- In GA this refers specifically to an element of the 1-vector subspace unless otherwise clear from the context, despite the entire algebra forming a vector space.

- Grade

- In GA this refers to a grading as an algebra under the outer product (an $\mathbb{N}$-grading), and not under the geometric product (which produces a $\mathbb{Z}_2^n$-grading).

- Outer product

- In GA this refers to what is generally called the exterior product (including in GA as an alternative). It is not the outer product of linear algebra.

- Inner product

- In GA this generally refers to a scalar product on the vector subspace (which is not required to be positive definite) and may include any chosen extension of this product to the entire algebra. It is not specifically the inner product on a normed vector space.

- Versor

- In GA this refers to an object that can be constructed as the geometric product of any number of non-null vectors. The term otherwise may refer to a unit quaternion, analogous to a rotor in GA.

- Outermorphism

- This term is used only in GA, and refers to a linear map on the vector subspace, extended to apply to the entire algebra by defining it as preserving the outer product.

Geometric interpretation

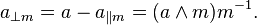

Projection and rejection

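For any vector a and any invertible vector m,

$$a = a m m^{-1} = (a \cdot m + a \wedge m)\, m^{-1} = a_{\parallel m} + a_{\perp m},$$

where the projection of a onto m (or the parallel part) is

$$a_{\parallel m} = (a \cdot m)\, m^{-1}$$

and the rejection of a from m (or the perpendicular part) is

$$a_{\perp m} = a - a_{\parallel m} = (a \wedge m)\, m^{-1}.$$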
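For vectors in a Euclidean space, the projection (a · m) m⁻¹ reduces to ordinary component arithmetic, because m⁻¹ = m / m². The sketch below makes that assumption explicitly (positive-definite metric, integer components stored as tuples) and uses illustrative function names; the rejection is obtained as the remainder a minus its projection.

```python
from fractions import Fraction


def dot(u, v):
    """Euclidean inner product of two coordinate tuples."""
    return sum(x * y for x, y in zip(u, v))


def project(a, m):
    """Parallel part of a along m: (a . m) m^{-1} with m^{-1} = m / m^2."""
    scale = Fraction(dot(a, m), dot(m, m))   # assumes integer components, m != 0
    return tuple(scale * x for x in m)


def reject(a, m):
    """Perpendicular part of a with respect to m: a minus its projection."""
    return tuple(x - y for x, y in zip(a, project(a, m)))


a = (2, 3, 1)
m = (1, 1, 0)
a_par = project(a, m)     # (5/2, 5/2, 0)
a_perp = reject(a, m)     # (-1/2, 1/2, 1)
```

The two parts sum to a, and the rejection is orthogonal to m, which is the defining property of the decomposition.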

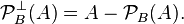

Using the concept of a k-blade B as representing a subspace of V and every multivector ultimately being expressed in terms of vectors, this generalizes to projection of a general multivector onto any invertible k-blade B as[8]

$$\mathcal{P}_B(A) = (A \rfloor B^{-1}) \rfloor B$$

with the rejection being defined as

$$\mathcal{P}_B^{\perp}(A) = A - \mathcal{P}_B(A).$$

The projection and rejection generalize to null blades B by replacing the inverse $B^{-1}$ with the pseudoinverse $B^{+}$ with respect to the contractive product.[9] The outcome of the projection coincides in both cases for non-null blades.[10][11] For null blades B, the definition of the projection given here with the first contraction rather than the second being onto the pseudoinverse should be used,[12] as only then is the result necessarily in the subspace represented by B.[10] The projection generalizes through linearity to general multivectors A.[13] The projection is not linear in B and does not generalize to objects B that are not blades.

Reflections

The definition of a reflection occurs in two forms in the literature. Several authors work with reflection on a vector (negating all vector components except that parallel to the specifying vector), while others work with reflection along a vector (negating only the component parallel to the specifying vector, or reflection in the hypersurface orthogonal to that vector). Either may be used to build general versor operations, but the former has the advantage that it extends to the algebra in a simpler and algebraically more regular fashion.

Reflection on a vector

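The result of reflecting a vector a on another vector n is to negate the rejection of a. It is akin to reflecting the vector a through the origin, except that the projection of a onto n is not reflected. Such an operation is described by

$$a \mapsto a_{\parallel n} - a_{\perp n} = (a \cdot n)\, n^{-1} - (a \wedge n)\, n^{-1} = (n \cdot a + n \wedge a)\, n^{-1} = n a n^{-1}.$$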

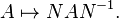

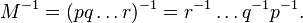

Repeating this operation results in a general versor operation (including both rotations and reflections) of a general multivector A being expressed as

$$A \mapsto N A N^{-1}.$$

This allows a general definition of any versor N (including both reflections and rotors) as an object that can be expressed as a geometric product of any number of non-null 1-vectors. Such a versor can be applied in a uniform sandwich product as above irrespective of whether it is of even (a proper rotation) or odd grade (an improper rotation i.e. general reflection). The set of all versors with the geometric product as the group operation constitutes the Clifford group of the Clifford algebra $C\ell_{p,q}(\mathbb{R})$.[14]

Reflection along a vector

The reflection of a vector a along a vector m, or equivalently in the hyperplane orthogonal to m, is the same as negating the component of a vector parallel to m. The result of the reflection will be

$$a' = -a_{\parallel m} + a_{\perp m} = -m a m^{-1}.$$

This is not the most general operation that may be regarded as a reflection when the dimension n ≥ 4. A general reflection may be expressed as the composite of any odd number of single-axis reflections. Thus, a general reflection of a vector may be written

$$a \mapsto -M a M^{-1},$$

where

$$M = pq \cdots r$$

and

$$M^{-1} = (pq \cdots r)^{-1} = r^{-1} \cdots q^{-1} p^{-1}.$$

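If we define the reflection along a non-null vector m of the product of vectors as the reflection of every vector in the product along the same vector, we get for any product of an odd number of vectors that, by way of example,

$$(abc)' = a'b'c' = (-m a m^{-1})(-m b m^{-1})(-m c m^{-1}) = -m\, abc\, m^{-1}$$

and for the product of an even number of vectors that

$$(abcd)' = a'b'c'd' = (-m a m^{-1})(-m b m^{-1})(-m c m^{-1})(-m d m^{-1}) = m\, abcd\, m^{-1}.$$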

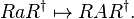

Using the concept of every multivector ultimately being expressed in terms of vectors, the reflection of a general multivector A using any reflection versor M may be written

$$A \mapsto \alpha(M A M^{-1}),$$

where α is the automorphism of reflection through the origin of the vector space (v ↦ −v) extended through multilinearity to the whole algebra.

Hypervolume of an n-parallelotope spanned by n vectors

For vectors $a$ and $b$ spanning a parallelogram we have

$$a \wedge b = ((a \wedge b)\, b^{-1})\, b = a_{\perp b}\, b$$

with the result that $a \wedge b$ is linear in the product of the "altitude" and the "base" of the parallelogram, that is, its area.

Similar interpretations are true for any number of vectors spanning an n-dimensional parallelotope; the outer product of vectors $a_1, a_2, \dots, a_n$, that is $\bigwedge_{i=1}^{n} a_i$, has a magnitude equal to the volume of the n-parallelotope. An n-vector does not necessarily have the shape of a parallelotope; this is a convenient visualization. It could be any shape, although the volume equals that of the parallelotope.

Rotations

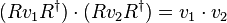

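If we have a product of vectors $R = a_1 a_2 \cdots a_r$ then we denote the reverse as

$$R^{\dagger} = (a_1 a_2 \cdots a_r)^{\dagger} = a_r \cdots a_2 a_1.$$

As an example, assume that $R = ab$; we get

$$R R^{\dagger} = ab\,ba = a b^2 a = a^2 b^2 = ba\,ab = R^{\dagger} R.$$

Scaling R so that $R R^{\dagger} = 1$ then

$$(R v R^{\dagger})^2 = R v^2 R^{\dagger} = v^2 R R^{\dagger} = v^2,$$

so $R v R^{\dagger}$ leaves the length of $v$ unchanged. We can also show that

$$(R v_1 R^{\dagger}) \cdot (R v_2 R^{\dagger}) = v_1 \cdot v_2,$$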

so the transformation RvR† preserves both length and angle. It therefore can be identified as a rotation or rotoreflection; R is called a rotor if it is a proper rotation (as it is if it can be expressed as a product of an even number of vectors) and is an instance of what is known in GA as a versor (presumably for historical reasons).
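A rotor built as the product of two vectors can be applied with the sandwich product to check that it acts as a rotation. The following is a sketch under stated assumptions: three-dimensional Euclidean space, integer coefficients, multivectors stored as dictionaries keyed by basis-blade bitmasks, and illustrative helper names (`blade_product`, `gp`, `reverse`, `sandwich`). Instead of scaling R so that RR† = 1, the result is divided by the scalar RR†, which keeps the arithmetic exact.

```python
from fractions import Fraction


def blade_product(a, b, sig):
    """Multiply two basis blades given as bitmasks; return (sign, bitmask)."""
    swaps, t = 0, a >> 1
    while t:                      # count transpositions needed to sort the factors
        swaps += bin(t & b).count("1")
        t >>= 1
    sign = -1 if swaps % 2 else 1
    common, i = a & b, 0
    while common:                 # a repeated basis vector contracts to its square
        if common & 1:
            sign *= sig[i]
        common >>= 1
        i += 1
    return sign, a ^ b


def gp(A, B, sig):
    """Geometric product of multivectors stored as {bitmask: coefficient}."""
    out = {}
    for ba, ca in A.items():
        for bb, cb in B.items():
            s, bm = blade_product(ba, bb, sig)
            out[bm] = out.get(bm, 0) + s * ca * cb
    return {bm: c for bm, c in out.items() if c != 0}


def reverse(A):
    """Reverse: a grade-k basis blade picks up the sign (-1)**(k(k-1)/2)."""
    out = {}
    for bm, c in A.items():
        k = bin(bm).count("1")
        out[bm] = -c if (k * (k - 1) // 2) % 2 else c
    return out


def sandwich(R, v, sig):
    """Compute R v R† divided by the scalar R R† (assumed nonzero)."""
    Rrev = reverse(R)
    norm2 = gp(R, Rrev, sig).get(0, 0)
    out = gp(gp(R, v, sig), Rrev, sig)
    return {bm: Fraction(c, norm2) for bm, c in out.items()}


SIG = (1, 1, 1)
e1, e2, e3 = {0b001: 1}, {0b010: 1}, {0b100: 1}

a = e1                            # first reflection vector
b = {0b001: 1, 0b010: 1}          # e1 + e2, at 45 degrees to a
R = gp(b, a, SIG)                 # R = ba = 1 - e1e2

rotated_e1 = sandwich(R, e1, SIG)
rotated_e2 = sandwich(R, e2, SIG)
rotated_e3 = sandwich(R, e3, SIG)
```

Because a and b are 45 degrees apart, R rotates by 90 degrees in the e1e2 plane: e1 maps to e2, e2 maps to −e1, and e3, which is orthogonal to the plane, is unchanged.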

There is a general method for rotating a vector involving the formation of a multivector of the form $R = e^{-B\theta/2}$ that produces a rotation $\theta$ in the plane and with the orientation defined by a 2-blade $B$.

Rotors are a generalization of quaternions to n-D spaces.

For more about reflections, rotations and "sandwiching" products like RvR† see Plane of rotation.

Linear functions

An important class of functions of multivectors are the linear functions mapping multivectors to multivectors. The geometric algebra of an n-dimensional vector space is spanned by $2^n$ canonical basis elements. If a multivector in this basis is represented by a $2^n \times 1$ real column matrix, then in principle all linear transformations of the multivector can be written as the matrix multiplication of a $2^n \times 2^n$ real matrix on the column, just as in the entire theory of linear algebra in $2^n$ dimensions.

There are several issues with this naive generalization. To see this, recall that the eigenvalues of a real matrix may in general be complex. The scalar coefficients of blades must be real, so these complex values are of no use. If we attempt to proceed with an analogy for these complex eigenvalues anyway, we know that in ordinary linear algebra, complex eigenvalues are associated with rotation matrices. However if the linear function is truly general, it could allow arbitrary exchanges among the different grades, such as a "rotation" of a scalar into a vector. This operation has no clear geometric interpretation.

We seek to restrict the class of linear functions of multivectors to more geometrically sensible transformations. A common restriction is to require that the linear functions be grade-preserving. The grade-preserving linear functions are the linear functions that map scalars to scalars, vectors to vectors, bivectors to bivectors, etc. In matrix representation, the grade-preserving linear functions are block diagonal matrices, where each r-grade block is of size $\binom{n}{r}$. A weaker restriction allows the linear functions to map r-grade multivectors into linear combinations of r-grade and (n−r)-grade multivectors. These functions map scalars into scalars+pseudoscalars, vectors to vectors+pseudovectors, etc.
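The block sizes of a grade-preserving linear map follow from counting basis blades per grade: there are "n choose r" basis blades of grade r, and these counts sum to the full dimension 2^n. A short sketch, with an illustrative function name:

```python
from math import comb


def grade_block_sizes(n):
    """Number of canonical basis blades of each grade r = 0..n."""
    return [comb(n, r) for r in range(n + 1)]


sizes3 = grade_block_sizes(3)   # scalar, vector, bivector, pseudoscalar blocks
sizes4 = grade_block_sizes(4)
```

For n = 3 the blocks have sizes 1, 3, 3 and 1, so a grade-preserving map has at most 1 + 9 + 9 + 1 = 20 free entries, compared with 64 for an unrestricted 8 × 8 matrix.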

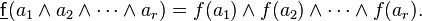

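Often an invertible linear transformation from vectors to vectors is already of known interest. There is no unique way to generalize these transformations to the entire geometric algebra without further restriction. Even the restriction that the linear transformation be grade-preserving is not enough. We therefore desire a stronger rule, motivated by geometric interpretation, for generalizing these linear transformations of vectors in a standard way. The most natural choice is that of the outermorphism of the linear transformation because it extends the concepts of reflection and rotation straightforwardly. If f is a function that maps vectors to vectors, then its outermorphism is the function that obeys the rule

$$\underline{\mathsf{f}}(a_1 \wedge a_2 \wedge \cdots \wedge a_r) = f(a_1) \wedge f(a_2) \wedge \cdots \wedge f(a_r).$$

In particular, the outermorphism of the reflection of a vector on a vector is

$$\underline{\mathsf{f}}(a_1 \wedge a_2 \wedge \cdots \wedge a_r) = (n a_1 n^{-1}) \wedge (n a_2 n^{-1}) \wedge \cdots \wedge (n a_r n^{-1}) = n (a_1 \wedge a_2 \wedge \cdots \wedge a_r)\, n^{-1}$$

and the outermorphism of the rotation of a vector by a rotor is

$$\underline{\mathsf{f}}(a_1 \wedge a_2 \wedge \cdots \wedge a_r) = (R a_1 R^{-1}) \wedge (R a_2 R^{-1}) \wedge \cdots \wedge (R a_r R^{-1}) = R (a_1 \wedge a_2 \wedge \cdots \wedge a_r)\, R^{-1}.$$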

Examples and applications

Intersection of a line and a plane

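We may define the line parametrically by $p = t + \alpha v$, where p and t are position vectors for points P and T and v is the direction vector for the line. Let the plane be represented by a bivector B and contain the points P and Q, where q is the position vector for Q.

Then

$$B \wedge (p - q) = 0$$

and

$$B \wedge (t + \alpha v - q) = 0$$

so

$$\alpha = \frac{B \wedge (q - t)}{B \wedge v}$$

and

$$p = t + \left(\frac{B \wedge (q - t)}{B \wedge v}\right) v.$$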
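In three Euclidean dimensions the computation can be sketched with ordinary vector arithmetic. The code below swaps in a normal vector n for the plane bivector: it assumes that B ∧ x is proportional to (n · x) times the unit pseudoscalar when n is dual to B, so that a ratio of two such trivectors reduces to a ratio of dot products. The function name `intersect`, the point names and the integer-component restriction are illustrative assumptions.

```python
from fractions import Fraction


def dot(u, v):
    """Euclidean inner product of two coordinate tuples."""
    return sum(x * y for x, y in zip(u, v))


def intersect(t, v, q, n):
    """Intersect the line p = t + alpha * v with the plane through q with normal n.

    Assumes integer components; raises if the line is parallel to the plane.
    """
    denom = dot(n, v)
    if denom == 0:
        raise ValueError("line is parallel to the plane")
    q_minus_t = tuple(qi - ti for qi, ti in zip(q, t))
    alpha = Fraction(dot(n, q_minus_t), denom)
    return tuple(ti + alpha * vi for ti, vi in zip(t, v))


# Line through the origin along (1, 1, 1); plane z = 2 through (0, 0, 2).
p = intersect(t=(0, 0, 0), v=(1, 1, 1), q=(0, 0, 2), n=(0, 0, 1))

# A second case with a non-axis-aligned plane x + y + z = 6 and line x = y = 0.
p2 = intersect(t=(0, 0, 1), v=(0, 0, 1), q=(6, 0, 0), n=(1, 1, 1))
```

The first intersection is the point (2, 2, 2) and the second is (0, 0, 6); in both cases the result satisfies n · (p − q) = 0, which is the dot-product form of the condition that p lies in the plane.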

Rotating systems

The mathematical description of rotational forces such as torque and angular momentum makes use of the cross product.

The cross product can be viewed in terms of the outer product, allowing a more natural geometric interpretation of the cross product as a bivector using the dual relationship

$$a \times b = -I (a \wedge b).$$

For example, torque is generally defined as the magnitude of the perpendicular force component times distance, or work per unit angle.

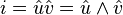

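Suppose a circular path in an arbitrary plane containing orthonormal vectors $\hat{u}$ and $\hat{v}$ is parameterized by angle.

$$\mathbf{r} = r(\hat{u} \cos\theta + \hat{v} \sin\theta) = r \hat{u} (\cos\theta + \hat{u}\hat{v} \sin\theta)$$

By designating the unit bivector of this plane as the imaginary number

$$i = \hat{u}\hat{v} = \hat{u} \wedge \hat{v}, \qquad i^2 = -1,$$

this path vector can be conveniently written in complex exponential form

$$\mathbf{r} = r \hat{u} e^{i\theta}$$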

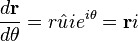

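and the derivative with respect to angle is

$$\frac{d\mathbf{r}}{d\theta} = r \hat{u}\, i\, e^{i\theta} = \mathbf{r}\, i.$$

So the torque, the rate of change of work W, due to a force F, is

$$\tau = \frac{dW}{d\theta} = F \cdot \frac{d\mathbf{r}}{d\theta} = F \cdot (\mathbf{r}\, i).$$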

Unlike the cross product description of torque, $\tau = \mathbf{r} \times F$, the geometric algebra description does not introduce a vector in the normal direction, a vector that does not exist in two dimensions and that is not unique in more than three dimensions. The unit bivector describes the plane and the orientation of the rotation, and the sense of the rotation is relative to the angle between the vectors $\hat{u}$ and $\hat{v}$.

Electrodynamics and special relativity

In physics, the main applications are the geometric algebra of Minkowski 3+1 spacetime, $C\ell_{1,3}$, called spacetime algebra (STA),[5] or less commonly, $C\ell_3$, called the algebra of physical space (APS), where $C\ell_3$ is isomorphic to the even subalgebra of the 3+1 Clifford algebra, $C\ell^0_{3,1}$.

While in STA points of spacetime are represented simply by vectors, in APS, points of (3+1)-dimensional spacetime are instead represented by paravectors: a 3-dimensional vector (space) plus a 1-dimensional scalar (time).

In spacetime algebra the electromagnetic field tensor has a bivector representation $F = (E + icB)\gamma_0$.[15] Here, the imaginary unit $i = \gamma_0\gamma_1\gamma_2\gamma_3$ is the (four-dimensional) volume element, and $\gamma_0$ is the unit vector in the time direction. Using the four-current $J$, Maxwell's equations then become

| Formulation | Homogeneous equations | Non-homogeneous equations |
|---|---|---|
| Fields | | |
| Potentials (any gauge) | | |
| Potentials (Lorenz gauge) | | |

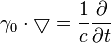

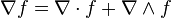

In geometric calculus, juxtaposition of vectors such as in $DF$ indicates the geometric product and can be decomposed into parts as $DF = D \rfloor F + D \wedge F$. Here $D$ is the covector derivative in any spacetime and reduces to $\bigtriangledown$ in flat spacetime, where $\bigtriangledown$ plays a role in Minkowski 4-spacetime analogous to the role of $\nabla$ in Euclidean 3-space and is related to the d'Alembertian by $\Box = \bigtriangledown^2$. Indeed, given an observer represented by a future-pointing timelike vector $\gamma_0$, we have

$$\gamma_0\cdot\bigtriangledown = \frac{1}{c}\frac{\partial}{\partial t}, \qquad \gamma_0\wedge\bigtriangledown = \nabla.$$

Boosts in this Lorentzian metric space have the same expression $e^{\beta}$ as rotation in Euclidean space, where $\beta$ is the bivector generated by the time and the space directions involved, whereas in the Euclidean case it is the bivector generated by the two space directions, strengthening the "analogy" to almost identity.
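The shared exponential form of boosts and rotations can be illustrated with a small sketch. It assumes the signature $(+,-,-,-)$ for $\gamma_0,\dots,\gamma_3$, represents basis blades as bitmasks (bit $k$ set means $\gamma_k$ is a factor), and sums a truncated power series. The helper names `blade_product`, `gp` and `exp_series` are hypothetical, not taken from any particular library.

```python
import math

def blade_product(a, b, signature):
    """Geometric product of two basis blades (bitmasks): returns (sign, blade)."""
    swaps, t = 0, a >> 1
    while t:                      # count transpositions needed for canonical order
        swaps += bin(t & b).count("1")
        t >>= 1
    sign = -1 if swaps % 2 else 1
    common, k = a & b, 0
    while common:                 # repeated basis vectors contract to their squares
        if common & 1:
            sign *= signature[k]
        common >>= 1
        k += 1
    return sign, a ^ b

def gp(x, y, signature):
    """Geometric product of multivectors stored as {blade_bitmask: coefficient}."""
    out = {}
    for a, ca in x.items():
        for b, cb in y.items():
            s, blade = blade_product(a, b, signature)
            out[blade] = out.get(blade, 0.0) + s * ca * cb
    return {k: v for k, v in out.items() if v != 0}

def exp_series(bivector, signature, terms=40):
    """Truncated power series for exp(bivector)."""
    result, term = {0: 1.0}, {0: 1.0}
    for n in range(1, terms):
        term = {k: v / n for k, v in gp(term, bivector, signature).items()}
        for k, v in term.items():
            result[k] = result.get(k, 0.0) + v
    return result

STA = (1, -1, -1, -1)                         # assumed signature of spacetime algebra
alpha = 0.5
boost = exp_series({0b0011: alpha}, STA)      # time-space bivector gamma0 gamma1
rotation = exp_series({0b0110: alpha}, STA)   # space-space bivector gamma1 gamma2

print(boost[0], math.cosh(alpha), boost[0b0011], math.sinh(alpha))
print(rotation[0], math.cos(alpha), rotation[0b0110], math.sin(alpha))
```

The only difference between the two cases is the sign of the bivector's square: a time-space bivector squares to $+1$ and yields hyperbolic functions, while a space-space bivector squares to $-1$ and yields circular ones.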

Relationship with other formalisms

$\mathcal{G}_3$ may be directly compared to vector algebra.

The even subalgebra of $\mathcal{G}_2$ is isomorphic to the complex numbers, as may be seen by writing a vector $P$ in terms of its components in an orthonormal basis and left multiplying by the basis vector $e_1$, yielding

$$Z = e_1 P = e_1(x e_1 + y e_2) = x + y\,e_1 e_2,$$

where we identify $i \mapsto e_1e_2$ since

$$(e_1e_2)^2 = e_1e_2e_1e_2 = -e_1e_1e_2e_2 = -1.$$

Similarly, the even subalgebra of $\mathcal{G}_3$ with basis $\{1, e_2e_3, e_3e_1, e_1e_2\}$ is isomorphic to the quaternions, as may be seen by identifying $i \mapsto -e_2e_3$, $j \mapsto -e_3e_1$ and $k \mapsto -e_1e_2$.
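These identifications can be checked mechanically. The sketch below assumes an orthonormal Euclidean basis and stores a multivector as a dictionary from basis-blade bitmasks (bit 0 for e1, bit 1 for e2, bit 2 for e3) to coefficients. The helper names `blade_product`, `gp` and `scale` are illustrative only.

```python
def blade_product(a, b):
    """Product of two orthonormal Euclidean basis blades given as bitmasks."""
    swaps, t = 0, a >> 1
    while t:                      # count transpositions needed for canonical order
        swaps += bin(t & b).count("1")
        t >>= 1
    return (-1 if swaps % 2 else 1), a ^ b

def gp(x, y):
    """Geometric product of multivectors stored as {blade_bitmask: coefficient}."""
    out = {}
    for a, ca in x.items():
        for b, cb in y.items():
            s, blade = blade_product(a, b)
            out[blade] = out.get(blade, 0) + s * ca * cb
    return {k: v for k, v in out.items() if v != 0}

def scale(x, s):
    return {k: s * v for k, v in x.items()}

e1, e2, e3 = {0b001: 1}, {0b010: 1}, {0b100: 1}

# Complex numbers: e1 * (x e1 + y e2) = x + y e1e2, and (e1e2)^2 = -1.
P = {0b001: 3, 0b010: 4}
Z = gp(e1, P)
e12 = gp(e1, e2)

# Quaternions: i = -e2e3, j = -e3e1, k = -e1e2.
i = scale(gp(e2, e3), -1)
j = scale(gp(e3, e1), -1)
k = scale(gp(e1, e2), -1)

print(Z, gp(e12, e12))
print(gp(i, i), gp(j, j), gp(k, k), gp(gp(i, j), k))
```

With these sign choices the products reproduce Hamilton's relations $i^2 = j^2 = k^2 = ijk = -1$, including $ij = k$.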

Every associative algebra has a matrix representation; the Pauli matrices are a representation of $\mathcal{G}_3$ and the Dirac matrices are a representation of $\mathcal{G}_{1,3}$, showing the equivalence with matrix representations used by physicists.
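The defining property of such a representation is that the matrices anticommute pairwise and square to plus or minus the identity according to the metric. A minimal dependency-free sketch of that check follows; it assumes the Dirac (standard) representation of the gamma matrices and the signature $(+,-,-,-)$, and the helper names are illustrative only.

```python
def matmul(a, b):
    """Product of two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def kron(a, b):
    """Kronecker product, used to assemble 4x4 matrices from 2x2 blocks."""
    return [[a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]

def anticommutator(a, b):
    ab, ba = matmul(a, b), matmul(b, a)
    return [[x + y for x, y in zip(row_ab, row_ba)] for row_ab, row_ba in zip(ab, ba)]

def represents(matrices, squares):
    """True if the matrices anticommute pairwise and square to the given signs."""
    n = len(matrices[0])
    for p, a in enumerate(matrices):
        for q, b in enumerate(matrices):
            expected = 2 * squares[p] if p == q else 0
            target = [[expected if r == c else 0 for c in range(n)] for r in range(n)]
            if anticommutator(a, b) != target:
                return False
    return True

I2 = [[1, 0], [0, 1]]
SX = [[0, 1], [1, 0]]
SY = [[0, -1j], [1j, 0]]
SZ = [[1, 0], [0, -1]]
EPS = [[0, 1], [-1, 0]]          # block pattern [[0, s], [-s, 0]] for the spatial gammas

PAULI = [SX, SY, SZ]
DIRAC = [kron(SZ, I2)] + [kron(EPS, s) for s in PAULI]

print(represents(PAULI, (1, 1, 1)))
print(represents(DIRAC, (1, -1, -1, -1)))
```

Under this reading the matrices play the role of orthonormal basis vectors, and matrix multiplication plays the role of the geometric product.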

Geometric calculus

Geometric calculus extends the formalism to include differentiation and integration, including differential geometry and differential forms.[16]

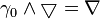

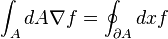

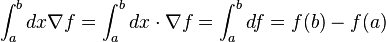

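Essentially, the vector derivative is defined so that the GA version of Green's theorem is true,

$$\int_A dA\,\nabla f = \oint_{\partial A} dx\,f,$$

and then one can write

$$\nabla f = \nabla\cdot f + \nabla\wedge f$$

as a geometric product, effectively generalizing Stokes' theorem (including the differential form version of it).

In 1D, when $A$ is a curve with endpoints $a$ and $b$, then

$$\int_A dA\,\nabla f = \oint_{\partial A} dx\,f$$

reduces to

$$\int_a^b dx\,\nabla f = \int_a^b dx\cdot\nabla f = \int_a^b df = f(b) - f(a),$$

or the fundamental theorem of integral calculus.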
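For a scalar field the one-dimensional case is easy to test numerically: the integral of $dx\cdot\nabla f$ along a curve should depend only on the endpoints. The sketch below uses a midpoint rule; the field `f`, its gradient `grad_f` and the curve `curve` are arbitrary choices made for illustration.

```python
import math

def f(x, y):
    """An arbitrary smooth scalar field on the plane."""
    return x * x * y + math.sin(y)

def grad_f(x, y):
    """Gradient of f, computed by hand."""
    return (2 * x * y, x * x + math.cos(y))

def curve(t):
    """An arbitrary curve from a = curve(0) to b = curve(1)."""
    return (math.cos(2 * t) + t, t * t)

def line_integral(steps=20000):
    """Midpoint-rule approximation of the integral of dx . grad f along the curve."""
    total = 0.0
    for n in range(steps):
        t0, t1 = n / steps, (n + 1) / steps
        (x0, y0), (x1, y1) = curve(t0), curve(t1)
        gx, gy = grad_f(*curve(0.5 * (t0 + t1)))
        total += gx * (x1 - x0) + gy * (y1 - y0)
    return total

endpoint_difference = f(*curve(1.0)) - f(*curve(0.0))
print(line_integral(), endpoint_difference)
```

Changing `curve` while keeping its endpoints fixed should leave both numbers unchanged, up to discretization error.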

Also developed are the concept of vector manifold and geometric integration theory (which generalizes Cartan's differential forms).

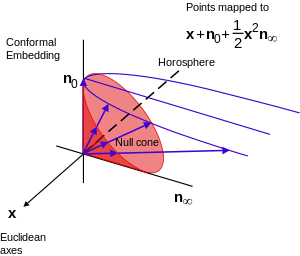

Conformal geometric algebra (CGA)

A compact description of the current state of the art is provided by Bayro-Corrochano and Scheuermann (2010),[17] which also includes further references, in particular to Dorst et al. (2007).[18] Other useful references are Li (2008)[19] and Bayro (2010).[20]

Working within GA, Euclidean space $\mathcal{E}^3$ is embedded projectively in the CGA $\mathcal{G}_{4,1}$ via the identification of Euclidean points with 1D subspaces in the 4D null cone of the 5D CGA vector subspace, and adding a point at infinity. This allows all conformal transformations to be done as rotations and reflections and is covariant, extending incidence relations of projective geometry to circles and spheres.

Specifically, we add orthogonal basis vectors $e_+$ and $e_-$ such that $e_+^2 = +1$ and $e_-^2 = -1$ to the basis of $\mathcal{G}_3$ and identify null vectors

$$n_\infty = e_- + e_+$$

as an ideal point (point at infinity) (see Compactification) and

$$n_o = \tfrac{1}{2}(e_- - e_+)$$

as the point at the origin, giving $n_\infty\cdot n_o = -1$.
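The null-vector relations can be verified with ordinary arithmetic on 5-component vectors. The sketch below orders the components as $(e_1, e_2, e_3, e_+, e_-)$ and additionally assumes the commonly used point embedding $x \mapsto n_o + x + \tfrac{1}{2}x^2 n_\infty$, which is not stated in the text above; the names `inner` and `embed` are illustrative only.

```python
METRIC = (1.0, 1.0, 1.0, 1.0, -1.0)   # squares of e1, e2, e3, e_plus, e_minus

def inner(a, b):
    """Inner product of two vectors of the 5D space with signature (4, 1)."""
    return sum(m * x * y for m, x, y in zip(METRIC, a, b))

N_INF = (0.0, 0.0, 0.0, 1.0, 1.0)     # n_inf = e_minus + e_plus
N_O = (0.0, 0.0, 0.0, -0.5, 0.5)      # n_o = (e_minus - e_plus) / 2

def embed(x):
    """Assumed embedding of a Euclidean point: n_o + x + (x.x / 2) n_inf."""
    half_sq = 0.5 * sum(c * c for c in x)
    return tuple(x) + (N_O[3] + half_sq * N_INF[3], N_O[4] + half_sq * N_INF[4])

p, q = (1.0, 2.0, 2.0), (1.0, 0.0, 0.0)
P, Q = embed(p), embed(q)
squared_distance = sum((a - b) ** 2 for a, b in zip(p, q))

print(inner(N_INF, N_INF), inner(N_O, N_O), inner(N_INF, N_O))
print(inner(P, P), inner(P, Q), -0.5 * squared_distance)
```

Under this embedding every Euclidean point maps to a null vector, and the inner product of two embedded points encodes their squared Euclidean distance, which is what lets Euclidean transformations act as orthogonal transformations of the 5D space.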

This procedure has some similarities to the procedure for working with homogeneous coordinates in projective geometry and in this case allows the modeling of Euclidean transformations as orthogonal transformations.

A fast changing and fluid area of GA, CGA is also being investigated for applications to relativistic physics.

History

- Before the 20th century

Although the connection of geometry with algebra dates back at least as far as Euclid's Elements in the 3rd century B.C. (see Greek geometric algebra), GA in the sense used in this article was not developed until 1844, when it was used in a systematic way to describe the geometrical properties and transformations of a space. In that year, Hermann Grassmann introduced the idea of a geometrical algebra in full generality as a certain calculus (analogous to the propositional calculus) that encoded all of the geometrical information of a space.[21] Grassmann's algebraic system could be applied to a number of different kinds of spaces, chief among them Euclidean space, affine space, and projective space. Following Grassmann, in 1878 William Kingdon Clifford examined Grassmann's algebraic system alongside the quaternions of William Rowan Hamilton in (Clifford 1878). From his point of view, the quaternions described certain transformations (which he called rotors), whereas Grassmann's algebra described certain properties (or Strecken such as length, area, and volume). His contribution was to define a new product, the geometric product, on an existing Grassmann algebra, which realized the quaternions as living within that algebra. Subsequently, Rudolf Lipschitz in 1886 generalized Clifford's interpretation of the quaternions and applied them to the geometry of rotations in n dimensions. Later these developments would lead other 20th-century mathematicians to formalize and explore the properties of the Clifford algebra.

Nevertheless, another revolutionary development of the 19th century would completely overshadow the geometric algebras: that of vector analysis, developed independently by Josiah Willard Gibbs and Oliver Heaviside. Vector analysis was motivated by James Clerk Maxwell's studies of electromagnetism, and specifically the need to express and manipulate certain differential equations conveniently. Vector analysis had a certain intuitive appeal compared to the rigors of the new algebras. Physicists and mathematicians alike readily adopted it as their geometrical toolkit of choice, particularly following the influential 1901 textbook Vector Analysis by Edwin Bidwell Wilson, based on lectures of Gibbs.

In more detail, there have been three approaches to geometric algebra: quaternionic analysis, initiated by Hamilton in 1843 and geometrized as rotors by Clifford in 1878; geometric algebra, initiated by Grassmann in 1844; and vector analysis, developed out of quaternionic analysis in the late 19th century by Gibbs and Heaviside. The legacy of quaternionic analysis in vector analysis can be seen in the use of i, j, k to indicate the basis vectors of $\mathbf{R}^3$: it is being thought of as the purely imaginary quaternions. From the perspective of geometric algebra, quaternions can be identified as $C\ell^0_{3,0}(\mathbf{R})$, the even part of the Clifford algebra on Euclidean 3-space, which unifies the three approaches.

- 20th century and present

Progress on the study of Clifford algebras quietly advanced through the twentieth century, although largely due to the work of abstract algebraists such as Hermann Weyl and Claude Chevalley. The geometrical approach to geometric algebras has seen a number of 20th-century revivals. In mathematics, Emil Artin's Geometric Algebra[22] discusses the algebra associated with each of a number of geometries, including affine geometry, projective geometry, symplectic geometry, and orthogonal geometry. In physics, geometric algebras have been revived as a "new" way to do classical mechanics and electromagnetism, together with more advanced topics such as quantum mechanics and gauge theory.[23] David Hestenes reinterpreted the Pauli and Dirac matrices as vectors in ordinary space and spacetime, respectively, and has been a primary contemporary advocate for the use of geometric algebra.

In computer graphics and robotics, geometric algebras have been revived in order to efficiently represent rotations and other transformations. For applications of GA in robotics (screw theory, kinematics and dynamics using versors), computer vision, control and neural computing (geometric learning) see Bayro (2010).

Software

GA is a very application-oriented subject. There is a reasonably steep initial learning curve associated with it, but this can be eased somewhat by the use of applicable software.

The following is a list of freely available software that does not require ownership or purchase of any commercial products:

- GA Viewer Fontijne, Dorst, Bouma & Mann

The link provides a manual, introduction to GA and sample material as well as the software.

- CLUViz Perwass

Software allowing script creation and including sample visualizations, manual and GA introduction.

- Gaigen Fontijne

For programmers, this is a code generator with support for C, C++, C# and Java.

- Cinderella Visualizations Hitzer and Dorst.

- Gaalop Standalone GUI-Application that uses the Open-Source Computer Algebra Software Maxima to break down CLUViz code into C/C++ or Java code.

- Gaalop Precompiler Precompiler based on Gaalop integrated with CMake.

- Gaalet, C++ Expression Template Library Seybold.

- GALua, A Lua module adding GA data-types to the Lua programming language Parkin.

- Versor Colapinto, A lightweight templated C++ Library with an OpenGL interface for efficient geometric algebra programming in arbitrary metrics, including conformal

See also

- Comparison of vector algebra and geometric algebra

- Clifford algebra

- Spacetime algebra

- Spinor

- Quaternion

- Algebra of physical space (wikibooks:Physics in the Language of Geometric Algebra. An Approach with the Algebra of Physical Space)

- Universal geometric algebra

References

- ↑ Hestenes, David (February 2003), "Oersted Medal Lecture 2002: Reforming the Mathematical Language of Physics", Am. J. Phys. 71 (2): 104–121, Bibcode:2003AmJPh..71..104H, doi:10.1119/1.1522700

- ↑ Doran, Chris (1994). "Geometric Algebra and its Application to Mathematical Physics". PhD thesis (University of Cambridge).

- ↑ Lasenby, Lasenby & Doran 2000.

- ↑ McRobie, F. A.; Lasenby, J. (1999) Simo-Vu Quoc rods using Clifford algebra. Internat. J. Numer. Methods Engrg. Vol 45, #4, p. 377−398

- ↑ 5.0 5.1 Hestenes 1966.

- ↑ Perwass (2005), Geometry and Computing, §3.2.13 p.89

- ↑ Distinguishing notation here is from Dorst (2007) Geometric Algebra for Computer Science §B.1 p. 590; the point is also made that scalars must be handled as a special case with this product.

- ↑ This definition follows Dorst (2007) and Perwass (2009) – the left contraction used by Dorst replaces the ("fat dot") inner product that Perwass uses, consistent with Perwass's constraint that grade of A may not exceed that of B.

- ↑ Dorst appears to merely assume B+ such that B ⨼ B+ = 1, whereas Perwass (2009) defines B+ = B†/(B ⨼ B†), where B† is the conjugate of B, equivalent to the reverse of B up to a sign.

- ↑ 10.0 10.1 Dorst, §3.6 p. 85.

- ↑ Perwass (2009) §3.2.10.2 p83

- ↑ That is to say, the projection must be defined as PB(A) = (A ⨼ B+) ⨼ B and not as (A ⨼ B) ⨼ B+, though the two are equivalent for non-null blades B

- ↑ This generalization to all A is apparently not considered by Perwass or Dorst.

- ↑ Perwass (2009) §3.3.1. Perwass also claims here that David Hestenes coined the term "versor", where he is presumably referring to the GA context (the term versor appears to have been used by Hamilton to refer to an equivalent object of the quaternion algebra).

- ↑ "Electromagnetism using Geometric Algebra versus Components". Retrieved 19 March 2013.

- ↑ Hestenes, David; Sobczyk, Garret (1984). Clifford Algebra to Geometric Calculus: A Unified Language for Mathematics and Physics. Dordrecht/Boston: D. Reidel.

- ↑ Bayro-Corrochano, Eduardo; Scheuermann, Gerik, eds. (2010). Geometric Algebra Computing in Engineering and Computer Science. Springer. Extract online at http://geocalc.clas.asu.edu/html/UAFCG.html #5 New Tools for Computational Geometry and rejuvenation of Screw Theory

- ↑ Dorst, Leo; Fontijne, Daniel; Mann, Stephen (2007). Geometric algebra for computer science: an object-oriented approach to geometry. Amsterdam: Elsevier/Morgan Kaufmann. ISBN 978-0-12-369465-2. OCLC 132691969.

- ↑ Hongbo Li (2008) Invariant Algebras and Geometric Reasoning, Singapore: World Scientific. Extract online at http://www.worldscibooks.com/etextbook/6514/6514_chap01.pdf

- ↑ Bayro-Corrochano, Eduardo (2010). Geometric Computing for Wavelet Transforms, Robot Vision, Learning, Control and Action. Springer Verlag

- ↑ Grassmann, Hermann (1844). Die lineale Ausdehnungslehre ein neuer Zweig der Mathematik: dargestellt und durch Anwendungen auf die übrigen Zweige der Mathematik, wie auch auf die Statik, Mechanik, die Lehre vom Magnetismus und die Krystallonomie erläutert. Leipzig: O. Wigand. OCLC 20521674.

- ↑ Artin, Emil (1988), Geometric algebra, Wiley Classics Library, New York: John Wiley & Sons Inc., pp. x+214, ISBN 0-471-60839-4, MR 1009557 (Reprint of the 1957 original; A Wiley-Interscience Publication)

- ↑ Doran, Chris J. L. (February 1994). Geometric Algebra and its Application to Mathematical Physics (Ph.D. thesis). University of Cambridge. OCLC 53604228.

- Baylis, W. E., ed. (1996), Clifford (Geometric) Algebra with Applications to Physics, Mathematics, and Engineering, Birkhäuser

- Baylis, W. E. (2002), Electrodynamics: A Modern Geometric Approach (2 ed.), Birkhäuser, ISBN 978-0-8176-4025-5

- Bourbaki, Nicolas (1980), Eléments de Mathématique. Algèbre, Ch. 9 "Algèbres de Clifford": Hermann

- Dorst, Leo (2002), The inner products of geometric algebra, Boston, MA: Birkhäuser Boston, p. 35−46

- Hestenes, David (1999), New Foundations for Classical Mechanics (2 ed.), Springer Verlag, ISBN 978-0-7923-5302-7

- Hestenes, David (1966). Space-time Algebra. New York: Gordon and Breach. ISBN 978-0-677-01390-9. OCLC 996371.

- Lasenby, J.; Lasenby, A. N.; Doran, C. J. L. (2000), "A Unified Mathematical Language for Physics and Engineering in the 21st Century", Philosophical Transactions of the Royal Society of London (pp. 1–18) (A 358)

- Doran, Chris; Lasenby, Anthony (2003). Geometric algebra for physicists. Cambridge University Press. ISBN 978-0-521-71595-9.

- Macdonald, Alan (2011). Linear and Geometric Algebra. Charleston: CreateSpace. ISBN 9781453854938. OCLC 704377582.

- J Bain (2006). "Spacetime structuralism: §5 Manifolds vs. geometric algebra". In Dennis Dieks. The ontology of spacetime. Elsevier. p. 54 ff. ISBN 978-0-444-52768-4.

- Bayro-Corrochano, Eduardo (2010). Geometric Computing for Wavelet Transforms, Robot Vision, Learning, Control and Action. Springer Verlag.

External links

- A Survey of Geometric Algebra and Geometric Calculus Alan Macdonald, Luther College, Iowa.

- Imaginary Numbers are not Real – the Geometric Algebra of Spacetime. Introduction (Cambridge GA group).

- Physical Applications of Geometric Algebra. Final-year undergraduate course by Chris Doran and Anthony Lasenby (Cambridge GA group; see also 1999 version).

- Maths for (Games) Programmers: 5 – Multivector methods. Comprehensive introduction and reference for programmers, from Ian Bell.

- Geometric Algebra, PlanetMath.org.

- Clifford algebra, geometric algebra, and applications Douglas Lundholm, Lars Svensson Lecture notes for a course on the theory of Clifford algebras, with special emphasis on their wide range of applications in mathematics and physics.

- IMPA Summer School 2010 Fernandes Oliveira Intro and Slides.

- University of Fukui E.S.M. Hitzer and Japan GA publications.

- Google Group for GA

- Geometric Algebra Primer Introduction to GA, Jaap Suter.

English translations of early books and papers

- G. Combebiac, "calculus of tri-quaternions" (Doctoral dissertation)

- M. Markic, "Transformants: A new mathematical vehicle. A synthesis of Combebiac's tri-quaternions and Grassmann's geometric system. The calculus of quadri-quaternions"

- C. Burali-Forti, "The Grassmann method in projective geometry" A compilation of three notes on the application of exterior algebra to projective geometry

- C. Burali-Forti, "Introduction to Differential Geometry, following the method of H. Grassmann" Early book on the application of Grassmann algebra

- H. Grassmann, "Mechanics, according to the principles of the theory of extension" One of his papers on the applications of exterior algebra.

Research groups

- Geometric Calculus International. Links to Research groups, Software, and Conferences, worldwide.

- Cambridge Geometric Algebra group. Full-text online publications, and other material.

- University of Amsterdam group

- Geometric Calculus research & development (Arizona State University).

- GA-Net blog and newsletter archive. Geometric Algebra/Clifford Algebra development news.

- Geometric Algebra for Perception Action Systems. Geometric Cybernetics Group (CINVESTAV, Campus Guadalajara, Mexico).
