Fractional Brownian motion

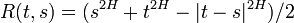

In probability theory, a normalized fractional Brownian motion (fBm), also called a fractal Brownian motion, is a generalization of Brownian motion without independent increments. It is a continuous-time Gaussian process $B_H(t)$ on [0, T] that starts at zero, has expectation zero for all t in [0, T], and has the following covariance function:

$$E[B_H(t)B_H(s)] = \tfrac{1}{2}\left(|t|^{2H} + |s|^{2H} - |t-s|^{2H}\right),$$

where H is a real number in (0, 1), called the Hurst index or Hurst parameter associated with the fractional Brownian motion. The Hurst exponent describes the raggedness of the resulting motion: a higher value gives a smoother motion. It was introduced by Mandelbrot & van Ness (1968).
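The covariance $E[B_H(t)B_H(s)] = \tfrac{1}{2}(|t|^{2H} + |s|^{2H} - |t-s|^{2H})$ is the only ingredient needed to work with fBm numerically. Below is a minimal NumPy sketch that evaluates it for a whole grid of times. The function names `fbm_covariance` and `fbm_covariance_matrix` are illustrative, not part of any library.

```python
import numpy as np


def fbm_covariance(t, s, H):
    """E[B_H(t) B_H(s)] for a normalized fBm with Hurst index H (vectorized)."""
    t = np.asarray(t, dtype=float)
    s = np.asarray(s, dtype=float)
    return 0.5 * (np.abs(t) ** (2 * H) + np.abs(s) ** (2 * H)
                  - np.abs(t - s) ** (2 * H))


def fbm_covariance_matrix(times, H):
    """Matrix with entries R(t_i, t_j) for a 1-D array of sampling times."""
    times = np.asarray(times, dtype=float)
    # A column broadcast against a row enumerates every pair (t_i, t_j).
    return fbm_covariance(times[:, None], times[None, :], H)


times = np.array([0.5, 1.0, 2.0])
C = fbm_covariance_matrix(times, 0.5)
# For H = 1/2 each entry reduces to min(t_i, t_j), the Brownian covariance.
print(C)
```

Setting H = 1/2 is a convenient sanity check, because the formula then collapses to the Brownian covariance min(t, s). The diagonal entries always equal $t^{2H}$.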

The value of H determines what kind of process the fBm is:

- if H = 1/2 then the process is in fact a Brownian motion or Wiener process;

- if H > 1/2 then the increments of the process are positively correlated;

- if H < 1/2 then the increments of the process are negatively correlated.

The increment process, $X(t) = B_H(t+1) - B_H(t)$, is known as fractional Gaussian noise.
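The sign of the correlation between increments, as listed above for H < 1/2, H = 1/2 and H > 1/2, can be read off the autocovariance of fractional Gaussian noise, $\gamma(k) = \tfrac{1}{2}(|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H})$. This follows from expanding $E[X(t)X(t+k)]$ with the fBm covariance. Below is a short sketch; the name `fgn_autocovariance` is illustrative.

```python
import numpy as np


def fgn_autocovariance(k, H):
    """gamma(k) = E[X(t) X(t+k)] for fGn X(t) = B_H(t+1) - B_H(t)."""
    k = np.abs(np.asarray(k, dtype=float))
    return 0.5 * ((k + 1) ** (2 * H) - 2 * k ** (2 * H) + np.abs(k - 1) ** (2 * H))


lags = np.arange(5)
print(fgn_autocovariance(lags, 0.5))   # -> [1. 0. 0. 0. 0.]  (independent increments)
print(fgn_autocovariance(1, 0.75))     # positive: persistent increments
print(fgn_autocovariance(1, 0.25))     # negative: anti-persistent increments
```

At lag 1 the value is $\tfrac{1}{2}(2^{2H} - 2)$. This is positive exactly when H > 1/2 and negative exactly when H < 1/2.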

Like the Brownian motion that it generalizes, fractional Brownian motion is named after 19th-century biologist Robert Brown, and fractional Gaussian noise is named after mathematician Carl Friedrich Gauss.

Background and definition

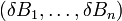

Prior to the introduction of the fractional Brownian motion, Lévy (1953) used the Riemann–Liouville fractional integral to define the process

$$\tilde{B}_H(t) = \frac{1}{\Gamma(H+1/2)}\int_0^t (t-s)^{H-1/2}\,dB(s),$$

where integration is with respect to the white noise measure dB(s). This integral turns out to be ill-suited to applications of fractional Brownian motion because of its over-emphasis of the origin (Mandelbrot & van Ness 1968, p. 424).

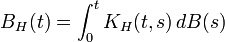

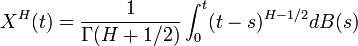

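The idea instead is to define the process with a different fractional integral of white noise, the Weyl integral

$$B_H(t) = B_H(0) + \frac{1}{\Gamma(H+1/2)}\left\{\int_{-\infty}^{0}\left[(t-s)^{H-1/2} - (-s)^{H-1/2}\right]dB(s) + \int_0^t (t-s)^{H-1/2}\,dB(s)\right\}$$

for t > 0 (and similarly for t < 0).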

The main difference between fractional Brownian motion and regular Brownian motion is that the increments of Brownian motion are independent, while the increments of fractional Brownian motion are not (unless H = 1/2). For H > 1/2, this dependence means that if the previous steps show an increasing pattern, the current step is likely to increase as well. For H < 1/2, the increments are negatively correlated, so an increase tends to be followed by a decrease.

Properties

Self-similarity

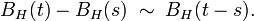

The process is self-similar, since in terms of probability distributions:

$$B_H(at) \sim |a|^H B_H(t).$$

This property follows from the fact that the covariance function is homogeneous of order 2H, and it can be considered a fractal property. Up to a multiplicative constant, fractional Brownian motion is the only self-similar centered Gaussian process with stationary increments.
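A centered Gaussian process is determined in law by its covariance. Self-similarity therefore reduces to the identity $R(at, as) = a^{2H} R(t, s)$ for a > 0, where R is the fBm covariance: the left side is the covariance of the time-rescaled process, and the right side is that of $a^H B_H$. The snippet below is a numerical spot check of that identity, not a proof. The values of H, a and the (t, s) pairs are arbitrary.

```python
import math


def fbm_covariance(t, s, H):
    """E[B_H(t) B_H(s)] = (|t|^{2H} + |s|^{2H} - |t - s|^{2H}) / 2."""
    return 0.5 * (abs(t) ** (2 * H) + abs(s) ** (2 * H) - abs(t - s) ** (2 * H))


H, a = 0.3, 2.5
checks = []
for t, s in [(0.4, 1.1), (2.0, 3.0), (0.25, 0.25)]:
    lhs = fbm_covariance(a * t, a * s, H)          # covariance of B_H(a t)
    rhs = a ** (2 * H) * fbm_covariance(t, s, H)   # covariance of a^H B_H(t)
    checks.append(math.isclose(lhs, rhs))
print(checks)  # [True, True, True]
```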

Stationary increments

It has stationary increments:

$$B_H(t) - B_H(s) \sim B_H(t-s).$$
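For a single increment, stationarity can be checked directly from the covariance $E[B_H(t)B_H(s)] = \tfrac{1}{2}(|t|^{2H} + |s|^{2H} - |t-s|^{2H})$. The increment is a zero-mean Gaussian variable, so its law is fixed by its variance, which depends only on t − s. The derivation below covers the one-dimensional distribution only. The joint law of several increments is handled the same way, because their covariances also depend only on time differences.

```latex
\begin{aligned}
\operatorname{Var}\big(B_H(t)-B_H(s)\big)
&= E[B_H(t)^2] + E[B_H(s)^2] - 2\,E[B_H(t)B_H(s)] \\
&= |t|^{2H} + |s|^{2H} - \left(|t|^{2H} + |s|^{2H} - |t-s|^{2H}\right) \\
&= |t-s|^{2H} = \operatorname{Var}\big(B_H(t-s)\big).
\end{aligned}
```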

Long-range dependence

For H > 1/2 the process exhibits long-range dependence:

$$\sum_{n=1}^{\infty} E\big[B_H(1)\big(B_H(n+1) - B_H(n)\big)\big] = \infty.$$
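The divergence can be seen by telescoping. Write $R(t,s) = \tfrac{1}{2}(|t|^{2H} + |s|^{2H} - |t-s|^{2H})$ for the covariance. Each term of the series is then a second difference of $x \mapsto x^{2H}$, so the partial sums collapse:

```latex
\begin{aligned}
E\big[B_H(1)\big(B_H(n+1)-B_H(n)\big)\big] &= R(1,n+1) - R(1,n) \\
&= \tfrac{1}{2}\left[(n+1)^{2H} - 2n^{2H} + (n-1)^{2H}\right], \\
\sum_{n=1}^{N} E\big[B_H(1)\big(B_H(n+1)-B_H(n)\big)\big] &= \tfrac{1}{2}\left[(N+1)^{2H} - N^{2H} - 1\right].
\end{aligned}
```

For large N, $(N+1)^{2H} - N^{2H} \approx 2H\,N^{2H-1}$. The partial sums therefore grow without bound when H > 1/2, are identically 0 when H = 1/2, and converge to −1/2 when H < 1/2.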

Regularity

Sample paths are almost surely nowhere differentiable. However, almost all trajectories are Hölder continuous of any order strictly less than H. For each such trajectory, every T > 0 and every ε > 0, there exists a constant c such that

$$|B_H(t) - B_H(s)| \le c\,|t-s|^{H-\varepsilon}$$

for all s, t in [0, T].

Dimension

With probability 1, the graph of $B_H(t)$ has both Hausdorff dimension and box dimension equal to 2 − H.[citation needed]

Integration

As for regular Brownian motion, one can define stochastic integrals with respect to fractional Brownian motion, usually called "fractional stochastic integrals". In general, though, unlike integrals with respect to regular Brownian motion, fractional stochastic integrals are not semimartingales. Indeed, for H ≠ 1/2 fractional Brownian motion itself is not a semimartingale.

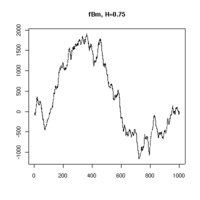

Sample paths

Practical computer realisations of an fBm can be generated, although they are only a finite approximation. The sample paths chosen can be thought of as showing discrete sampled points on an fBm process. Three realisations are shown below, each with 1000 points of an fBm with Hurst parameter 0.75.

[Figures: H = 0.75, realisations 1, 2 and 3]

Two realisations are shown below, each showing 1000 points of an fBm, the first with Hurst parameter 0.95 and the second with Hurst parameter 0.55.

[Figures: H = 0.95; H = 0.55]

Method 1 of simulation

One can simulate sample paths of an fBm using methods for generating stationary Gaussian processes with known covariance function. The simplest method relies on the Cholesky decomposition of the covariance matrix (explained below), which on a grid of size n has complexity of order $O(n^3)$. A more complex, but computationally faster, method is the circulant embedding method of Dietrich & Newsam (1997), which uses the fast Fourier transform (cost of order $O(n \log n)$) and is implemented in Matlab.
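The sketch below illustrates circulant embedding in the form usually attributed to Davies and Harte. It is applied to the stationary increments (fractional Gaussian noise, with autocovariance $\gamma(k) = \tfrac{1}{2}(|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H})$), which are then cumulated into an fBm path. It is an illustration under these assumptions, not the Dietrich & Newsam Matlab code. The method needs the eigenvalues of the 2n × 2n circulant matrix to be nonnegative, and the code checks this rather than assuming it. The names `fbm_circulant` and `fgn_autocovariance` are hypothetical helpers.

```python
import numpy as np


def fgn_autocovariance(k, H):
    """Autocovariance gamma(k) of unit-spaced fractional Gaussian noise."""
    k = np.abs(np.asarray(k, dtype=float))
    return 0.5 * ((k + 1) ** (2 * H) - 2 * k ** (2 * H) + np.abs(k - 1) ** (2 * H))


def fbm_circulant(n, H, T=1.0, rng=None):
    """Sample B_H at t_j = j*T/n, j = 0..n, by circulant embedding of fGn."""
    rng = np.random.default_rng() if rng is None else rng
    m = 2 * n
    # First row of the m x m circulant matrix: gamma(0..n), then gamma(n-1..1).
    k = np.concatenate([np.arange(n + 1), np.arange(n - 1, 0, -1)])
    c = fgn_autocovariance(k, H)
    lam = np.fft.fft(c).real  # eigenvalues of the symmetric circulant matrix
    if lam.min() < -1e-10:
        raise ValueError("circulant embedding is not nonnegative definite")
    lam = np.clip(lam, 0.0, None)
    # Complex Gaussian vector with independent N(0, 1) real and imaginary parts.
    xi = rng.standard_normal(m) + 1j * rng.standard_normal(m)
    y = np.fft.fft(np.sqrt(lam / m) * xi)
    # The real part has exactly the circulant covariance; its first n entries
    # are unit-spaced fGn with autocovariance gamma.
    fgn = y.real[:n]
    # Cumulate into fBm, then use self-similarity to rescale spacing 1 to T/n.
    return np.concatenate([[0.0], np.cumsum(fgn)]) * (T / n) ** H


path = fbm_circulant(1000, 0.75, rng=np.random.default_rng(0))
```

The example call produces 1001 points on [0, 1] with H = 0.75, comparable to the realisations shown under "Sample paths". Both FFTs have length 2n, which is where the speed advantage over Cholesky comes from.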

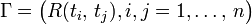

Suppose we want to simulate the values of the fBm at times $t_1, \ldots, t_n$ using the Cholesky decomposition method.

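- Form the matrix $\Gamma = \big(R(t_i, t_j),\ i, j = 1, \ldots, n\big)$, where $R(t, s) = \big(s^{2H} + t^{2H} - |t-s|^{2H}\big)/2$.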

- Compute $\Sigma$, the square root matrix of $\Gamma$, i.e. $\Sigma^2 = \Gamma$. Loosely speaking, $\Sigma$ is the "standard deviation" matrix associated with the variance-covariance matrix $\Gamma$.

- Construct a vector $v = (v_1, \ldots, v_n)$ of n numbers drawn independently according to a standard Gaussian distribution.

- If we define $u = \Sigma v$, then $u$ yields a sample path of an fBm, because $\operatorname{Cov}(u) = \Sigma\Sigma^{\mathsf{T}} = \Sigma^2 = \Gamma$.
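The steps above can be sketched in NumPy as follows. One assumption differs from the text: `np.linalg.cholesky` returns a lower-triangular $\Sigma$ with $\Sigma\Sigma^{\mathsf{T}} = \Gamma$ rather than the symmetric square root with $\Sigma^2 = \Gamma$. This is sufficient, since $\operatorname{Cov}(\Sigma v) = \Sigma\Sigma^{\mathsf{T}}$ either way. The times must be distinct and strictly positive, because $R(0, \cdot) = 0$ would make $\Gamma$ singular. The name `fbm_cholesky` is a hypothetical helper.

```python
import numpy as np


def fbm_cholesky(times, H, rng=None):
    """Sample (B_H(t_1), ..., B_H(t_n)) at distinct, strictly positive times."""
    rng = np.random.default_rng() if rng is None else rng
    t = np.asarray(times, dtype=float)
    # Step 1: Gamma_ij = R(t_i, t_j) = (t_i^{2H} + t_j^{2H} - |t_i - t_j|^{2H}) / 2.
    Gamma = 0.5 * (t[:, None] ** (2 * H) + t[None, :] ** (2 * H)
                   - np.abs(t[:, None] - t[None, :]) ** (2 * H))
    # Step 2: lower-triangular Sigma with Sigma @ Sigma.T == Gamma (O(n^3) work).
    Sigma = np.linalg.cholesky(Gamma)
    # Step 3: n independent standard Gaussian numbers.
    v = rng.standard_normal(len(t))
    # Step 4: u = Sigma v has covariance Sigma Sigma^T = Gamma.
    return Sigma @ v


times = np.arange(1, 101) / 100          # t_i = i/100, i = 1..100
u = fbm_cholesky(times, 0.75, rng=np.random.default_rng(0))
```

The cubic cost of the factorization is acceptable for a few thousand points. For longer paths, the circulant embedding approach mentioned above scales better.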

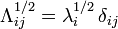

To compute $\Sigma$, we can use, for instance, the Cholesky decomposition method, which yields a lower-triangular $\Sigma$ with $\Sigma\Sigma^{\mathsf{T}} = \Gamma$; this is enough for the construction above. An alternative method uses the eigenvalues of $\Gamma$:

- Since $\Gamma$ is a symmetric, positive-definite matrix, all eigenvalues $\lambda_i$ of $\Gamma$ satisfy $\lambda_i > 0$ $(i = 1, \ldots, n)$.

- Let $\Lambda$ be the diagonal matrix of the eigenvalues, i.e. $\Lambda_{ij} = \lambda_i\,\delta_{ij}$, where $\delta_{ij}$ is the Kronecker delta. We define $\Lambda^{1/2}$ as the diagonal matrix with entries $\lambda_i^{1/2}$, i.e. $\Lambda^{1/2}_{ij} = \lambda_i^{1/2}\,\delta_{ij}$.

Note that the result is real-valued because $\lambda_i > 0$.

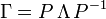

- Let $v_i$ be an eigenvector associated with the eigenvalue $\lambda_i$. Define $P$ as the matrix whose $i$-th column is the eigenvector $v_i$.

Note that since the eigenvectors are linearly independent, the matrix $P$ is invertible.

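- It follows that $\Sigma = P\,\Lambda^{1/2}P^{-1}$, because $\Gamma = P\,\Lambda\,P^{-1}$ and therefore $\Sigma^2 = P\,\Lambda^{1/2}P^{-1}P\,\Lambda^{1/2}P^{-1} = P\,\Lambda\,P^{-1} = \Gamma$.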
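The eigenvalue route can be sketched with `np.linalg.eigh`, which is designed for symmetric matrices and returns orthonormal eigenvectors. With that choice $P$ is orthogonal, so $P^{-1} = P^{\mathsf{T}}$ and no explicit inverse is needed. The grid, the value of H and the helper name `sqrt_via_eigen` are illustrative assumptions.

```python
import numpy as np


def sqrt_via_eigen(Gamma):
    """Symmetric square root Sigma = P Lambda^{1/2} P^{-1} of an SPD matrix."""
    lam, P = np.linalg.eigh(Gamma)        # columns of P: orthonormal eigenvectors
    if lam.min() <= 0:
        raise ValueError("Gamma must be positive definite")
    # P is orthogonal for symmetric Gamma, so P^{-1} = P.T.
    return P @ np.diag(np.sqrt(lam)) @ P.T


H, n = 0.75, 50
t = np.arange(1, n + 1) / n               # t_i = i/n, i = 1..n
Gamma = 0.5 * (t[:, None] ** (2 * H) + t[None, :] ** (2 * H)
               - np.abs(t[:, None] - t[None, :]) ** (2 * H))
Sigma = sqrt_via_eigen(Gamma)
rng = np.random.default_rng(1)
u = Sigma @ rng.standard_normal(n)        # one fBm sample path at t_1..t_n
```

Unlike the Cholesky factor, this $\Sigma$ is symmetric and satisfies $\Sigma^2 = \Gamma$ literally, as in the description above.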

Method 2 of simulation

It is also known that[citation needed]

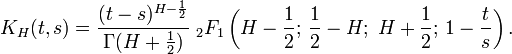

where B is a standard Brownian motion and

Where  is the Euler hypergeometric integral.

is the Euler hypergeometric integral.

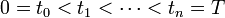

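Say we want to simulate an fBm at equally spaced points $0 = t_0 < t_1 < \cdots < t_n = T$, with $t_i = iT/n$.

- Construct a vector of n numbers drawn independently according to a standard Gaussian distribution.

- Multiply it component-wise by √(T/n) to obtain the increments of a Brownian motion on [0, T]. Denote this vector by $(\delta B_0, \ldots, \delta B_{n-1})$, where $\delta B_i$ is the increment over $[t_i, t_{i+1}]$.

- For each $j = 1, \ldots, n$, compute

$$B_H(t_j) = \frac{n}{T}\sum_{i=0}^{j-1}\left(\int_{t_i}^{t_{i+1}} K_H(t_j, s)\,ds\right)\delta B_i.$$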

The integral can be computed efficiently by Gaussian quadrature. Hypergeometric functions are part of the GNU Scientific Library.
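Below is a minimal sketch of Method 2, assuming SciPy's `scipy.special.hyp2f1` and `scipy.special.gamma` for the kernel $K_H(t,s) = \frac{(t-s)^{H-1/2}}{\Gamma(H+1/2)}\,{}_2F_1(H-\tfrac12, \tfrac12-H; H+\tfrac12; 1-\tfrac{t}{s})$ and Gauss–Legendre quadrature (`numpy.polynomial.legendre.leggauss`) for each integral over $[t_i, t_{i+1}]$. The grid is equally spaced, $t_i = iT/n$. Each integral is multiplied by n/T, i.e. the kernel is averaged over the interval, and then weighted by the Brownian increment for that interval. The function names and the 20-node rule are illustrative choices. The kernel's normalization is taken as written and has not been checked here. For H < 1/2 the integrand is singular at s = t, so a fixed Gauss rule is only approximate.

```python
import numpy as np
from scipy.special import gamma, hyp2f1


def kernel(t, s, H):
    """K_H(t, s) for 0 < s < t (s may be an array)."""
    return ((t - s) ** (H - 0.5) / gamma(H + 0.5)
            * hyp2f1(H - 0.5, 0.5 - H, H + 0.5, 1.0 - t / s))


def fbm_hypergeometric(n, H, T=1.0, nodes=20, rng=None):
    """Approximate B_H at t_j = j*T/n; returns (path, Brownian increments)."""
    rng = np.random.default_rng() if rng is None else rng
    grid = np.linspace(0.0, T, n + 1)                  # t_0 = 0 < ... < t_n = T
    dB = np.sqrt(T / n) * rng.standard_normal(n)       # dB[i]: increment on [t_i, t_{i+1}]
    x, w = np.polynomial.legendre.leggauss(nodes)      # nodes and weights on [-1, 1]
    path = np.zeros(n + 1)
    for j in range(1, n + 1):
        total = 0.0
        for i in range(j):
            a, b = grid[i], grid[i + 1]
            s = 0.5 * (b - a) * x + 0.5 * (a + b)       # map nodes into (t_i, t_{i+1})
            integral = 0.5 * (b - a) * np.sum(w * kernel(grid[j], s, H))
            total += integral * dB[i]
        path[j] = (n / T) * total
    return path, dB


path, dB = fbm_hypergeometric(8, 0.7, rng=np.random.default_rng(0))
```

For H = 1/2 the kernel is identically 1, so the recipe reduces to summing the Brownian increments. This makes a useful check of the quadrature bookkeeping.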

See also

- Brownian surface

- Autoregressive fractionally integrated moving average

- Multifractal: The generalized framework of fractional Brownian motions.

- Pink noise

- Tweedie distributions

References

- Beran, J. (1994), Statistics for Long-Memory Processes, Chapman & Hall, ISBN 0-412-04901-5.

- Dieker, T. (2004), Simulation of fractional Brownian motion (M.Sc. thesis). Retrieved 29 December 2012.

- Dietrich, C. R.; Newsam, G. N. (1997), "Fast and exact simulation of stationary Gaussian processes through circulant embedding of the covariance matrix", SIAM Journal on Scientific Computing 18 (4): 1088–1107.

- Lévy, P. (1953), Random functions: General theory with special references to Laplacian random functions, University of California Publications in Statistics 1, pp. 331–390.

- Mandelbrot, B.; van Ness, J.W. (1968), "Fractional Brownian motions, fractional noises and applications", SIAM Review 10 (4): 422–437, JSTOR 2027184.

- Samorodnitsky, G.; Taqqu, M. S. (1994), Stable Non-Gaussian Random Processes, Chapter 7: "Self-similar processes", Chapman & Hall.
