Fieller's theorem

In statistics, Fieller's theorem allows the calculation of a confidence interval for the ratio of two means.

Approximate confidence interval

The variables a and b may be measured in different units, so there is no way to combine their standard errors directly. The most complete discussion of this is given by Fieller (1954).[1]

Fieller showed that if a and b are (possibly correlated) means of two samples with expectations $\mu_a$ and $\mu_b$, variances $\nu_{11}\sigma^2$ and $\nu_{22}\sigma^2$, and covariance $\nu_{12}\sigma^2$, and if $\nu_{11}$, $\nu_{12}$ and $\nu_{22}$ are all known, then a (1 − α) confidence interval $(m_L, m_U)$ for $\mu_a/\mu_b$ is given by

$$(m_L, m_U) = \frac{1}{1-g}\left[\frac{a}{b} - \frac{g\,\nu_{12}}{\nu_{22}} \mp \frac{t_{r,\alpha}\,s}{b}\sqrt{\nu_{11} - 2\frac{a}{b}\nu_{12} + \frac{a^2}{b^2}\nu_{22} - g\left(\nu_{11} - \frac{\nu_{12}^2}{\nu_{22}}\right)}\,\right]$$

where

$$g = \frac{t_{r,\alpha}^2\,s^2\,\nu_{22}}{b^2}.$$

Here $s^2$ is an unbiased estimator of $\sigma^2$ based on r degrees of freedom, and $t_{r,\alpha}$ is the α-level deviate from the Student's t-distribution based on r degrees of freedom.
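As a sketch of how the formula is evaluated, the function below computes g and the two endpoints directly from the expression above. The name `fieller_interval` and its argument order are illustrative choices, not part of any library; a and b are the observed means, `v11`, `v12`, `v22` are the known multipliers of σ², `s` is the estimate of σ, and `t` is the deviate $t_{r,\alpha}$, which the caller is assumed to look up separately.

```python
import math


def fieller_interval(a, b, v11, v12, v22, s, t):
    """Evaluate Fieller's closed-form endpoints (m_L, m_U) for mu_a / mu_b.

    a, b          -- observed means (numerator, denominator)
    v11, v12, v22 -- known multipliers: Var(a) = v11*sigma^2, etc.
    s             -- estimate of sigma (s**2 unbiased for sigma^2 on r d.f.)
    t             -- Student-t deviate t_{r,alpha}, supplied by the caller
    Returns (g, m_L, m_U). The endpoints are None when the expression under
    the square root is negative or g == 1; when g > 1 they bound an
    exclusive set rather than an ordinary interval.
    """
    q = a / b
    g = (t * s) ** 2 * v22 / b ** 2
    # Expression under the square root in Fieller's formula.
    disc = v11 - 2 * q * v12 + q ** 2 * v22 - g * (v11 - v12 ** 2 / v22)
    if disc < 0 or g == 1:
        return g, None, None
    centre = q - g * v12 / v22
    half = (t * s / b) * math.sqrt(disc)
    # The two signs of the "∓" give the endpoints; sort so that m_L <= m_U.
    m_l, m_u = sorted(((centre - half) / (1 - g), (centre + half) / (1 - g)))
    return g, m_l, m_u
```

For example, `fieller_interval(10, 5, 1, 0, 1, 1, 2)` gives g = 0.16 and endpoints 4/3 and 24/7 (up to rounding). Taking `t` as an argument keeps the sketch dependency-free; if SciPy is available, `scipy.stats.t.ppf(1 - alpha / 2, r)` supplies the two-sided deviate.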

Three features of this formula are important in this context:

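a) The expression inside the square root has to be non-negative, or else the resulting endpoints are imaginary. This can only happen when g > 1, and the confidence set is then the whole real line.

b) When g is very close to 1, the confidence interval is extremely wide, and it becomes infinite as g approaches 1.

c) When g is greater than 1, the overall divisor outside the square brackets is negative and the confidence interval is exclusive: it consists of all values lying outside the two computed endpoints, rather than those between them.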
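The three features (a) to (c) follow from the fact that Fieller's confidence set is the solution of a quadratic inequality in the ratio ρ, namely $(a - \rho b)^2 \le t^2 s^2(\nu_{11} - 2\rho\nu_{12} + \rho^2\nu_{22})$, whose leading coefficient is $b^2(1-g)$. The sketch below (the function name `fieller_set` and its labels are hypothetical) classifies the resulting set from the sign of that coefficient and of the discriminant, using the same inputs as the formula above.

```python
import math


def fieller_set(a, b, v11, v12, v22, s, t):
    """Classify the Fieller confidence set for mu_a / mu_b.

    The set is {rho : A*rho**2 - 2*B*rho + C <= 0} with the coefficients
    below; A equals b**2 * (1 - g). Returns a (kind, endpoints) pair.
    """
    k = (t * s) ** 2
    A = b ** 2 - k * v22           # b**2 * (1 - g)
    B = a * b - k * v12
    C = a ** 2 - k * v11
    disc = B ** 2 - A * C          # k * b**2 * (square-root expression)
    if A > 0:
        # g < 1: disc is non-negative in exact arithmetic; clamp rounding.
        root = math.sqrt(max(disc, 0.0))
        return "bounded", ((B - root) / A, (B + root) / A)
    if A < 0 and disc > 0:
        # g > 1, feature (c): the parabola opens downward, so the set is
        # everything outside the two roots (an "exclusive" interval).
        root = math.sqrt(disc)
        return "exclusive", tuple(sorted(((B - root) / A, (B + root) / A)))
    # g > 1 with a non-positive discriminant (feature a) gives the whole
    # real line; g == 1 exactly (the limit in feature b) gives a half-line.
    return "unbounded", None
```

With a = 10, b = 5 and ν11 = ν22 = s = 1, ν12 = 0, t = 2 the set is bounded with the same endpoints as the closed form; shrinking b to 1 makes g = 4 and the set becomes exclusive, while a = b = 1 gives an unbounded set.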

Approximate formulae

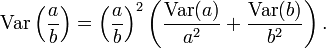

These equations approximate the full formula and are obtained via a Taylor series expansion of a function of two variables followed by taking the variance (i.e. a generalisation to two variables of the formula for the approximate standard error for a function of an estimate).

Case 1

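Assume that a and b are jointly normally distributed, and that b is not too near zero (more specifically, that the standard error of b is small compared to b). Then

$$\operatorname{Var}\left(\frac{a}{b}\right) \approx \frac{1}{b^2}\left[\operatorname{Var}(a) - 2\frac{a}{b}\operatorname{Cov}(a,b) + \frac{a^2}{b^2}\operatorname{Var}(b)\right].$$

From this a 95% confidence interval can be constructed in the usual way, as $a/b \pm t^{*}\operatorname{SE}(a/b)$ (the degrees of freedom for $t^{*}$ equal the total number of values in the numerator and denominator minus 2).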
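A minimal sketch of the Case 1 interval, assuming the sampling variances and covariance of the two means are already known. The function name `delta_ratio_ci` is hypothetical, and `t` stands for $t^{*}$, which the caller is assumed to choose with n_a + n_b − 2 degrees of freedom.

```python
import math


def delta_ratio_ci(a, b, var_a, var_b, cov_ab, t):
    """Case 1: first-order (delta-method) CI for mu_a / mu_b.

    var_a, var_b, cov_ab -- sampling variances/covariance of the means a, b
    t                    -- Student-t deviate t*, chosen by the caller
    Returns (a/b, SE(a/b), (lower, upper)).
    """
    q = a / b
    # Var(a/b) ~ [Var(a) - 2(a/b)Cov(a,b) + (a/b)^2 Var(b)] / b^2
    var_q = (var_a - 2 * q * cov_ab + q ** 2 * var_b) / b ** 2
    se_q = math.sqrt(var_q)
    return q, se_q, (q - t * se_q, q + t * se_q)
```

With a = 100, b = 50, unit variances, zero covariance and t = 2, this gives roughly (1.911, 2.089), close to the exact Fieller interval of roughly (1.914, 2.093) for the same inputs, because b is large relative to its standard error.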

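This can be expressed in a form that is more useful when (as is usually the case) logged data are used, via the following first-order relation for a function ƒ(x, y) of x and y:

$$\operatorname{SE}\big(f(x,y)\big)^2 \approx \left(\frac{\partial f}{\partial x}\right)^2 \operatorname{SE}(x)^2 + \left(\frac{\partial f}{\partial y}\right)^2 \operatorname{SE}(y)^2 + 2\,\frac{\partial f}{\partial x}\,\frac{\partial f}{\partial y}\operatorname{Cov}(x,y)$$

to obtain either

$$\operatorname{SE}\left(\frac{a}{b}\right) \approx \sqrt{\frac{\operatorname{SE}(a)^2}{b^2} + \frac{a^2\operatorname{SE}(b)^2}{b^4} - \frac{2a\operatorname{Cov}(a,b)}{b^3}}$$

or

$$\operatorname{SE}\left(\frac{a}{b}\right) \approx \left|\frac{a}{b}\right|\sqrt{\frac{\operatorname{SE}(a)^2}{a^2} + \frac{\operatorname{SE}(b)^2}{b^2} - \frac{2\operatorname{Cov}(a,b)}{ab}}.$$

The square root in the second form is the relative standard error of a/b, which is also the approximate standard error of ln(a/b).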
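The relation for ƒ(x, y) can be checked numerically. The sketch below, whose helper `propagate_se` and step size `h` are illustrative assumptions, approximates the partial derivatives by central differences and compares the result for ƒ = x/y with both closed forms, and for ƒ = ln x − ln y with the relative standard error.

```python
import math


def propagate_se(f, x, y, se_x, se_y, cov_xy=0.0, h=1e-6):
    """First-order (Taylor) SE of f(x, y) with central-difference partials."""
    dfdx = (f(x + h, y) - f(x - h, y)) / (2 * h)
    dfdy = (f(x, y + h) - f(x, y - h)) / (2 * h)
    var = dfdx ** 2 * se_x ** 2 + dfdy ** 2 * se_y ** 2 + 2 * dfdx * dfdy * cov_xy
    return math.sqrt(var)


# Illustrative values only.
a, b, se_a, se_b, cov_ab = 10.0, 5.0, 1.0, 0.5, 0.1

numeric = propagate_se(lambda x, y: x / y, a, b, se_a, se_b, cov_ab)
# First closed form: expressed through 1/b^2, a^2/b^4 and 2a/b^3.
absolute = math.sqrt(se_a ** 2 / b ** 2 + a ** 2 * se_b ** 2 / b ** 4
                     - 2 * a * cov_ab / b ** 3)
# Second closed form: |a/b| times the relative standard error.
relative = abs(a / b) * math.sqrt(se_a ** 2 / a ** 2 + se_b ** 2 / b ** 2
                                  - 2 * cov_ab / (a * b))
# On the log scale the SE of ln(a/b) equals the relative standard error.
log_se = propagate_se(lambda x, y: math.log(x) - math.log(y),
                      a, b, se_a, se_b, cov_ab)
```

All three ratio-scale values agree (about 0.253 here), and `log_se` equals `relative / (a / b)`, which is why the relative form suits logged data.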

Case 2

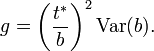

Assume that a and b are jointly normally distributed, and that b is near zero (i.e. SE(b) is not small compared to b).

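First, calculate the intermediate quantity:

$$g = \left(\frac{t\,\operatorname{SE}(b)}{b}\right)^2.$$

You cannot calculate the confidence interval of the quotient if g ≥ 1, as the CI for the denominator $\mu_b$ will then include zero.

However, if g < 1, then (taking a and b to be uncorrelated) we can obtain

$$\operatorname{SE}\left(\frac{a}{b}\right) = \frac{a/b}{1-g}\sqrt{(1-g)\frac{\operatorname{SE}(a)^2}{a^2} + \frac{\operatorname{SE}(b)^2}{b^2}}$$

and the confidence interval

$$\frac{a/b}{1-g} \pm t\,\operatorname{SE}\left(\frac{a}{b}\right).$$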
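The Case 2 steps can be sketched as follows, assuming uncorrelated a and b with known standard errors and a nonzero a. The name `case2_ci` is hypothetical, and `t` is supplied by the caller.

```python
import math


def case2_ci(a, b, se_a, se_b, t):
    """Case 2 interval for mu_a / mu_b, assuming a, b uncorrelated, a != 0.

    Returns (g, (lower, upper)), or (g, None) when g >= 1.
    """
    g = (t * se_b / b) ** 2
    if g >= 1:
        # The CI for the denominator includes zero: no bounded interval.
        return g, None
    q = a / b
    se_q = (q / (1 - g)) * math.sqrt((1 - g) * se_a ** 2 / a ** 2
                                     + se_b ** 2 / b ** 2)
    centre = q / (1 - g)
    # abs() keeps the half-width positive when a/b is negative.
    half = t * abs(se_q)
    return g, (centre - half, centre + half)
```

For a = 10, b = 5, SE(a) = SE(b) = 1 and t = 2 this returns g = 0.16 and the interval (4/3, 24/7), the same endpoints as Fieller's full formula with ν12 = 0, ν11 = ν22 = 1 and s = 1. For uncorrelated means the two calculations coincide.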

Other

One problem is that when g is not small, the confidence intervals given by Fieller's theorem can become extremely wide or unbounded. Andy Grieve has provided a Bayesian solution where the CIs are still sensible, albeit wide.[2] Bootstrapping provides another alternative that does not require the assumption of normality.[3]
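One simple form of bootstrapping for a ratio of means is the percentile bootstrap, sketched below under the assumption of two independent samples whose resampled denominator mean stays away from zero. This is a generic illustration, not necessarily the scheme used in the cited paper; the function name, the 2000 resamples and the fixed seed are illustrative choices.

```python
import random
import statistics


def bootstrap_ratio_ci(xs, ys, n_boot=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap CI for mean(xs) / mean(ys), independent samples.

    Assumes no resample of ys has a mean of zero.
    """
    rng = random.Random(seed)
    ratios = []
    for _ in range(n_boot):
        # Resample each group separately, with replacement.
        bx = rng.choices(xs, k=len(xs))
        by = rng.choices(ys, k=len(ys))
        ratios.append(statistics.fmean(bx) / statistics.fmean(by))
    ratios.sort()
    # Empirical alpha/2 and 1 - alpha/2 quantiles of the resampled ratios.
    lo = ratios[int(alpha / 2 * n_boot)]
    hi = ratios[int((1 - alpha / 2) * n_boot) - 1]
    return statistics.fmean(xs) / statistics.fmean(ys), (lo, hi)
```

No normality assumption enters: the interval comes from the empirical distribution of resampled ratios, at the cost of Monte Carlo variability (fixed here by the seed).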

History

Edgar C. Fieller (1907–1960) began working on this problem while in Karl Pearson's group at University College London, where he was employed for five years after graduating in Mathematics from King's College, Cambridge. He then worked for the Boots Pure Drug Company as a statistician and operational researcher before becoming deputy head of operational research at RAF Fighter Command during the Second World War, after which he was appointed the first head of the Statistics Section at the National Physical Laboratory.[4]

See also

- Gaussian ratio distribution

Notes

- Fieller, E. C. (1954). "Some problems in interval estimation". Journal of the Royal Statistical Society, Series B 16 (2): 175–185. JSTOR 2984043.

- O'Hagan, A.; Stevens, J. W.; Montmartin, J. (2000). "Inference for the cost-effectiveness acceptability curve and cost-effectiveness ratio". Pharmacoeconomics 17 (4): 339–349. doi:10.2165/00019053-200017040-00004. PMID 10947489.

- Campbell, M. K.; Torgerson, D. J. (1999). "Bootstrapping: estimating confidence intervals for cost-effectiveness ratios". QJM: An International Journal of Medicine 92 (3): 177–182. doi:10.1093/qjmed/92.3.177.

- Irwin, J. O.; Van Rest, E. D. (1961). "Edgar Charles Fieller, 1907–1960". Journal of the Royal Statistical Society, Series A (Blackwell Publishing) 124 (2): 275–277. JSTOR 2984155.

Further reading

- Iris Pigeot, Juliane Schäfer, Joachim Röhmel and Dieter Hauschke (2003) "Assessing non-inferiority of a new treatment in a three-arm clinical trial including a placebo". Statistics in Medicine, 22:883–899, doi: 10.1002/sim.1450

- Fieller, E. C. (1932) "The distribution of the index in a bivariate Normal distribution". Biometrika, 24(3–4):428–440. doi: 10.1093/biomet/24.3-4.428

- Fieller, E. C. (1940) "The biological standardisation of insulin". Journal of the Royal Statistical Society (Supplement). 1:1–54. JSTOR 2983630

- Fieller, E. C. (1944) "A fundamental formula in the statistics of biological assay, and some applications". Quarterly Journal of Pharmacy and Pharmacology. 17: 117–123.

- Motulsky, Harvey (1995) Intuitive Biostatistics. Oxford University Press. ISBN 0-19-508607-4

- Senn, Steven (2007) Statistical Issues in Drug Development. Second Edition. Wiley. ISBN 0-471-97488-9

- Hirschberg, J.; Lye, J. (2010). "A Geometric Comparison of the Delta and Fieller Confidence Intervals". The American Statistician 64 (3): 234–241. doi:10.1198/tast.2010.08130.
