Convex analysis

Convex analysis is the branch of mathematics devoted to the study of properties of convex functions and convex sets, often with applications in convex minimization, a subdomain of optimization theory.

Convex sets

A convex set is a set C ⊆ X, for some vector space X, such that for any x, y ∈ C and λ ∈ [0, 1],[1]

$$\lambda x + (1 - \lambda) y \in C.$$
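The definition can be probed numerically. The following is a minimal sketch, not a proof technique: it samples pairs of points from a set in R² and looks for a convex combination that leaves the set. The helper names (`in_unit_disc`, `in_annulus`, `find_convexity_violation`, `sample_square`) and the two example sets are assumptions chosen for illustration.

```python
import random

def in_unit_disc(p):
    """Membership test for the closed unit disc in R^2 (a convex set)."""
    return p[0] ** 2 + p[1] ** 2 <= 1.0

def in_annulus(p):
    """Membership test for the annulus 0.5 <= |p| <= 1 (not a convex set)."""
    return 0.25 <= p[0] ** 2 + p[1] ** 2 <= 1.0

def sample_square(rng):
    """Draw a point uniformly from the square [-1, 1] x [-1, 1]."""
    return (rng.uniform(-1, 1), rng.uniform(-1, 1))

def find_convexity_violation(member, sampler, trials=2000, seed=0):
    """Search for x, y in the set and lam in [0, 1] with lam*x + (1-lam)*y outside it.

    Returns a counterexample (x, y, lam), or None if none was found.
    None is only evidence of convexity, never a proof.
    """
    rng = random.Random(seed)
    # Keep only the sampled points that belong to the set.
    points = [p for p in (sampler(rng) for _ in range(trials)) if member(p)]
    for _ in range(trials):
        x, y = rng.choice(points), rng.choice(points)
        lam = rng.random()
        z = (lam * x[0] + (1 - lam) * y[0], lam * x[1] + (1 - lam) * y[1])
        if not member(z):
            return x, y, lam
    return None
```

Calling `find_convexity_violation(in_annulus, sample_square)` should return a pair of points whose connecting segment crosses the hole, whereas the same search on the disc is expected to find nothing.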

Convex functions

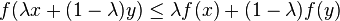

A convex function is any extended real-valued function f : X → R ∪ {±∞} which satisfies Jensen's inequality, i.e. for any x, y ∈ X and any λ ∈ [0, 1],

$$f(\lambda x + (1 - \lambda) y) \le \lambda f(x) + (1 - \lambda) f(y).$$[1]
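As a rough illustration of Jensen's inequality, the sketch below samples points on an interval and reports whether the inequality is ever violated. The function names `jensen_gap` and `violates_jensen`, the sampling interval, and the tolerance are assumptions of this example; a passing check suggests convexity on the sampled interval but does not establish it.

```python
import math
import random

def jensen_gap(f, x, y, lam):
    """Return lam*f(x) + (1-lam)*f(y) - f(lam*x + (1-lam)*y).

    The value is nonnegative whenever Jensen's inequality holds at (x, y, lam).
    """
    return lam * f(x) + (1 - lam) * f(y) - f(lam * x + (1 - lam) * y)

def violates_jensen(f, lo=-3.0, hi=3.0, trials=5000, tol=1e-9, seed=0):
    """Return True if some sampled (x, y, lam) breaks Jensen's inequality by more than tol."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y, lam = rng.uniform(lo, hi), rng.uniform(lo, hi), rng.random()
        if jensen_gap(f, x, y, lam) < -tol:
            return True
    return False
```

For instance, `violates_jensen(math.exp)` and `violates_jensen(abs)` should both be `False`, while `violates_jensen(math.sin)` should be `True`, since the sine is concave on part of the sampled interval.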

Equivalently, a convex function is any (extended) real-valued function whose epigraph

$$\operatorname{epi} f = \{ (x, r) \in X \times \mathbb{R} : f(x) \le r \}$$

is a convex set.[1]

Convex conjugate

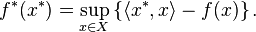

The convex conjugate of an extended real-valued (not necessarily convex) function f : X → R ∪ {±∞} is the function f* : X* → R ∪ {±∞}, where X* is the dual space of X, defined by[2]:pp.75–79

$$f^*(x^*) = \sup_{x \in X} \left\{ \langle x^*, x \rangle - f(x) \right\}.$$
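In one dimension, where the pairing ⟨x*, x⟩ is ordinary multiplication, the supremum in the definition of the conjugate can be approximated by a maximum over a finite grid. The sketch below does this for f(x) = x²/2, whose conjugate is known to be f*(y) = y²/2. The helper name `conjugate_on_grid` and the grid bounds are assumptions of this example, and the approximation is only reasonable when the maximizer lies inside the grid.

```python
def conjugate_on_grid(f, xs, y):
    """Approximate f*(y) = sup_x (y*x - f(x)) by a maximum over the sample points xs."""
    return max(y * x - f(x) for x in xs)

def half_square(x):
    """f(x) = x^2 / 2, which is its own convex conjugate."""
    return 0.5 * x * x

# Grid on [-5, 5] with spacing 0.01 (an arbitrary choice for this sketch).
xs = [k / 100 for k in range(-500, 501)]

# Compare the grid approximation with the closed form y^2 / 2 at a few slopes.
errors = [abs(conjugate_on_grid(half_square, xs, y) - 0.5 * y * y)
          for y in (-2.0, -1.0, 0.0, 0.5, 2.0)]
```

Each entry of `errors` should be small, on the order of the squared grid spacing.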

Biconjugate

The biconjugate of a function f : X → R ∪ {±∞} is the conjugate of the conjugate, typically written as f** : X → R ∪ {±∞}. The biconjugate is useful for showing when strong or weak duality holds (via the perturbation function).

For any x ∈ X the inequality f**(x) ≤ f(x) follows from the Fenchel–Young inequality. For proper functions, f = f** if and only if f is convex and lower semi-continuous, by the Fenchel–Moreau theorem.[2]:pp.75–79[3]
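The inequality f** ≤ f, and the fact that equality can fail for a nonconvex function, can be seen in a small numerical sketch. The double-well function below and the finite grids standing in for X and X* are assumptions made for illustration; on a grid the suprema become maxima, so the values are approximations.

```python
def f(x):
    """A nonconvex double-well function (illustrative choice, not from the text)."""
    return min((x - 1.0) ** 2, (x + 1.0) ** 2)

# Finite grids stand in for the space and its dual; both are assumptions of this sketch.
xs = [k / 100 for k in range(-300, 301)]   # primal grid on [-3, 3]
ys = [k / 100 for k in range(-600, 601)]   # slope grid on [-6, 6]

# f*(y) = sup_x (x*y - f(x)), approximated by a maximum over xs.
f_star = [max(x * y - f(x) for x in xs) for y in ys]

def biconjugate(x):
    """f**(x) = sup_y (x*y - f*(y)), approximated by a maximum over ys."""
    return max(x * y - fs for y, fs in zip(ys, f_star))
```

Here `biconjugate(0.0)` should be close to 0 even though `f(0.0)` equals 1: the biconjugate flattens the bump between the two wells. Away from the bump, for example at x = 2, the two functions should agree up to discretization error.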

Convex minimization

A convex minimization (primal) problem is one of the form

$$\inf_{x \in M} f(x)$$

where f : X → R ∪ {±∞} is a convex function and M ⊆ X is a convex set.

Dual problem

In optimization theory, the duality principle states that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem.

In general, consider two dual pairs of separated locally convex spaces (X, X*) and (Y, Y*). Given a function f : X → R ∪ {+∞}, the primal problem can be defined as finding x̂ ∈ X such that

$$f(\hat{x}) = \inf_{x \in X} f(x).$$

If there are constraint conditions, these can be built into the function f by replacing f with f + I_constraints, where I_constraints is the indicator function of the constraint set (zero on the set and +∞ outside it). Then let F : X × Y → R ∪ {±∞} be a perturbation function such that F(x, 0) = f(x).[4]

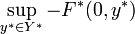

The dual problem with respect to the chosen perturbation function is given by

$$\sup_{y^* \in Y^*} -F^*(0, y^*)$$

where F* is the convex conjugate in both variables of F.

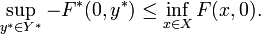

The duality gap is the difference of the right and left hand sides of the inequality[2]:pp. 106–113[4][5]

$$\sup_{y^* \in Y^*} -F^*(0, y^*) \le \inf_{x \in X} F(x, 0).$$

This inequality always holds and is known as weak duality. If the two sides are equal, the problem is said to satisfy strong duality.
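A short derivation, sketched here from the definition of the convex conjugate alone, indicates why weak duality requires no convexity assumption. Evaluating F* at the point (0, y*) and bounding the supremum from below by restricting to pairs of the form (x, 0) gives

$$
\begin{aligned}
F^*(0, y^*) &= \sup_{(x, y) \in X \times Y} \left\{ \langle 0, x \rangle + \langle y^*, y \rangle - F(x, y) \right\} \\
&\ge \langle y^*, 0 \rangle - F(x, 0) = -F(x, 0) \quad \text{for every } x \in X.
\end{aligned}
$$

Hence −F*(0, y*) ≤ F(x, 0) for every x ∈ X and y* ∈ Y*. Taking the supremum over y* on the left and the infimum over x on the right yields sup −F*(0, y*) ≤ inf F(x, 0).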

There are many conditions for strong duality to hold such as:

- F = F** where F is the perturbation function relating the primal and dual problems and F** is the biconjugate of F;[citation needed]

- the primal problem is a linear optimization problem;

- Slater's condition for a convex optimization problem.[6][7]

Lagrange duality

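For a convex minimization problem with inequality constraints,

$$\min_{x} f(x) \quad \text{subject to} \quad g_i(x) \le 0 \text{ for } i = 1, \ldots, m,$$

the Lagrangian dual problem is

$$\sup_{u} \inf_{x} L(x, u) \quad \text{subject to} \quad u_i \ge 0 \text{ for } i = 1, \ldots, m,$$

where the objective function inf_x L(x, u) is the Lagrange dual function and L is the Lagrangian, defined as follows:

$$L(x, u) = f(x) + \sum_{i=1}^{m} u_i g_i(x).$$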
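To make the Lagrangian dual concrete, the sketch below works through a one-dimensional problem chosen purely for illustration: minimize x² subject to 1 − x ≤ 0. The Lagrangian here is L(x, u) = x² + u(1 − x), and minimizing over x by hand gives the dual function u − u²/4, which is maximized at u = 2 with value 1, matching the primal optimum at x = 1. The code approximates both problems on finite grids; the grids and all names are assumptions of this example.

```python
def f(x):
    """Objective of the illustrative problem."""
    return x * x

def g(x):
    """Constraint function: g(x) <= 0 means x >= 1."""
    return 1.0 - x

def lagrangian(x, u):
    """L(x, u) = f(x) + u * g(x) for a single inequality constraint."""
    return f(x) + u * g(x)

# Finite grids replace the infimum over x and the supremum over u >= 0.
xs = [k / 100 for k in range(-500, 501)]   # x in [-5, 5]
us = [k / 100 for k in range(0, 501)]      # u in [0, 5]

def dual_function(u):
    """Lagrange dual function: the minimum of L(x, u) over the grid of x values."""
    return min(lagrangian(x, u) for x in xs)

# Primal optimum: minimize f over the feasible grid points.
primal_value = min(f(x) for x in xs if g(x) <= 0)

# Dual optimum: maximize the dual function over the nonnegative multipliers.
dual_value = max(dual_function(u) for u in us)
```

In this example `dual_value` and `primal_value` should coincide, so the duality gap is zero. That is consistent with Slater's condition, since the problem is convex and a strictly feasible point such as x = 2 exists.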

References

- [1] Rockafellar, R. Tyrrell (1997) [1970]. Convex Analysis. Princeton, NJ: Princeton University Press. ISBN 978-0-691-01586-6.

- [2] Zălinescu, Constantin (2002). Convex analysis in general vector spaces. River Edge, NJ: World Scientific Publishing Co., Inc. ISBN 981-238-067-1. MR 1921556.

- [3] Borwein, Jonathan; Lewis, Adrian (2006). Convex Analysis and Nonlinear Optimization: Theory and Examples (2 ed.). Springer. pp. 76–77. ISBN 978-0-387-29570-1.

- [4] Boţ, Radu Ioan; Wanka, Gert; Grad, Sorin-Mihai (2009). Duality in Vector Optimization. Springer. ISBN 978-3-642-02885-4.

- [5] Csetnek, Ernö Robert (2010). Overcoming the failure of the classical generalized interior-point regularity conditions in convex optimization. Applications of the duality theory to enlargements of maximal monotone operators. Logos Verlag Berlin GmbH. ISBN 978-3-8325-2503-3.

- [6] Borwein, Jonathan; Lewis, Adrian (2006). Convex Analysis and Nonlinear Optimization: Theory and Examples (2 ed.). Springer. ISBN 978-0-387-29570-1.

- [7] Boyd, Stephen; Vandenberghe, Lieven (2004). Convex Optimization. Cambridge University Press. ISBN 978-0-521-83378-3.

- J.-B. Hiriart-Urruty; C. Lemaréchal (2001). Fundamentals of convex analysis. Berlin: Springer-Verlag. ISBN 978-3-540-42205-1.

- Singer, Ivan (1997). Abstract convex analysis. Canadian Mathematical Society series of monographs and advanced texts. New York: John Wiley & Sons, Inc. pp. xxii+491. ISBN 0-471-16015-6. MR 1461544.

- Stoer, J.; Witzgall, C. (1970). Convexity and optimization in finite dimensions 1. Berlin: Springer. ISBN 978-0-387-04835-2.