Background subtraction

Background subtraction, also known as foreground detection, is a technique in image processing and computer vision in which an image's foreground is extracted for further processing (object recognition, etc.). An image's regions of interest are generally objects (humans, cars, text, etc.) in its foreground. After image preprocessing (which may include image denoising), object localisation is required, and it may make use of this technique. Background subtraction is a widely used approach for detecting moving objects in videos from static cameras. The rationale is to detect the moving objects from the difference between the current frame and a reference frame, often called the “background image” or “background model”.[1] Background subtraction is mostly applied when the image in question is part of a video stream.

Approaches

A robust background subtraction algorithm should be able to handle lighting changes, repetitive motions from clutter, and long-term scene changes.[2] The following analyses treat a video sequence as a function V(x,y,t), where t is the time dimension and x and y are the pixel location variables. For example, V(1,2,3) is the pixel intensity at pixel location (1,2) of the image at t = 3 in the video sequence.
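As a minimal sketch of this notation, the snippet below stores a grayscale video as a NumPy array. The storage order (frames, rows, columns) and the helper name `V` are assumptions made for illustration; other layouts are equally possible.

```python
import numpy as np

def V(video: np.ndarray, x: int, y: int, t: int) -> float:
    """Return the intensity at pixel (x, y) of frame t.

    Assumes `video` is stored as (frames, rows, columns), i.e. video[t, y, x].
    """
    return float(video[t, y, x])

# Toy grayscale video: 4 frames of 3 rows by 5 columns, filled with 0, 1, 2, ...
video = np.arange(4 * 3 * 5, dtype=np.float64).reshape(4, 3, 5)

# Intensity at pixel location (1, 2) of the frame at t = 3.
value = V(video, 1, 2, 3)
```

Note that under this layout the time index comes first and the row index y precedes the column index x, which is the reverse of the argument order in V(x,y,t).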

Using frame differencing

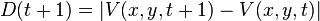

Frame difference (absolute) at time t + 1 is

$$D(t+1) = |V(x,y,t+1) - V(x,y,t)|$$

The background is assumed to be the frame at time t. This difference image shows a nonzero intensity only at pixel locations that have changed between the two frames. Although this seemingly removes the background, the approach only works when all foreground pixels are moving and all background pixels are static.[2]

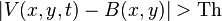

A threshold Th is applied to this difference image to improve the subtraction (see Image thresholding):

$$|V(x,y,t) - V(x,y,t+1)| > \mathrm{Th}$$

That is, the difference image's pixel intensities are thresholded, or filtered, on the basis of the value of Th.

The accuracy of this approach is dependent on speed of movement in the scene. Faster movements may require higher thresholds.
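The thresholded frame difference can be sketched in a few lines of NumPy. The function name `frame_difference_mask`, the toy frames, and the threshold value 25 are illustrative assumptions, not values taken from the sources cited here.

```python
import numpy as np

def frame_difference_mask(prev_frame, curr_frame, th):
    """Boolean foreground mask: True where |curr - prev| exceeds the threshold th."""
    # Convert to float first so that subtracting uint8 images cannot wrap around.
    prev = np.asarray(prev_frame, dtype=np.float64)
    curr = np.asarray(curr_frame, dtype=np.float64)
    return np.abs(curr - prev) > th

# Two 4x4 frames: an empty scene, then the same scene with a bright 2x2 object.
prev_frame = np.zeros((4, 4), dtype=np.uint8)
curr_frame = prev_frame.copy()
curr_frame[1:3, 1:3] = 200

mask = frame_difference_mask(prev_frame, curr_frame, th=25)
```

In this toy case only the four pixels covered by the object are marked as foreground. If the object stopped moving, the next difference image would be empty, which illustrates the limitation described above.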

Mean filter

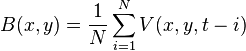

To calculate the image containing only the background, a series of preceding images is averaged. The background image at the instant t is

$$B(x,y,t) = \frac{1}{N} \sum_{i=1}^{N} V(x,y,t-i)$$

where N is the number of preceding images taken for averaging. The averaging is performed over corresponding pixels in the given images. N depends on the video speed (the number of images per second in the video) and the amount of movement in the video.[3] After calculating the background B(x,y,t), we can subtract it from the image V(x,y,t) at time t and threshold the result. Thus the foreground is

$$|V(x,y,t) - B(x,y,t)| > \mathrm{Th}$$

where Th is the threshold. Similarly, the median can be used instead of the mean in the above calculation of B(x,y,t).

Using a global, time-independent threshold (the same Th value for all pixels in the image) may limit the accuracy of the above two approaches.[2]
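The mean and median variants can be compared with a short NumPy sketch. The function names, the toy frame history, and the threshold value 30 are assumptions chosen for illustration only.

```python
import numpy as np

def estimate_background(frames, use_median=False):
    """Per-pixel mean (or median) over a stack of N preceding frames of shape (N, H, W)."""
    stack = np.asarray(frames, dtype=np.float64)
    return np.median(stack, axis=0) if use_median else stack.mean(axis=0)

def foreground_mask(frame, background, th):
    """Boolean mask: True where |frame - background| exceeds the global threshold th."""
    return np.abs(np.asarray(frame, dtype=np.float64) - background) > th

# N = 5 preceding frames of constant intensity 10.
# In one of them an object briefly covered the centre pixel.
history = np.full((5, 3, 3), 10.0)
history[2, 1, 1] = 110.0

# Current frame: an object is present at the top-left pixel.
current = np.full((3, 3), 10.0)
current[0, 0] = 90.0

mean_bg = estimate_background(history)
median_bg = estimate_background(history, use_median=True)
mask = foreground_mask(current, mean_bg, th=30)
```

Here the mean background at the centre pixel is pulled up to 30 by the single outlier frame, while the median stays at 10. This is one reason the median is sometimes preferred when objects pass through the averaging window.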

Running Gaussian average

For this method, Wren et al.[4] propose fitting a Gaussian probability density function (pdf) on the most recent $n$ frames. In order to avoid fitting the pdf from scratch at each new frame time $t$, a running (or on-line cumulative) average is computed.

The pdf of every pixel is characterized by mean $\mu_t$ and variance $\sigma_t^2$. The following is a possible initial condition (assuming that initially every pixel is background):

$$\mu_0 = I_0$$

$$\sigma_0^2 = \langle\text{some default value}\rangle$$

where $I_t$ is the value of the pixel's intensity at time $t$. In order to initialize the variance, we can, for example, use the variance in x and y from a small window around each pixel.

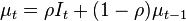

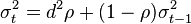

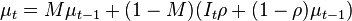

Note that background may change over time (e.g. due to illumination changes or non-static background objects). To accommodate for that change, at every frame  , every pixel's mean and variance must be updated, as follows:

, every pixel's mean and variance must be updated, as follows:

Where  determines the size of the temporal window that is used to fit the pdf (usually

determines the size of the temporal window that is used to fit the pdf (usually  ) and

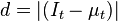

) and  is the Euclidean distance between the mean and the value of the pixel.

is the Euclidean distance between the mean and the value of the pixel.

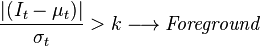

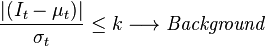

We can now classify a pixel as background if its current intensity lies within some confidence interval of its distribution's mean:

$$\frac{|I_t - \mu_t|}{\sigma_t} > k \;\longrightarrow\; \text{foreground}$$

$$\frac{|I_t - \mu_t|}{\sigma_t} \le k \;\longrightarrow\; \text{background}$$

where the parameter $k$ is a free threshold (usually $k = 2.5$). A larger value of $k$ allows for a more dynamic background, while a smaller $k$ increases the probability of a transition from background to foreground due to more subtle changes.
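One step of the running Gaussian average can be sketched as follows, assuming the update rule μ_t = ρ I_t + (1 − ρ) μ_{t−1}, d = |I_t − μ_t|, σ_t² = ρ d² + (1 − ρ) σ_{t−1}², and the test |I_t − μ_t| / σ_t > k for foreground. The function name, the default variance of 25, and the small variance floor are illustrative assumptions rather than part of the method as published.

```python
import numpy as np

def running_gaussian_step(frame, mean, var, rho=0.01, k=2.5):
    """One per-pixel update; returns (new_mean, new_var, foreground_mask)."""
    frame = np.asarray(frame, dtype=np.float64)
    new_mean = rho * frame + (1.0 - rho) * mean      # mu_t
    d = np.abs(frame - new_mean)                     # distance to the updated mean
    new_var = rho * d**2 + (1.0 - rho) * var         # sigma_t^2
    # Assumption: floor the variance to avoid dividing by zero on flat pixels.
    sigma = np.sqrt(np.maximum(new_var, 1e-12))
    foreground = d / sigma > k
    return new_mean, new_var, foreground

# Initial condition: mu_0 = I_0 and an arbitrary default variance.
mean = np.full((2, 2), 100.0)
var = np.full((2, 2), 25.0)

# Next frame: three pixels barely change, one jumps from 100 to 180.
frame = np.array([[101.0, 100.0], [100.0, 180.0]])
mean, var, fg = running_gaussian_step(frame, mean, var)
```

With these numbers only the pixel that jumped to 180 is classified as foreground, and its mean moves just slightly (from 100 to 100.8), because ρ = 0.01 makes the model adapt slowly.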

In a variant of the method, a pixel's distribution is only updated if it is classified as background. This prevents newly introduced foreground objects from fading into the background. The update formula for the mean is changed accordingly:

$$\mu_t = M \mu_{t-1} + (1-M)\left(I_t \rho + (1-\rho)\,\mu_{t-1}\right)$$

where $M = 1$ when $I_t$ is considered foreground and $M = 0$ otherwise. So when $M = 1$, that is, when the pixel is detected as foreground, the mean stays the same. As a result, a pixel, once it has become foreground, can only become background again when its intensity value gets close to what it was before turning foreground. This method, however, has several issues. It only works if all pixels are initially background pixels (or foreground pixels are annotated as such). It also cannot cope with gradual background changes: if a pixel is categorized as foreground for too long, the background intensity at that location might have changed (for example, because the illumination has changed). As a result, once the foreground object is gone, the new background intensity might no longer be recognized as such.
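The selective update can be sketched as below, assuming the rule μ_t = M μ_{t−1} + (1 − M)(ρ I_t + (1 − ρ) μ_{t−1}) with M = 1 for foreground pixels and M = 0 otherwise. The function name and the toy values are illustrative assumptions.

```python
import numpy as np

def selective_mean_update(frame, mean, foreground, rho=0.01):
    """Update the mean only where the pixel is currently classified as background."""
    frame = np.asarray(frame, dtype=np.float64)
    mean = np.asarray(mean, dtype=np.float64)
    M = np.asarray(foreground, dtype=np.float64)     # 1 = foreground, 0 = background
    blended = rho * frame + (1.0 - rho) * mean       # ordinary running-average update
    return M * mean + (1.0 - M) * blended

mean = np.array([100.0, 100.0])
frame = np.array([110.0, 200.0])
foreground = np.array([False, True])   # second pixel was detected as foreground

new_mean = selective_mean_update(frame, mean, foreground)
```

The background pixel's mean moves from 100 to 100.1, while the foreground pixel's mean is frozen at 100. The frozen mean is what keeps the object from fading into the background, and it is also why the model cannot track a background that changes while it is occluded.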

Background mixture models

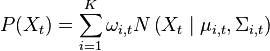

In this technique, it is assumed that every pixel's intensity values in the video can be modeled using a Gaussian mixture model.[5] A simple heuristic determines which intensities most probably belong to the background. Pixels that do not match these are called foreground pixels. Foreground pixels are grouped using 2D connected component analysis.[5]

At any time t, the history of a particular pixel $(x_0, y_0)$ is

$$X_1, \ldots, X_t = \{V(x_0, y_0, i) : 1 \le i \le t\}$$

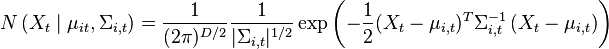

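This history is modeled by a mixture of K Gaussian distributions:

$$P(X_t) = \sum_{i=1}^{K} \omega_{i,t}\, N(X_t \mid \mu_{i,t}, \Sigma_{i,t})$$

where $\omega_{i,t}$ is the weight of the i-th Gaussian at time t, D is the dimension of the pixel value $X_t$, and

$$N(X_t \mid \mu_{i,t}, \Sigma_{i,t}) = \frac{1}{(2\pi)^{D/2}\,|\Sigma_{i,t}|^{1/2}} \exp\left(-\frac{1}{2}(X_t - \mu_{i,t})^{T}\, \Sigma_{i,t}^{-1}\, (X_t - \mu_{i,t})\right)$$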

An on-line K-means approximation is used to update the Gaussians. Numerous improvements to this original method, developed by Stauffer and Grimson,[5] have been proposed; a complete survey can be found in Bouwmans et al.[6]
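As a rough illustration, the mixture density for a single pixel can be evaluated as a weighted sum of K multivariate Gaussian densities, P(X) = Σ ω_i N(X | μ_i, Σ_i). The sketch below does only that evaluation. It does not implement the on-line K-means update or the background heuristic, and the function names, weights, means and covariances are made-up example values.

```python
import numpy as np

def gaussian_pdf(x, mean, cov):
    """Density of a D-dimensional Gaussian with the given mean and covariance at x."""
    x = np.atleast_1d(np.asarray(x, dtype=np.float64))
    mean = np.atleast_1d(np.asarray(mean, dtype=np.float64))
    cov = np.atleast_2d(np.asarray(cov, dtype=np.float64))
    D = x.size
    diff = x - mean
    norm = 1.0 / np.sqrt((2.0 * np.pi) ** D * np.linalg.det(cov))
    # solve(cov, diff) computes cov^{-1} diff without forming the inverse explicitly.
    return float(norm * np.exp(-0.5 * diff @ np.linalg.solve(cov, diff)))

def mixture_pdf(x, weights, means, covs):
    """Weighted sum of the K component densities."""
    return sum(w * gaussian_pdf(x, m, c) for w, m, c in zip(weights, means, covs))

# K = 2 components for a grayscale pixel (D = 1); the values are illustrative.
weights = [0.7, 0.3]
means = [[100.0], [180.0]]
covs = [[[25.0]], [[100.0]]]

p_near = mixture_pdf([100.0], weights, means, covs)   # at the dominant mode
p_far = mixture_pdf([140.0], weights, means, covs)    # between the two modes
```

An intensity near the dominant component receives a much higher density than one lying between the modes, which is the kind of evidence a matching heuristic can use to separate background from foreground.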

Surveys

Several surveys which concern categories or sub-categories of models can be found as follows:

- MOG Background Subtraction [6]

- Subspace Learning Background Subtraction [7]

- Fuzzy Background Subtraction [10]

Resources, Datasets and Codes

Book

T. Bouwmans, F. Porikli, B. Höferlin, A. Vacavant, Handbook on “Background Modeling and Foreground Detection for Video Surveillance: Traditional and Recent Approaches, Implementations, Benchmarking and Evaluation”, CRC Press, Taylor and Francis Group, June 2014. (For more information: http://www.crcpress.com/product/isbn/9781482205374)

Special Issue

T. Bouwmans, L. Davis, J. Gonzalez, M. Piccardi, C. Shan, Special Issue on “Background Modeling for Foreground Detection in Real-World Dynamic Scenes”, Special Issue in Machine Vision and Applications, July 2014.

BGS Web Site

The Background Subtraction Web Site (T. Bouwmans, Univ. La Rochelle, France) contains a full list of the references in the field, links to available datasets and codes. (For more information: http://sites.google.com/site/backgroundsubtraction/Home)

BGS Datasets

- ChangeDetection.net (For more information: http://www.changedetection.net/)

- Background Models Challenge (For more information: http://bmc.univ-bpclermont.fr/)

- Stuttgart Artificial Background Subtraction Dataset (For more information: http://www.vis.uni-stuttgart.de/index.php?id=sabs)

BGS Library

The BGS Library (A. Sobral) provides a C++ framework for running background subtraction algorithms. The code works on both Windows and Linux. Currently the library offers 29 BGS algorithms. (For more information: http://code.google.com/p/bgslibrary/)

Applications

- Video Surveillance

- Optical Motion Capture

- Human Computer Interaction

- Content based Video Coding

See also

- ViBe

- PBAS

- SOBS

References

- [1] M. Piccardi (October 2004). "Background subtraction techniques: a review". IEEE International Conference on Systems, Man and Cybernetics 4. pp. 3099–3104. doi:10.1109/icsmc.2004.1400815.

- [2] B. Tamersoy (September 29, 2009). "Background Subtraction – Lecture Notes". University of Texas at Austin.

- [3] Y. Benezeth; B. Emile; H. Laurent; C. Rosenberger (December 2008). "Review and evaluation of commonly-implemented background subtraction algorithms". 19th International Conference on Pattern Recognition. pp. 1–4. doi:10.1109/ICPR.2008.4760998.

- [4] C.R. Wren; A. Azarbayejani; T. Darrell; A.P. Pentland (July 1997). "Pfinder: real-time tracking of the human body". IEEE Transactions on Pattern Analysis and Machine Intelligence 19 (7): 780–785. doi:10.1109/34.598236.

- [5] C. Stauffer; W. E. L. Grimson (August 1999). "Adaptive background mixture models for real-time tracking". IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2. pp. 246–252. doi:10.1109/CVPR.1999.784637.

- [6] T. Bouwmans; F. El Baf; B. Vachon (November 2008). "Background Modeling using Mixture of Gaussians for Foreground Detection - A Survey". Recent Patents on Computer Science 1: 219–237.

- [7] T. Bouwmans (November 2009). "Subspace Learning for Background Modeling: A Survey". Recent Patents on Computer Science 2: 223–234.

- [8] T. Bouwmans (January 2010). "Statistical Background Modeling for Foreground Detection: A Survey". Chapter 3 in the Handbook of Pattern Recognition and Computer Vision, World Scientific Publishing: 181–199.

- [9] T. Bouwmans (September 2011). "Recent Advanced Statistical Background Modeling for Foreground Detection: A Systematic Survey". Recent Patents on Computer Science 4: 147–176.

- [10] T. Bouwmans (March 2012). "Background Subtraction For Visual Surveillance: A Fuzzy Approach". Chapter 5 in Handbook on Soft Computing for Video Surveillance: 103–134.

- [11] T. Bouwmans (March 2012). "Robust Principal Component Analysis for Background Subtraction: Systematic Evaluation and Comparative Analysis". Chapter 12 in Book on Principal Component Analysis, INTECH: 223–238.

- [12] T. Bouwmans; E. Zahzah (2014). "Robust PCA via Principal Component Pursuit: A Review for a Comparative Evaluation in Video Surveillance". Special Issue on Background Models Challenge, Computer Vision and Image Understanding.

- [13] T. Bouwmans. "Traditional Approaches in Background Modeling for Static Cameras". Chapter 1 in Handbook on "Background Modeling and Foreground Detection for Video Surveillance", CRC Press, Taylor and Francis Group.

- [14] T. Bouwmans. "Recent Approaches in Background Modeling for Static Cameras". Chapter 2 in Handbook on "Background Modeling and Foreground Detection for Video Surveillance", CRC Press, Taylor and Francis Group.

- Triche, T. J.; Weisenberger, D. J.; Van Den Berg, D.; Laird, P. W.; Siegmund, K. D. (2013). "Low-level processing of Illumina Infinium DNA Methylation BeadArrays". Nucleic Acids Research 41 (7): e90. doi:10.1093/nar/gkt090. PMC 3627582. PMID 23476028.