Bias of an estimator

In statistics, bias (or bias function) of an estimator is the difference between this estimator's expected value and the true value of the parameter being estimated. An estimator or decision rule with zero bias is called unbiased. Otherwise the estimator is said to be biased.

In ordinary English, the term bias is pejorative. In statistics, however, there are problems for which it may be good to use an estimator with a small, but nonzero, bias. In some cases, an estimator with a small bias may have a lower mean squared error or be median-unbiased (rather than mean-unbiased, the standard unbiasedness property). The property of median-unbiasedness is invariant under one-to-one transformations, while the property of mean-unbiasedness may be lost under nonlinear transformations.


Definition

Suppose we have a statistical model parameterized by θ giving rise to a probability distribution for observed data, $P(x \mid \theta)$, and a statistic $\hat\theta$ which serves as an estimator of θ based on any observed data $x$. That is, we assume that our data follows some unknown distribution $P(x \mid \theta)$ (where θ is a fixed constant that is part of this distribution, but is unknown), and then we construct some estimator $\hat\theta$ that maps observed data to values that we hope are close to θ. Then the bias of this estimator is defined to be

$$\operatorname{Bias}[\,\hat\theta\,] = \operatorname{E}[\,\hat\theta\,] - \theta = \operatorname{E}[\,\hat\theta - \theta\,],$$

where E[ ] denotes expected value over the distribution $P(x \mid \theta)$, i.e. averaging over all possible observations $x$.

An estimator is said to be unbiased if its bias is equal to zero for all values of parameter θ.

There are more general notions of bias and unbiasedness. What this article calls "bias" is more precisely called "mean-bias", to distinguish it from the other notions, the most notable being median-unbiasedness. The general theory of unbiased estimators is briefly discussed near the end of this article.

In a simulation experiment concerning the properties of an estimator, the bias of the estimator may be assessed using the mean signed difference.
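As a minimal sketch of such a simulation experiment, the bias can be approximated by averaging the signed difference between the estimate and the true parameter over many simulated data sets. The helper names `simulated_bias` and `draw_sample`, the normal model, the sample size of 5 and the number of replications are all assumptions made for this illustration.

```python
import random
import statistics

def simulated_bias(estimator, sampler, true_value, n_reps=20_000, seed=0):
    """Approximate E[estimate] - true_value by the mean signed difference."""
    rng = random.Random(seed)
    diffs = [estimator(sampler(rng)) - true_value for _ in range(n_reps)]
    return statistics.fmean(diffs)

# Hypothetical setup: 5 i.i.d. draws from a normal distribution
# with mean 0 and standard deviation 2 (variance 4).
def draw_sample(rng, n=5, mu=0.0, sigma=2.0):
    return [rng.gauss(mu, sigma) for _ in range(n)]

# The sample mean as an estimator of the population mean.
bias_mean = simulated_bias(statistics.fmean, draw_sample, true_value=0.0)

# The variance computed with divisor n (statistics.pvariance applied
# to the sample) as an estimator of the population variance.
bias_pvar = simulated_bias(statistics.pvariance, draw_sample, true_value=4.0)

print(f"sample mean: simulated bias = {bias_mean:.3f}")
print(f"divisor-n variance: simulated bias = {bias_pvar:.3f}")
```

Under these assumptions the first figure should be close to zero and the second clearly negative; the simulated values carry Monte Carlo error and only approximate the true bias.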

Examples

Sample variance

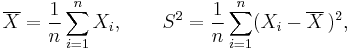

Suppose X1, ..., Xn are independent and identically distributed (i.i.d) random variables with expectation μ and variance σ2. If the sample mean and uncorrected sample variance are defined as

then S2 is a biased estimator of σ2, because

In other words, the expected value of the uncorrected sample variance does not equal the population variance σ2, unless multiplied by a normalization factor. The sample mean, on the other hand, is an unbiased estimator of the population mean μ.

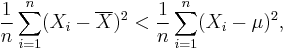

The reason that S2 is biased stems from the fact that the sample mean is an ordinary least squares (OLS) estimator for μ: It is such a number that makes the sum Σ(Xi − μ)2 as small as possible. That is, when any other number is plugged into this sum, the sum can only increase. In particular, the choice m = μ gives, first (or most outcomes)

and then

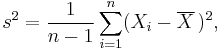

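Note that the usual definition of sample variance is

$$s^2 = \frac{1}{n-1}\sum_{i=1}^n (X_i-\overline{X})^2,$$

and this is an unbiased estimator of the population variance. This can be seen by noting the following formula for the term in the inequality for the expectation of the uncorrected sample variance above:

$$\operatorname{E}\big[ (\overline{X}-\mu)^2 \big] = \frac{1}{n}\sigma^2 .$$

Substituting this into the identity $\operatorname{E}[S^2] = \sigma^2 - \operatorname{E}[(\overline{X}-\mu)^2]$ gives $\operatorname{E}[S^2] = \frac{n-1}{n}\sigma^2$, and hence $\operatorname{E}[s^2] = \frac{n}{n-1}\operatorname{E}[S^2] = \sigma^2$.

The ratio of the unbiased estimate of the variance to the biased (uncorrected) one, n/(n − 1), is known as Bessel's correction.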
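The effect of the n/(n − 1) factor can be checked exactly on a small example. The sketch below assumes, purely for illustration, that each observation is the roll of a fair six-sided die and that n = 3; it enumerates every possible sample and averages the divisor-n variance with exact rational arithmetic. The function name `uncorrected_variance` is hypothetical.

```python
from fractions import Fraction
from itertools import product

values = [1, 2, 3, 4, 5, 6]  # assumed distribution: a fair die
n = 3                        # assumed sample size

# Population mean and variance of a single roll.
mu = Fraction(sum(values), len(values))
sigma2 = sum((Fraction(v) - mu) ** 2 for v in values) / len(values)

def uncorrected_variance(sample):
    """Variance with divisor n (the biased estimator S^2)."""
    m = Fraction(sum(sample), len(sample))
    return sum((x - m) ** 2 for x in sample) / len(sample)

# All 6**3 equally likely samples, so a plain average is the expectation.
samples = list(product(values, repeat=n))
expected_S2 = sum(uncorrected_variance(s) for s in samples) / len(samples)

# Rescaling by n / (n - 1) turns E[S^2] into the expectation of the
# divisor-(n - 1) sample variance.
expected_s2 = expected_S2 * Fraction(n, n - 1)

print(sigma2)       # 35/12
print(expected_S2)  # 35/18, i.e. (n - 1)/n times sigma2
print(expected_s2)  # 35/12
```

Because the enumeration is exhaustive, the agreement with (n − 1)σ²/n is exact here rather than approximate.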

Estimating a Poisson probability

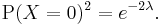

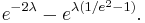

A far more extreme case of a biased estimator being better than any unbiased estimator arises from the Poisson distribution:[1][2]: Suppose X has a Poisson distribution with expectation λ. Suppose it is desired to estimate

(For example, when incoming calls at a telephone switchboard are modeled as a Poisson process, and λ is the average number of calls per minute, then e−2λ is the probability that no calls arrive in the next two minutes.)

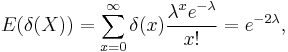

Since the expectation of an unbiased estimator δ(X) is equal to the estimand, i.e.

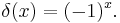

the only function of the data constituting an unbiased estimator is

To see this, note that when decomposing e−λ from the above expression for expectation, the sum that is left is a Taylor series expansion of e−λ as well, yielding e−λe−λ = e−2λ (see Characterizations of the exponential function).

If the observed value of X is 100, then the estimate is 1, although the true value of the quantity being estimated is very likely to be near 0, which is the opposite extreme. And, if X is observed to be 101, then the estimate is even more absurd: It is −1, although the quantity being estimated must be positive.

The (biased) maximum likelihood estimator

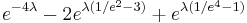

is far better than this unbiased estimator. Not only is its value always positive but it is also more accurate in the sense that its mean squared error

is smaller; compare the unbiased estimator's MSE of

The MSEs are functions of the true value λ. The bias of the maximum-likelihood estimator is:
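The comparison can be checked numerically. The sketch below computes each mean squared error in two ways: by summing over the Poisson probabilities directly (truncated at 200 terms, an arbitrary cut-off that is ample for the small λ values tried) and from the closed forms that follow from the Poisson moment generating function. All function names and the chosen values of λ are assumptions made for this illustration.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for X ~ Poisson(lam), computed on the log scale."""
    return math.exp(x * math.log(lam) - lam - math.lgamma(x + 1))

def mse_by_series(estimator, lam, terms=200):
    """E[(estimator(X) - e^{-2 lam})^2], truncated after `terms` terms."""
    target = math.exp(-2 * lam)
    return sum((estimator(x) - target) ** 2 * poisson_pmf(x, lam)
               for x in range(terms))

def unbiased(x):
    return (-1) ** x          # the unique unbiased estimator

def mle(x):
    return math.exp(-2 * x)   # the maximum likelihood estimator

def mse_unbiased_closed(lam):
    return 1 - math.exp(-4 * lam)

def mse_mle_closed(lam):
    return (math.exp(-4 * lam)
            - 2 * math.exp(lam * (math.exp(-2) - 3))
            + math.exp(lam * (math.exp(-4) - 1)))

for lam in (0.5, 1.0, 5.0):
    print(f"lambda={lam}: "
          f"MSE unbiased = {mse_by_series(unbiased, lam):.4f}, "
          f"MSE MLE = {mse_by_series(mle, lam):.4f}")
```

For each λ tried here the series and closed-form values agree, and the maximum likelihood estimator has the smaller mean squared error.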

Maximum of a discrete uniform distribution

The bias of maximum-likelihood estimators can be substantial. Consider a case where n tickets numbered from 1 through to n are placed in a box and one is selected at random, giving a value X. If n is unknown, then the maximum-likelihood estimator of n is X, even though the expectation of X is only (n + 1)/2; we can be certain only that n is at least X and is probably more. In this case, the natural unbiased estimator is 2X − 1.
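Both expectations can be verified exactly for any particular n. The sketch below assumes n = 10 tickets for illustration and uses a hypothetical helper, `expectation`, that averages an estimator over the n equally likely draws.

```python
from fractions import Fraction

def expectation(estimator, n):
    """Exact E[estimator(X)] when X is uniform on {1, ..., n}."""
    return sum(Fraction(estimator(x), n) for x in range(1, n + 1))

n = 10  # assumed true number of tickets

mle_mean = expectation(lambda x: x, n)               # E[X]
unbiased_mean = expectation(lambda x: 2 * x - 1, n)  # E[2X - 1]

print(mle_mean)       # 11/2, i.e. (n + 1)/2
print(mle_mean - n)   # -9/2, the bias of the maximum-likelihood estimator
print(unbiased_mean)  # 10, equal to n
```

The bias of X as an estimator of n is −(n − 1)/2, so it grows in magnitude with n, while 2X − 1 has expectation exactly n.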

Median-unbiased estimators

The theory of median-unbiased estimators was revived by George W. Brown in 1947:

An estimate of a one-dimensional parameter θ will be said to be median-unbiased, if, for fixed θ, the median of the distribution of the estimate is at the value θ; i.e., the estimate underestimates just as often as it overestimates. This requirement seems for most purposes to accomplish as much as the mean-unbiased requirement and has the additional property that it is invariant under one-to-one transformation.[3]

Further properties of median-unbiased estimators have been noted by Lehmann, Birnbaum, van der Vaart and Pfanzagl. In particular, median-unbiased estimators exist in cases where mean-unbiased and maximum-likelihood estimators do not exist. Besides being invariant under one-to-one transformations, median-unbiased estimators have surprising robustness.
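The invariance property can be illustrated by simulation. In the sketch below, which is an assumed setup rather than an example from the cited authors, a single observation X from a normal distribution with mean θ and unit variance estimates θ; it is both mean-unbiased and median-unbiased. Applying the one-to-one map exp gives an estimator of exp(θ) that still falls below its target about half the time, but whose mean is exp(θ + 1/2).

```python
import math
import random
import statistics

theta = 1.0       # assumed true parameter
n_reps = 100_000  # assumed number of simulated estimates
rng = random.Random(0)

# X ~ Normal(theta, 1): mean-unbiased and median-unbiased for theta.
estimates = [rng.gauss(theta, 1.0) for _ in range(n_reps)]

# exp(X) as an estimator of exp(theta).
transformed = [math.exp(x) for x in estimates]

# Median-unbiasedness: the estimate underestimates about half the time.
frac_below = sum(t < math.exp(theta) for t in transformed) / n_reps

# Mean-unbiasedness is lost: E[exp(X)] = exp(theta + 1/2) > exp(theta).
mean_transformed = statistics.fmean(transformed)

print(f"fraction below exp(theta): {frac_below:.3f}")
print(f"mean of exp(X): {mean_transformed:.3f}  "
      f"(target exp(theta) = {math.exp(theta):.3f})")
```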

Bias with respect to other loss functions

Any minimum-variance mean-unbiased estimator minimizes the risk (expected loss) with respect to the squared-error loss function among mean-unbiased estimators, as observed by Gauss. A minimum-average-absolute-deviation median-unbiased estimator minimizes the risk with respect to the absolute loss function among median-unbiased estimators, as observed by Laplace. Other loss functions are used in statistical theory, particularly in robust statistics.
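The pairing of the mean with squared-error loss and of the median with absolute loss rests on an elementary fact: over a set of values, the constant that minimizes the average squared deviation is the mean, and the constant that minimizes the average absolute deviation is a median. The sketch below checks this by grid search on a small made-up data set; the data values and the grid are assumptions for illustration only.

```python
import statistics

data = [1.0, 2.0, 2.5, 4.0, 10.5]  # made-up values: mean 4.0, median 2.5

def squared_risk(c):
    """Average squared deviation of the data from the constant c."""
    return statistics.fmean((x - c) ** 2 for x in data)

def absolute_risk(c):
    """Average absolute deviation of the data from the constant c."""
    return statistics.fmean(abs(x - c) for x in data)

# Candidate constants 0.00, 0.01, ..., 12.00.
grid = [i / 100 for i in range(0, 1201)]

best_sq = min(grid, key=squared_risk)
best_abs = min(grid, key=absolute_risk)

print(best_sq, statistics.fmean(data))    # 4.0 4.0
print(best_abs, statistics.median(data))  # 2.5 2.5
```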

Effect of transformations

Note that, when a transformation is applied to a mean-unbiased estimator, the result need not be a mean-unbiased estimator of its corresponding population statistic. That is, for a non-linear function f and a mean-unbiased estimator U of a parameter p, the composite estimator f(U) need not be a mean-unbiased estimator of f(p). For example, the square root of the unbiased estimator of the population variance is not a mean-unbiased estimator of the population standard deviation.
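For the standard deviation example, the direction of the bias follows from Jensen's inequality: the square root is strictly concave, so the expected square root of the unbiased variance estimate is smaller than the square root of its expectation, which is σ. The simulation below is a sketch under assumed settings (normal data, σ = 2, samples of size 5) and shows the divisor-(n − 1) variance averaging close to σ² while its square root averages below σ.

```python
import random
import statistics

rng = random.Random(0)
sigma, n, n_reps = 2.0, 5, 20_000  # assumed settings for illustration

samples = [[rng.gauss(0.0, sigma) for _ in range(n)] for _ in range(n_reps)]

# statistics.variance uses divisor n - 1 (the unbiased sample variance);
# statistics.stdev is its square root.
mean_var = statistics.fmean(statistics.variance(s) for s in samples)
mean_sd = statistics.fmean(statistics.stdev(s) for s in samples)

print(f"average sample variance: {mean_var:.3f}  (sigma^2 = {sigma**2})")
print(f"average sample std dev:  {mean_sd:.3f}  (sigma = {sigma})")
```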

See also

- Omitted-variable bias

- Consistent estimator

- Estimation theory

- Expected loss

- Expected value

- Loss function

- Median

- Statistical decision theory

Notes

- [1] J.P. Romano and A.F. Siegel, Counterexamples in Probability and Statistics, Wadsworth & Brooks/Cole, Monterey, CA, 1986

- [2] Hardy, M. (1 March 2003). "An Illuminating Counterexample". American Mathematical Monthly 110 (3): 234–238. doi:10.2307/3647938. ISSN 0002-9890. JSTOR 3647938.

- [3] Brown (1947), page 583

References

- Brown, George W. "On Small-Sample Estimation." The Annals of Mathematical Statistics, Vol. 18, No. 4 (Dec., 1947), pp. 582–585. JSTOR 2236236

- Lehmann, E.L. "A General Concept of Unbiasedness" The Annals of Mathematical Statistics, Vol. 22, No. 4 (Dec., 1951), pp. 587–592. JSTOR 2236928

- Allan Birnbaum. 1961. "A Unified Theory of Estimation, I", The Annals of Mathematical Statistics, Vol. 32, No. 1 (Mar., 1961), pp. 112–135

- van der Vaart, H.R. 1961. "Some Extensions of the Idea of Bias" The Annals of Mathematical Statistics, Vol. 32, No. 2 (Jun., 1961), pp. 436–447.

- Pfanzagl, Johann. 1994. Parametric Statistical Theory. Walter de Gruyter.

- Stuart, Alan; Ord, Keith; Arnold, Steven [F.] (1999). Classical Inference and the Linear Model. Kendall's Advanced Theory of Statistics. 2A (Sixth ed.). London: Arnold. pp. xxii+885. ISBN 0-340-66230-1. MR1687411.

- Voinov, Vassily [G.]; Nikulin, Mikhail [S.] (1993). Unbiased estimators and their applications. 1: Univariate case. Dordrecht: Kluwer Academic Publishers. ISBN 0-7923-2382-3.

- Voinov, Vassily [G.]; Nikulin, Mikhail [S.] (1996). Unbiased estimators and their applications. 2: Multivariate case. Dordrecht: Kluwer Academic Publishers. ISBN 0-7923-3939-8.

